Hugging Face FineVision Dataset with 24M Multimodal Samples Targets Next-Gen VLM Training

- Olivia Johnson

- Sep 9, 2025

- 14 min read

FineVision dataset overview and why 24 million multimodal samples matter

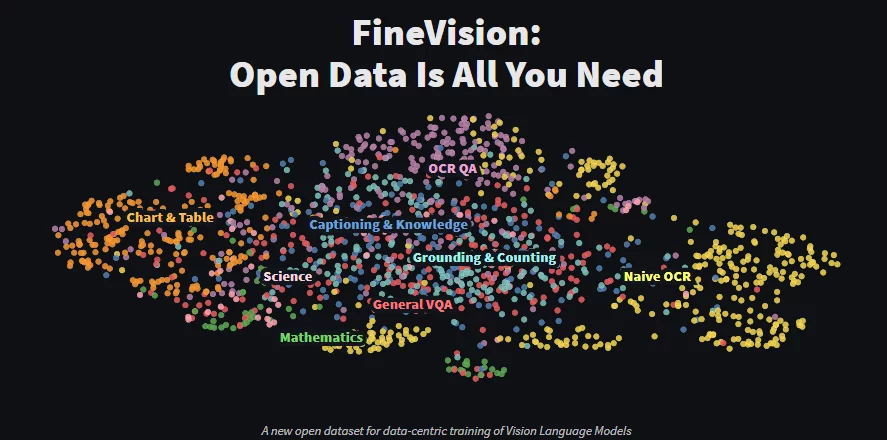

The FineVision dataset arrives as a purpose-built corpus of 24 million multimodal samples designed specifically to accelerate and improve Vision Language Model (VLM) training. At this scale and with a multimodal focus—image-text pairs, multi-image contexts, and dialogue-style visual interactions—FineVision aims to give next-generation VLMs a richer training signal than many smaller curated collections while avoiding some pitfalls of raw web-scale scraping.

Early documentation and technical design choices are described in the project’s foundational work, and an independent assessment has evaluated its effects on downstream model behavior. For a compact technical description of the dataset’s origins and objectives see the FineVision dataset original paper describing dataset creation and goals. More recent empirical analysis that evaluates how FineVision changes VLM training efficiency and benchmark outcomes is presented in the FineVision evaluation and impact study that measures training and generalization effects.

Independent evaluators report that models trained or fine-tuned with FineVision often show improved sample efficiency and stronger grounding on multimodal tasks compared to baselines trained on smaller curated datasets or generic web-scale mixes. These improvements are not uniform across all tasks—evaluation papers highlight particularly notable gains on captioning, multimodal retrieval, and certain visual question answering (VQA) benchmarks, while calling out diminishing returns in narrow, highly curated tasks where label quality trumps volume.

Who should care about the FineVision dataset? Researchers, ML engineers, and industry stakeholders looking to train or fine-tune next-generation VLMs—whether for prototype research or production systems—will find the resource relevant. The dataset’s scale and multimodal diversity make it attractive to academic labs exploring new architectures, startup ML teams building visual assistants, and enterprise AI groups aiming for domain-adapted visual search. For further context on dataset creation and composition see the detailed composition report of FineVision that lists sampling, annotation and schema decisions.

What FineVision contains, at a glance

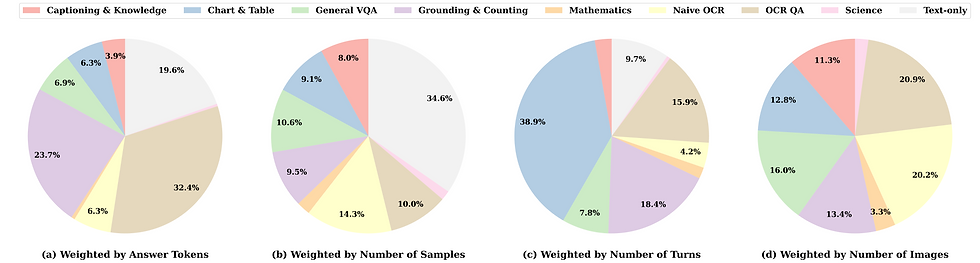

At a high level the FineVision dataset composition mixes multiple modality-enabled data types: millions of single image–caption pairs, multi-image narratives that provide temporal or multi-view context, and multi-turn visual dialogues intended to mimic conversational scenarios. Annotation types range from coarse captions to structured labels useful for VQA, grounding spans, and retrieval signal conditioning. The breadth and structure are deliberately tuned to support simultaneous pretraining across captioning, retrieval, and reasoning objectives.

Insight: large-scale multimodal diversity provides models with repeated contexts and cross-modal alignments that tend to improve generalization when training protocols exploit curriculum scheduling and multi-task objectives.

How FineVision fits into current VLM research

FineVision sits between carefully curated academic datasets and noisy web-scale collections. Compared to smaller hand-annotated corpora it trades some per-sample annotation richness for scale and breadth; compared to raw web dumps it applies cleaning, metadata tagging, and multimodal structuring investments that make samples more usable for supervised objectives. Independent evaluations report that the FineVision dataset enables faster convergence for many multimodal objectives (see the empirical evaluation of FineVision effects on VLMs), while also providing a stronger starting point for transfer learning than many bootstrapped web mixes.

Who should care about FineVision now

The primary audiences for the FineVision dataset are research labs exploring large multimodal architectures, startup ML teams building production-ready VLM capabilities with constrained labeling budgets, and enterprise AI groups seeking to accelerate domain adaptation efforts. For teams planning to adopt it, considerations include available compute budgets, desired model scale, and whether to combine FineVision with curated in-domain datasets for best downstream performance—topics covered in the dataset’s composition notes and follow-up analyses like the detailed composition report.

Key takeaway: The FineVision dataset’s large, structured multimodal corpus is designed to shift trade-offs in VLM training toward improved generalization and sample efficiency—particularly valuable for teams willing to invest in thoughtful training curricula and evaluation.

FineVision dataset structure, composition and metadata details for VLM training

Understanding how the FineVision dataset is organized is essential for integrating it into a robust VLM pipeline. The dataset’s designers provide structured sample types, explicit metadata fields, and recommended formats to help teams use the 24 million samples effectively at scale.

FineVision dataset structure and how samples are organized

The FineVision dataset structure includes multiple multimodal sample types captured in machine-friendly schemas—single image-caption records (text + image), multi-image sequences (arrays of images with shared narrative text), and multi-turn visual dialogues that pair chat-like messages with images. Each sample typically carries metadata fields such as source identifier, timestamp, content quality score, and annotation provenance. These fields are designed to support curriculum scheduling and selective sampling during large-batch or distributed training.

To support efficient training patterns, the 24M samples are sharded and indexed so pipelines can stream balanced minibatches that mix sample types and difficulty levels. The dataset authors discuss strategies for curriculum learning and efficient sampling in the FineVision dataset creation and composition report that outlines indexing and sampling strategies. Practically, this organization lets engineers prioritize high-quality captioning samples early in training and phase in more challenging dialog contexts later—an approach that many teams find reduces wasted compute.

FineVision dataset provenance, cleaning and documented limitations

The dataset paper and follow-up resources provide a provenance trail and describe cleaning steps such as deduplication, label normalization, and automated quality scoring. Those resources also explicitly document limitations—known gaps in geographic or topical coverage, residual noisy captions, and potential label biases—that researchers should consider when evaluating models trained on the corpus. For a clear statement of these design and provenance decisions consult the original FineVision dataset paper that includes provenance and limitations commentary.

Types of multimodal samples in FineVision

The sample palette is intentionally diverse. Typical types include:

Single image captions used for captioning objectives and contrastive alignment.

Multi-image narratives that support context-aware captioning and multi-view reasoning.

Multi-turn visual dialogues that map naturally to conversational VLM objectives and alignment training.

These sample types map neatly to common VLM objectives—captioning benefits from broad caption diversity, VQA benefits from grounded question–answer pairs, and grounding tasks gain from multi-image and span annotations. Labels are usually stored alongside images as JSON fields with structured keys for modality, language, and annotation confidence.

Metadata and annotation quality controls

Metadata in FineVision dataset records commonly include source domain tags, timestamp, annotator or automated scorer provenance, and a quality score that reflects heuristic or model-based assessments. The creators document cleaning steps—language filtering, duplicate removal, and automated toxicity screening—while acknowledging that scale introduces trade-offs between per-sample richness and overall coverage. For teams requiring high-precision labels, the recommended pattern is to combine FineVision’s scale with a smaller curated overlay for the target domain.

Storage, access formats and API integration

From an engineering perspective, FineVision dataset access is most practical using sharded binary formats or streaming APIs that avoid local full-dataset downloads. Common patterns include:

Sharded TFRecord or WebDataset-style tar shards for fast sequential reads.

Streaming integration via the Hugging Face Datasets ecosystem for lazy loading and transformation.

Torch Dataset wrappers that present multimodal samples in ready-to-batch structures.

For hands-on integration, the dataset documentation and community notebooks discuss connectors to the Hugging Face stack—useful starting points for teams adopting FineVision dataset access and loaders.

Key takeaway: The FineVision dataset structure balances scale with pragmatic engineering: metadata and sharding make large-scale training tractable while documented provenance helps teams reason about limitations.

Performance impact of the FineVision dataset on Vision Language Model training

Evaluators have focused on whether and how a large, structured multimodal corpus like FineVision concretely improves VLM outcomes. Independent studies and benchmark comparisons provide an evidence base that illustrates where the dataset delivers the most value and where careful engineering is still required.

FineVision dataset performance: empirical findings

Independent assessments show that models trained on or fine-tuned with FineVision often achieve faster convergence on multimodal objectives and superior transfer to downstream tasks relative to many smaller curated datasets. For a systematic measurement of these effects see the empirical evaluation of FineVision effects on VLMs that compares metrics across tasks and data mixes. Evaluators highlight consistent improvements in retrieval mAP, captioning fluency and grounding accuracy when models see the dataset’s multi-image and dialogue samples during training.

However, the evaluation also emphasizes caveats: the largest gains tend to appear when training protocols exploit multimodal context (multi-image and dialogue examples) and when curriculum and learning-rate strategies are tuned for the dataset’s diversity. Where teams simply replace a smaller dataset with FineVision without adjusting schedules, gains can be muted.

Measured gains in downstream tasks

Quantitatively, improvements are most pronounced in tasks relying on multimodal alignment and contextual reasoning—captioning, cross-modal retrieval, and certain VQA flavors. Multi-image context helps grounding and object disambiguation; dialogue-style samples improve turn-taking and short-term context retention in conversational use cases. The evaluation study documents these downstream gains and contrasts them with baselines trained on classic datasets, providing practical evidence for the dataset’s utility.

Training efficiency and compute considerations

Large datasets often increase training cost, but FineVision enables strategies that improve sample efficiency:

Sample reuse and selective replay of high-quality samples can lower needed epochs.

Curriculum scheduling—starting with simpler caption pairs and phasing in dialogues—reduces early noise.

Mixing FineVision with smaller, well-annotated domain datasets can allow shorter fine-tuning cycles.

Teams should remember that full pretraining on 24M samples will typically require multi-node clusters, while fine-tuning with adapter or LoRA-based PEFT approaches can be affordable on modest hardware. For empirical comparisons and best-practice training recipes consult the independent analysis in the FineVision evaluation and impact study.

When scale does not guarantee better results

Scale is not a universal solution. In narrow tasks that require high annotation fidelity—medical imaging diagnosis or fine-grained legal visual evidence—carefully curated, expert-labeled datasets still outperform generic scale. The right pattern for many applications is to pretrain or warm-start on FineVision and then fine-tune on domain-specific, high-quality labels.

Key takeaway: FineVision dataset downstream gains are real and practically useful for many multimodal objectives, but engineers must pair the dataset with tuned training schedules and, when necessary, curated overlays to achieve the best results.

Industry applications, scalability and commercial implications of FineVision dataset

FineVision’s combination of scale and multimodal structure opens pragmatic pathways for commercial VLM deployments across industries. Enterprises can use it as the backbone for vision-enabled products, combining pretraining scale with task-specific fine-tuning to reach production-readiness more quickly.

FineVision dataset industry applications

The dataset is a natural fit for product categories such as:

Visual search and recommendation systems that need robust cross-modal embeddings.

Multimodal customer support assistants able to reason over images in conversational contexts.

Automated visual inspection and quality control in manufacturing or logistics.

Content moderation and policy enforcement where broad prior data helps statistical coverage.

Consulting and use-case analyses that explore cross-industry adoption patterns for large VLM datasets give helpful context on how FineVision-like resources accelerate time-to-value for these scenarios; see analyses on large VLM dataset applications that discuss sector-specific uptake and innovation dynamics.

Enterprise productization scenarios

In production, FineVision can shorten prototyping cycles: teams can pretrain a backbone on the dataset, then iterate with small, labeled overlays for domain-specific constraints, reducing the need for extensive in-domain annotation early in the lifecycle. For example, a retail team might pretrain a visual embedding on FineVision to capture general object-context relationships and then fine-tune on a few thousand annotated catalog images to achieve high-precision product matching.

Domain adaptation and fine-tuning strategies

Effective domain adaptation recipes include:

Warm-starting backbones with FineVision-pretrained weights, then applying supervised fine-tuning with a small in-domain dataset.

Data augmentation and synthetic view generation to cover edge-case product photography or unusual medical imaging modalities.

Few-shot tuning and adapter-based PEFT approaches to limit compute costs while gaining domain specificity.

These strategies mirror recommendations found in practical tuning guides and model-specific examples that show how large multimodal corpora serve as a backbone for targeted fine-tuning.

Commercial and governance considerations

Commercial integration raises licensing, compliance, and cost-benefit tradeoffs—enterprises must verify dataset licensing terms, assess geographic and demographic coverage gaps, and measure expected infrastructure costs. Governance frameworks should include data cards, documented provenance checks, and third-party audits where required for regulated sectors.

Key takeaway: The FineVision dataset is a pragmatic enabler for many industry use cases, but commercial adoption benefits from careful adaptation strategies and governance planning to manage legal and operational risk.

Practical fine-tuning workflows for VLMs using the FineVision dataset and Hugging Face tools

Turning FineVision into working models requires practical workflow patterns that balance compute cost, performance goals, and safety. Hugging Face tooling and community notebooks provide common paths for both low-cost PEFT-style fine-tuning and full supervised fine-tuning (SFT) pipelines.

FineVision dataset fine-tuning workflow and low-cost PEFT recipe

A common minimal-cost PEFT workflow is: 1. Stream a curated FineVision subset using sharded streaming APIs to avoid large local storage. 2. Tokenize text fields and pre-process image inputs with the backbone vision transform pipeline. 3. Attach adapters or LoRA layers to a frozen VLM backbone, then train only those low-rank updates. 4. Use gradient checkpointing, mixed precision (AMP), and sharded optimizers to fit larger batches on constrained hardware.

For hands-on guidance, community notebooks and walkthroughs show concrete code patterns and memory-saving tips—useful pointers include practical PEFT fine-tuning notebooks and the broader Hugging Face TRL supervised fine-tuning documentation that discuss multimodal SFT patterns and parameter-efficient methods. See the Practical PEFT fine-tuning notebook example that demonstrates low-cost adapter training patterns and the Hugging Face TRL supervised fine-tuning docs for multimodal SFT guidance.

Insight: For many teams, PEFT yields 80–90% of the gains of full fine-tuning at a fraction of the cost—making it the pragmatic first step when adopting large datasets like FineVision.

Full SFT pipeline with evaluation hooks

When higher performance or full-model adaptation is required, a supervised fine-tuning pipeline typically includes:

Controlled validation splits stratified by sample type (caption, retrieval, dialogue).

Human preference evaluation cycles, especially for conversational tasks, to detect regressions.

Early stopping, checkpointing and robust logging for reproducibility.

Hugging Face TRL docs provide operational advice for supervised multimodal fine-tuning. Teams should also bake in offline and online evaluation hooks—periodic evaluation against validation benchmarks and small-scale A/B experiments—to ensure practical deployment readiness.

Example model-specific notes and resources

Choosing the right backbone depends on goals:

Small, efficient backbones (SmolVLM2-style) are ideal for latency-sensitive applications and for teams experimenting with small budgets.

Large backbones offer top-tier performance for complex grounding and reasoning tasks but require larger compute budgets.

Community model examples (for instance, SmolVLM2 training write-ups) provide concrete parameter regimes and training schedules useful when pairing the FineVision dataset to a targeted model family.

Key takeaway: Start with PEFT to minimize cost and iterate quickly; move to targeted SFT if domain performance demands full-model adaptation. Community guides and TRL docs are practical companions when building these workflows.

Ethical governance, risks, and solutions for using the FineVision dataset in VLM research

Large multimodal datasets introduce real ethical, privacy, and representation risks. Responsible adoption requires a governance-first mindset: clear documentation, audits, and conservative deployment patterns where uncertainty exists.

FineVision dataset ethical considerations and major risks to monitor

Major risks include:

Personal data leakage from images or captions that contain identifying information.

Visual stereotypes and biased label distributions that amplify harmful representations.

Label provenance drift where annotations reflect cultural or temporal biases.

Dataset creators and evaluators are explicit about these risks in their documentation and evaluation papers; teams should treat those sections as the starting point for governance planning. See the broader policy guidance that outlines how dataset governance should evolve for large multimodal corpora.

FineVision dataset governance and compliance best practices

Recommended governance practices include:

Publishing and maintaining a detailed data card that records provenance, cleaning steps, and known limitations.

Running bias audits and third-party reviews before production deployments.

Applying redaction or consent validation for images and metadata where required by regulation.

For regulated sectors, enterprises should consult domain-specific compliance requirements and consider third-party audits before deploying models trained on broad corpora.

Technical mitigations and responsible deployment

Technical mitigations include:

Automated filtering pipelines for personal data detection and removal.

Fairness-aware reweighting and bias-mitigation fine-tuning to reduce known imbalances.

Red-teaming and adversarial testing to surface emergent failure modes.

When deploying user-facing systems, conservative capability gating is often appropriate—restricting generation or automated decision-making in scenarios where misinterpretation could cause harm.

Key takeaway: Ethical governance is integral—not optional. Combining documentation, audits, and technical mitigations reduces risk and increases trust in FineVision-powered systems.

Complementary datasets and real world user behavior signals for VLM evaluation

Evaluation that pairs large-scale pretraining with human-centered signals is critical for alignment and product readiness. Two complementary datasets commonly used alongside FineVision are VisionArena and MOSI; together they help bridge pretraining gains to user-relevant outcomes.

FineVision dataset evaluation with VisionArena for preference and dialogue testing

VisionArena provides labeled user-VLM conversations useful for preference modeling and RLHF-style fine-tuning. The dataset’s conversational labels and preference signals are especially valuable when assessing dialogic behavior and alignment after FineVision pretraining. Teams commonly pair FineVision-pretrained backbones with VisionArena-style evaluation runs to calibrate response appropriateness and conversational safety. See the VisionArena user-VLM conversation dataset that offers labeled conversations for preference modeling.

FineVision dataset MOSI integration for sentiment and multimodal subjectivity testing

MOSI is a multimodal sentiment dataset that helps calibrate affect recognition and subjectivity judgments. When combined with FineVision outputs, MOSI-style evaluations help teams detect when models misinterpret affective signals in images-plus-text inputs, reducing the risk of producing insensitive or tone-deaf outputs. The original MOSI paper outlines how sentiment signals are annotated across modalities and how they can be used in evaluation.

Metrics and experimental design for real-world evaluation

A practical evaluation plan mixes offline and online signals:

Offline metrics: captioning BLEU/ROUGE, retrieval mAP, VQA accuracy and grounding F1.

Online metrics: user preference rates, task completion, click-through and complaint rates.

Experimental design: A/B tests, targeted red-team scenarios, and longitudinal monitoring to detect distributional drift.

Designing experiments that combine FineVision pretraining with real-world user signals yields a more robust picture of readiness than benchmark metrics alone.

Key takeaway: Complementary datasets like VisionArena and MOSI allow teams to move from dataset-driven improvements to human-centered evaluations that reflect product and safety priorities.

Frequently asked questions about the FineVision dataset

Top questions practitioners ask about FineVision dataset adoption

What is the FineVision dataset and why is it useful?

The FineVision dataset is a 24M-sample multimodal corpus focused on image-text pairs, multi-image contexts, and visual dialogues. It is useful because its scale and structure improve multimodal alignment and transfer for many VLM tasks; see discussions and early coverage in community analysis and dataset papers.

How much compute do I need to get started with FineVision?

You can get started with a subset and PEFT adapters on a small GPU (e.g., a 24–48GB class card). Full pretraining across 24M samples typically requires distributed clusters. Community threads and practical notebooks offer sample budgets and configuration tips.

Can I fine-tune popular VLMs with FineVision on a single GPU?

Yes for low-cost PEFT or adapter approaches where only a fraction of parameters are trained. Full model retraining for best performance usually requires multiple GPUs or TPU pods.

What are licensing and ethical issues to check before using FineVision?

Review the dataset’s license and provenance documentation, run privacy detection and bias audits, and consult governance guidance to ensure compliance for your domain.

How do I evaluate FineVision-trained models in production?

Combine offline benchmarks with VisionArena-style conversational tests and MOSI-like sentiment checks, and use A/B testing to measure user impact. Pair these evaluations with safety red-team scenarios.

Where can I find step-by-step fine-tuning examples for multimodal models?

Look to community notebooks that show PEFT and adapter recipes, and the Hugging Face TRL supervised fine-tuning docs for multimodal-specific patterns.

A forward-looking view on the FineVision dataset future role in VLM research and products

The FineVision dataset summary is simple in scope but profound in implication: a structured, 24 million-sample multimodal corpus designed for VLM objectives nudges the field toward models that understand images in richer contextual frames—multi-image narratives and conversational visual contexts—not just isolated caption pairs. Over the next 12–24 months this resource is likely to accelerate several trends.

First, research labs and startups will push architectures that better exploit multi-image context and dialogue-style pretraining signals; we should expect more work on cross-image attention patterns and memory-enabled visual reasoning. Second, product teams will increasingly adopt hybrid workflows: FineVision pretraining for broad visual grounding, then lightweight PEFT or adapter fine-tuning on in-domain labels to meet operational requirements quickly. This pattern balances the advantages of scale with the precision of curation.

At the same time, governance, bias auditing, and provenance tracking will become non-negotiable as models trained on massive multimodal corpora reach user-facing products. The dataset’s documented limitations and the independent evaluations remind us that scale amplifies both capability and risk. Responsible teams will therefore invest early in bias audits, privacy filters, and incremental deployment strategies that combine human oversight with automated monitoring.

Uncertainties remain. The exact return-on-investment for FineVision-like pretraining depends on model architecture choices, compute pricing trends, and how well teams can engineer sample curricula and evaluation pipelines. Some niche domains will still require bespoke annotation. But the practical opportunities are clear: better base VLMs, faster production cycles for multimodal services, and richer user experiences that blend vision and language more fluidly.

For individuals and organizations ready to act, an achievable near-term plan is to pilot FineVision dataset adoption by running a 90-day experiment: warm-start a backbone on a modest FineVision subset, use PEFT to fine-tune for one product task, pair evaluation with VisionArena-style conversational checks, and document governance decisions. This pragmatic path captures much of the dataset’s upside while keeping cost and risk manageable.

In sum, the FineVision dataset future role is as a catalytic foundation for the next wave of VLM capabilities—powerful, flexible, and demanding of thoughtful stewardship. Teams that treat it as an amplifier of both technical opportunity and ethical responsibility will be best placed to translate its promise into trustworthy products and meaningful research advances.