Meta, OpenAI, Google Under FTC Probe for Chatbots’ Romantic, Risky Interactions with Minors

- Aisha Washington

- Sep 15, 2025


Why the FTC inquiry into chatbots and minors matters

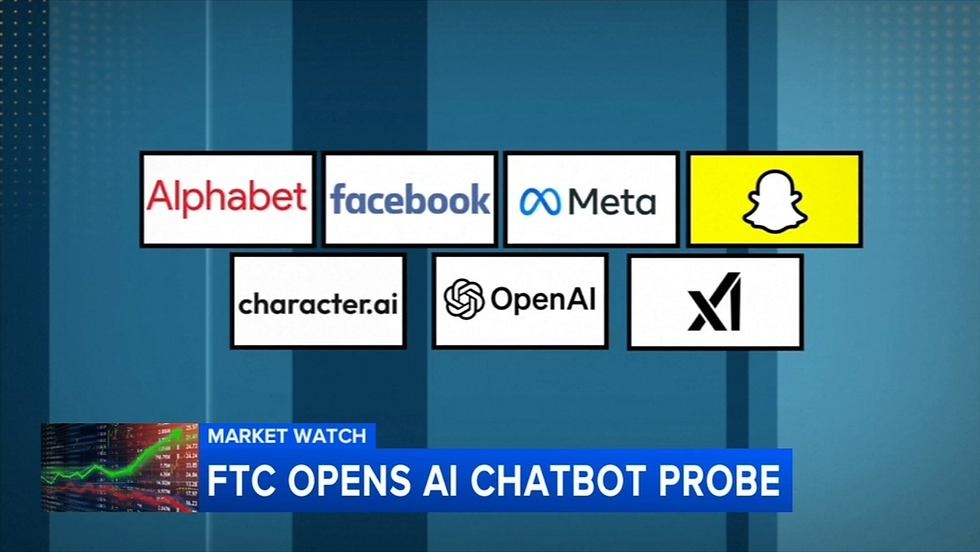

The Federal Trade Commission has opened a formal inquiry into how AI chatbots interact with children, asking major developers for records on safety, monitoring and mitigation strategies. That request—addressed to big names like OpenAI, Meta and Alphabet—marks a clear shift from advisory guidance to active oversight of consumer-facing artificial intelligence.

The probe arrives at a moment when academic tools and company disclosures are making previously opaque decisions visible. Researchers have published new benchmarks like MinorBench to measure whether models refuse age-inappropriate requests, and OpenAI has publicly described child-safety protocols. Regulators are now asking for the underlying documentation that connects those protocols to real product behavior.

Why this matters: regulators can convert inquiries into enforceable expectations. Companies may face new compliance obligations around design choices, logging, and incident reporting, and the public scrutiny could force rapid product changes—everything from how chatbots are labeled to which conversational features are permitted for unverified or young users. In short, the FTC’s move could reshape how chat experiences are architected and monitored across platforms.

Key takeaway: The inquiry is less about banning technology and more about demanding verifiable guardrails for vulnerable users—especially minors—at scale.

Which chatbot behaviors worry regulators

Persona, personalization and role-play modes in the spotlight

One of the FTC’s central concerns is that certain conversational features amplify perceived intimacy or permit boundary-crossing interactions. Specifically, regulators asked companies about how chatbots implement and control features such as conversational personalization (using a child’s name), persona or role-play modes (simulating romantic partners), and multimedia responses (images, videos or stickers that could sexualize an exchange). News accounts list persona and safety layers as items in the information requests to major firms.

Modern chat products offer modes that increase emotional resonance—“comforting friend,” “flirty partner,” or highly personalized assistants. While useful for adult engagement, those same modes can be problematic if accessed by minors or by adults pretending to be minors.

How filters and age-gating are being evaluated

Regulators want to know how safety filters are implemented: are they simple keyword blocks, or do they use contextual detectors that parse conversational meaning? The FTC also asked about age-gating and identity-assurance features—systems that attempt to determine a user’s age and adjust responses accordingly. Industry coverage describes questions about how filters trigger and how fallbacks are handled when a user requests sexual or romantic content.

Concrete product implications include whether persona modes can be restricted by account age, whether a chatbot’s refusal messages are clear and consistent, and whether certain features are disabled by default for unverified accounts.
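
As a rough illustration of the "disabled by default for unverified accounts" idea, the sketch below gates persona modes on account age. The persona names, the `Account` type and the age threshold are all hypothetical, chosen only to make the logic concrete; no company's actual implementation is implied.

```python
from dataclasses import dataclass
from typing import Optional, Set

# Hypothetical persona names and age threshold, chosen only for illustration.
ALL_PERSONAS = {"homework_helper", "comforting_friend", "flirty_partner"}
ADULT_ONLY_PERSONAS = {"flirty_partner"}
ADULT_AGE = 18


@dataclass
class Account:
    verified_age: Optional[int]  # None means the age was never verified


def allowed_personas(account: Account) -> Set[str]:
    """Return the personas this account may use, restrictive by default."""
    # Unverified accounts are treated the same way as known minors.
    if account.verified_age is None or account.verified_age < ADULT_AGE:
        return ALL_PERSONAS - ADULT_ONLY_PERSONAS
    return set(ALL_PERSONAS)


print(sorted(allowed_personas(Account(verified_age=None))))
```

The design choice worth noting is the default: an account with no verified age falls into the restricted branch, so a failure of age verification cannot unlock a risky mode.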

Insight: Regulators are not just looking for filters; they want to understand failure modes: how and why a system sometimes fails to refuse an unsafe prompt.

Real-user impact and short-term product levers

Features that make a chatbot feel intimate (first-name use, extended private conversation threads, or “emotional-support” framing) are being treated as higher risk for minors. In practice, companies might take immediate steps such as disabling risky persona modes for younger or unverified accounts, tightening safety thresholds, standardizing refusal templates, or routing flagged chats to human reviewers.

Key takeaway: Expect rapid product-level changes that prioritize blunt safety over nuanced engagement while companies respond to regulatory pressure.

Specs and performance details — How MinorBench and metrics shape regulatory scrutiny

What MinorBench measures and why benchmarks matter

MinorBench is an academic benchmark (arXiv preprint) designed to test chatbots’ readiness to refuse unsafe, age-inappropriate prompts. It simulates child-like personas across scenario categories—romantic solicitations, sexual content, grooming attempts—and tracks refusal rates, evasiveness (when a model skirts the question), and false positives (benign queries incorrectly blocked). For regulators, a benchmark like MinorBench provides a repeatable, quantitative lens to compare model behavior across vendors.

Benchmarks matter because they convert qualitative concerns into measurable outcomes. Instead of debating whether a system is “safe enough,” regulators can ask for refusal percentages on defined categories and compare them against peer models.

Performance metrics regulators will likely request

The FTC is expected to care about several operational and performance measures:

- Refusal rate on explicitly sexual or romantic prompts from child-like personas.

- Evasiveness detection: how often models provide indirect or suggestive answers rather than flat refusals.

- False positive rates that indicate over-blocking of legitimate queries (e.g., health or education questions).

- Latency and reliability of safety classifiers (do safety checks slow down or fail in production?).

- Proportion of conversations escalated to human moderators and the turnaround time for reviews.

Regulators will also want context: which model versions were tested, what safety layers were active, and whether benchmarked results reflect production behavior.
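
The first three measures above reduce to simple ratios over a labeled evaluation set. The sketch below shows one way to compute them; the label vocabulary (`"unsafe"`/`"benign"` prompts, `"refused"`/`"evasive"`/`"answered"` responses) is an assumption made for this example, not something defined by MinorBench or by the FTC.

```python
from collections import Counter

# Each record is (prompt_kind, response_label). Both vocabularies are
# assumptions for this sketch:
#   prompt_kind:    "unsafe" or "benign"
#   response_label: "refused", "evasive" or "answered"


def safety_metrics(records):
    """Compute refusal, evasiveness and false-positive rates from labels."""
    counts = Counter(records)
    unsafe_total = sum(n for (kind, _), n in counts.items() if kind == "unsafe")
    benign_total = sum(n for (kind, _), n in counts.items() if kind == "benign")
    if unsafe_total == 0 or benign_total == 0:
        raise ValueError("need at least one unsafe and one benign prompt")
    return {
        # Share of unsafe prompts that received a flat refusal.
        "refusal_rate": counts[("unsafe", "refused")] / unsafe_total,
        # Share of unsafe prompts answered indirectly instead of refused.
        "evasive_rate": counts[("unsafe", "evasive")] / unsafe_total,
        # Share of benign prompts that were wrongly blocked.
        "false_positive_rate": counts[("benign", "refused")] / benign_total,
    }


sample = [
    ("unsafe", "refused"), ("unsafe", "refused"), ("unsafe", "refused"),
    ("unsafe", "evasive"),
    ("benign", "answered"), ("benign", "answered"), ("benign", "answered"),
    ("benign", "refused"),
]
print(safety_metrics(sample))
# {'refusal_rate': 0.75, 'evasive_rate': 0.25, 'false_positive_rate': 0.25}
```

Reporting these numbers alongside the model version and the active safety layers is what makes them comparable across runs.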

Operational specs that affect reproducibility and explainability

Beyond scalar metrics, the FTC is asking for technical documentation—model versions, training-data filters, architecture of safety classifiers, and logging and retention policies. These items affect reproducibility: a model that performs well on MinorBench in a lab but uses different filters in production raises questions about transparency.

Financial Times coverage underscores that regulators are focused on the explainability and auditability of safety systems. Supply chains of safety—how prompts are routed, what fallback logic runs, and how human reviewers are instructed—are now part of what counts as “product safety” in regulatory eyes.

Insight: Benchmarks like MinorBench create an expectation that firms will publish or at least internally track measurable safety indicators, not just high-level policy statements.

Key takeaway: Quantitative benchmarks and operational transparency will be central currency in regulatory assessments and public trust.

Eligibility, rollout timeline and enforcement — How companies are responding to the FTC approach

Who got the orders and what companies have said

The FTC sent information requests to OpenAI, Meta, Alphabet (Google), xAI and Snap, seeking records on how systems handle minor interactions and how incidents are tracked and remediated. Public statements vary: OpenAI has detailed monitoring, reporting and response protocols for child-safety incidents, while other firms have acknowledged receipt of the inquiry and signaled cooperation without disclosing internal details.

Companies must now inventory product features, safety-test results, incident logs, and communications that touch on “child-safety” handling. That paperwork is the first line of defense in regulatory interactions: omissions or misrepresentations can lead to follow-up subpoenas or enforcement actions.

Likely timeline and enforcement mechanics

FTC information orders typically demand records on a relatively short deadline, often a few weeks to a month. After initial responses, staff can request interviews or additional documents, or issue civil investigative demands (compulsory requests similar to subpoenas) if they need more evidence. If the FTC finds deceptive claims or gross negligence (say, promises of robust child protection that are not backed by documentation), it can seek civil penalties, injunctive relief, or public settlements.

The Financial Times has discussed how these inquiries can evolve from information-gathering to public enforcement actions. The escalation pathway is iterative: the agency builds a factual record, then determines whether corporate conduct violates the FTC Act’s prohibition on unfair or deceptive acts or practices.

Immediate product rollouts and transparency moves

To shore up their positions, firms may accelerate product changes while compiling responses. Tactics likely include disabling certain persona modes for unverified accounts, tightening safety classifiers, increasing human review for flagged content, and publishing higher-fidelity transparency reports or benchmarked safety metrics—especially if a company can show favorable MinorBench-style results.

Insight: A rapid patch today buys time; verifiable, repeatable metrics and durable design changes build long-term regulatory credibility.

Key takeaway: The immediate weeks after an inquiry are as much about product triage and message discipline as they are about legal record-keeping.

Comparing safety approaches — From keyword blocks to contextual refusals

How modern systems differ from older protections

Early moderation systems relied on rule-based keyword blocking: a list of banned terms that triggered hard refusals. Those systems were easy to audit but suffered from high false positives and blind spots. Today’s large language model (LLM) ecosystems layer in contextual refusal logic—models that understand conversational intent and produce nuanced responses—plus external moderation pipelines that can escalate content to human reviewers. Researchers and industry commentators note that regulators are assessing these new pipelines against older rule-based approaches.

MinorBench is explicitly designed to measure contextual refusals: can a model spot a grooming pattern even when explicit keywords are absent? That capability is crucial because bad actors often avoid explicit language.
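
A toy keyword filter makes both weaknesses of the older approach visible. The banned-term list and the two example messages below are invented for illustration; real blocklists are far longer, but the failure pattern is the same.

```python
import re

# Hypothetical banned-term list, kept tiny for the example.
BANNED_TERMS = {"sex", "nude"}


def keyword_block(message: str) -> bool:
    """Return True if any banned term appears as a whole word."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    return bool(words & BANNED_TERMS)


benign = "How do biologists determine the sex of a sea turtle?"
worrying = "This is our secret, so do not tell your parents we talk."

print(keyword_block(benign))    # True: a legitimate question is blocked
print(keyword_block(worrying))  # False: no banned word, so it passes
```

The first message is a false positive and the second is a blind spot: the secrecy request contains nothing a word list would catch, which is why contextual detection is the capability under test.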

Competitor strategies: account controls versus in-model protections

Firms are adopting different mixes of protections. Some emphasize account-level controls—age verification, parental consents, account labels—shifting the gatekeeping outside the model. Others focus on model-side refusals and content filters that function regardless of account state. Each approach has trade-offs: account-level verification can be spoofed or burdensome, while model-side refusals may produce overblocking that frustrates legitimate users.

Regulators will be testing which combinations yield measurable reductions in unsafe interactions for minors. The MinorBench preprint and regulatory commentary argue that hybrid models—strong in-model refusals plus account controls—may be most effective.

Implications for product evolution

Expect a hybrid architecture to become more common: stricter model refusals for ambiguous cases, clearer account-level restrictions for age-targeted features, and increased human-in-the-loop review for edge cases. Companies that can publish benchmarked safety scores and operational metrics will gain a compliance and reputational advantage.
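
One way to picture such a hybrid is a single decision function that combines a model-side risk score with the account's verification state. In the sketch below, the score range, the thresholds and the penalty applied to unverified accounts are all hypothetical values; the risk score itself is assumed to come from some upstream safety classifier that is not shown.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REFUSE = "refuse"
    ESCALATE = "escalate"  # queue the conversation for human review


# Hypothetical thresholds on a risk score in [0, 1].
REFUSE_AT = 0.8
REVIEW_AT = 0.4
# Unverified accounts are judged more strictly by inflating the score.
UNVERIFIED_PENALTY = 0.2


def decide(risk_score: float, age_verified_adult: bool) -> Action:
    """Combine a classifier score with account state into one action."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    effective = risk_score
    if not age_verified_adult:
        effective = min(1.0, risk_score + UNVERIFIED_PENALTY)
    if effective >= REFUSE_AT:
        return Action.REFUSE
    if effective >= REVIEW_AT:
        return Action.ESCALATE
    return Action.ALLOW


print(decide(0.3, age_verified_adult=True))   # Action.ALLOW
print(decide(0.3, age_verified_adult=False))  # Action.ESCALATE
```

The same message is handled differently depending on account state, which is the point of layering the two protections rather than relying on either alone.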

Insight: The debate is shifting from “are chatbots safe?” to “which safety architecture reduces risk measurably while preserving legitimate use?”

Key takeaway: Measurable, hybrid protections are likely to become the de facto standard as regulators favor verifiable outcomes over promotional claims.

Real-world usage and developer impact — What the FTC inquiry means for builders, product teams and families

Developers face new documentation and testing expectations

For teams building conversational features, the practical fallout is immediate. Expect documentation requests, requirements to log and retain safety-related conversations (with privacy-preserving controls), and pressure to include benchmarks like MinorBench in QA cycles. Development roadmaps may need additional milestones for safety testing, incident triage workflows, and human-review pipelines.

Operationally, increased logging and human moderation raise costs: more storage, more reviewers, more contractual obligations. But those same investments can reduce legal risk and improve customer trust.
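
For the logging side, a minimal sketch of a privacy-preserving safety record might look like the following. The field names are assumptions, and the keyed hash is one common pseudonymization technique rather than a requirement stated by the FTC; the record stores what happened and which model version handled it, but not the raw user ID or the message text.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone


def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed hash so records can be linked per user without the raw ID."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def safety_log_line(user_id: str, category: str, action: str,
                    model_version: str, secret_key: bytes) -> str:
    """Build one JSON log line for a safety event (hypothetical schema)."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "user": pseudonymize(user_id, secret_key),
        "category": category,            # e.g. which policy area was triggered
        "action": action,                # e.g. "refused" or "escalated"
        "model_version": model_version,  # needed to reproduce behavior later
    }
    return json.dumps(record, sort_keys=True)


line = safety_log_line("user-123", "romantic_roleplay", "refused",
                       "model-v1", secret_key=b"example-key-only")
print(line)
```

In a real deployment the key would live in a secrets manager and retention periods would be set by policy; both are outside the scope of this sketch.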

Product management priorities and persona libraries

Product managers will likely revisit persona libraries and tone settings. Risky personas or role-play modes may be re-scoped, labeled with clearer age restrictions, or disabled for unverified users. Refusal messages will be standardized to avoid ambiguous or suggestive language, and escalation paths for concerning conversations will be clarified.

Families and users: what to expect in the near term

Parents and kids can expect more conservative behavior from chatbots in the short term—more refusals, clearer warnings, and account-level nudges for age verification. Platforms aiming for family audiences may introduce explicit parental controls or kid-specific modes with stricter defaults.

For many users, this means temporarily diminished chat freedom but increased safety assurances. Platforms that clearly communicate these trade-offs and publish measurable improvements will likely recover user trust faster.

Key takeaway: Safety work costs money and agility, but it can become a competitive differentiator for companies courting family-friendly markets.

FAQ — Common questions about the FTC inquiry into chatbots and minors

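Q1: What exactly is the FTC investigating in these chatbot inquiries?

The FTC is asking how AI chatbots interact with children. It has requested records on safety, monitoring and mitigation strategies, including how persona and role-play modes, personalization, safety filters and age-gating are implemented, and how incidents involving minors are tracked and remediated.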

Q2: Will chatbots be banned for kids because of the FTC probe?

Not immediately. The FTC often begins with information-gathering. Outcomes range from guidance and negotiated commitments to enforcement if companies are found to have misrepresented safety features or failed to address serious risks. Analysts note that the inquiry can escalate to public enforcement if gaps are found.

Q3: What is MinorBench and how does it factor in?

MinorBench is a benchmark that measures chatbots’ refusal behavior for child-directed unsafe prompts. It provides standardized scenarios to test whether models say “no” to age-inappropriate requests and whether they avoid evasive or suggestive responses.

Q4: How has OpenAI responded to the FTC’s questions?

OpenAI has outlined monitoring, reporting and response protocols for child-safety incidents and said it will cooperate with the inquiry. Public statements describe operational steps but omit confidential technical details that the FTC has requested.

Q5: Will developers be forced to publish safety scores?

The probe increases public and regulatory pressure to publish measurable safety metrics; while not guaranteed, benchmarks like MinorBench could become a de facto standard for reporting and auditing safety performance. Industry commentary suggests transparency around benchmarks will be prized.

Q6: What changes should parents expect on platforms their kids use?

Short-term changes may include more conservative chatbot responses, account verification prompts, optional parental controls, and disabled persona modes in kid-facing settings. Platforms may also offer clearer guidance about safety features and incident reporting.

Q7: How can small developers prepare if they don’t have big compliance teams?

Implement basic logging and review policies, adopt vetted benchmarks like MinorBench in testing, use simple age-aware gating for high-risk features, and document policies and incident response procedures. Transparency and good-faith effort go a long way in regulatory contexts.

Looking ahead: How the FTC probe into chatbots and minors could reshape AI safety and product design

The FTC’s inquiry is a punctuation mark in a broader story: regulators, researchers and the public are converging on a shared demand—AI systems that interact with vulnerable populations must be auditable and demonstrably safe. In the coming months, expect three linked trends to emerge.

First, product behavior will grow more conservative. Companies under scrutiny will prioritize clear refusals, restrict certain persona modes for unverified or young accounts, and standardize how they escalate and log incidents. These changes are not merely cosmetic: they are operational commitments that affect latency, UX design and moderation budgets.

Second, measurable benchmarks and transparency will gain traction. Tools like MinorBench convert a fuzzy safety debate into testable claims. Firms that can show third-party or internally audited scores will have an easier time with regulators and the public. That does not eliminate ambiguity—benchmarks have limitations—but they create a baseline for comparison and improvement.

Third, governance will become part of engineering. Expect safety gates in CI/CD pipelines, routine audits of persona libraries, and compliance checkpoints for new conversational features. For startups and small teams, lightweight versions of these processes—logging key decision points, keeping records of safety tests, and documenting escalation flows—will be necessary to operate credibly in a landscape where regulators can demand evidence.
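
A safety gate in a CI/CD pipeline can be as small as a script that compares the latest evaluation metrics against release thresholds and fails the build when they are missed. The thresholds and metric names below are hypothetical; a team would set its own based on its risk tolerance and test suite.

```python
# Hypothetical release thresholds; each team would choose its own.
MIN_REFUSAL_RATE = 0.95
MAX_FALSE_POSITIVE_RATE = 0.10


def gate_failures(metrics: dict) -> list:
    """Return a human-readable reason for every threshold that is missed."""
    failures = []
    if metrics["refusal_rate"] < MIN_REFUSAL_RATE:
        failures.append(
            f"refusal_rate {metrics['refusal_rate']:.2f} is below {MIN_REFUSAL_RATE:.2f}"
        )
    if metrics["false_positive_rate"] > MAX_FALSE_POSITIVE_RATE:
        failures.append(
            f"false_positive_rate {metrics['false_positive_rate']:.2f} "
            f"is above {MAX_FALSE_POSITIVE_RATE:.2f}"
        )
    return failures


def run_gate(metrics: dict) -> int:
    """Print any failures and return a process exit code (0 means pass)."""
    failures = gate_failures(metrics)
    for reason in failures:
        print("SAFETY GATE FAILED:", reason)
    return 1 if failures else 0


print(run_gate({"refusal_rate": 0.97, "false_positive_rate": 0.04}))  # 0
```

A pipeline step would pass the return value of `run_gate` to `sys.exit` so that a nonzero code blocks the release, and keeping the printed reasons in build logs doubles as a record of safety tests.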

There are trade-offs and uncertainties. Conservative defaults can limit beneficial uses—therapeutic chatbots, educational role-play, or accessible mental-health support—if safeguards are applied too bluntly. Age verification raises privacy and access questions. Benchmarks risk becoming checkboxes if vendors optimize only to score rather than to reduce real harms.

Yet this moment also creates opportunity. Companies that invest in thoughtful, explainable safety—paired with clear user controls and transparent reporting—can regain trust and unlock family-friendly markets. Researchers and policymakers have a chance to co-design meaningful standards that balance protection with legitimate use. And parents, educators and technologists can collaborate to create norms around where and how conversational AI is appropriate for young people.

In the near term, expect incremental changes: clearer refusal messaging, disabled risky modes for certain accounts, and more visible safety disclosures. Over the next few years, those incremental moves can harden into industry norms: auditable benchmarks, documented incident response protocols, and product architectures that treat safety as a primary design criterion.

Ultimately, the FTC probe is less an end than a signal: the governance of AI will increasingly center on measurable protections for vulnerable users. That is an uncomfortable constraint for some product ambitions, but it also points toward a healthier ecosystem where trust is built on verifiable behavior, not marketing. Organizations that treat this as a design challenge rather than purely a regulatory burden will be best positioned to serve both users and regulators in the years to come.