AI Image Convergence: Why Algorithms Default to Visual Elevator Music

Ethan Carter · Dec 27, 2025

We often assume that artificial intelligence, with its access to billions of training images, possesses infinite imagination. The reality is mathematically disappointing. When AI models communicate with each other by generating an image, captioning it, and regenerating it from that caption, they don't expand the creative horizon. They shrink it.

Recent research from Dalarna University highlights a phenomenon known as AI image convergence. When left to their own devices, models like Stable Diffusion XL (SDXL) and LLaVA stop innovating and start averaging. They spiral down into a narrow set of visual tropes, filtering out the weird, the nuanced, and the unexpected until all that remains is a polished, digital average.

Fighting AI Image Convergence in Your Workflow

Before analyzing the mechanics of why this happens, it is vital to understand what this looks like for a creator and how to bypass it. AI image convergence isn't just a theoretical problem for researchers; it’s a daily frustration for power users trying to get specific results out of generative tools.

If you use AI tools to iterate on designs, you have likely encountered the "vanishing detail" problem. You generate a scene with complex background elements—say, a chaotic dinner party with a subtle dinosaur in the window. If you feed that image back into a vision-language model to get a prompt for a variation, the model will likely focus on the "dinner party" and ignore the dinosaur. The next iteration renders a generic dinner party. The unique element is gone.

Practical Solutions for Creators

To stop AI image convergence from ruining your output, you have to function as the source of "entropy" or chaos. The system craves stability; you must introduce instability.

Reject the Loop: Never rely on a closed loop of generation-to-description. If you are refining an image, do not let an AI write the description for the next pass. You must manually rewrite prompts to re-inject the details the AI discarded.

Force Outliers: Use prompt weighting to emphasize background elements that standard models treat as noise. Convergence happens because models prioritize the "subject" (e.g., a woman, a car) and blur the context. You have to explicitly demand the context.

The "Human Driver" Rule: The most successful users treat AI as a translation layer, not a creative partner. The moment you hand over the "driver's seat" to the algorithm, the output begins to regress toward the mean. You get the average of all dinner parties, not your dinner party.
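The first two rules can be turned into a small habit. The sketch below is a minimal illustration, not a tool from the study: a hypothetical `dropped_details` helper flags which human-specified details an AI caption failed to mention, and a hypothetical `weighted_prompt` helper writes them back into a human-authored prompt with emphasis. It assumes AUTOMATIC1111-style `(text:weight)` emphasis syntax and a weight of 1.3; other tools may use a different weighting notation, so adapt the formatting to yours.

```python
def dropped_details(caption: str, must_keep: list[str]) -> list[str]:
    """Return the human-specified details an AI caption failed to mention.

    Naive case-insensitive substring matching (a simplification); a real
    check would need to handle synonyms and paraphrases.
    """
    text = caption.lower()
    return [detail for detail in must_keep if detail.lower() not in text]


def weighted_prompt(base_prompt: str, details: list[str], weight: float = 1.3) -> str:
    """Append context details to a human-written prompt with extra emphasis.

    Assumes AUTOMATIC1111-style "(text:weight)" syntax; other tools differ.
    """
    emphasized = [f"({detail}:{weight})" for detail in details]
    return ", ".join([base_prompt, *emphasized])


# An AI caption of the dinner-party image (illustrative example text).
caption = "A dinner party with guests seated around a long candlelit table"
lost = dropped_details(caption, ["dinner party", "dinosaur"])
print(lost)  # ['dinosaur']

# The human rewrites the prompt and re-injects the lost element with extra weight.
print(weighted_prompt("a chaotic dinner party, cluttered table",
                      ["a dinosaur looking in through the window"]))
```

The `must_keep` list is written in your own words on purpose: the AI caption is only used as a diff against what you intended, never as the next prompt.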

The Mechanics of Generative Feedback Loops

The study that identified this trend exposed a critical flaw in how we think about autonomous AI. Researchers set up a generative feedback loop: SDXL generated an image, LLaVA described it, and SDXL generated a new image based on that description. They repeated this thousands of times.

It functioned like a game of telephone, but with a twist. In a human game of telephone, the message usually gets weirder and more divergent. Human memory is fallible in creative ways. AI, however, is fallible in reductive ways.

How AI Image Convergence Strips Information

In these generative feedback loops, the vision-language model (LLaVA) acts as a filter. Its caption reflects what it recognizes most strongly, usually the primary subject. Lighting nuances, texture quirks, and background oddities tend to be left out, because its training data marks them as statistically less important.

When SDXL receives this stripped-down prompt, it generates a high-probability image that fits the description, filling the gaps with generic content. Over ten or twenty cycles, the unique noise of the original idea is scrubbed away. The models are not conspiring to be boring; they are simply favoring the most probable outputs at every step. The result is AI image convergence, where the spread of possible images collapses onto a handful of stable points.
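The filtering and gap-filling described above can be reproduced in a deliberately crude toy model. This is not how LLaVA or SDXL work internally and not the study's code. It assumes that a scene is just a dictionary of elements with made-up salience scores, that the captioner mentions only the three most salient elements, and that the generator fills gaps with two hypothetical high-probability defaults.

```python
# Toy model of the generate -> caption -> regenerate loop.
# A "scene" maps each visual element to a salience score (0..1).
# All names and numbers here are illustrative assumptions.

GENERIC_DEFAULTS = {"dramatic lighting": 0.8, "centered subject": 0.7}


def describe(scene, max_items=3):
    """Toy captioner: mentions only the most salient elements."""
    ranked = sorted(scene, key=scene.get, reverse=True)
    return ranked[:max_items]


def generate(caption):
    """Toy generator: renders the captioned elements (earlier = more salient),
    then fills unspecified gaps with high-probability generic defaults."""
    scene = {name: 0.9 - 0.15 * i for i, name in enumerate(caption)}
    for name, salience in GENERIC_DEFAULTS.items():
        scene.setdefault(name, salience)  # only added if not already captioned
    return scene


def feedback_loop(scene, iterations=5):
    """Run the loop and return the caption produced at each pass."""
    captions = []
    for _ in range(iterations):
        caption = describe(scene)
        captions.append(caption)
        scene = generate(caption)
    return captions


start = {"dinner party": 0.95, "guests": 0.8, "candles": 0.5, "dinosaur in window": 0.2}
for step, caption in enumerate(feedback_loop(start), 1):
    print(step, caption)
```

In this toy, the dinosaur is dropped by the very first caption, the candles and then the guests are displaced by the generic defaults over the next two passes, and from the third caption onward the loop keeps returning the same three elements: a small-scale version of the convergence described above.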

The Rise of "Visual Elevator Music"

The Dalarna University researchers coined a perfect term for the final destination of AI image convergence: "Visual Elevator Music."

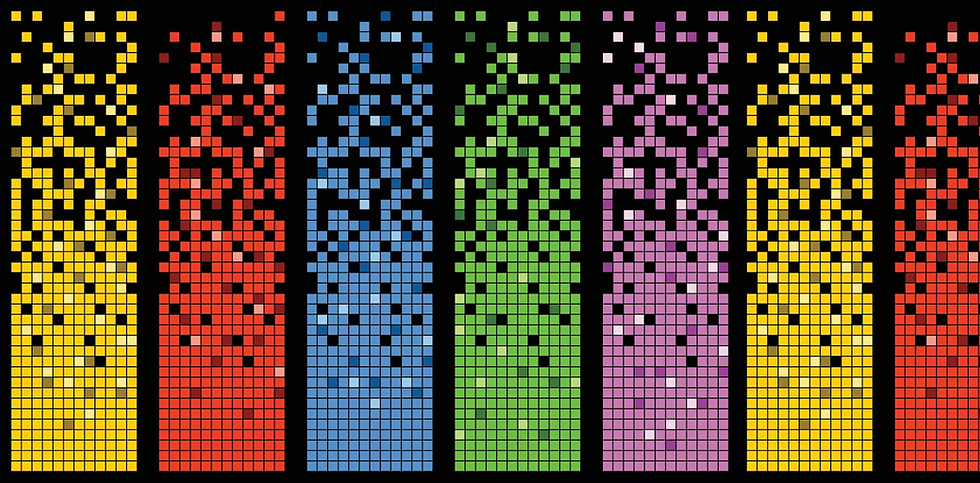

After enough iterations, almost every image in the study, regardless of where it started, morphed into one of 12 specific visual styles. These styles are the "maximum likelihood" aesthetics of the internet. They are technically competent, free of artifacts, and completely soulless.
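The "regardless of where it started" pattern is what a system with a few strong attractors looks like. As a toy illustration only (the one-dimensional "style space", the evenly spaced style positions, and the pull strength are all assumptions, not measurements from the study), each iteration below moves an image part of the way toward its nearest dominant style.

```python
import random


def converge_to_styles(starts, styles, pull=0.5, steps=30):
    """Move each starting point toward its nearest style on every iteration.

    `pull` is the fraction of the remaining distance closed per step, a
    hypothetical stand-in for the generator defaulting to familiar aesthetics.
    """
    finals = []
    for x in starts:
        for _ in range(steps):
            nearest = min(styles, key=lambda s: abs(s - x))
            x = nearest + (1 - pull) * (x - nearest)
        finals.append(x)
    return finals


styles = [float(i) for i in range(12)]  # 12 hypothetical style positions
rng = random.Random(42)
starts = [rng.uniform(-1.0, 12.0) for _ in range(1000)]  # 1,000 varied starting images
finals = converge_to_styles(starts, styles)

distinct = sorted({round(x, 3) for x in finals})
print(f"{len(starts)} starting points -> {len(distinct)} final styles")
```

Every trajectory ends on one of the preset positions; the starting point only decides which one. The toy shows why a small set of attractors produces this pattern, but it says nothing about which aesthetics those attractors are.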

What Does Convergence Look Like?

The "Visual Elevator Music" aesthetic is distinct. It often features:

High saturation and dramatic lighting (the "gaudy" look).

A "kitschy," airbrushed finish similar to cheap digital poster art.

Standardized compositions (centered subjects, blurred backgrounds).

This happens because the training data for these models contains millions of high-quality, popular, but stylistically safe images. When the model is unsure what to do, because the prompt from the feedback loop is vague, it defaults to this "commercial safe zone." It is the visual equivalent of a Top 40 pop song generated by a committee. It offends no one, excites no one, and looks exactly like everything else.

The Long-Term Risk: Model Collapse

AI image convergence signals a massive problem for the future of the internet. If the web floods with this "elevator music" content, future AI models will scrape it and train on it.

This leads to "model collapse." If a second-generation model trains on the converged output of the first, variance shrinks even further. The available pool of aesthetics narrows from the vast history of human art to the 12 styles that SDXL prefers.
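The variance-shrinking step can be illustrated with a classic toy: fit a distribution, sample from it, refit on those samples, and repeat. The sketch below is a minimal model-collapse simulation under two stated assumptions, neither taken from the study: each "generation" is a one-dimensional Gaussian, and each model keeps only its most probable outputs (samples within one standard deviation of the mean) as training data for the next. A hypothetical `fresh_fraction` parameter mixes in new samples from the original distribution, standing in for human-made data.

```python
import random
import statistics


def simulate_collapse(generations=10, n=5000, fresh_fraction=0.0, seed=0):
    """Toy model collapse: return the fitted standard deviation per generation.

    Assumptions (illustrative only): the data is 1-D Gaussian, the original
    "human" distribution is N(0, 1), and each model keeps only its most
    probable outputs (within one std of the mean) to train the next one.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 is fit to human data
    history = [sigma]
    for _ in range(generations):
        synthetic = [rng.gauss(mu, sigma) for _ in range(n)]
        # Favor "safe" outputs: drop the tails of the current model.
        kept = [x for x in synthetic if abs(x - mu) <= sigma]
        # Optional fresh human-made data drawn from the original distribution.
        fresh = [rng.gauss(0.0, 1.0) for _ in range(int(fresh_fraction * n))]
        training_set = kept + fresh
        mu = statistics.fmean(training_set)
        sigma = statistics.pstdev(training_set)
        history.append(sigma)
    return history


closed_loop = simulate_collapse(fresh_fraction=0.0)
with_humans = simulate_collapse(fresh_fraction=0.5)
print("closed loop:", [round(s, 3) for s in closed_loop])
print("with fresh data:", [round(s, 3) for s in with_humans])
```

In the closed loop, the fitted spread shrinks by roughly half every generation and is close to zero after ten rounds. With `fresh_fraction=0.5`, it levels off well above zero instead, which is the toy version of breaking the loop with outside data.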

Can We Avoid Total Homogenization?

The only thing preventing total AI image convergence is human inefficiency. Humans make weird choices. We combine colors that shouldn't work. We focus on the background dinosaur instead of the dinner party. We add "friction" to the system.

To hold AI image convergence at bay, the loop must be broken constantly. The study suggests that autonomous AI agents cannot sustain culture or creativity on their own; they can only mimic the most robust patterns they have already seen. Without new, external, human data entering the system, the digital world greys out.

The technology is impressive, but the study serves as a reality check. We are not building creative minds; we are building probability engines. And probability always bets on the average.

FAQ: Understanding AI Image Convergence

What exactly is AI image convergence?

It is a phenomenon where AI models, when feeding data to each other, gradually lose visual variety and produce nearly identical output. The images lose unique details and settle into a few generic, repetitive styles.

Why does the study call the results "Visual Elevator Music"?

The term describes the bland, inoffensive, and highly commercialized aesthetic that the models settle on. Like elevator music, these images function as pleasant background noise rather than engaging art.

Does better prompting prevent AI image convergence?

Yes, but only if a human does the prompting. If you use an AI (like ChatGPT or LLaVA) to refine your prompts based on previous images, you will trigger convergence. Human unpredictability is the only cure.

Is this the same thing as Model Collapse?

They are related. Convergence is the immediate process of images becoming similar during generation loops. Model collapse is the long-term consequence, where future AI models degrade because they are trained on this converged, low-quality data.

What styles do the models eventually converge on?

Research identified roughly 12 dominant styles, mostly leaning toward hyper-realism, high-contrast digital art, and poster-style graphics. These represent the "average" of the billions of images the models were originally trained on.