AI scaling laws are rewriting the rules of innovation: why bigger models don’t just mean better results

- Ethan Carter

- Aug 15, 2025

- 13 min read

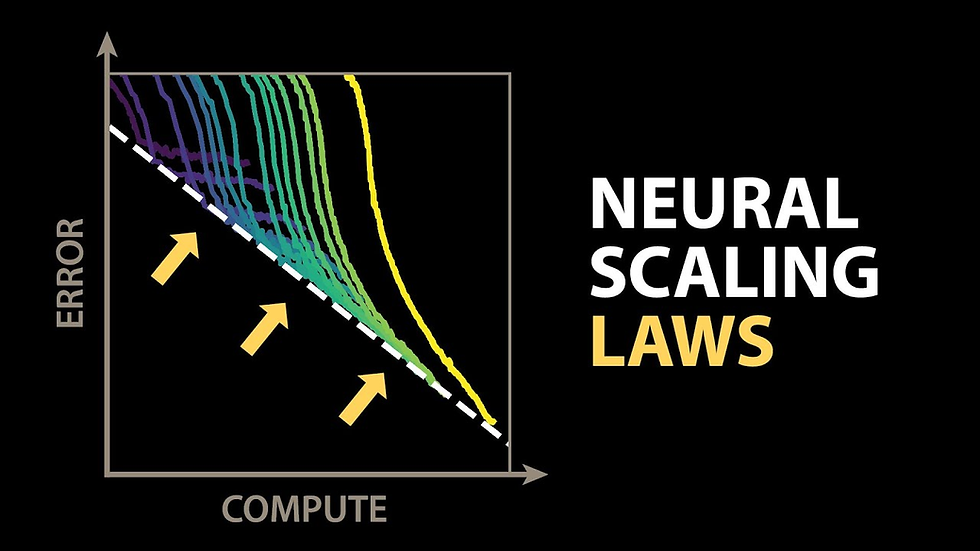

AI scaling laws describe the predictable relationships between the amount of compute, data, and parameters used to train artificial intelligence models, and their resulting performance. These empirical patterns have transformed how researchers and companies approach AI development by suggesting that increasing resources leads to consistent improvements in capabilities. However, these laws also reveal that simply building larger models does not guarantee indefinite gains. Instead, there are complex trade-offs that introduce diminishing returns and practical limits on performance improvements.

This topic is especially relevant now, as commercial forecasts and investment strategies increasingly hinge on scaling up AI systems. Tech giants and startups alike are pouring billions into compute infrastructure, while policymakers debate the implications of concentrated power in large-scale AI models. The narrative that "bigger is better" drives much of this momentum, but emerging research questions this assumption, urging a more nuanced understanding.

This article aims to unpack the core concepts behind AI scaling laws, examine the evidence for their limits, and explore the practical consequences across industry and governance. It will also highlight promising alternatives to brute-force scaling, offering a holistic view of where AI innovation is heading. By understanding these dynamics, stakeholders can make better-informed decisions about investments, research priorities, and policy frameworks.

1. What are AI scaling laws and why they matter — background and core concepts

AI scaling laws are empirical relationships that describe how improvements in model performance follow predictable patterns as the size of a model’s compute budget, training data, and parameter count increase. Typically measured through metrics like cross-entropy loss or accuracy on downstream tasks, these laws emerged from systematic research showing consistent power-law trends across diverse model families.

The concept first gained traction when researchers noticed that larger neural networks trained with more data tended to improve predictably rather than erratically. These findings have roots in both academic experiments and open-source community efforts, offering a quantitative foundation for forecasting model capabilities based on scale.

Large language models (LLMs) — such as GPT-style transformers — have become the poster children of scaling laws. Their remarkable ability to improve at tasks like language understanding, coding, and reasoning as they grow in size illustrates both the promise and complexity of scaling. Notably, some capabilities emerge only after crossing certain scale thresholds, demonstrating non-linear improvements in behavior.

Understanding these background concepts is essential because it frames expectations moving forward: while larger models often outperform smaller ones, this success comes with increasing costs and diminishing marginal gains. This trade-off shapes decisions around research funding, infrastructure investment, and product development.

For a comprehensive overview of these principles, the Alignment Forum’s analysis offers an in-depth exploration of scaling law patterns, while practical discussions on LLMs can be found in resources like Hackernoon’s review.

1.1. The empirical patterns: compute, data and parameter relationships

The mathematical heart of AI scaling laws lies in power-law functions, which express performance improvements as proportional to some power of compute resources or dataset size. For example, loss often decreases predictably according to a function like:

Loss ∝ (Compute)^(-α)

where α is a positive constant less than 1, indicating diminishing but consistent gains.

These predictable curves appear across different architectures and datasets, allowing researchers to estimate how much additional compute or data will improve model quality before training even begins. Metrics such as cross-entropy loss during training or zero-shot task accuracy demonstrate these patterns clearly.

Practically, this means training schedules and resource allocation can be optimized by forecasting when returns will be worthwhile or when costs outweigh benefits. For instance, if doubling compute yields only a small gain in accuracy due to diminishing returns, it may be more efficient to explore other innovations.

The Alignment Forum’s detailed analysis provides key insights into these predictable empirical trends, helping organizations plan their AI development pipelines with greater precision.

1.2. Why LLMs show emergent capabilities as they scale

Beyond smooth performance curves, large language models exhibit emergent phenomena — qualitatively new abilities that appear suddenly once models reach critical sizes. Unlike steady incremental improvements, emergent capabilities might include advanced reasoning, following complex instructions, or coding proficiency that smaller models lack entirely.

This distinction between continuous gains and discrete jumps challenges traditional views of model improvement. Emergence suggests that some aspects of intelligence are not merely scaled-up versions of simpler behaviors but arise from complex interactions within massive parameter spaces.

However, emergent capabilities remain poorly understood. While researchers observe these phenomena consistently in LLMs, the precise mechanisms behind them are still under investigation. This means predictions about what a model will do at larger scales retain uncertainty despite the overall predictability from scaling laws.

For those interested in practical examples and ongoing debates about emergence in large models, Hackernoon’s discussion offers accessible commentary grounded in recent research.

2. How AI scaling laws drive innovation and reshape market dynamics — industry and market impact

The existence of predictable scaling laws has galvanized a surge of investment and innovation in AI technologies. Knowing that increasing compute and data can reliably improve model capabilities encourages firms to allocate massive resources toward building ever-larger models and the infrastructure needed to support them.

This phenomenon reshapes market dynamics by enabling new products that were previously impossible or impractical. From advanced natural language processing APIs to AI-powered analytics and automation tools across sectors like healthcare, finance, and customer service, larger models often provide the foundation for cutting-edge solutions.

The market impact is substantial: according to Gartner’s forecast, global AI revenues are expected to hit $500 billion by 2025—driven largely by products enabled through scaling-driven innovations. This growth reinforces a feedback loop where successful scaling attracts further investment.

At the same time, this dynamic concentrates power among entities with access to specialized hardware, massive datasets, and cloud infrastructure—shaping competitive advantages and raising concerns about ecosystem diversity.

For a detailed analysis of how scaling laws are translating into commercial success and market shifts, McKinsey’s report on the impact of AI scaling laws on innovation offers valuable insights alongside coverage by the Financial Times on user behavior changes driven by these trends.

2.1. Investment strategies: why firms pour resources into scale

Investors recognize that scaling laws provide a relatively predictable path to competitive advantage. By committing significant capital to acquiring compute power and proprietary datasets, companies build moats that are hard for rivals to replicate quickly.

Cloud providers and hardware vendors play pivotal roles by offering scalable infrastructure that reduces upfront costs for customers but simultaneously raises barriers for new entrants who lack access to state-of-the-art resources. This dynamic accelerates an arms race centered on scale.

Within corporations, strategies often split between heavy R&D investments aimed at pushing model boundaries and productization efforts focused on delivering scalable services through APIs or cloud platforms.

McKinsey’s analysis highlights how these investment patterns reflect confidence in scaling laws as a reliable foundation for growth while also signaling risks associated with escalating costs and concentration.

2.2. Market forecasts and revenue implications of scaling-driven products

Gartner’s projection that AI-related revenues will reach $500 billion by 2025 underscores the economic weight of scaling-driven innovation. Industries expected to benefit most include technology services, financial institutions deploying predictive analytics, healthcare applications using natural language processing for diagnostics, and retail sectors enhancing customer experiences through chatbots.

Scaling enables diverse monetization models such as pay-per-use APIs, vertical-specific AI solutions tailored to industry needs, or embedded intelligent features within enterprise software suites.

However, this growth also raises concerns about market concentration risks where dominant players capture disproportionate value due to their scale advantages.

Coverage by the Financial Times emphasizes economic consequences tied to user behavior shifts enabled by larger models—such as increased reliance on AI assistants—and discusses implications for regulation and competition policy moving forward.

3. The limits of scale: diminishing returns, cost curves and technical ceilings

Despite the enthusiasm for larger models driven by AI scaling laws, emerging evidence points to diminishing returns: beyond certain points, adding more compute or parameters leads to progressively smaller performance gains relative to cost increases.

Academic research published on arXiv identifies flattening improvement curves where metrics like loss reduction slow down markedly as scale grows. This suggests technical ceilings may eventually constrain benefits from naive scaling alone.

These diminishing returns impact return-on-investment (ROI) calculations substantially: firms must weigh whether extra expenditures yield enough incremental capability to justify costs in compute time, energy consumption, and infrastructure maintenance.

Public discourse reflected in mainstream media such as the BBC increasingly questions whether “bigger is always better,” highlighting concerns about sustainability, concentration of power, and limited consumer upside beyond a certain scale threshold.

3.1. Empirical evidence for slower marginal gains

Recent empirical studies show that while initial increases in compute produce steep improvements in loss metrics during training, the curve tapers off significantly at high scales. This pattern also appears when evaluating downstream task performance—such as question answering or summarization accuracy—where progress plateaus despite exponential resource increases.

Researchers interpret this statistically as an inevitable consequence of optimization difficulties and model capacity saturation. Practically, this forces reconsideration of cost-benefit trade-offs when deciding whether to scale further or explore alternative approaches.

The work available at arXiv provides detailed quantitative analyses supporting these conclusions with rigorous experiments across model architectures.

3.2. Media and public concern: why journalists are asking “is bigger always better?”

Journalistic coverage amplifies concerns about the social impact of endless scaling efforts. The BBC reports on debates around the environmental costs of massive compute runs, the risk of monopolistic control over AI capabilities by few players with deep pockets, and questions about whether consumers truly benefit proportionally from larger models’ incremental improvements.

These narratives underscore tensions between hype-driven expectations and technical reality, urging stakeholders to consider sustainability and equitable access alongside pure capability growth.

By connecting public discourse with scientific evidence on scaling laws limits, this coverage fosters more balanced conversations about responsible innovation pathways.

4. Economic, environmental and infrastructure challenges from scaling

The pursuit of ever-larger AI models brings tangible economic and environmental costs that extend beyond raw compute budgets. Building and running these systems requires vast physical infrastructure—including data centers with specialized hardware—and consumes significant energy with associated carbon footprints.

These factors contribute to operational overheads that can strain organizational resources while raising questions about sustainability within tech ecosystems.

Research addressing sustainability challenges appears in publications like arXiv’s study on efficiency and limits, while major outlets such as the Financial Times report on pressures faced by big tech companies balancing investment cycles alongside growing scrutiny from regulators and investors concerned with environmental impact.

Similarly, industry timelines discussed by The Atlantic highlight strategic pressures imposed by scaling deadlines tied to competitive positioning.

4.1. Energy, carbon footprint and sustainability trade-offs

Training large models involves running thousands of GPU hours across clusters consuming megawatts of power—leading to significant greenhouse gas emissions unless offset by renewable energy strategies.

Inference workloads at scale also contribute ongoing energy demands as AI services become widely used in consumer applications worldwide.

To mitigate these impacts, organizations pursue several strategies:

Improving hardware efficiency through next-generation processors

Optimizing data center cooling and energy management

Investing in renewable energy sources

Enhancing algorithmic efficiency to reduce unnecessary computation

Transparent reporting of carbon footprints linked to AI workloads is gaining traction as part of corporate sustainability commitments—a trend documented in recent research focusing on balancing efficiency with scalability (arXiv).

4.2. Infrastructure and competitive dynamics

High capital requirements for building cutting-edge silicon chips and operating hyperscale data centers create formidable barriers to entry for smaller players.

Cloud providers like AWS, Google Cloud, and Microsoft Azure serve as critical infrastructure enablers but also become gatekeepers controlling access to advanced hardware needed for large-model training.

National policies encouraging domestic semiconductor manufacturing or cloud infrastructure investments further shape geopolitical dynamics around AI competitiveness.

This concentration risks regional monopolies over AI innovation while raising concerns about resilience and diversity within global AI ecosystems—a theme explored by the Financial Times and The Atlantic.

5. Alternatives to brute-force scaling: efficient methods, architectures and research directions

As the limits and costs of pure scale become clearer, researchers increasingly explore efficient scaling strategies that achieve capability improvements without exponential resource demands. These alternatives emphasize smarter architectures, algorithmic innovations, and hybrid approaches integrating external knowledge sources.

Recent work highlighted on arXiv advocates for methods such as sparse computation techniques or retrieval-augmented models that selectively activate parts of networks or incorporate relevant external data dynamically instead of relying solely on parameter count increases.

Community discussions at venues like the Alignment Forum emphasize optimizing trade-offs between complexity and maintainability while tailoring solutions for specific applications rather than pursuing ever-larger generalist models blindly.

5.1. Algorithmic and architectural innovations that beat brute-force size

Key promising techniques include:

Mixture-of-experts (MoE): selectively activating subsets of model components per input instance reduces computation without sacrificing expressiveness.

Sparse computation: only processing relevant parts of data or network layers dynamically.

Retrieval-augmented generation (RAG): integrating external databases or documents at inference time to supplement knowledge without expanding model parameters.

Model distillation: transferring knowledge from large models into smaller ones retaining essential capabilities with lower cost.

Each approach involves trade-offs related to implementation complexity or applicability to specific tasks but collectively offers paths toward cost-effective performance gains beyond raw size increases (arXiv).

5.2. Practical playbook: how companies can pursue capability without exponential cost

Organizations looking to optimize must first define clear use cases aligned with business goals rather than defaulting to maximum scale.

A practical approach includes:

Benchmarking smaller models rigorously against task requirements.

Investing in data quality enhancements over sheer quantity.

Exploring retrieval mechanisms or fine-tuning strategies tailored to domain-specific needs.

Monitoring cost-benefit profiles continuously during deployment.

Balancing investments between scaling compute versus algorithmic innovations.

This decision framework helps avoid runaway expenses while capturing sufficient capability improvements—a strategy reinforced by discussions at the Alignment Forum.

6. Policy, governance and the regulatory response to scaling-driven AI

The rapid capability growth enabled by large-scale models introduces unique governance challenges around concentration, transparency, risk assessment, and deployment safety. Traditional regulatory frameworks designed for earlier tech eras struggle to keep pace with these dynamics.

Emerging policy proposals focus on adaptable oversight mechanisms sensitive to scale-driven risks—recognizing that regulatory levers must evolve beyond simple pretraining-era assumptions (arXiv).

Balancing innovation incentives with societal risk mitigation remains critical as governments worldwide consider frameworks addressing potential misuse or unintended harms from powerful AI systems.

Expert perspectives detailed by the Financial Times stress cross-sector collaboration between policymakers, industry leaders, and civil society for effective governance models attuned to evolving technical realities.

6.1. Regulatory priorities tied to scale: transparency, risk assessment and auditability

Key regulatory priorities include:

Model disclosure requirements: mandates for documenting training data sources, parameter counts, intended use cases.

Risk assessment frameworks: standardized protocols evaluating potential harms before deployment.

Auditability provisions: ensuring independent reviewability of high-risk systems through technical or procedural means.

These levers must adapt when dealing with extremely large or opaque models whose inner workings defy easy interpretation—a challenge explored in recent governance literature (arXiv).

6.2. Industry self-regulation and technical standards

Alongside formal regulation, industry-led initiatives promote voluntary safety commitments including:

Cross-company audits.

Sharing best practices around data governance.

Developing open standards balancing transparency with intellectual property protection.

Such collaborative efforts help build trust while accelerating responsible innovation—a nuanced approach highlighted in expert commentary featured by the Financial Times.

7. Case studies and real-world use cases: where scaling added value — and where it didn’t

Examining concrete examples reveals that AI scaling laws translate unevenly across sectors depending on task complexity, resource availability, and business models.

Successful cases often involve enterprise APIs powered by large foundation models that enable flexible workflows such as automated document processing or customer support chatbots—leveraging scale for robust generalization (Gartner).

Conversely, other scenarios benefit more from smaller or specialized models optimized for narrow domains where cost-effectiveness outweighs marginal performance gains from bigger systems (McKinsey).

Journalistic investigations like those from the BBC highlight projects where overly large models failed to justify their expenses compared to leaner alternatives designed for targeted use cases.

7.1. Example: enterprise APIs and commercial scaling wins

APIs providing AI-as-a-service based on large language models have unlocked new revenue streams by enabling companies without deep ML expertise to integrate advanced NLP functionalities quickly.

These platforms capitalize on scale-driven robustness across diverse tasks—from summarization to code generation—enabling scalable customer value propositions tied directly to usage volume (Gartner).

7.2. Example: when smaller or specialized models were more effective

In domains like legal document review or medical imaging analysis where context is specialized but well-defined, smaller task-specific or distilled models complemented by retrieval methods often outperform massive generalist systems when considering total cost-performance balance (McKinsey).

These examples suggest product teams should carefully assess whether scale is truly necessary before committing resources—a lesson underscored by investigative reports covering cost-effectiveness challenges (BBC).

FAQ

Q1: What exactly are AI scaling laws and how predictable are they? AI scaling laws describe empirical power-law relationships showing how increasing compute resources, training data size, or model parameters predictably improves model performance metrics such as loss or accuracy. While highly consistent across many experiments (OpenAI), they do not guarantee indefinite linear gains due to emerging limits at extreme scales (arXiv).

Q2: Do bigger models always outperform smaller ones? Generally yes for many tasks due to capacity advantages; however, diminishing returns mean larger isn’t always more cost-effective or necessary depending on use case complexity (OpenAI). Sometimes smaller specialized models achieve better ROI (McKinsey).

Q3: How should startups decide whether to scale a model? Startups should benchmark smaller models thoroughly against their needs first; invest in data quality; consider algorithmic efficiency; then evaluate if added scale justifies costs using frameworks informed by scaling law insights (Alignment Forum).

Q4: What are the environmental costs of large-scale training? Training large models consumes significant energy leading to carbon emissions unless offset strategically through efficiency measures or renewables (arXiv). Measuring operational footprints transparently is increasingly important for sustainability commitments.

Q5: Are there policy or regulatory standards for large models today? Currently emerging frameworks focus on transparency mandates, risk assessments, and auditability tailored for scaled systems but are still evolving globally (arXiv). Industry self-regulation complements formal efforts (Financial Times).

Q6: Can alternative architectures eliminate the need for huge models? Techniques like mixture-of-experts or retrieval augmentation can reduce dependence on sheer size while maintaining capabilities (arXiv). These approaches offer promising efficiency gains but require careful design trade-offs (Alignment Forum).

Conclusion: Trends & Opportunities in AI Scaling Laws

AI scaling laws have become foundational principles guiding modern AI innovation—demonstrating that larger models generally bring improved capabilities but also exposing limits where bigger does not always mean better results. The era ahead will likely see a convergence where efficiency innovations complement scaled architectures rather than replace them outright.

For executives navigating this landscape, adopting cost-benefit frameworks focused on use-case fit will maximize ROI while managing growing compute demands through partnerships with infrastructure providers is crucial. Researchers should prioritize efficiency improvements alongside interpretability research that demystifies emergent behaviors arising from scale. Policymakers must develop adaptable governance frameworks balancing innovation incentives with robust risk mitigation reflecting scale-driven challenges.

Over the next three to five years expect a more nuanced ecosystem shaped by efficient methods gaining prominence alongside selective scaling investments—and evolving regulatory regimes ensuring responsible deployment across society.

Ultimately understanding AI scaling laws equips stakeholders with critical insight into the future of AI, reminding us that bigger models don’t always mean better results, but thoughtful application of scale combined with innovation holds transformative potential.NVIDIA’s exploration captures this balanced vision eloquently while industry analyses like those from the Financial Times reinforce its practical significance in shaping long-term strategy today.

Bold takeaway: Understanding when—and how—to scale intelligently is key to unlocking sustainable AI innovation that balances capability gains with economic viability and societal responsibility.