Alibaba Qwen3-Max vs OpenAI and Google: Who Leads the AI Race?

- Aisha Washington

- Sep 25, 2025

Alibaba has released Qwen3-Max, its largest AI model to date. The move signals a clear strategic push into flagship-scale generative models and direct competition with OpenAI and Google DeepMind. The timing matters: hyperscalers are rapidly iterating on model scale, multimodal capabilities, and enterprise tooling while governments and customers demand clearer governance and deployment options. For product teams, engineers, and technology leaders, the launch reframes procurement and architecture choices, especially for organizations operating in Asia or with Alibaba Cloud relationships.

This article explains what Qwen3-Max brings to the table, summarizes Alibaba’s performance claims and practical deployment options, compares the offering to OpenAI and Google, walks through developer and enterprise considerations, and highlights policy implications. Along the way you’ll find concrete examples and links to primary documentation so you can evaluate whether Qwen3-Max fits your technical and compliance needs.

Feature breakdown: Alibaba Qwen3-Max key features

What Qwen3-Max is designed to do

Alibaba positions Qwen3-Max as its most capable model to date, built for broad multimodal and large-language workloads. “Multimodal” means the model can handle more than text—for example, combining text with images or other inputs—while LLM (large language model) refers to neural networks trained on huge corpora of text to generate and reason with language. Alibaba’s public messaging emphasizes larger model scale, improved contextual understanding, and closer parity on industry benchmarks with leading Western models.

Qwen3-Max is marketed toward enterprise scenarios that require higher-quality generation, longer contexts, and integration into cloud-native data pipelines. That positioning shows in the way Alibaba bundles the model with developer tooling: the company highlights Model Studio and cloud services for tasks such as web search, retrieval-augmented generation (RAG), and semantic search, suggesting the target buyer is an enterprise already using Alibaba Cloud.

Insight: framing Qwen3-Max as a cloud-native enterprise model gives Alibaba an angle many enterprises find attractive—tight integration between model, tooling, and data infrastructure.

Tools, integrations, and usability

Alibaba pairs Qwen3-Max with product tooling designed to shorten time-to-production. Model Studio and associated cloud integration features provide UI workflows and APIs for indexing documents, building semantic search, and orchestrating RAG pipelines. These workflows reduce bespoke engineering work for teams that want to add knowledge retrieval to QA or support agents.

Practically, this means an organization can prototype semantic search or RAG in Model Studio, then scale to production with Alibaba Cloud’s hosting and monitoring. For enterprises that already host data in Alibaba Cloud, that end-to-end stack is a persuasive argument—especially where data residency and latency matter.

Scale, specs, and performance details for Qwen3-Max

What Alibaba officially claims and what that means

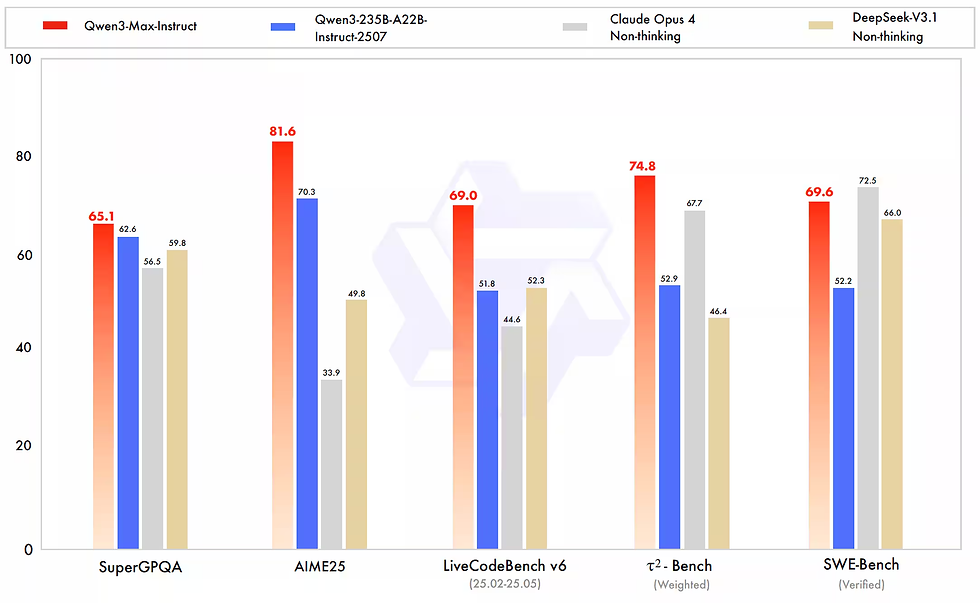

Alibaba has described Qwen3-Max as its largest and most capable model, and company statements assert that it narrows the gap with top models from OpenAI and Google DeepMind on standard benchmarks. Coverage emphasizes competitive benchmark results reported by Alibaba but stops short of publishing exhaustive public metric tables or independent third-party validations. For readers, that distinction matters: vendor benchmarks are useful indicators but independent head-to-head evaluations are the gold standard for feature parity and robustness.

Press coverage captures Alibaba’s claim that Qwen3-Max rivals the best offerings from OpenAI and Google DeepMind. Yet those articles highlight company-reported scores rather than open benchmark artifacts or reproducible tests. Expect independent benchmarks to follow as researchers and cloud customers gain access.

Hardware, cloud stack, and deployment trade-offs

Alibaba ties Qwen3-Max to its cloud infrastructure. That means practical deployment will involve Alibaba Cloud’s compute, networking, and Model Studio orchestration. Large models bring predictable trade-offs:

- High memory and GPU/accelerator requirements for low-latency inference.

- Choices between latency-optimized single-instance deployments and throughput-optimized batched inference.

- The need for retrieval and caching layers when using long-context prompts or RAG to avoid repeated expensive model calls.

These are not unique to Alibaba—OpenAI and Google customers face the same engineering choices—but the integration with Alibaba Cloud can simplify some of the operational work where the customer already uses that provider.
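To make the caching trade-off concrete, here is a minimal Python sketch of an exact-match response cache placed in front of an expensive model call. It is an illustration under stated assumptions, not Alibaba's tooling: `CachedModel` and `fake_model` are hypothetical names, and the stand-in function replaces whatever hosted endpoint a team would actually call.

```python
import hashlib
from typing import Callable, Dict


class CachedModel:
    """Wraps an expensive model call with an exact-match response cache."""

    def __init__(self, call_model: Callable[[str], str]) -> None:
        self._call_model = call_model
        self._cache: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumption: collapsing whitespace and case is safe for this workload.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def generate(self, prompt: str) -> str:
        key = self._key(prompt)
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        # Cache miss: pay for one model call and remember the answer.
        self.misses += 1
        answer = self._call_model(prompt)
        self._cache[key] = answer
        return answer


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a hosted model endpoint.
    return f"answer to: {prompt.strip()}"


model = CachedModel(fake_model)
model.generate("What is our refund policy?")
model.generate("what is our   refund policy?")  # same key after normalization
print(model.hits, model.misses)
```

A production cache would also need expiry and invalidation when the underlying documents change; the sketch leaves those out to keep the idea visible.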

Implementation caveat: Alibaba has not published exhaustive hardware specs or cost benchmarks, so teams should start with a pilot and capacity tests before committing production traffic.

Benchmarking notes and uncertainty

Reporting so far emphasizes comparative claims rather than a full public release of datasets and validation artifacts. That leaves a few unknowns: model robustness on niche tasks, safety and hallucination rates in different languages, and performance at extreme prompt lengths. Expect follow-up from independent labs and community benchmarks as access expands.

Availability, pricing, and developer access

Launch, staged rollout, and who can get access

Alibaba publicly announced Qwen3-Max as released and appears to be rolling it out in stages, focused first on Alibaba Cloud and Model Studio users. Media coverage and Alibaba’s documentation suggest an enterprise-first distribution model rather than a broad consumer API launch. For organizations that already use Alibaba Cloud, that means a faster path to trial and integration; for others, regional availability and compliance constraints may delay access.

Model Studio’s web-search documentation provides step-by-step guidance for implementing search and RAG features—an indicator that enterprise users are a primary audience.

Pricing signals and procurement expectations

Public reporting does not list a universal consumer price for Qwen3-Max; instead, pricing is likely to follow Alibaba Cloud’s usage-based billing model or enterprise agreements. Vendors typically price flagship models based on consumed GPU-hours, request volumes, and additional managed services (indexing, vector stores, etc.). Organizations should expect to engage Alibaba Cloud sales for capacity planning and to negotiate SLAs that match latency, throughput, and residency requirements.

Practical advice: plan for a pilot that includes representative datasets and workload patterns, then convert those measurements into a cost model before wide rollout.
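One way to turn pilot measurements into a cost model is sketched below in Python. Every rate in it is a placeholder, not an Alibaba Cloud price, and the three cost components (request volume, GPU-hours, managed services) simply mirror the pricing dimensions described above. `PilotUsage`, `RateCard`, and `estimate_monthly_cost` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PilotUsage:
    requests_per_day: float   # measured during the pilot
    gpu_hours_per_day: float  # measured or estimated during the pilot


@dataclass
class RateCard:
    per_1k_requests: float           # placeholder rate, not a real price
    per_gpu_hour: float              # placeholder rate, not a real price
    managed_services_monthly: float  # e.g. indexing or vector store fees


def estimate_monthly_cost(usage: PilotUsage, rates: RateCard, days: int = 30) -> float:
    """Project a monthly bill from daily pilot measurements."""
    request_cost = usage.requests_per_day * days / 1000 * rates.per_1k_requests
    gpu_cost = usage.gpu_hours_per_day * days * rates.per_gpu_hour
    return request_cost + gpu_cost + rates.managed_services_monthly


usage = PilotUsage(requests_per_day=10_000, gpu_hours_per_day=8)
rates = RateCard(per_1k_requests=2.0, per_gpu_hour=3.0, managed_services_monthly=500.0)
print(estimate_monthly_cost(usage, rates))
```

Swapping in the rates from an actual quote makes it easy to compare scenarios, such as doubling traffic, before negotiating capacity.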

How developers get started

Developers can begin with Model Studio tutorials such as the web-search integration guide. Typical first steps include:

- Indexing a sample dataset and configuring a retrieval layer.

- Creating a prompt template and testing generation quality.

- Measuring latency and cost per query under realistic traffic.

- Adding monitoring and safety filters for sensitive data.
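The latency measurement step can start with something as simple as the Python sketch below. It times sequential calls and reports mean, median, and a nearest-rank 95th percentile. `stub_endpoint` is a hypothetical stand-in for a real client call, and a sequential loop is only a baseline: it does not reproduce concurrent production traffic.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call: Callable[[str], str], prompts: List[str]) -> Dict[str, float]:
    """Time each call sequentially and summarize the samples in seconds."""
    if not prompts:
        raise ValueError("need at least one prompt")
    samples = []
    for prompt in prompts:
        start = time.perf_counter()
        call(prompt)
        samples.append(time.perf_counter() - start)
    samples.sort()
    # Nearest-rank p95: smallest sample with at least 95% of samples at or below it.
    p95 = samples[math.ceil(0.95 * len(samples)) - 1]
    return {
        "count": len(samples),
        "mean_s": statistics.mean(samples),
        "p50_s": statistics.median(samples),
        "p95_s": p95,
    }


def stub_endpoint(prompt: str) -> str:
    # Hypothetical stand-in; replace with a real client call during the pilot.
    time.sleep(0.001)
    return "ok"


report = measure_latency(stub_endpoint, ["sample question"] * 20)
print(report)
```

Running the same harness against representative prompts, rather than one repeated string, gives numbers that are more useful for capacity planning.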

If your organization needs a multi-cloud architecture or follows strict data residency rules, engage early with Alibaba Cloud to understand regional availability and solutions for hybrid deployments.

Insight: starting with a focused, production-like pilot reduces surprises and exposes integration and compliance issues early.

How Qwen3-Max stacks up against OpenAI and Google DeepMind

High-level market positioning

Coverage frames Qwen3-Max as a deliberate challenger to OpenAI and Google DeepMind’s flagship models, with Alibaba emphasizing benchmark improvements and enterprise integration. The narrative is not merely about raw model size; it’s about packaging the model inside an enterprise cloud experience that includes Model Studio, data connectors, and managed services.

Alibaba has said Qwen3-Max rivals the best offerings from OpenAI and Google DeepMind, but the claim should be read alongside the reality that OpenAI and Google have invested heavily in global API infrastructure, third-party partnerships, and a wider base of independent evaluations.

Strengths relative to OpenAI and Google

Alibaba’s advantages are structural and regional:

- Tight integration with Alibaba Cloud services (indexing, storage, and networking) can reduce engineering overhead for customers already on that platform.

- Strong presence and compliance handling in Asia, where Alibaba Cloud has established regional infrastructure and market relationships.

- Enterprise-focused tooling in Model Studio that surfaces common patterns like RAG and semantic search with ready-made workflows.

For organizations whose data, customers, or compliance footprint is Asia-centric, those advantages can be material.

Where the unknowns remain

Public reporting does not yet provide a comprehensive, independent head-to-head on robustness, multilingual safety, or adversarial performance. OpenAI and Google benefit from a larger base of third-party audits, community benchmarks, and customer telemetry visible in diverse production contexts. Until independent researchers publish detailed comparisons, assertions of parity remain provisional.

Bold takeaway: Qwen3-Max shifts the conversation from “who can build the biggest model” to “who can offer practical, safe, and cost-effective enterprise deployment,” with Alibaba staking a claim on the latter through cloud integration.

Real-world adoption, developer impact, and use cases

Practical use cases and early adoption patterns

Alibaba’s product messaging and tutorials orient Qwen3-Max toward enterprise tasks such as customer support automation, internal knowledge search, and domain-specific content generation. Typical implementations you’ll see in the field include:

- Retrieval-augmented generation for knowledge workers, where a vector index supplies relevant documents that the model uses as context to answer questions.

- Semantic search for large knowledge bases, improving discovery inside large organizations.

- Augmented customer support agents that combine RAG with intent classification to improve accuracy.

For instance, a regional bank using Alibaba Cloud could implement a secure internal knowledge base indexed for RAG to reduce support response times while keeping customer data inside the same cloud region.
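The retrieve-then-generate pattern behind a setup like this can be sketched in a few lines of Python. The example below is a toy: it swaps a real vector index for bag-of-words cosine similarity so it runs with the standard library alone, and it stops at building the prompt rather than calling any model. The documents, `retrieve`, and `build_prompt` are all hypothetical.

```python
import math
import re
from collections import Counter
from typing import List


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return up to k documents with non-zero similarity, best first."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(d))), d) for d in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]


def build_prompt(query: str, context: List[str]) -> str:
    # The retrieved passages become the context the model is asked to rely on.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only the context below.\n\nContext:\n{joined}\n\nQuestion: {query}"


docs = [
    "Wire transfers over the daily limit require branch approval.",
    "A lost card can be frozen from the mobile app.",
    "Savings accounts accrue interest monthly.",
]
question = "How do I freeze a lost card?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

In a real deployment the similarity step would use embeddings and a managed vector store, but the control flow (score, select, assemble context, then generate) stays the same.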

Developer tooling, tutorials, and community resources

Alibaba Cloud’s Model Studio docs include step-by-step guides, lowering the barrier to run pilot projects. There is active discourse in podcasts and technical blogs analyzing integration patterns and best practices, which helps accelerate knowledge sharing. Early adopters often publish patterns for prompt design, chunking documents for retrieval, and monitoring output quality.
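Document chunking is one of those patterns that is easy to show. The Python sketch below splits text into fixed-size word windows with overlap, so a sentence that straddles a boundary still appears intact in at least one chunk. The window sizes are arbitrary defaults chosen for illustration, not recommendations from Alibaba's documentation, and `chunk_words` is a hypothetical helper.

```python
from typing import List


def chunk_words(text: str, size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into word windows of `size`, each sharing `overlap` words with the previous one."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, size=4, overlap=1):
    print(chunk)
```

Teams often tune the window and overlap against retrieval quality on their own documents rather than picking values up front.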

Adoption friction points include data governance needs, latency constraints for real-time applications, and the engineering lift to instrument safety checks and content filters. Migrating from smaller models or third-party APIs requires integration testing and verification that expected gains in quality justify cost increases.

Insight: developer productivity gains come from integrated tooling, but cost and compliance trade-offs still drive cautious, staged rollouts.

Community and ecosystem signals

Podcasts and technical roundups are exploring Qwen3-Max’s implications and sharing integration patterns, signaling active interest within the developer community. That community activity is valuable for spreading practical lessons—sample prompt templates, RAG architectures, and monitoring recipes—that accelerate mature deployments.

Policy, governance, and geopolitical implications

Regulatory spotlight and cross-border questions

The debut of Qwen3-Max renews global discussion about governance for large AI models—topics ranging from export controls to data residency and model oversight. The launch increases pressure on governments and industry groups to clarify rules for cross-border model access and responsible deployment.

Coverage of Alibaba’s rollout highlights the geopolitical dynamics shaping the AI race, including how market competition intersects with national policy on data and technology exports.

Industry response and enterprise implications

Industry observers recommend greater transparency on benchmark methodology, shared safety practices, and clearer deployment guardrails as flagship models proliferate. For enterprises, the practical implications are immediate: adopters of Qwen3-Max should include compliance checks for data residency, contractual terms for model behavior and liability, and governance around high-risk applications. Where cross-border data flows are restricted, the localized availability of Alibaba Cloud can be an advantage, but it also demands rigorous contractual and technical safeguards.

Managing uncertainty and risk

Enterprises should treat model selection as a multi-dimensional decision that covers technical fit, cost, governance, and long-term vendor strategy. Where regulatory ambiguity exists, choosing a deployment model that allows for defensible data governance—private model hosting, on-prem or region-locked cloud tenancy—reduces policy risk.

FAQ: common questions about Alibaba Qwen3-Max vs OpenAI and Google

Quick answers to common questions

Q: What is Alibaba Qwen3-Max? A: Alibaba Qwen3-Max is the company’s largest LLM to date, aimed at enterprise and cloud deployments; it supports multimodal workloads and is packaged with Model Studio tools.

Q: When was Qwen3-Max released and who can use it? A: Alibaba has announced the model as released, with staged availability focused on Alibaba Cloud and Model Studio customers. Reporting indicates an enterprise-first rollout, so enterprises should contact Alibaba Cloud for access and onboarding details.

Q: How does Qwen3-Max compare in raw performance to OpenAI and Google models? A: Alibaba claims competitive benchmark performance, but independent head-to-head public benchmarks were not included in initial reporting and remain to be validated by third parties.

Q: What infrastructure or costs should developers expect? A: Expect cloud-hosted deployment via Alibaba Cloud and Model Studio, with typical large-model compute and latency/cost trade-offs; pricing will likely be usage-based and negotiated for enterprise contracts.

Q: Are there tutorials or integration guides? A: Yes—Alibaba Cloud’s Model Studio documentation includes guides like web-search integration to help developers implement RAG and semantic search.

Q: Is Qwen3-Max suitable for highly regulated data (finance, health)? A: Suitability depends on deployment model, contractual assurances, and regional compliance. Enterprises should evaluate data residency options, encryption, and auditability before production use.

Q: Will Qwen3-Max be available globally? A: Public reporting points to a phased rollout tied to Alibaba Cloud regions; global availability may be influenced by regulatory and commercial factors.

Q: How quickly can developers move from prototype to production? A: That timeline depends on dataset size, latency requirements, and compliance checks. A realistic pilot-to-prod path is weeks to months, not days, when enterprise-grade SLAs and governance are required.

Where Qwen3-Max leaves the AI ecosystem and what comes next

Alibaba’s Qwen3-Max signals a shift that’s as strategic as it is technical: the company is moving from research and model-building to offering fully integrated flagship models tied to cloud infrastructure. That approach narrows the decision space for many enterprises—particularly in Asia—by packaging large-model capabilities with data connectors, deployment tooling, and region-specific support. In the coming years, expect a few clear trends to shape how organizations respond.

First, the competition will accelerate the commoditization of core model capabilities while differentiating on ecosystem and compliance. OpenAI and Google have built broad third-party ecosystems and public audit trails; Alibaba responds with tighter cloud integration and enterprise workflows. Customers will increasingly choose on the basis of where their data sits, which SLAs they need, and which vendor gives the cleanest path to production.

Second, independent benchmarking and transparency will grow in importance. Vendor claims will be tested by third-party labs and community benchmarks; buyers should insist on reproducible tests, stress cases for multilingual and safety-sensitive scenarios, and observable metrics for hallucinations and bias. The industry-level conversation will likely tilt toward agreed-upon benchmark suites and safety reporting over the next 12–24 months.
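A reproducible test can be very small. The Python sketch below scores any model callable against a fixed set of cases and reports accuracy per language, which is one simple way to surface multilingual gaps. The cases, the canned `stub_model`, and the substring check are all illustrative assumptions; substring matching is a crude proxy and not a real hallucination metric.

```python
from collections import defaultdict
from typing import Callable, Dict, List, NamedTuple


class Case(NamedTuple):
    language: str
    prompt: str
    expected: str


def accuracy_by_language(model: Callable[[str], str], cases: List[Case]) -> Dict[str, float]:
    """Fraction of cases per language whose answer contains the expected string."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for case in cases:
        totals[case.language] += 1
        if case.expected.lower() in model(case.prompt).lower():
            correct[case.language] += 1
    return {lang: correct[lang] / totals[lang] for lang in totals}


def stub_model(prompt: str) -> str:
    # Hypothetical canned answers standing in for a real model under test.
    canned = {
        "Capital of France?": "Paris is the capital.",
        "¿Capital de España?": "No estoy seguro.",
    }
    return canned.get(prompt, "")


cases = [
    Case("en", "Capital of France?", "Paris"),
    Case("es", "¿Capital de España?", "Madrid"),
]
scores = accuracy_by_language(stub_model, cases)
print(scores)
```

Because the cases are fixed data, the same file can be run against each vendor's endpoint and the results compared side by side.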

Third, governance and policy will shape availability and adoption. As regulators evolve rules around cross-border model access, AI export controls, and data residency, vendors that provide flexible deployment (region-locked hosting, private models, contractual data protections) will have a commercial advantage for regulated customers.

For technology leaders and developers, the practical opportunity is to run focused pilots that measure quality versus cost and assess governance readiness. If your organization already uses Alibaba Cloud, Qwen3-Max may shorten the path to higher-quality search, RAG, and automation. If you operate globally or under strict regulatory constraints, build evaluations that include legal, security, and compliance stakeholders from day one.

There are uncertainties: vendor-reported benchmarks need independent validation, and long-term costs and safety behaviors will only become clear with real-world usage. Yet the broader outcome is clear—flagship models are becoming features of cloud platforms, and that integration will change procurement, architecture, and governance discussions. For readers willing to experiment carefully and measure outcomes, this competition promises better tools; for regulators and industry groups, it underscores the need for shared transparency and safety standards as these models scale.

In the near term, watch for independent evaluations, clearer pricing signals from Alibaba Cloud, and product updates to Model Studio that make onboarding smoother. The AI race is no longer just about who trains the biggest model; it’s about who can deliver trustworthy, supported, and cost-effective capabilities to production customers around the world.