Amazon Launches Lens Live: Point Your Camera and AI Generates Instant Product Matches via Rufus

- Olivia Johnson

- Sep 4, 2025

- 13 min read

Introduction to Amazon Lens Live and Rufus, and why it matters

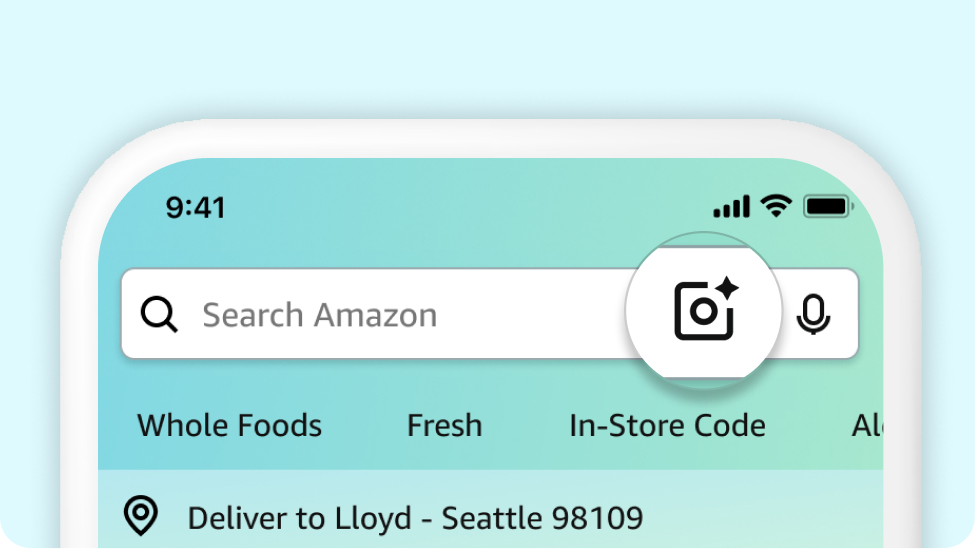

Amazon Lens Live is a new real‑time AI visual search capability that lets shoppers point their camera and get instant product matches, integrated with Amazon’s conversational AI assistant Rufus. In plain terms: aim your phone at a shoe, lamp, or snack on a store shelf, and within moments Lens Live returns likely product matches from Amazon’s catalog while Rufus offers conversational follow‑ups such as comparisons, clarifications, or purchase actions. This is visual search reimagined as a live, camera‑first shopping loop.

Why this matters: shopping discovery is increasingly multimodal. Text search still dominates for deliberate queries, but real‑world discovery (seeing something in a window display, a friend’s jacket, or an in‑store shelf) often starts with an image, not words. Amazon Lens Live and Rufus combine on‑device camera capture, large‑scale vision‑language matching, and a conversational layer so that the shopper’s next step is low friction: inspect matches, ask Rufus questions like “Find similar in blue,” and proceed to buy or save. Tech coverage framed the launch as a shift toward blending offline and online commerce, enabling faster discovery and richer interactions between sight, language, and transaction (TechCrunch recap of the launch and use cases).

Key takeaway: Amazon Lens Live plus Rufus signals that visual search is moving from isolated image queries to integrated, conversational commerce where immediacy and context matter as much as raw retrieval accuracy.

How Amazon Lens Live visual search works, and the vision‑language models behind it

What vision‑language representation learning enables for Lens Live

At the core of Amazon Lens Live are large vision‑language models (VLMs) that create shared representations — or embeddings — for images and text. In practice, the system maps a photo (camera frame) and product metadata (titles, descriptions, attributes) into a common vector space so that semantically similar items are close together. This enables fast image‑to‑product retrieval: a single camera frame can be converted into an embedding and used to retrieve nearby catalog vectors ranked by similarity.
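To make the retrieval step concrete, here is a minimal Python sketch, assuming embeddings have already been produced by an encoder. It uses exhaustive cosine similarity rather than the approximate nearest-neighbor index a catalog-scale system would need, and the SKU names and three-dimensional vectors are hypothetical.

```python
import math

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: dot product divided by the product of the vector norms."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms if norms else 0.0

def retrieve(query: list[float], catalog: dict[str, list[float]], k: int = 3) -> list[tuple[str, float]]:
    """Exhaustive nearest-neighbor search: score every catalog vector and keep the k most similar."""
    scored = [(sku, cosine(query, vec)) for sku, vec in catalog.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]

# Toy 3-dimensional embeddings (hypothetical); real encoders emit hundreds of dimensions.
catalog = {
    "boot-brown": [0.9, 0.1, 0.0],
    "boot-black": [0.8, 0.2, 0.1],
    "lamp-brass": [0.0, 0.1, 0.9],
}
frame_embedding = [0.85, 0.15, 0.05]  # stand-in for the encoder's output on one camera frame
top_matches = retrieve(frame_embedding, catalog, k=2)
```

The two boots come back as the closest matches and the lamp is excluded, because its vector points in a different direction in the shared space.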

Large‑scale vision‑language research provides the foundations for this approach, showing how joint image‑text embeddings scale retrieval and downstream tasks. For e‑commerce, adaptations like e‑CLIP — variants of CLIP optimized for commercial content — are particularly relevant. e‑CLIP style approaches train on paired product images and text at scale, improving robustness to product variants (color, angle, accessory differences) and enabling multi‑label matching when an object belongs to multiple categories (e.g., a smartwatch that is both fitness and fashion). The result is better recall of relevant SKUs even when the shopper’s photo differs from catalog imagery.

Definitions: embeddings are fixed‑length numeric vectors that encode semantic content; re‑ranking is a second stage that refines an initial candidate list with richer signals (e.g., metadata, pixel alignment, user intent).

Example scenario: a user snaps a photo of a patterned dress in a boutique. The image encoder produces an embedding; retrieval returns several close SKUs (exact match, same pattern different brand, similar silhouette). A re‑ranker uses textual attributes and price range cues to surface the most likely purchases.
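The retrieve-then-re-rank scenario above can be sketched as follows. The `Candidate` fields, the tag weight, and the price penalty are illustrative assumptions, not Amazon's scoring; the point is that a cheap second stage can reorder first-stage results using metadata the image alone does not carry.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    sku: str
    visual_score: float   # similarity from first-stage embedding retrieval
    attributes: set[str]  # structured tags from catalog metadata
    price: float

def rerank(candidates: list[Candidate], query_tags: set[str], price_range: tuple[float, float],
           tag_weight: float = 0.1, price_penalty: float = 0.2) -> list[Candidate]:
    """Second stage: adjust retrieval scores with attribute overlap and a price-range cue."""
    low, high = price_range

    def score(c: Candidate) -> float:
        s = c.visual_score + tag_weight * len(c.attributes & query_tags)
        if not low <= c.price <= high:
            s -= price_penalty  # outside the shopper's likely budget
        return s

    return sorted(candidates, key=score, reverse=True)

# Hypothetical candidates for the patterned-dress photo.
candidates = [
    Candidate("dress-A", 0.90, {"floral", "midi"}, 120.0),  # exact match
    Candidate("dress-B", 0.92, {"floral"}, 300.0),          # same pattern, different brand
    Candidate("dress-C", 0.85, {"midi"}, 60.0),             # similar silhouette
]
ranked = rerank(candidates, query_tags={"floral", "midi"}, price_range=(40.0, 150.0))
```

Here the exact match overtakes `dress-B`, which was visually closest but falls outside the assumed price range.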

Key takeaway: Lens Live’s vision‑language capabilities allow camera images to become first‑class queries, bridging the gap between sight and Amazon’s product graph.

Real‑time inference, mobile camera input, and latency considerations

The mobile experience demands tight latency budgets: shoppers expect near‑instant feedback when they point a camera. The typical Lens Live pipeline looks like this: camera capture → image pre‑processing (crop, normalize) → image encoder produces an embedding → nearest neighbor retrieval against a product index → re‑ranking with multimodal signals → response delivered to Rufus for conversational presentation. Each stage is optimized for low latency.
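A minimal sketch of that staged pipeline, timing each stage so a budget overrun can be traced to a specific step. The stage bodies are hypothetical placeholders for the real pre-processing, encoder, index, re-ranking, and presentation services.

```python
import time
from typing import Any, Callable

Stage = tuple[str, Callable[[Any], Any]]

def run_pipeline(frame: Any, stages: list[Stage]) -> tuple[Any, dict[str, float]]:
    """Run stages in order, feeding each output to the next, and record per-stage latency in ms."""
    timings: dict[str, float] = {}
    value = frame
    for name, stage in stages:
        start = time.perf_counter()
        value = stage(value)
        timings[name] = (time.perf_counter() - start) * 1000.0
    return value, timings

# Hypothetical placeholders for the real services.
stages: list[Stage] = [
    ("preprocess", lambda pixels: [p / 255.0 for p in pixels]),  # normalize pixel values
    ("encode", lambda px: [sum(px) / len(px)]),                  # toy 1-d "embedding"
    ("retrieve", lambda emb: ["sku-1", "sku-2", "sku-3"]),       # ANN lookup stand-in
    ("rerank", lambda cands: sorted(cands, reverse=True)),       # re-ranking stand-in
    ("present", lambda ranked: {"matches": ranked[:2]}),         # payload handed to Rufus
]
result, timings = run_pipeline([0, 128, 255], stages)
```

Keeping each stage behind the same callable signature makes it easy to swap an on-device stage for a cloud call without changing the timing code.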

Research on real‑time VLM inference and quantization shapes how such pipelines are built, while product‑focused models like e‑CLIP show how to tune retrieval for catalog noise and visual variance (e‑CLIP and vision‑language for e‑commerce). Engineering tradeoffs are central:

On‑device inference reduces round‑trip time and can preserve privacy for transient frames, but device compute and battery constraints limit model size.

Cloud inference enables larger models and richer contextual fusion (session history, catalog signals) at the cost of network latency.

Hybrid options run a compact encoder on device to produce a compressed embedding and then send that representation to the cloud for fast retrieval. Quantization (reducing numeric precision) and pruning (removing model weights) are common to shrink model size while maintaining acceptable accuracy.
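As an illustration of quantization, the sketch below applies symmetric int8 quantization to an embedding vector, assuming one scale per vector. The same idea is usually applied to model weights to shrink the encoder; applied to embeddings, it also shrinks the payload a hybrid design would send to the cloud, from 4 bytes to 1 byte per value. The vector is hypothetical.

```python
from array import array

def quantize_int8(vec: list[float]) -> tuple[list[int], float]:
    """Symmetric linear quantization: one float scale per vector, values mapped into [-127, 127]."""
    max_abs = max(abs(x) for x in vec) or 1.0  # avoid dividing by zero for an all-zero vector
    scale = max_abs / 127.0
    return [round(x / scale) for x in vec], scale

def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original floats."""
    return [v * scale for v in q]

embedding = [0.5, -0.25, 0.125, 0.0]
q, scale = quantize_int8(embedding)
packed = array("b", q)            # signed 8-bit storage: 1 byte per value
unpacked = array("f", embedding)  # 32-bit float storage: 4 bytes per value
max_error = max(abs(a - b) for a, b in zip(embedding, dequantize(q, scale)))
```

Rounding bounds the per-value reconstruction error by half a quantization step (`scale / 2`), which is the accuracy cost traded for the smaller footprint.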

Latency targets vary by context: a live camera feed might aim for sub‑500ms response to feel fluid, while more complex re‑ranking that fetches pricing, inventory, and personalization can run asynchronously with a quick initial match. Index engineering — approximate nearest neighbor (ANN) search with sharded indices and prioritized caching for high‑velocity SKUs — is crucial for scale.
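Sharded retrieval follows a scatter-gather pattern: each shard returns its local top-k and a merge step keeps the global top-k. In this sketch each shard does exact search as a stand-in for a real ANN structure, caching is omitted, and the shard contents are hypothetical.

```python
import heapq

def dot(a: list[float], b: list[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def search_shard(shard: dict[str, list[float]], query: list[float], k: int) -> list[tuple[float, str]]:
    """Top-k within one shard. Exact search here; a production shard would use an ANN structure."""
    return heapq.nlargest(k, ((dot(query, vec), sku) for sku, vec in shard.items()))

def search_sharded(shards: list[dict[str, list[float]]], query: list[float], k: int) -> list[str]:
    """Scatter the query to every shard, then merge the per-shard top-k lists into a global top-k."""
    partial_hits = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return [sku for _, sku in heapq.nlargest(k, partial_hits)]

shards = [
    {"boot-1": [1.0, 0.0], "bag-1": [0.5, 0.5]},
    {"boot-2": [0.9, 0.1], "lamp-1": [0.0, 1.0]},
]
```

Because every shard returns its own top-k, the merged list equals the global top-k whenever per-shard search is exact; with ANN shards it inherits their approximation.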

Insight: real‑time constraints push design toward modular pipelines where a quick visual match is presented immediately, with richer personalization results streamed in seconds later.

Actionable point: For product teams, instrumenting end‑to‑end latency metrics (capture → first match → full contextualized result) is essential to maintain conversion‑friendly responsiveness.
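One way to instrument the milestones named above is to log timestamps per query and report percentiles. The event schema and the 500 ms default budget (taken from the sub-500ms target mentioned earlier) are assumptions for illustration, and the sample events are hypothetical.

```python
import statistics

def latency_report(events: list[dict[str, float]], budget_ms: float = 500.0) -> dict[str, float]:
    """Summarize capture -> first match and capture -> full result latencies (timestamps in ms)."""
    first = [e["first_match"] - e["capture"] for e in events]
    full = [e["full_result"] - e["capture"] for e in events]

    def p95(values: list[float]) -> float:
        # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile
        return statistics.quantiles(values, n=100, method="inclusive")[94]

    return {
        "first_match_p50": statistics.median(first),
        "first_match_p95": p95(first),
        "full_result_p50": statistics.median(full),
        "full_result_p95": p95(full),
        "first_match_within_budget": sum(v <= budget_ms for v in first) / len(first),
    }

events = [
    {"capture": 0.0, "first_match": 200.0, "full_result": 800.0},
    {"capture": 0.0, "first_match": 250.0, "full_result": 900.0},
    {"capture": 0.0, "first_match": 300.0, "full_result": 1000.0},
    {"capture": 0.0, "first_match": 350.0, "full_result": 1100.0},
    {"capture": 0.0, "first_match": 900.0, "full_result": 2000.0},
]
report = latency_report(events)
```

Tracking p95 alongside the median matters here: one slow frame barely moves the median but dominates the tail a shopper actually notices.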

Multimodal product matching and personalization in Amazon Lens Live

Multimodality for improved product matching accuracy

Multimodal product matching means combining visual features with text, contextual cues, and structured metadata to improve precision. A raw image match is often ambiguous — lighting, occlusion, or angle can reduce confidence. By fusing captions (automatically generated image captions or user text queries), historical purchase preferences, device context (location, store layout data), and product metadata (brand, color tags), Lens Live reduces false positives and surfaces more relevant items.

Multimodal fusion layers can operate at different stages:

Early fusion: concatenate or cross‑attend image and text embeddings before retrieval for context‑aware indexing.

Late fusion: retrieve candidates with image embeddings, then score and re‑rank candidates with text and metadata signals.

Cross‑modal re‑ranking: apply a lightweight cross‑attention model to refine top candidates using full image pixels and candidate descriptions.
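A late-fusion sketch: candidates and their visual scores come from image retrieval, and a text score from a generated caption re-weights them. Word-set (Jaccard) overlap stands in for the learned text matcher a real system would use; the weights, caption, and titles are hypothetical.

```python
def token_overlap(caption: str, title: str) -> float:
    """Jaccard overlap between word sets; a crude stand-in for a learned text-matching score."""
    a, b = set(caption.lower().split()), set(title.lower().split())
    return len(a & b) / len(a | b) if a | b else 0.0

def late_fusion(visual_scores: dict[str, float], titles: dict[str, str], caption: str,
                w_visual: float = 0.7) -> list[str]:
    """Candidates come from image retrieval; the final score blends visual and text evidence."""
    fused = {
        sku: w_visual * v + (1.0 - w_visual) * token_overlap(caption, titles[sku])
        for sku, v in visual_scores.items()
    }
    return sorted(fused, key=fused.get, reverse=True)

visual_scores = {"bag-1": 0.82, "boot-2": 0.81, "boot-1": 0.80}
titles = {
    "boot-1": "brown leather boot with buckle",
    "bag-1": "brown leather tote bag",
    "boot-2": "black rubber rain boot",
}
ranking = late_fusion(visual_scores, titles, caption="long brown leather boot with buckle")
```

Visual similarity alone would rank `bag-1` first; adding the caption evidence moves the buckled boot to the top.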

Research into bringing multimodality to visual search outlines methods to integrate image, language, and structured attributes for robust product matching. Practically, Lens Live may generate an image caption (e.g., “long brown leather boot with buckle”) and combine that with nearby scene cues (is the user in a sporting goods store?) to narrow results.

Example scenario: In a grocery aisle, a can with a partially obscured label is snapped. Visual features suggest canned tomato soup; OCR captures legible text, and the user’s history (frequent brand X purchases) nudges the ranking toward the exact SKU the user usually buys.
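The grocery example can be sketched as an additive re-ranker: legible OCR words boost candidates whose titles contain them, and a capped purchase-history term nudges toward the shopper's usual SKU. All weights, SKUs, and titles here are hypothetical.

```python
def contextual_rerank(visual_scores: dict[str, float], titles: dict[str, str], ocr_tokens: set[str],
                      purchase_counts: dict[str, int], ocr_boost: float = 0.05,
                      history_boost: float = 0.02, history_cap: int = 5) -> list[str]:
    """Boost candidates whose titles contain legible OCR words, then nudge toward frequent purchases."""
    def score(sku: str) -> float:
        ocr_hits = len(ocr_tokens & set(titles[sku].lower().split()))
        history = min(purchase_counts.get(sku, 0), history_cap)  # cap so history nudges, not dominates
        return visual_scores[sku] + ocr_boost * ocr_hits + history_boost * history
    return sorted(visual_scores, key=score, reverse=True)

visual_scores = {"soup-brandx": 0.70, "soup-brandy": 0.72, "sauce-brandx": 0.71}
titles = {
    "soup-brandx": "BrandX Tomato Soup",
    "soup-brandy": "BrandY Tomato Soup",
    "sauce-brandx": "BrandX Tomato Sauce",
}
ocr_tokens = {"tomato", "soup"}  # brand name obscured on the label
with_history = contextual_rerank(visual_scores, titles, ocr_tokens, {"soup-brandx": 4})
without_history = contextual_rerank(visual_scores, titles, ocr_tokens, {})
```

Without the history term, `soup-brandy` wins on visual score; the history nudge flips the top result to the brand the shopper usually buys.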

Key takeaway: multimodal fusion reduces mistaken matches and provides the contextual signals that are fundamental to shopping relevance.

Personalization, adaptive discovery and item diversification

Personalization tailors Lens Live suggestions to the individual. Signals include purchase history, saved lists, returns history, price sensitivity inferred from past purchases, and session context (browser vs in‑store). When a user points a camera, Rufus can surface results that match both the image and the user’s preferences: favored brands first, price bands prioritized, or eco‑friendly alternatives shown if that has been a stated preference.

Diversification strategies avoid returning near‑identical SKUs and instead surface complementary or alternative items to increase discovery value. Adaptive diversification is dynamic — if a user repeatedly rejects exact matches, the system broadens the candidate set to include stylistically similar or lower‑cost alternatives.
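Maximal Marginal Relevance (MMR) is a standard diversification technique and is used here as an illustrative choice, not a confirmed part of Lens Live. The `adaptive_lambda` rule, which lowers the relevance weight each time a shopper rejects an exact match, is a hypothetical way to implement the adaptive behavior described above; the similarity values are also made up.

```python
from typing import Callable

def mmr(candidates: list[str], relevance: dict[str, float],
        similarity: Callable[[str, str], float], k: int, lam: float) -> list[str]:
    """Maximal Marginal Relevance: greedily pick items that are relevant but unlike those already picked."""
    selected: list[str] = []
    pool = list(candidates)
    while pool and len(selected) < k:
        best = max(
            pool,
            key=lambda c: lam * relevance[c]
            - (1.0 - lam) * max((similarity(c, s) for s in selected), default=0.0),
        )
        selected.append(best)
        pool.remove(best)
    return selected

def adaptive_lambda(rejections: int, base: float = 0.9, step: float = 0.15, floor: float = 0.3) -> float:
    """Hypothetical rule: each rejected exact match shifts weight from relevance toward diversity."""
    return max(floor, base - step * rejections)

PAIR_SIM = {frozenset({"dress-A", "dress-A2"}): 0.99}  # near-duplicate listings

def similarity(a: str, b: str) -> float:
    return PAIR_SIM.get(frozenset({a, b}), 0.3)  # assume other pairs are mildly similar

relevance = {"dress-A": 0.95, "dress-A2": 0.94, "dress-B": 0.80}
```

With no rejections the near-duplicate `dress-A2` is shown second; after three rejections the more distinct `dress-B` takes its place.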

Re‑ranking strategies often balance three signals: visual similarity, personalization score, and business constraints (inventory, sponsored placements). Multi‑objective optimization can be used to tune those tradeoffs, with user experience metrics (click‑through, add‑to‑cart, purchase) as feedback.
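A simple form of the multi-objective balancing described above is weighted-sum scalarization with inventory as a hard constraint. The weights and items below are hypothetical; in practice the weights would be tuned against click-through, add-to-cart, and purchase metrics.

```python
from dataclasses import dataclass

@dataclass
class Item:
    sku: str
    visual: float     # visual similarity score
    personal: float   # personalization score
    in_stock: bool    # business constraint: hard filter
    sponsored: bool   # business constraint: soft boost

def blend(items: list[Item], w_visual: float = 0.6, w_personal: float = 0.3,
          w_business: float = 0.1) -> list[str]:
    """Weighted-sum scalarization of three objectives; out-of-stock items are removed first."""
    def score(it: Item) -> float:
        return w_visual * it.visual + w_personal * it.personal + w_business * (1.0 if it.sponsored else 0.0)
    eligible = [it for it in items if it.in_stock]
    return [it.sku for it in sorted(eligible, key=score, reverse=True)]

items = [
    Item("a", 0.90, 0.2, True, False),
    Item("b", 0.80, 0.7, True, False),
    Item("c", 0.95, 0.9, False, False),  # best scores, but unavailable
    Item("d", 0.70, 0.5, True, True),
]
```

Treating inventory as a filter rather than a weight guarantees an unavailable item is never shown, however strong its other scores.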

Insight: personalization must be balanced with serendipity — too much narrowing creates echo chambers; intelligent diversification keeps discovery productive.

Actionable point: Brands should ensure structured attributes (material, fit, colorways) are accurate and comprehensive; those attributes are often used by re‑rankers to match user preferences and diversify results.

Architecture and Rufus integration: creating a seamless AI shopping assistant

How Lens Live connects to Rufus for conversational shopping

Lens Live and Rufus form a two‑part interaction: visual retrieval and conversational orchestration. A typical user flow:

1. The shopper opens the Amazon app or Rufus interface and grants camera permission.

2. The shopper points the camera; Lens Live generates an embedding and returns initial product matches.

3. Rufus presents the matches conversationally: “I found these similar items. Want to compare prices or see other colors?”

4. The user asks follow‑ups (e.g., “Show me only Prime eligible,” “Find similar but under $50”) and Rufus issues refined queries to the retrieval and re‑ranking services.

5. Rufus can guide the shopper to purchase, add items to a wishlist, or suggest complementary products.
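To illustrate step 4, here is a keyword-based sketch that turns two of the follow-ups above into filters on the current results. A production assistant would interpret intent with a language model; the regular expressions and the product fields (`price`, `prime`) are assumptions made for this example.

```python
import re

def apply_follow_up(text: str, products: list[dict]) -> list[dict]:
    """Turn follow-ups like 'under $50' or 'only Prime eligible' into filters on current results."""
    result = products
    price_match = re.search(r"under \$(\d+(?:\.\d+)?)", text, flags=re.IGNORECASE)
    if price_match:
        limit = float(price_match.group(1))
        result = [p for p in result if p["price"] < limit]
    if re.search(r"\bprime\b", text, flags=re.IGNORECASE):
        result = [p for p in result if p["prime"]]
    return result

# Hypothetical current matches.
products = [
    {"sku": "a", "price": 45.0, "prime": True},
    {"sku": "b", "price": 60.0, "prime": True},
    {"sku": "c", "price": 30.0, "prime": False},
]
```

Because each follow-up narrows the existing result set, constraints accumulate across turns the way a shopper expects.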

Amazon’s announcement describes Lens Live as integrated with Rufus to enable follow‑up questions and guided shopping actions. This is more than a static results page — Rufus carries context, interprets intent, and orchestrates next steps.

Example dialogues:

“What is this?” → Rufus: “These look like ‘brand X running shoes.’ Want similar options in size 9?”

“Compare prices” → Rufus fetches sellers, shipping, Prime eligibility, and shows a summarized comparison.

Key takeaway: integrating Lens Live with Rufus turns visual results into an interactive decision flow, reducing friction between discovery and purchase.

Backend architecture, developer and brand touchpoints

The backend is modular and scalable. Core components include:

Image encoder service: produces embeddings from camera frames or merchant images.

Retrieval index: an ANN index of product embeddings optimized for low latency.

Product catalog sync: pipelines to keep embeddings, textual attributes, pricing, and inventory in sync.

Personalization service: scores candidates with user signals and business rules.

Re‑ranking layer: multimodal cross‑encoders that refine top candidates.

Rufus conversational layer: dialog manager that formats results, asks clarifying questions, and triggers transactional flows.
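The catalog-sync component can be sketched as an incremental job: cheap fields such as price are refreshed on every run, while embeddings are recomputed only when the fields that feed the encoder change. `EMBED_FIELDS`, the record layout, and the toy encoder are hypothetical.

```python
import hashlib
from typing import Callable

EMBED_FIELDS = ("title", "image_url")  # assumption: only these fields feed the encoder

class CatalogSync:
    """Hypothetical sync job keeping an embedding index and attribute store in step with the catalog."""

    def __init__(self, encode: Callable[[dict], list[float]]):
        self.encode = encode                    # stand-in for the encoder service
        self.index: dict[str, list[float]] = {}
        self.attributes: dict[str, dict] = {}
        self._fingerprints: dict[str, str] = {}

    def sync(self, catalog: dict[str, dict]) -> list[str]:
        """Apply one catalog snapshot; return the SKUs whose embeddings were recomputed."""
        reembedded = []
        for sku, record in catalog.items():
            self.attributes[sku] = record  # price, stock, etc. always refreshed
            content = "|".join(str(record.get(f, "")) for f in EMBED_FIELDS)
            fp = hashlib.sha256(content.encode("utf-8")).hexdigest()
            if self._fingerprints.get(sku) != fp:
                self.index[sku] = self.encode(record)
                self._fingerprints[sku] = fp
                reembedded.append(sku)
        for sku in set(self.index) - set(catalog):  # delisted products
            del self.index[sku], self._fingerprints[sku], self.attributes[sku]
        return reembedded
```

Skipping re-embedding for price-only changes keeps the expensive encoder off the hot path while pricing and inventory stay current.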

Brands can optimize for Lens Live discovery by focusing on the inputs the system uses: high‑quality image assets from multiple angles, accurate and granular structured metadata (colors, materials, use cases), and catalog tagging that supports variant matching. In addition, imagery that reflects real‑world contexts (products in use) can improve robustness for camera captures.

Industry commentary on how conversational AI like Rufus transforms e‑commerce outlines the kinds of integrations and brand opportunities available, and technical explanations of Rufus’s capabilities clarify the conversational layer’s role (IEEE Spectrum explainer on Rufus capabilities and design choices).

Actionable point: Sellers should prioritize multi‑angle images and clean structured data feeds; these materially improve Lens Live’s chance of surfacing a SKU and Rufus’s ability to answer follow‑ups.

Insight: Think of Lens Live as a funnel — better upstream assets (images + metadata) make downstream conversational commerce more accurate and profitable.

Privacy, data policy and regulatory compliance for Amazon Lens Live

User data handling, privacy controls and transparency

Image data raises understandable privacy questions. Amazon’s public statements indicate that Lens Live will have controls for image use, including local caching for transient frames and opt‑in choices for allowing images to be stored for model improvement. Users should expect transparent prompts about camera access and options to delete any images associated with their account. In practice, apps that implement camera‑based search typically provide temporary caching to improve responsiveness and explicit settings to opt out of data retention.

Amazon’s broader AI and product policy communications explain how image and model data have been handled in related features and emphasize user control and safety considerations. Additionally, Amazon’s public guidance about generative AI and product descriptions provides precedents for disclosure and governance practices that could apply to Lens Live (Amazon policies on generative AI product search and descriptions).

Users should expect transparency features in Rufus: brief explanations for why a match was suggested (“matching pattern, brand, and price range”), and the ability to ask Rufus how a recommendation was generated. These explanation affordances are increasingly important for trust.
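A rule-based sketch of such an explanation, built only from the signals that actually agreed. The attribute names and the "usual price range" input are assumptions; a real system would draw them from the re-ranker's features.

```python
def explain_match(query_attrs: dict[str, str], product_attrs: dict[str, str],
                  price: float, usual_range: tuple[float, float]) -> str:
    """List only the signals that agreed between the photo and the product, plus a price cue."""
    reasons = [
        f"matching {name}"
        for name in ("pattern", "brand", "color")
        if name in query_attrs and query_attrs[name] == product_attrs.get(name)
    ]
    low, high = usual_range
    if low <= price <= high:
        reasons.append("within your usual price range")
    if not reasons:
        return "Suggested for: visual similarity"
    return "Suggested for: " + ", ".join(reasons)
```

Generating the text from the same signals used for ranking keeps the explanation honest: a signal that did not match is never cited.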

Key takeaway: Expect opt‑in controls and deletion options; brands and product teams should design for both utility and clear, user‑facing transparency.

Compliance and industry regulatory considerations

Lens Live sits at the intersection of consumer protection, biometric privacy (in some jurisdictions), and AI regulation. Key compliance vectors:

Data minimization: store the least amount of visual data necessary and for the shortest period required.

Consent: explicit camera permission and transparent purpose‑limited disclosures.

Auditability: logs and model audit trails that show training data provenance and decision rationale, useful if regulators request explanations.

Cross‑border data flows: regional availability and model behaviors may differ depending on local laws.
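Data minimization and consent can be enforced mechanically with retention windows. In this sketch, frames without opt-in are kept only briefly and opted-in frames have a longer but still bounded window; both TTL values are hypothetical, not Amazon's policy.

```python
from dataclasses import dataclass

@dataclass
class StoredFrame:
    frame_id: str
    captured_at: float   # seconds since epoch
    user_opted_in: bool  # consent to retain for model improvement

def purge_expired(frames: list[StoredFrame], now: float, transient_ttl_s: float = 300.0,
                  opted_in_ttl_s: float = 90 * 24 * 3600.0) -> list[StoredFrame]:
    """Return only frames still inside their retention window; everything else should be deleted."""
    kept = []
    for f in frames:
        ttl = opted_in_ttl_s if f.user_opted_in else transient_ttl_s
        if now - f.captured_at < ttl:
            kept.append(f)
    return kept

frames = [
    StoredFrame("a", 0.0, False),    # transient frame, past its window
    StoredFrame("b", 0.0, True),     # opted in, still within the longer window
    StoredFrame("c", 900.0, False),  # transient frame, captured recently
]
kept = purge_expired(frames, now=1000.0)
```

Making consent a field on every stored frame means the purge job, not a manual process, decides what survives.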

Amazon’s internal governance frameworks for generative AI and product integrity offer useful precedents; companies deploying similar features should anticipate audit requests and regulatory scrutiny around automated product claims, counterfeit detection, and consumer safety. Compliance for Rufus itself, ensuring that generated recommendations and descriptions adhere to advertising and product‑representation regulations, is another relevant precedent.

Insight: Compliance is both legal and product design work; integrating privacy and auditability early reduces costly retrofits.

Actionable point: Product and legal teams should map data retention flows and ensure user‑facing settings are simple and discoverable; engineers should log provenance information to support audits.
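A sketch of a provenance log entry: it ties each result to the model and index versions that produced it and stores a SHA-256 digest of the query embedding instead of the image. The field names and version strings are hypothetical.

```python
import hashlib
import json
import time

def provenance_record(query_embedding: list[float], model_version: str, index_version: str,
                      returned_skus: list[str], reason: str) -> dict:
    """Audit-log entry linking a result to its model and index, without retaining the image itself."""
    digest = hashlib.sha256(json.dumps(query_embedding).encode("utf-8")).hexdigest()
    return {
        "ts": time.time(),
        "query_digest": digest,
        "model_version": model_version,
        "index_version": index_version,
        "returned_skus": list(returned_skus),
        "reason": reason,
    }

def to_log_line(record: dict) -> str:
    """Serialize as one JSON line with stable key order, suitable for append-only logs."""
    return json.dumps(record, sort_keys=True)
```

Identical queries produce identical digests, so auditors can correlate repeated requests without the log holding any visual data.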

Industry impact, trends and what Lens Live means for retailers and shoppers

How Lens Live accelerates the shift to visual, real‑world shopping discovery

Lens Live exemplifies a broader trend: search is becoming multimodal and rooted in the physical world. Users increasingly start discovery from images — a storefront item, a garment on the street, a piece of furniture in a cafe — and want immediate context and commerce options. Instant camera‑based matching shortens the discovery funnel and creates stronger links between offline browsing and online conversion.

Analysis of visual search evolution highlights how real‑world discovery is unlocking new shopper behaviors and conversion pathways. As a result, we can expect:

Increased impulse buys triggered by instant identification and easy checkout.

Richer comparison behavior through Rufus conversational flows — price, reviews, and alternatives accessible in seconds.

More in‑store experimentation as cameras replace memory or note‑taking.

Key takeaway: Retailers should treat visual discovery as an acquisition channel, not just a novelty; it changes when and how shoppers enter the purchase funnel.

Competitive landscape and opportunities for brands

Lens Live enters a space with other visual search players (search engines, retail apps, and specialized startups). Brands that optimize for Lens Live gain an advantage in visibility. Practical steps:

Optimize images: multiple angles, accurate product context, and high resolution for robust embedding matches.

Enrich metadata: precise attributes and synonyms to help text‑guided re‑ranking.

Expand SKU diversity: listing complementary items and multiple variants increases the chance of meaningful matches.

Test Rufus conversational commerce tactics: sample conversational scripts, guided bundles, and quick FAQ entries.

Retailers should track KPIs that reflect visual discovery success: visual discovery conversions (visits to product pages originating from camera matches), engagement with Rufus (follow‑up question rates, conversion after Rufus interactions), and return rates for visually discovered purchases. Industry coverage highlights how visual search tools are being adopted by major retailers and the metrics to watch.
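The KPIs above can be computed from per-session flags. The event schema and sample sessions are hypothetical; real pipelines would derive these flags from app analytics.

```python
def visual_discovery_kpis(sessions: list[dict[str, bool]]) -> dict[str, float]:
    """Compute camera-discovery KPIs from per-session flags (hypothetical event schema)."""
    camera = [s for s in sessions if s["from_camera"]]
    followed_up = [s for s in camera if s["asked_follow_up"]]
    purchased = [s for s in camera if s["purchased"]]

    def rate(numerator: int, denominator: int) -> float:
        return numerator / denominator if denominator else 0.0

    return {
        "product_page_visits": float(sum(s["viewed_product"] for s in camera)),
        "follow_up_rate": rate(len(followed_up), len(camera)),
        "conversion_after_follow_up": rate(sum(s["purchased"] for s in followed_up), len(followed_up)),
        "return_rate": rate(sum(s["returned"] for s in purchased), len(purchased)),
    }

sessions = [
    {"from_camera": True, "viewed_product": True, "asked_follow_up": True, "purchased": True, "returned": False},
    {"from_camera": True, "viewed_product": True, "asked_follow_up": False, "purchased": True, "returned": True},
    {"from_camera": True, "viewed_product": False, "asked_follow_up": True, "purchased": False, "returned": False},
    {"from_camera": False, "viewed_product": True, "asked_follow_up": False, "purchased": True, "returned": False},
]
kpis = visual_discovery_kpis(sessions)
```

Filtering to camera-originated sessions first keeps these rates attributable to visual discovery rather than to ordinary search traffic.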

Insight: Visual search shifts the attribution model — brands must connect camera discovery events to downstream revenue to justify investments.

Actionable point: Build tracking hooks for incoming camera queries, and run A/B tests for image and metadata enhancements to quantify uplift.
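To quantify uplift from an A/B test on images or metadata, a two-proportion z-test on conversion rates is a common starting point. The counts below are hypothetical, and the normal approximation assumes reasonably large samples.

```python
import math

def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float, float]:
    """Compare control (A) and variant (B) conversion rates; returns (uplift, z, two-sided p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return p_b - p_a, 0.0, 1.0  # degenerate case: all or no conversions in both arms
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|)) under the normal approximation
    return p_b - p_a, z, p_value

# Hypothetical test: control images vs. enriched multi-angle images.
uplift, z, p = two_proportion_z_test(200, 5000, 260, 5000)
```

In this hypothetical run, a 1.2-point lift on 5,000 sessions per arm clears the conventional 0.05 threshold; smaller lifts need proportionally more traffic.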

Frequently Asked Questions about Amazon Lens Live and Rufus

How do I use Amazon Lens Live with Rufus on my phone?

Open the Amazon app or the Rufus interface, grant camera permission, point your camera at the item, and wait briefly for matches to appear. Rufus will summarize the results, and you can ask follow‑ups like “Show only Prime options” or “Find similar under $75.” This is the expected flow for using Lens Live with Rufus.

Will my photos be stored by Amazon when I use Lens Live?

Amazon’s public materials indicate a mix of temporary caching for fast responses and explicit options to opt‑in for storage to help improve models. You should find camera permissions and image retention settings in the app’s privacy controls; look for explicit choices to delete stored images or to opt out of data‑use for model training.

How accurate is product matching for off‑brand or unique items?

Matching accuracy tends to be highest for branded and well‑photographed items. For off‑brand, handmade, or highly unique items, accuracy can drop, but multimodal signals (OCR, location, text prompts) and personalization can improve results. If an item is rare, Rufus may offer visually similar alternatives rather than an exact match.

Can brands opt in or optimize product data specifically for Lens Live discovery?

Yes. Brands should supply high‑quality, multi‑angle images, complete structured attributes, and consistent tagging to increase discoverability. Optimizations in images and metadata materially affect whether Lens Live surfaces the correct SKU.

Is Lens Live available globally and what languages does Rufus support?

Availability will roll out regionally; Amazon often staggers launches across markets. Rufus uses multilingual models and is expected to support multiple languages where launched, but capabilities and feature parity may vary by region.

How does Lens Live affect returns and fraud risk?

Better discovery can reduce returns by matching shopper intent more closely; however, camera‑based discovery also raises authenticity and counterfeit detection needs. Amazon will likely use verification models and policy enforcement layers to mitigate fraud, but brands should monitor returns and work with Amazon on detection strategies.

Can developers build on Lens Live or access APIs for integrations?

Amazon has historically offered partner programs and developer tools for shopping features; public APIs or SDKs for Lens Live may be introduced or evolve. Brands and developers should watch Amazon developer channels and partner programs for announcements about Lens Live API access.

What if Rufus gives an incorrect or misleading product match?

You can ask Rufus follow‑ups for clarification or request sources for its suggestion. Amazon’s transparency and user controls should allow reporting or deleting problematic matches; for brands, monitoring misclassifications can inform image and metadata corrections.

Conclusion: Trends, opportunities and a 90‑day checklist

Amazon Lens Live, paired with Rufus, pushes visual search from experimental to operational by making camera‑based queries conversational, contextual, and transaction‑ready. Over the next 12–24 months we expect several trends:

Near‑term trends (12–24 months)

1. Ubiquitous multimodal discovery: camera + voice/text will become a common entry point to shopping.

2. Tighter conversational loops: Rufus will evolve to handle more complex purchase flows and negotiation of constraints (size, price, shipping).

3. Measured ROI for visual discovery: retailers will develop standard KPIs linking camera events to revenue.

4. Increased regulatory attention: privacy, biometric concerns, and model auditability will be front and center.

5. Growth in counterfeit detection models: visual matching will be paired with authenticity signals to reduce fraud.

Opportunities and practical first steps

1. For shoppers, to get the best results: use well‑lit photos, try multiple angles, and ask explicit follow‑ups in Rufus (e.g., “only show these colors”); treat Rufus like a shopping assistant.

2. For brands, the optimizations to prioritize: audit and expand product images, improve structured metadata and tagging, and run pilot campaigns focused on camera discovery.

3. For product and AI teams, the technical and compliance priorities: instrument latency metrics, implement multimodal re‑ranking experiments, and build clear data retention and audit trails.

90‑day checklist for brands

Audit all product images for multi‑angle coverage and realistic use contexts.

Update product metadata with granular attributes and synonyms that Lens Live might use.

Run small tests with sponsored or organic placements for Lens Live matches and track visual discovery KPIs.

Review privacy and data flows with legal to ensure readiness for image‑based queries.

Monitor Rufus interactions and prepare quick FAQ scripts and entity descriptions for common conversational flows.

Uncertainties and trade‑offs

There are open questions about how broadly and quickly Lens Live will roll out across regions and how precisely Amazon will balance personalization with privacy. Performance tradeoffs between on‑device responsiveness and cloud‑based depth of context will remain an engineering challenge. Finally, brands must weigh investments in imagery and metadata against uncertain near‑term uplift; empirical testing remains the most reliable compass.

Final takeaway: Amazon Lens Live and Rufus make visual discovery immediate and conversational. For shoppers it lowers friction; for brands it creates a new surface to be found. For teams building these systems, the work is as much about data quality, latency engineering, and governance as it is about model accuracy. Monitor performance, prioritize image and metadata quality, and design transparent user controls — those steps will deliver the most durable value from Lens Live.