China Enacts Strictest AI Content Labeling Law, Requiring Visible and Metadata Tags on All AI-Generated Media

- Aisha Washington

- Sep 10, 2025

- 15 min read

China AI content labeling law explained and why it matters

China’s new AI content labeling law, effective September 1, 2025, requires that all AI-generated media be clearly marked with both visible labels and embedded metadata. The law’s core rule is simple in aim but broad in scope: any content created or substantially produced by artificial intelligence — whether images, video, audio, or text from large language models — must carry a human-facing UI indicator and machine-readable provenance data embedded in the file or delivery stream. This is the practical meaning of the phrase China AI content labeling law in everyday use.

Why does this matter for users, platforms, publishers and regulators? At its heart the regulation is about transparency and accountability: the government frames the measure as a way to protect public information ecosystems by making the origin of content explicit, discouraging deceptive deepfakes, and enabling regulators and platforms to audit provenance when disputes arise. For ordinary users, the law promises clearer signals about what they read, watch, and listen to; for platforms and publishers it creates a significant compliance burden tied to both UI design and backend recordkeeping.

The law’s scope is unusually broad. Social media, news aggregators, audio streaming, image and video hosting, and outputs from large language models are covered — in practice, most digital publishing that reaches a Chinese audience. The rule’s two-part technical requirement — visible and metadata tags — means platforms must render an on-screen label that a user can see, and also attach machine-readable tags or provenance records that travel with the content for crawlers, automated monitors, and auditors to analyze. For a compact overview of the regulation’s origins and how it fits into China’s regulatory arc, see a detailed review that maps state policy developments over recent years and explains the administrative logic behind new AI rules. A detailed chronology links China’s evolving AI rules to broader governance goals. For a practical tracker of provisions and timeline, legal firms maintaining global regulatory trackers provide ongoing summaries of enforcement expectations in China. A legal tracker compares China’s measures with other regimes and updates compliance timelines.

Key takeaway: The China AI content labeling law creates a dual visibility requirement — human-facing labels plus machine-readable metadata — that applies to a wide range of AI-generated media and places new duties on platforms and publishers.

Key provisions of the AI content labeling law, required labels and metadata

The law establishes several interlocking obligations. First, content that qualifies as AI-generated must carry mandatory visible labels in the user interface — clear, unambiguous wording such as “AI-generated,” positioned near the media so users can’t miss it. Second, platforms must embed machine-readable metadata tags alongside the content; these tags must include provenance information that enables automated systems and auditors to trace the chain of creation (for example, the model used, the generation timestamp, and whether human edits followed the generation). Third, platforms and producers must maintain provenance records and make user-facing disclosures when content is algorithmically generated or substantially assisted by AI. Taken together these provisions create both on-screen transparency and backend auditability.

Enforcement is operationalized through platform duties, recordkeeping, and penalties for noncompliance. Platforms are charged with implementing workflows to detect, label, and store metadata for AI-generated content; they must retain records for regulator inspection and submit periodic reports. The law sets out escalating penalties for breaches, ranging from fines to service restrictions for persistent violators. In short, the law combines proactive technical obligations with retrospective audit authority. A practical legal briefing compares these labelling rules with EU approaches and explains enforcement mechanics in more detail. A practical comparison highlights how labeling and recordkeeping are enforced by platform duties and administrative oversight.

Definitions and borderline cases are central. The statute defines “AI-generated” to mean content created primarily by an algorithmic model without substantive human authorship, but it also specifies treatment for mixed outputs and human edits. When an AI draft is heavily revised by a human editor the content may be considered human-authored and exempt from the AI label; when AI supplies a substantial proportion of the final output, the label is required. For models that produce partial material — for example, an AI-generated image that is then retouched — the law treats such content as mixed human-AI outputs, requiring labels that explain the hybrid nature of creation. Detailed legal analysis of how China distinguishes generative systems and LLM outputs provides useful context for interpreting the law’s “definition of AI-generated.” A legal overview of generative AI regulation explains how mixed outputs and LLMs are classified.

Visible UI labels, wording and placement guidance

Practical UI guidance in the law favors short, standard wording: labels like “AI-generated,” “Assisted by AI,” or “Edited with AI” are recommended depending on the contribution of the machine. Labels must be legible on mobile and desktop, placed close to the content (for images and video this often means a corner badge), and persist when content is reshared. User affordances are required: users should be able to tap or click a label to view a concise provenance card that explains what “AI-generated” means in context and links to more detailed metadata where available.

insight: Consistency matters — users learn to trust labels when they are uniform across apps and formats.

Best practice: Present a short label plus a two-line provenance summary on hover or tap, then expose full metadata to regulatory request mechanisms.

Metadata and machine-readable tagging standards

The law does not prescribe a single schema but requires machine-readable tags that include minimal provenance fields. Recommended fields include content type, model identifier, model owner, generation timestamp, prompt summary (or a hashed cue), and an integrity signature. Embedding can be accomplished via file metadata (EXIF/XMP for images), container-level fields for video/audio, or structured headers and JSON-LD for web-delivered text. Interoperability is essential: crawlers, archives, and moderation bots must reliably parse tags, so use stable field names and namespaces.

Bold takeaway: Machine-readable metadata is the backbone of accountability — it must be durable, parseable, and cryptographically verifiable where possible.

Compliance timelines and recordkeeping obligations

The law imposes immediate and staged obligations. At the effective date platforms must begin labeling new AI-generated uploads and embedding tags; within a specified window they must demonstrate end-to-end provenance retention and reporting mechanisms. Retention windows are significant: platforms must store provenance records and metadata for a regulator-defined period (commonly 1–3 years in similar regimes) and maintain audit trails that include detection logs, human review decisions, and takedown records. During inspections, regulators will expect coherent chains of custody linking a visible label to an embedded tag and recorded moderation actions.

For a concise comparison to the EU framework and more on retention and operational expectations, see the legal comparisons that contextualize China’s labeling obligations against European transparency obligations. A legal comparison explains how retention and platform duties differ from the EU approach.

Platform requirements and the WeChat case study for labeling AI-generated media

Platforms now face design, engineering, and policy choices: how to surface labels, how to collect and store metadata, and how to weave these processes into existing moderation and advertising systems. The law’s platform requirements extend beyond UI: they require label controls, audit interfaces for regulators, and internal recordkeeping that ties content to provenance metadata and moderation histories.

WeChat AI content labeling case study

Tencent’s WeChat — one of China’s largest social ecosystems — offers an early example of how a major platform implemented the law. WeChat rolled out UI changes that surface AI labels in friend feeds, public articles, and message previews. It added prompts during content creation that ask users to disclose whether they used AI tools, and it required mini-program developers to attach metadata when their services generated media on a user’s behalf. The platform also integrated metadata tagging across accounts and third-party mini-programs so that a shared image retains its provenance tags as it moves through chats and public timelines. Reporting channels were created for regulators to query platform logs and for users to appeal mislabels.

The rollout was iterative: initial updates focused on the most visible flows (public posts and article headers), followed by deeper integrations for file attachments, group chats, and shared mini-program content. For reporting on how China’s social platforms complied and the staged nature of implementations, see contemporaneous coverage of platform updates. Industry reporting captures how social platforms adapted label controls and tagging across services. A technical perspective documents how firms made it clear to users and indexing bots which content was AI-generated.

insight: When a platform the size of WeChat moves, it shapes UX norms — small apps and mini-programs commonly mirror the same simple “AI-generated” badge to avoid user confusion and regulator scrutiny.

UI and user flow changes on social apps

Label placement decisions matter. In feeds, labels attach directly to the media thumbnail; in long-form articles, a banner near the headline explains AI assistance; in private messages, a subtle icon plus a tappable provenance card meets the law without disrupting conversation flow. Platforms must also ensure labels are included when content is reshared, quoted, or excerpted — preserving provenance across contexts. For crawlers and third-party services, platforms expose metadata via APIs or embedded tags so automated systems can enforce policy or index provenance for search.

Moderation and enforcement workflow inside platforms

Operationally, platforms reconcile automated detection with human review. The internal workflow looks like this: an automated classifier flags suspected AI-generated material; a metadata parser verifies embedded tags; a human moderator resolves borderline cases (for example, mixed human-AI outputs); the platform logs decisions and, when required, applies labels or takes down content. Appeals and false-positive management are built into the pipeline to avoid over-blocking. Platforms must also provide regulators with audit-ready reports showing how many items were labeled, removed, or appealed — data that will be central in enforcement.

Bold takeaway: Platform requirements are as much about backend governance as they are about visible labels; successful compliance requires engineering, policy, and user-facing product design to work together.

Technical challenges for AI service providers implementing visible and metadata tags

AI service providers and platforms now must solve a series of technical puzzles at scale. Chief among these is detection accuracy and provenance: distinguishing AI outputs from human edits is rarely binary. Watermarking and metadata are complementary approaches, but each has limits. Watermarks can be removed or degraded by image transforms; metadata can be stripped during format conversions or by intermediaries that don’t honor tags. Providers must therefore design resilient provenance systems that combine multiple signals.

Designing machine-readable metadata and standards

A recommended metadata model includes these fields: content type, creator (account or service), model identifier and vendor, generation timestamp, prompt summary (or a hashed fingerprint for privacy), a version identifier for both model and prompt, and an integrity signature (digital signature or cryptographic hash). Standardizing names and units, and publishing a schema registry, improves cross-platform parsing and interoperability. APIs for embedding metadata must support common file formats (JPEG/PNG XMP/EXIF, MP4 containers, audio metadata, and HTTP headers/JSON-LD for web content) and provide both synchronous tagging at generation time and asynchronous reconciliation when provenance is later established.

Engineering note: Tagging schemas and APIs for embedding metadata should prioritize stability and backward compatibility; fields are easier to map than constantly changing namespaces.

Watermarking and AI-detection toolkits

Watermarking — visible or invisible — helps identification but is not a panacea. Invisible watermarks can survive moderate edits, but savvy actors can remove them, and detectors can yield false positives. Artifact-based detectors (classifiers that look for model-specific fingerprints) have accuracy limits and can mislabel heavily edited human work. Cryptographic provenance (signing content at generation and preserving signatures in metadata) is the strongest technical assurance but requires end-to-end support and key management.

A layered approach is best: visible labels for UX clarity, embedded metadata for auditability, cryptographic signatures for high-assurance provenance, and detection toolkits as a fallback. Where watermarking fails or metadata is stripped, platforms should fall back to policy-driven human review and conservative labeling choices.

Scalability, privacy and provenance storage

Storing provenance at scale raises privacy trade-offs. Provenance records can be linked to user accounts and may include prompt summaries or partial inputs that reveal sensitive data. Providers must balance regulatory transparency with data protection obligations: hash or redact prompts, store minimal personally identifiable information where possible, and apply strict access controls and retention limits. Audit logs should be immutable and timestamped, but access should be strictly logged and limited to essential personnel and regulators under lawfully authorized requests.

Bold takeaway: Scalability demands a pragmatic approach — implement lean, interoperable schemas and cryptographic primitives early, and adopt privacy-preserving practices for provenance storage.

Legal and compliance strategies for businesses under China’s AI content labeling law

Businesses operating in or serving users in China must move from ad hoc tactics to formal compliance programs. A practical compliance checklist includes policy updates, contractual clauses with vendors, internal audit routines, and designated compliance officers who can interface with regulators. Organizations should assess where AI is used across the product lifecycle, identify data flows that create AI-generated outputs, and ensure contractual rights to obtain model provenance information from third-party vendors.

Contractual and vendor management changes

Procurement teams must update SLAs to require metadata support, durable logging, and cooperation with platform audits. Contracts with model vendors should include obligations to provide model identifiers, generation timestamps, and signed assertions about system behavior. For vendors that refuse to provide metadata (e.g., cross-border model providers), businesses need fallback mitigations: flagging content as potentially AI-generated pending verification or restricting distribution inside China to mitigate regulatory exposure.

Risk assessment and regulatory fines

Conducting a risk assessment helps prioritize remediation. Key risks include regulatory fines, operational restrictions (such as suspension of services), and reputational harm when mislabeled content reaches the public. Penalties for noncompliance can be meaningful; businesses should budget for remediation costs, possible penalties, and insurance covering regulatory fines when available. A thoughtful approach balances operational continuity against exposure: where uncertainty remains, lean on conservative assumptions and document decisions.

insight: A written risk assessment that shows active mitigation steps often reduces enforcement severity in administrative regimes.

Implementation roadmap and pilot deployments

An effective implementation roadmap starts with a gap analysis: map AI-generated content touchpoints, inventory where metadata can be captured, and model vendor dependencies. Pilot tagging projects in high-impact product flows to test UX, metadata fidelity, and audit logging. Use pilots to refine detection thresholds and human-review workflows before scaling. Training programs for product, moderation, legal, and engineering teams are essential; designate compliance leads who can coordinate regulatory reporting.

For legal and operational context around enforcement and how Chinese administrative mechanisms engage firms, see discussions of PRC case law and administrative action frameworks. Discussion of enforcement, PRC court decisions, and administrative processes helps shape litigation readiness. For ongoing tracking of China’s regulatory posture against other regimes, consult the regulatory trackers maintained by legal firms. A regulatory tracker summarizes enforcement expectations and timelines.

Litigation and dispute readiness

Prepare for enforcement actions by preserving evidence: immutable logs of content generation, timestamps, and the chain of custody. If regulators raise questions, rapid production of coherent provenance and audit trails reduces exposure. For high-profile disputes, coordinate legal, compliance, and communications teams early; controlling the narrative while providing transparent evidence is often the most effective defense.

Bold takeaway: A compliance program is both preventative and evidentiary — it prevents many problems and creates the records you’ll need if enforcement arrives.

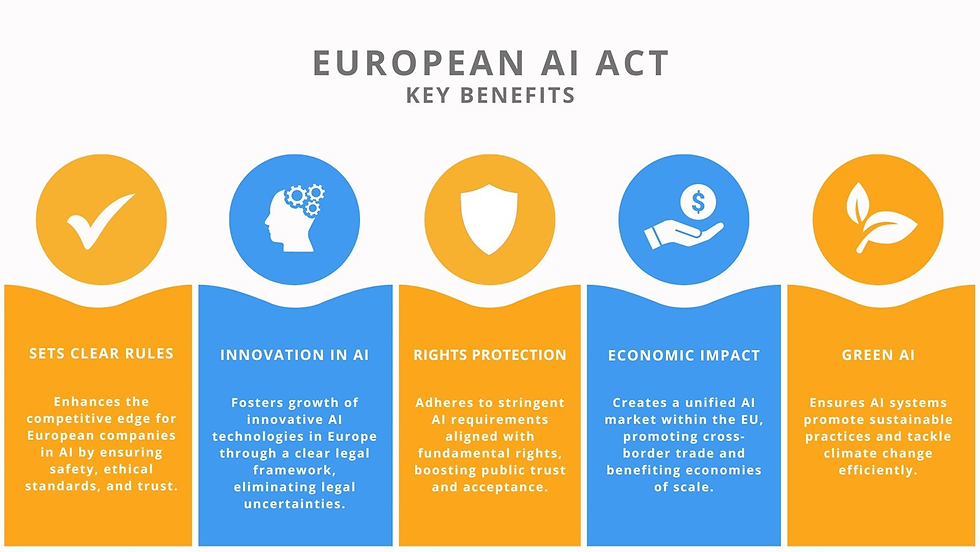

Global impact, comparisons with the EU AI Act and implications for cross-border services

China’s labeling law is part of a patchwork of global AI regulations that prioritize transparency but differ in scope and enforcement. Comparing China’s approach with the EU AI Act highlights both alignment and divergence: both regimes emphasize transparency obligations for AI systems, but China’s law places a stronger operational focus on visible labeling and embedded provenance for content accessible domestically. The EU’s framework distinguishes between high-risk AI systems and general-purpose transparency rules, while China’s content labeling rule applies broadly to AI-generated media and demands platform-based enforcement.

For a detailed comparison that highlights differences in scope, obligations, and enforcement models, consult analysis comparing China’s labeling rules with the EU AI Act. A legal comparison explains overlaps and key differences between China’s labeling measures and EU standards.

Cross-border compliance and data localization

Cross-border compliance complexity is tangible. Platforms must wrestle with whether content created outside China but accessible in China requires labeling and metadata retention within Chinese jurisdiction. Data localization rules and registration requirements can mean that cross-border services need local data-handling arrangements or edge routing that ensures provenance metadata remains accessible to Chinese regulators. Where operations are global, many companies pursue a dual approach: regional feature flags that turn on stricter labeling for Chinese audiences, or a global baseline that satisfies multiple regimes to avoid fragmentation.

Practical strategy: Consider a modular architecture that supports regional compliance modules while preserving a global baseline of metadata fields.

Harmonization challenges for platform UX and metadata

Harmonizing metadata across legal regimes is hard because different regulators demand different fields and retention rules. The practical answer is to design schemas that map primary fields (creator, model, timestamp, signature) into region-specific extensions. Maintain a schema registry and translation layer so the same underlying provenance can be rendered to different auditors without fragmenting the user experience.

Potential global ripple effects and product roadmaps

China’s law may accelerate international standard-setting efforts and push vendors to build provenance features as default. Expect product roadmaps to include compliance-as-a-feature: model vendors will increasingly offer signed provenance, structured metadata, and turnkey SDKs to embed tags. Over time, these features may become de facto global standards as firms prefer single implementation paths that reduce engineering fragmentation.

For broader commentary on how China’s moves influence global governance and business strategy, see perspectives that connect domestic rules to international market impacts. An opinion piece discusses the geopolitical and market implications of stricter national AI rules.

Industry response, market dynamics and long-term trends for media and technology sectors

Industry reactions vary. Media companies and creators are adapting workflows so that content is labeled at the point of creation — “labeling at source” — rather than relying on platform-side detection. That shift reduces friction for platforms and clarifies responsibility chains for publishers. Agencies and creators are also rethinking monetization for AI-assisted content; advertisers may demand additional disclosures, and publishers may establish premium tiers for human-authored material.

Case examples of platform-driven adaptation

Early deployments indicate mixed costs and benefits. Large platforms reported initial engineering costs to add tagging and metadata pipelines, but found that clear labels reduced user complaints about misinformation in early trials. On the moderation side, firms noted higher human-review burdens for mixed human-AI outputs, but also improved regulator relations because audit data was readily available. Industry reporting captures examples of how Chinese social firms implemented labels rapidly after the law took effect. Reporting documents how Chinese platforms rolled out labeling tools to comply with the new law.

Business opportunities and new services

New markets are emerging: compliance-as-a-service vendors provide hosted metadata registries, signing services, and cross-platform mapping tools; detection tool startups sell classifiers that flag likely AI outputs; consulting demand surges for global labeling programs. Model vendors are pressured to include provenance features in their APIs, and firms that can provide cryptographic signing at generation time gain an advantage.

Long-term trend: Expect consolidation in the compliance tooling market as enterprises prefer integrated stacks that handle tagging, signing, storage, and audit reporting.

insight: Consumer trust may become a competitive differentiator — platforms that consistently surface accurate provenance could win user loyalty, while repeated mislabels erode credibility.

FAQ about China’s AI content labeling law, visible tags and metadata

Q1: What exactly must be labeled as AI-generated content? A: Anything created primarily by an algorithmic model — images, video, audio, and textual outputs — must carry an “AI-generated” or equivalent UI label. Mixed outputs require contextual labels such as “AI-assisted” or “Human-edited after AI generation.” See legal comparisons that explain how LLM outputs and hybrid content are classified. A legal overview explains when mixed human-AI outputs require labels.

Q2: How must metadata be embedded and what fields are required? A: Metadata should be machine-readable and travel with the content. Typical fields include content type, model identifier, creator/service, generation timestamp, a prompt summary or hashed fingerprint, and an integrity signature. Use EXIF/XMP for images, container-level metadata for video/audio, or JSON-LD/HTTP headers for web-delivered text to meet the law’s machine-readable metadata tags requirement.

Q3: Will foreign platforms operating in China be subject to these rules? A: Platforms accessible to users in China are generally within scope; practical obligations include data localization, keeping provenance records accessible to Chinese authorities, and registration requirements for services. Regulatory trackers outline how territorial scope and registration expectations apply. A regulatory tracker summarizes cross-border enforcement expectations.

Q4: What penalties or enforcement actions can businesses expect? A: Penalties include fines, operational restrictions, and orders to remediate labeling failures. For persistent noncompliance regulators can escalate administrative actions. Firms should expect inspections and requests for provenance records; having audited logs reduces enforcement risk. See legal analyses that discuss enforcement mechanisms and potential administrative remedies. A legal comparison notes enforcement approaches and penalties.

Q5: How do detection tools and watermarking fit into compliance strategies? A: Detection tools and watermarking are complementary: invisible watermarks and cryptographic signatures help prove provenance, while detection classifiers help surface unlabeled content. A layered approach — metadata first, signatures where possible, detectors as backup, and human review for edge cases — is recommended. For technical guidance on watermarking and detection trade-offs, consult engineering overviews that outline pros and cons. Technical guides explore watermarking limits and metadata strategies.

Q6: Does the law affect content created outside China but accessible in China? A: Yes. Content accessible in China that meets the definition of AI-generated will generally need to be labeled and have provenance records available. Multinational publishers should implement regional feature flags or metadata policies to ensure cross-border compliance.

Q7: How should small creators and SMEs comply without heavy engineering resources? A: Use platform-provided tools and third-party vendors that offer tagging and signing as a service; where unavailable, add clear visible labels at the point of publishing and include minimal required metadata (model name/vendor and generation timestamp) in captions or file metadata. Look to compliance vendors and platform SDKs for low-cost solutions.

Looking ahead on China AI content labeling law and AI transparency

The law marks a turning point: policy makers in China have moved from defining risk categories to operationalizing transparency at scale. Over the next 12–24 months, expect an acceleration in infrastructural work: model vendors will add provenance endpoints; platforms will harden metadata pipelines and UX conventions will coalesce around a small set of label types; compliance tooling will shift from bespoke integrations to standardized SDKs and hosted registries.

Three themes will shape outcomes. First, interoperability wins. Firms that adopt shared tagging schemas and signing primitives will streamline audits and reduce friction across borders. Second, privacy will remain a constraint. Provenance must avoid exposing sensitive inputs; hashing and redaction techniques will be widely adopted, but legal tension between transparency and data protection will persist. Third, market consolidation in compliance services is likely: from signature providers to metadata registries, the firms that can offer secure, auditable, and privacy-conscious provenance solutions will be in demand.

There are uncertainties. Attackers will test watermark robustness; format conversions will continue to strip tags; and different jurisdictions will push divergent metadata expectations. But there is opportunity too: platforms that embed clear provenance as a trust signal can differentiate on consumer confidence, and creators who label at source will reduce friction and regulatory exposure.

For boards, CTOs and product leaders the practical action plan is familiar but urgent: conduct a gap assessment, run pilot tagging and signing in key product flows, select vendors with proven metadata support, and train moderation and legal teams on the new workflows. Monitor KPIs — labeling coverage, false-positive rates, audit response times — and establish a regular audit cadence to demonstrate proactive governance.

insight: The move toward mandatory labeling reframes provenance from an optional feature to an operational necessity; companies that treat it as infrastructure rather than a one-off project will be best positioned to adapt and compete.

The China AI content labeling law creates operational friction but also clarifies expectations. Transparent AI ecosystems are not just a regulatory compliance task; they are a platform design choice with implications for trust, discoverability, and market differentiation. Actors who embrace provenance — with attention to privacy and interoperability — stand to gain credibility in a world where knowing what is real matters more than ever.