CodeRabbit’s Surge: From 2-Year Startup to Enterprise Code Review Tool with 8,000+ Customers

- Ethan Carter

- Sep 17

Why CodeRabbit’s recent funding and customer momentum matter

Two-year-old CodeRabbit has just crossed a milestone that signals a shift in how enterprises view AI-assisted software delivery. The company announced a $60 million Series B round that valued it at about $550 million, coming after a $16 million Series A the prior year. That combination of capital and adoption speed (CodeRabbit reports more than 8,000 customers) is more than a vanity metric: it marks a transition from experimental tooling toward vendor products built for large, regulated engineering organizations. See CodeRabbit’s own summary of the Series B for the company’s framing of growth and near-term priorities: CodeRabbit newsroom on the Series B.

Key takeaway: CodeRabbit’s Series B positions it to move from early adopters to mainstream enterprise customers, accelerating the product investments that make AI code review practical at scale.

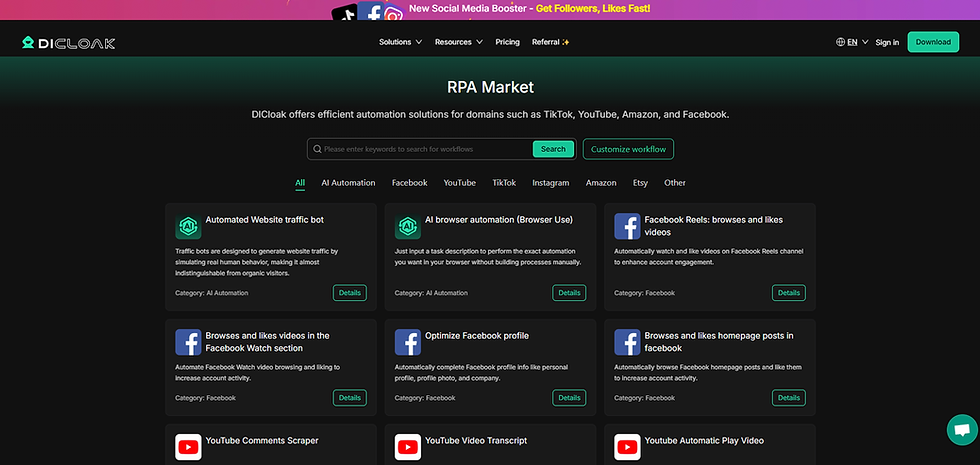

Product features and enterprise capabilities — what CodeRabbit actually does

Core AI-driven review capabilities and how they reduce friction

At its core, CodeRabbit is an AI-powered code review platform that focuses on producing actionable guidance during pull requests. The product surface centers on three interlocking capabilities: automated suggested changes, contextual review comments, and inline code-edit suggestions. Those features are designed not merely to annotate code, but to propose concrete edits and rationale so reviewers can accept, refine, or reject with less back-and-forth. For the product framing and rationale, see CodeRabbit’s product overview and the company’s earlier fundraising context from its Series A coverage at TechCrunch.

Automated suggestions are intended to shorten the typical comment-to-commit cycle: rather than leaving a text comment that a developer must later translate into a patch, the system proposes a ready-to-apply diff. Studies of suggested-change workflows indicate that they can materially speed reviews, which aligns with CodeRabbit’s product emphasis and recent funding direction. The academic work cited in later sections covers the evidence for suggested-change effectiveness.

Enterprise controls, auditability, and workflow integration

On the enterprise side, CodeRabbit layers familiar enterprise controls over its AI core: role-based access, repository integration settings, audit logs, and compliance hooks that feed into governance processes. These features are essential when a product moves beyond small teams—security, traceability, and permissions matter to legal and platform engineering teams.

The product is designed to fit into existing developer workflows rather than replace them. That means integrations with version control systems, CI pipelines, and common IDEs—features intended to reduce friction so teams can adopt incrementally without a radical process change. CodeRabbit’s docs emphasize this approach in framing "why CodeRabbit" as a tool that plugs into current flows rather than demanding a forked workflow: Why CodeRabbit product docs.

Key takeaway: the combination of suggested edits plus enterprise governance is what moves an AI reviewer from toy to tool for large organizations.

Roadmap signals from funding

The move from a $16M Series A to a $60M Series B is a signal about priorities: expect investments in more accurate models, lower inference latency for large repos, and hardened deployment options (e.g., VPC or private-hosting). Both TechCrunch’s reporting on the Series A and its Series B coverage point to product development and enterprise support as top-line uses of proceeds.

Automated suggested changes — how concrete diffs speed merges

What suggested changes look like inside a review workflow

Automated suggested changes are more than intelligent comments; they are patch-level artifacts—diffs that can be reviewed and applied directly. A reviewer who opens a pull request can see inline proposed edits, click “apply,” or edit before merging. This mechanism short-circuits the traditional cycle: comment → author action → new commit → another review round. Research in the field indicates that suggested-change UIs affect reviewer behavior and decrease iteration time; one relevant study outlines how generated edits interact with human reviewers to shorten review timelines and increase throughput. See findings from automated suggested-change research: automated suggested changes research.

In practice, teams using these features often report fewer manual corrections and faster merges for routine fixes—style, common bug patterns, or well-understood refactors. The trade-off is an increased need for high-precision suggestions so reviewers can trust applying patches with minimal manual auditing.
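To make the idea of a patch-level artifact concrete, here is a minimal sketch that renders a proposed edit as a unified diff using Python's standard `difflib` module. It is not CodeRabbit's implementation; the function name, the file path, and the sample code are all hypothetical and chosen only for illustration.

```python
import difflib


def suggested_change_as_diff(original: str, proposed: str, path: str) -> str:
    """Render a proposed edit as a unified diff a reviewer could inspect."""
    diff_lines = difflib.unified_diff(
        original.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return "".join(diff_lines)


# Hypothetical routine fix: compare against None by identity, not equality.
original = "def is_empty(items):\n    return items == None or len(items) == 0\n"
proposed = "def is_empty(items):\n    return items is None or len(items) == 0\n"

patch = suggested_change_as_diff(original, proposed, "utils.py")
print(patch)
```

The output is the kind of artifact a reviewer can read line by line and then apply or edit, rather than a prose comment that the author must translate into a commit.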

Insight: suggested diffs convert reviewer intent into executable changes, collapsing effort and reducing cognitive overhead.

Performance, models, and developer impact — accuracy, throughput, and trust

Performance claims and what to expect at scale

CodeRabbit’s public positioning emphasizes review throughput—faster reviews and higher signal-to-noise in suggestions. With Series B capital, the company is positioned to invest in lower-latency inference paths and model tuning for the codebases of large enterprises. Coverage of the Series B notes this shift toward enterprise-grade reliability and scaling: TechCrunch Series B coverage.

Academic work provides context for realistic expectations. Recent studies find that AI-assisted review tools can measurably improve reviewer efficiency while also underscoring variability: performance gains depend on suggestion accuracy, codebase familiarity, and reviewer workflows. For a broader, up-to-date analysis of AI-assisted code review effectiveness see AI-assisted code review effectiveness research. Together, these sources suggest that CodeRabbit’s core ROI is in throughput gains, conditional on continued model improvements.

Scale and reliability considerations for enterprises

Handling thousands of repos and high query volumes isn’t just an engineering challenge—it’s an operational one. Series B funding typically underwrites SRE, monitoring, and enterprise SLAs. Expect features like rate-limiting guarantees, priority support, and integrations for security-scanning pipelines as part of a roadmap aimed at large customers. CodeRabbit’s newsroom frames the Series B as a push to broaden enterprise offerings and capacity: CodeRabbit Series B newsroom.

Model quality estimation and continual improvement

Assessing suggestion quality requires more than accuracy numbers; it needs a way to prioritize high-impact guidance. Semi-supervised approaches such as ReviewRanker propose methods to estimate review quality and rank suggestions for human attention. Vendors can adopt similar pipelines—collecting feedback, using signal-weighted metrics, and updating models iteratively to reduce false positives and negatives. The ReviewRanker paper details these techniques and is a useful template for product teams: ReviewRanker research.
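As a rough illustration of prioritizing suggestions for human attention, the sketch below ranks them with a simple heuristic: model confidence, times an impact weight per category, times the historical acceptance rate for that category. This is not the ReviewRanker method and not any vendor's pipeline; the weights, category names, and sample numbers are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    suggestion_id: str
    category: str      # e.g. "security", "bug", "style"
    confidence: float  # model-estimated probability of being correct, 0..1


# Hypothetical impact weights; a real team would tune these to its own priorities.
IMPACT_WEIGHTS = {"security": 3.0, "bug": 2.0, "refactor": 1.0, "style": 0.5}


def priority(s: Suggestion, acceptance_rates: dict[str, float]) -> float:
    """Heuristic score: confidence x impact weight x past acceptance rate."""
    weight = IMPACT_WEIGHTS.get(s.category, 1.0)
    accepted = acceptance_rates.get(s.category, 0.5)  # neutral prior if unseen
    return s.confidence * weight * accepted


def rank(suggestions: list[Suggestion], acceptance_rates: dict[str, float]) -> list[Suggestion]:
    """Return suggestions ordered from highest to lowest priority."""
    return sorted(suggestions, key=lambda s: priority(s, acceptance_rates), reverse=True)


# Illustrative data only: acceptance rates would come from reviewer feedback.
acceptance_rates = {"style": 0.9, "security": 0.7, "bug": 0.6}
suggestions = [
    Suggestion("s1", "style", 0.95),
    Suggestion("s2", "security", 0.60),
    Suggestion("s3", "bug", 0.80),
]
ranked_ids = [s.suggestion_id for s in rank(suggestions, acceptance_rates)]
print(ranked_ids)
```

The design point is that reviewer feedback (here, the acceptance rates) feeds back into ordering, so categories that reviewers routinely reject sink in priority even when the model is confident.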

Key takeaway: expect steady iterative improvements rather than overnight perfection; trust grows with small, observable wins and transparent quality metrics.

Enterprise availability, pricing signals, and market positioning

Rollout timing and who becomes eligible first

With Series B capital, product teams typically move from pilot-friendly offerings to formal general availability (GA) for enterprise customers. That transition usually includes dedicated onboarding teams, account management, and staged rollouts for large customers to ensure smooth migrations. CodeRabbit’s Series B announcement explicitly ties funding to expanding enterprise support and deployment options in its newsroom: CodeRabbit Series B newsroom.

Target customers are engineering organizations that have centralized CI/CD, compliance or audit needs, and significant code-review bottlenecks—groups that can justify per-seat or per-repo licensing and that demand integrations with existing VCS and identity systems. The funding cadence signals a focus on enterprise sales and ISV partnerships, positioning CodeRabbit to pursue deals that require contractual and technical guarantees.

Pricing signals without a public rate card

The company has not published granular pricing in the materials cited, but standard enterprise positioning suggests tiered pricing—team tiers for small groups, enterprise tiers with SLAs and audit features, and volume-based pricing for larger customers. Watch CodeRabbit’s docs and newsroom for formal plans: CodeRabbit docs, Series B newsroom. Industry reporting also frames the broader investment trend into AI code review, reinforcing the expectation of enterprise-focused commercial models: AInvest coverage of the investment trend.

How CodeRabbit fits into the competitive landscape

Peer comparison sites such as PeerSpot list CodeRabbit alongside several competitors, and the product’s main differentiators are the depth of its automated suggestions and the speed with which suggested edits can be applied. See direct comparisons on PeerSpot for context: CodeRabbit versus Engage on PeerSpot and CodeRabbit versus Tackle on PeerSpot.

Insight: funding momentum often compresses the vendor evaluation window—companies with procurement flexibility that pilot early will shape long-term platform selection.

Market alternatives and practical comparison points

How CodeRabbit differs from earlier tools and rivals

Traditional code review tools emphasized commenting, threading, and simple static checks. CodeRabbit’s emphasis on AI-driven patch generation and prioritized suggestions changes the dynamic by moving from passive annotation to proactive remediation. Its growth trajectory—from a $16M Series A to a $60M Series B—signals that the company is moving into head-to-head competition with other enterprise-focused vendors that offer deeper integrations and compliance features. See earlier TechCrunch analysis of the Series A and the more recent Series B write-ups for this progression: TechCrunch Series A, TechCrunch Series B.

Practical evaluation checklist when comparing tools

When teams evaluate CodeRabbit against alternatives, the most consequential dimensions are suggestion accuracy, the ease of applying suggested diffs in existing workflows, integration depth (CI, IDE, issue trackers), enterprise support and SLAs, plus privacy and compliance controls. PeerSpot comparisons provide side-by-side feature overviews that can help teams shortlist vendors for pilots: PeerSpot product comparisons.

Key practical note: for procurement and engineering leads, prioritize a pilot that measures merge-time and reviewer cognitive load over feature lists—those metrics better capture the business value of suggested changes.
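A pilot that measures merge time can start very simply. The sketch below compares median time-to-merge between a baseline period and a pilot period, assuming you can export opened and merged timestamps for each pull request from your version control system. The timestamps are invented for illustration, and the sketch does not attempt to measure reviewer cognitive load, which usually needs surveys or other qualitative input.

```python
from datetime import datetime
from statistics import median


def hours_to_merge(opened_at: str, merged_at: str) -> float:
    """Elapsed hours between two ISO 8601 timestamps."""
    opened = datetime.fromisoformat(opened_at)
    merged = datetime.fromisoformat(merged_at)
    return (merged - opened).total_seconds() / 3600


def median_merge_hours(pull_requests: list[tuple[str, str]]) -> float:
    """Median time-to-merge, which is less sensitive to outliers than the mean."""
    return median(hours_to_merge(opened, merged) for opened, merged in pull_requests)


# Illustrative (made-up) data: (opened_at, merged_at) pairs.
baseline = [
    ("2025-01-06T09:00:00", "2025-01-07T09:00:00"),
    ("2025-01-08T10:00:00", "2025-01-08T22:00:00"),
    ("2025-01-09T08:00:00", "2025-01-10T20:00:00"),
]
pilot = [
    ("2025-02-03T09:00:00", "2025-02-03T15:00:00"),
    ("2025-02-04T09:00:00", "2025-02-04T19:00:00"),
    ("2025-02-05T09:00:00", "2025-02-06T03:00:00"),
]

baseline_median = median_merge_hours(baseline)
pilot_median = median_merge_hours(pilot)
reduction = (baseline_median - pilot_median) / baseline_median
print(f"baseline {baseline_median:.1f}h, pilot {pilot_median:.1f}h, reduction {reduction:.0%}")
```

A real pilot would need far more pull requests than this, and ideally comparable teams and change types in both periods, before the difference means anything.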

Performance in practice and developer impact

Expected developer outcomes grounded in research

Academic and applied research on AI-assisted review suggests three consistent outcomes when models are well-tuned and integrated: reduced review cycle times, fewer iterations to merge, and lower cognitive load for reviewers—particularly for routine fixes. Several studies back these claims; for a broad view of AI-assisted review effectiveness see AI-assisted code review effectiveness research and for specific effects of suggested-change workflows see automated suggested changes research.

However, adoption friction is real. Developers must trust suggestions, and false positives can erode that trust quickly. Research also shows that UI affordances—clear provenance of suggestions, easy rollback, and user feedback channels—are critical for sustained adoption. The "DeputyDev" research explores assistant tooling and human–AI interaction patterns that inform how teams can structure feedback loops and guardrails: DeputyDev assistant research.

Adoption caveats and the role of continuous feedback

No AI tool is perfect out of the gate. Continuous model refinement, human-in-the-loop feedback, and internal metrics like reviewer acceptance rate and post-merge defect rates are the right signals to iterate on a deployment. Vendors that publish or expose quality-estimation metrics (or let customers run their own validation) make it easier for engineering leadership to quantify risk and benefit.
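The two internal metrics named above are straightforward to compute once the data is collected. This is a minimal sketch under stated assumptions: the `MergedPR` record and its fields are hypothetical, and how a defect gets attributed to a pull request (a revert, a linked bug ticket) is left to the team's own process.

```python
from dataclasses import dataclass


@dataclass
class MergedPR:
    suggestions_shown: int
    suggestions_accepted: int
    caused_defect: bool  # True if a later bug or revert was traced to this PR


def acceptance_rate(prs: list[MergedPR]) -> float:
    """Share of AI suggestions that reviewers accepted, across all PRs."""
    shown = sum(p.suggestions_shown for p in prs)
    accepted = sum(p.suggestions_accepted for p in prs)
    return accepted / shown if shown else 0.0


def post_merge_defect_rate(prs: list[MergedPR]) -> float:
    """Share of merged PRs later linked to a defect."""
    return sum(p.caused_defect for p in prs) / len(prs) if prs else 0.0


# Illustrative (made-up) records.
prs = [
    MergedPR(suggestions_shown=4, suggestions_accepted=3, caused_defect=False),
    MergedPR(suggestions_shown=6, suggestions_accepted=3, caused_defect=False),
    MergedPR(suggestions_shown=0, suggestions_accepted=0, caused_defect=True),
    MergedPR(suggestions_shown=10, suggestions_accepted=6, caused_defect=False),
]

print(f"acceptance rate: {acceptance_rate(prs):.0%}")
print(f"post-merge defect rate: {post_merge_defect_rate(prs):.0%}")
```

Tracking both together matters: a rising acceptance rate is only good news if the post-merge defect rate does not rise with it.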

Insight: the most successful early deployments are targeted pilots focused on high-frequency, low-risk changes (formatting, standard security patterns, small refactors), where suggested changes can show rapid wins.
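One way to scope such a pilot is an explicit routing rule that only offers one-click application for suggestions in low-risk categories, above a confidence threshold, and below a size limit. The sketch below is a hypothetical policy, not a CodeRabbit feature; the category names, thresholds, and return labels are all assumptions a team would replace with its own.

```python
# Hypothetical allowlist mirroring the low-risk change types named above.
LOW_RISK_CATEGORIES = frozenset({"formatting", "security-pattern", "small-refactor"})


def route_suggestion(
    category: str,
    confidence: float,
    lines_changed: int,
    min_confidence: float = 0.9,
    max_lines: int = 20,
) -> str:
    """Decide how a suggestion is surfaced to the reviewer during the pilot."""
    low_risk = category in LOW_RISK_CATEGORIES
    if low_risk and confidence >= min_confidence and lines_changed <= max_lines:
        return "offer-one-click-apply"
    # Everything else stays a plain comment that a human must act on.
    return "comment-only"


print(route_suggestion("formatting", 0.95, 3))
print(route_suggestion("api-redesign", 0.99, 3))
```

Starting with a narrow allowlist and widening it as acceptance data accumulates keeps early mistakes cheap and easy to roll back.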

FAQ — CodeRabbit AI code review: likely customer questions

Q1: What exactly is CodeRabbit and what is its core capability?

Short answer: CodeRabbit is an AI-powered code review platform that generates inline suggestions and automates routine review tasks to speed merges and improve reviewer productivity. See the company overview and early coverage for more context: TechCrunch Series A.

Q2: How much funding has CodeRabbit raised and what does it mean?

Short answer: the company announced a $16M Series A (CRV-led) and later a $60M Series B that reportedly values it around $550M—an indication of investor confidence and a likely push toward enterprise productization. See reporting of both rounds: Series A details, Series B coverage.

Q3: Is CodeRabbit enterprise-ready and what integrations are supported?

Short answer: CodeRabbit is positioned for enterprise use with features like role-based access and audit logs expected to be part of its offerings; the product integrates with common VCS, CI/CD, and collaboration tools per the vendor documentation. For rollout specifics, check the company newsroom about the Series B: CodeRabbit Series B newsroom.

Q4: How reliable are CodeRabbit’s suggestions — what do studies show?

Short answer: academic studies find AI-assisted suggestions can improve reviewer efficiency, but reliability varies by task, model, and codebase; continuous training and human oversight are necessary to avoid false positives. See recent research on effectiveness and suggested-change impact: AI-assisted review effectiveness, suggested changes study.

Q5: How does CodeRabbit compare to other AI code-review tools?

Short answer: competitors include products listed on PeerSpot; the comparisons there point to CodeRabbit’s strengths in automated patch suggestions and enterprise integrations. Evaluate vendors on integration depth and suggestion accuracy.

Q6: Are there privacy or compliance concerns with AI code review like CodeRabbit?

Short answer: enterprise buyers should verify data handling, model training policies, and on-prem/VPC deployment options. Vendors expanding after Series B commonly add compliance features—ask for SOC/ISO certifications or private-hosting options where required. See vendor docs and Series B announcement context: CodeRabbit docs, Series B newsroom.

What CodeRabbit’s surge means for teams and the future of AI code review

CodeRabbit’s rapid path, from a $16 million Series A to a $60 million Series B with a reported customer base of more than 8,000, is a clear market signal: investors and buyers are treating AI-assisted code review as operational infrastructure, not a novelty. In the coming year, that will mean three practical shifts for engineering organizations. First, feature velocity focused on enterprise needs: expect richer integrations, better onboarding, and hardened audit trails. Second, more careful deployment models: VPC options, compliance certifications, and per-repo controls will become standard asks in procurement conversations. Third, a new set of metrics will shape success: acceptance rate of automated suggestions, reduction in reviewer-hours per merge, and post-merge defect rates will replace pure usage metrics as the currency of ROI.

Looking ahead, the path toward widespread adoption is iterative. Tools like ReviewRanker provide a playbook for how to measure suggestion impact and guide model updates, while assistant-research like DeputyDev shows how human–AI workflows evolve over time. Organizations that pilot thoughtfully—starting with low-risk, high-frequency changes and instrumenting feedback loops—are likely to capture the initial productivity gains and build the trust necessary to widen adoption.

There are trade-offs and uncertainties. Model errors, integration complexity, and procurement cycles can slow wins. Transparency about data usage and robust rollback mechanisms are not optional; they are prerequisites for scaling. Yet the momentum is evident: when a startup moves from early-stage funding to a large growth round and backs that with thousands of customers, the market is signaling that AI code review is transitioning from experimental to central in the developer toolchain.

For engineering leaders and platform teams, the opportunity is to treat CodeRabbit and similar tools as platform investments: run measurable pilots, require clear security guarantees, and track downstream metrics tied to cycle time and quality. If the early evidence holds, the next few years will show a steady migration of routine review labor from manual annotation to assisted, suggested-change workflows—freeing reviewers for higher-order design discussions and accelerating delivery without sacrificing control.

In short, CodeRabbit’s surge is not just a funding headline; it is a practical nudge to teams to experiment, measure, and prepare for AI tools to become standard parts of the software delivery lifecycle.