Context Engineering for AI Agents: Unveiling Manus' Lessons for Building Smarter, Scalable AI Solutions

- Ethan Carter

- Oct 2, 2025

Introduction

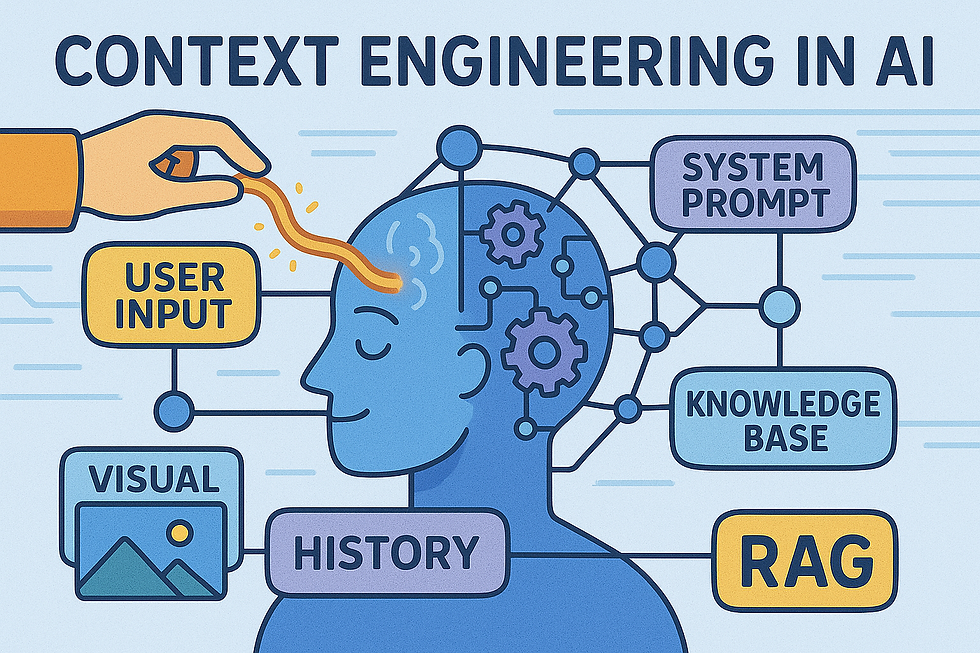

Building capable, autonomous AI agents on large language models (LLMs) is not only a matter of choosing the most powerful model; it also depends on how that model interacts with its environment through context. "Context engineering" has become central to making agents reliable, scalable, and efficient. Drawing on the practical experience behind Manus, an AI agent framework, this article covers the core concepts, key lessons, and actionable strategies of context engineering. Whether you are developing a new agent from scratch or improving an existing product, these principles can shorten the path to a robust solution.

What Exactly Is Context Engineering for AI Agents?

Core Definition and Common Misconceptions

Context engineering for AI agents refers to the systematic design, structuring, and manipulation of the information (context) provided to language models, enabling agents to reason, act, and adapt in real-world environments. Rather than fine-tuning models for every new use case—a slow, costly, and inflexible process—context engineering leverages prompt composition, context memory, and interaction patterns to maximize performance and adaptability.

Common misconceptions:

It's just prompt engineering: While prompt engineering is part of it, context engineering covers full agent-environment interaction, memory management, and even how failures are integrated as learning signals.

Larger context windows solve all problems: Even with models offering 128K tokens or more, real-world use quickly exposes limits—both technical (degraded performance with long contexts) and economic (skyrocketing inference costs).

Proper context engineering is thus fundamental for the creation of resilient, efficient, and scalable AI agents.

Why Is Context Engineering for AI Agents So Important?

Its Impact and Value

Context engineering is pivotal because it determines the very behavior, efficiency, and success of AI agents:

Speed and Cost: Smart context management, above all maximizing the KV-cache hit rate, can cut latency and cost substantially. Manus reports that cached input tokens can be about 10 times cheaper than uncached ones.

Adaptability: Well-engineered context enables agents to evolve as underlying LLMs improve, sidestepping the inflexibility of custom-tuned models.

Reliability and Recovery: Recording both successes and failures in context enhances agent robustness. Agents that "learn" from mistakes without external intervention are better equipped to handle real-world variability.

Scalability: Efficient context handling lets agents handle complex, multi-step tasks without excessive resource usage.

Without context engineering, even the best models become brittle, costly, and unreliable.
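To make the cost argument concrete, here is a small back-of-the-envelope calculation. The per-million-token prices are hypothetical placeholders chosen only to reflect the roughly 10x gap mentioned above, and `input_cost_usd` is an illustrative helper, not part of any real SDK.

```python
def input_cost_usd(total_tokens: int, cached_fraction: float,
                   uncached_price: float, cached_price: float) -> float:
    """Input-token cost when a fraction of tokens is served from the KV-cache.

    Prices are expressed in USD per million tokens.
    """
    cached = total_tokens * cached_fraction
    uncached = total_tokens - cached
    return (cached * cached_price + uncached * uncached_price) / 1_000_000


# Hypothetical prices (assumption): a 10x gap between uncached and cached input.
UNCACHED_PRICE = 3.00
CACHED_PRICE = 0.30

cold = input_cost_usd(1_000_000, 0.0, UNCACHED_PRICE, CACHED_PRICE)
warm = input_cost_usd(1_000_000, 0.9, UNCACHED_PRICE, CACHED_PRICE)
print(f"no cache hits: ${cold:.2f}, 90% cache hits: ${warm:.2f}")
```

Under these assumed prices, a 90% hit rate brings the input bill for one million tokens from 3.00 USD to roughly 0.57 USD. The remaining 10% of uncached tokens then accounts for more than half of the cost, which is why small prefix instabilities matter.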

The Evolution of Context Engineering for AI Agents: From BERT to LLMs

The road to advanced context engineering parallels advances in NLP:

BERT Era: Models had to be fine-tuned and evaluated before they could transfer to a new task, and each iteration could take weeks, which slowed development and the search for product-market fit.

Rise of LLMs (GPT-3, Flan-T5): The arrival of models capable of in-context learning allowed teams to shift from training custom models to building agents that adapt through context.

Contemporary Practice: Today's best agent frameworks, such as Manus, are built to exploit the power of context manipulation, KV-cache optimization, and modular memory systems.

The core lesson: engineering the context is now at least as crucial as engineering the model itself.

How Context Engineering for AI Agents Works: A Step-by-Step Reveal

Designing for KV-Cache Efficiency

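Manus treats the KV-cache (key-value cache) hit rate as the most important metric for agent performance and cost. Inference engines can reuse cached computation only for a prompt prefix that is identical token for token, so a single changed token invalidates the cache from that point onward. High cache utilization therefore comes from keeping prompt prefixes stable, making context strictly append-only, and serializing context objects (such as tool definitions or state data) deterministically.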

Avoid context-breaking practices like including volatile timestamp data in prompts, or reordering previously serialized actions/observations.
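The three habits above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Manus' implementation: `AppendOnlyContext`, `serialize`, and `prefix_fingerprint` are hypothetical names, and the context is modeled as plain text rather than tokens.

```python
import hashlib
import json


def serialize(obj) -> str:
    """Serialize deterministically: sorted keys and fixed separators."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


class AppendOnlyContext:
    """Context whose earlier parts are never edited or reordered."""

    def __init__(self, system_prompt: str, tools: list[dict]):
        # Stable prefix: no timestamps or other per-request values here.
        self._parts = [system_prompt, serialize(tools)]

    def append(self, event: dict) -> None:
        # New actions and observations only ever go at the end.
        self._parts.append(serialize(event))

    def render(self) -> str:
        return "\n".join(self._parts)


def prefix_fingerprint(text: str) -> str:
    """Short hash for checking in tests or logs that a prefix did not change."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()[:12]


ctx = AppendOnlyContext("You are an agent.", [{"name": "browser_open", "args": ["url"]}])
before = ctx.render()
ctx.append({"role": "observation", "output": "page loaded"})
after = ctx.render()
print(after.startswith(before))  # the old context is an exact prefix of the new one
```

Sorting keys matters because two dictionaries with the same content but different insertion order would otherwise serialize to different strings and silently break the shared prefix.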

Action Space Management: Mask, Don't Remove

Tool "explosion" can make agent action selection error-prone if not managed. Manus found that masking (using logit masking during decoding to hide or require certain actions based on state) is more cache-friendly and robust than dynamically adding/removing tools during an agent loop.

Masking avoids invalidating the cache, reduces confusion, and allows flexible tool management without sacrificing efficiency.
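The idea behind masking can be shown with a deliberately simplified sketch. Real logit masking operates on token-level logits inside the decoder; here, as an assumption for readability, each tool has a single score and the tool names are invented for the example.

```python
import math


def mask_logits(logits: dict[str, float],
                allowed_prefixes: tuple[str, ...]) -> dict[str, float]:
    """Set the score of every tool outside the allowed prefixes to -inf.

    The tool list itself is untouched, so the tool definitions in the
    prompt prefix stay identical from step to step.
    """
    return {
        name: (score if name.startswith(allowed_prefixes) else -math.inf)
        for name, score in logits.items()
    }


def pick_tool(logits: dict[str, float]) -> str:
    """Greedy choice: the tool with the highest remaining score."""
    return max(logits, key=logits.get)


# Hypothetical per-tool scores produced by the model at one decoding step.
scores = {"browser_open": 1.2, "browser_click": 0.4, "shell_exec": 2.5, "file_write": 0.1}

unmasked_choice = pick_tool(scores)
masked_choice = pick_tool(mask_logits(scores, ("browser_",)))
print(unmasked_choice, masked_choice)
```

Without the mask the highest-scoring tool is `shell_exec`; with only `browser_` tools allowed, the choice falls to `browser_open`, and nothing in the prompt had to change to enforce that.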

Using Filesystems as Contextual External Memory

File systems provide virtually unlimited, persistent context externalization. Manus agents learn to read/write files for structured, recoverable memory—e.g., keeping URLs instead of entire web pages or paths instead of full documents.

Because the pointer stays in context, dropped content can be reloaded on demand, so truncation saves cost and fits context limits without losing information permanently.
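A minimal sketch of this pattern, assuming a local working directory and two hypothetical helpers, `offload` and `restore`: the full page goes to disk, and only a small pointer record is kept in the live context.

```python
import hashlib
import tempfile
from pathlib import Path


def offload(workdir: Path, url: str, content: str, preview_chars: int = 80) -> dict:
    """Write content to a file and return a compact pointer for the context."""
    name = hashlib.sha256(url.encode("utf-8")).hexdigest()[:16] + ".txt"
    path = workdir / name
    path.write_text(content, encoding="utf-8")
    return {"url": url, "path": str(path), "preview": content[:preview_chars]}


def restore(pointer: dict) -> str:
    """Reload the full content when the agent needs it again."""
    return Path(pointer["path"]).read_text(encoding="utf-8")


with tempfile.TemporaryDirectory() as tmp:
    page = "Very long page body. " * 500
    pointer = offload(Path(tmp), "https://example.com/report", page)
    restored = restore(pointer)
    pointer_is_smaller = len(str(pointer)) < len(page)
    print(pointer_is_smaller, restored == page)
```

The compression is restorable by construction: the context keeps the URL and the path, so nothing is lost that cannot be read back.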

Leveraging Repetition and Recapitulation

Continually updating a "todo.md" or similar plan file within the agent's context acts as a form of "attention manipulation"—keeping goals front-of-mind and counteracting the natural decay of long-term task objectives in LLM attention.
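As a rough illustration, the recitation step can be as simple as re-rendering the plan and appending it to the end of the context after each action. The function names and the checklist format below are assumptions made for this sketch.

```python
def render_todo(goal: str, steps: list[tuple[str, bool]]) -> str:
    """Render the plan as a markdown checklist."""
    lines = [f"# Goal: {goal}"]
    for text, done in steps:
        lines.append(f"- [{'x' if done else ' '}] {text}")
    return "\n".join(lines)


def recite(context: list[str], goal: str, steps: list[tuple[str, bool]]) -> list[str]:
    """Return a new context with the current plan appended at the end.

    Earlier entries are left untouched, so the existing prefix stays stable.
    """
    return context + [render_todo(goal, steps)]


history = ["system prompt", "action: search for sources"]
plan = [("Collect sources", True), ("Write summary", False)]
updated = recite(history, "Summarize report", plan)
print(updated[-1])
```

Placing the plan last puts the goal into the most recent part of the context, where it is least likely to be lost in a long trajectory.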

Keeping Failures in Context

Instead of erasing failed attempts or errors, keep them in context. When the model can see an action that failed together with the resulting error, it is less likely to repeat the same action and adapts more quickly.

Error recovery is underrepresented in benchmarks but vital to real-world performance.
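One way to picture this is a step runner that records the failure as an observation instead of swallowing it. The record layout and the `run_step` helper are hypothetical; a real agent would pass the resulting context back to the model.

```python
def run_step(context: list[dict], tool, name: str, args: dict) -> None:
    """Run a tool and append both the action and its outcome to the context."""
    context.append({"role": "action", "tool": name, "args": args})
    try:
        result = tool(**args)
        context.append({"role": "observation", "ok": True, "output": str(result)})
    except Exception as exc:
        # Keep the failure visible: the error type and message stay in context.
        context.append({
            "role": "observation",
            "ok": False,
            "error": f"{type(exc).__name__}: {exc}",
        })


def divide(a: float, b: float) -> float:
    """Toy tool used only for this example."""
    return a / b


trace: list[dict] = []
run_step(trace, divide, "divide", {"a": 1, "b": 0})   # fails, and the error is recorded
run_step(trace, divide, "divide", {"a": 6, "b": 3})   # a corrected retry
for entry in trace:
    print(entry)
```

The failed call and its error message remain in the trace alongside the successful retry, which is the evidence the model needs to avoid repeating the mistake.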

Avoiding Overfitting with Few-Shot Patterns

Excessive few-shot prompt engineering can cause agents to mimic unproductive patterns. Instead, Manus adds controlled variability (template changes, slight order shuffling, alternate phrasings) to avoid stagnation and hallucination.
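A small sketch of controlled variability, assuming a handful of invented phrasing templates: each new observation is formatted with one of several equivalent templates before it is appended. Because only new entries vary, text already in the context is not rewritten.

```python
import random

# Hypothetical templates that say the same thing in slightly different ways.
TEMPLATES = [
    "Observation from {tool}: {output}",
    "{tool} returned: {output}",
    "Result ({tool}): {output}",
]


def format_observation(tool: str, output: str, rng: random.Random) -> str:
    """Pick one phrasing for a new observation using the supplied generator."""
    return rng.choice(TEMPLATES).format(tool=tool, output=output)


# A seeded generator keeps runs reproducible while still varying the phrasing.
rng = random.Random(7)
lines = [format_observation("browser_open", "200 OK", rng) for _ in range(5)]
for line in lines:
    print(line)
```

Passing the generator in explicitly, rather than using the global `random` state, makes it possible to replay a run exactly when debugging.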

How to Apply Context Engineering for AI Agents in Real Life

Practical Guidelines:

Optimize for Cache:

Keep prompts and tool definitions stable across sessions

Serialize objects deterministically to avoid subtle context differences

Mark cache breakpoints explicitly when the model provider or inference framework requires it, and change the cached prefix only when absolutely necessary

Mask Tools Instead of Deleting:

Use stateful logit masking during decoding to restrict or enforce action choices

Create consistent action prefixes (e.g., all browser tools start with browser_), making masking easier and debugging simpler

Externalize Long-Term State:

Offload large, infrequently needed data to files, referencing via paths or URLs rather than keeping them in raw context

Exploit Recapitulation and Self-Reference:

Let agents re-articulate goals or plans repeatedly, ensuring alignment remains fresh throughout long tasks

Leave Errors for Learning:

Persist failed actions and exceptions within context to promote self-correction

Vary Patterns to Reduce Overfitting:

Add deliberate variety into prompt sequences and action templates to break monotony and prevent unhelpful imitation

Implementation Example:

A Manus-style agent faced with reviewing 20 résumés would store the review plan and progress in an external file, mask tool choices to only allow relevant actions during each review phase, and record both successful and failed attempts for each résumé. Context is trimmed by offloading details to files, with only summary pointers in the live context—thus optimizing cost and model performance.
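The orchestration side of this example could look roughly like the skeleton below. It is only a sketch under assumptions: the phase names, tool names, and `progress.json` file are invented, and the model calls that would happen inside each phase are omitted.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical mapping from review phase to the tools allowed in that phase.
PHASE_TOOLS = {
    "read": ("file_read",),
    "evaluate": ("notes_write",),
}


def review_resumes(resume_paths: list[str], workdir: Path) -> list[dict]:
    """Walk through each résumé phase by phase and persist progress to a file."""
    progress_file = workdir / "progress.json"
    progress = {"done": [], "remaining": list(resume_paths)}
    context: list[dict] = []
    while progress["remaining"]:
        resume = progress["remaining"].pop(0)
        for phase, allowed in PHASE_TOOLS.items():
            # A real agent would call the model here with only `allowed` unmasked.
            context.append({"resume": resume, "phase": phase, "allowed_tools": list(allowed)})
        progress["done"].append(resume)
        # The plan lives outside the context, so it survives truncation.
        progress_file.write_text(json.dumps(progress, sort_keys=True), encoding="utf-8")
    return context


with tempfile.TemporaryDirectory() as tmp:
    steps = review_resumes(["alice.pdf", "bob.pdf"], Path(tmp))
    saved = json.loads((Path(tmp) / "progress.json").read_text(encoding="utf-8"))
    print(len(steps), saved["done"])
```

Only the résumé path and phase appear in each context entry; the documents themselves would stay on disk and be read when a phase needs them.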

The Future of Context Engineering for AI Agents: Opportunities and Challenges

Opportunities:

Smarter, More Reliable Agents: Enhanced context management lays the foundation for truly "autonomous" agents that act and recover more like humans.

Model-Agnostic Agent Design: Context engineering allows agent architectures to evolve alongside rapid LLM advances without disruptive rewrites.

External Memory Integration: File-based external memory could let architectures such as State Space Models, which struggle with long-range dependencies, work well in agentic settings, echoing the idea behind Neural Turing Machines.

Challenges:

Balancing Context Size and Utility: As context windows grow, managing the trade-off between necessary information and inference cost becomes more complex.

Context Compression Risks: Overzealous context truncation or lossy compression risks deleting critical evidence for agent decision-making.

Human-AI Collaboration: Deciding which errors or failed attempts to expose in agent logs involves privacy, auditability, and trust considerations.

The next wave of breakthroughs will likely come from those who blend context engineering, architectural innovation, and rigorous real-world iteration.

Conclusion: Key Takeaways on Context Engineering for AI Agents

Context engineering is essential for building AI agents that are scalable, cost-effective, and robust. It's not just about prompt tricks, but about holistic interaction design—including external memory, error handling, and action-space control.

Practicality trumps perfection: Experience shows that empirical iteration (what the Manus team calls "Stochastic Graduate Descent") and robust engineering yield more progress than elegant but unproven theory.

Agent memory is everything: Persistent, retrievable context—especially externalized—enables complex, long-running, and resilient behavior.

Failure is valuable: Embrace and record agent failures to accelerate self-correction and learning.

Design for evolution: Shape your context so your agents can ride the wave of LLM improvements, not be stranded when the tide shifts.

As Manus' journey shows, the future of intelligent agents will be built—one context at a time.

Frequently Asked Questions (FAQ) about Context Engineering for AI Agents

What is context engineering in AI agents?

Context engineering is the process of structuring and managing the information an AI agent provides to a language model, enabling effective reasoning, action, and adaptation. It's broader than prompt engineering—covering all aspects of agent-environment interaction.

What are the most common challenges in context engineering for AI agents?

The biggest challenges are managing KV-cache efficiency (for cost and speed), dealing with growing context length, and ensuring errors are leveraged for adaptation rather than hidden.

How does context engineering for AI agents differ from traditional NLP or chatbot design?

Traditional chatbots rely on simple prompt-response cycles and may not require memory beyond short conversations. Advanced AI agents, by contrast, maintain persistent memory, manage large toolsets, and interact with environments using complex context management—including file systems and dynamic action spaces.

How can a developer get started with context engineering for AI agents?

Begin by stabilizing prompt prefixes, implementing append-only context strategies, and externalizing large or persistent state into structured files. Use logit masking for action selection, and design error persistence into your agent workflows.

What's the future direction for context engineering in AI agents?

Future advances include pairing architectures such as State Space Models with external, file-based memory, smarter context compression and recovery, and greater trust through transparent error handling. Agents will become more autonomous, model-agnostic, and capable of tackling ever more complex tasks.