Google’s Gemini Adds Multi-Turn, Multi-Image AI Editing with Nano Banana Upgrade

- Olivia Johnson

- Aug 28, 2025

Introduction to the Google Gemini Nano Banana upgrade and multi-turn, multi-image editing

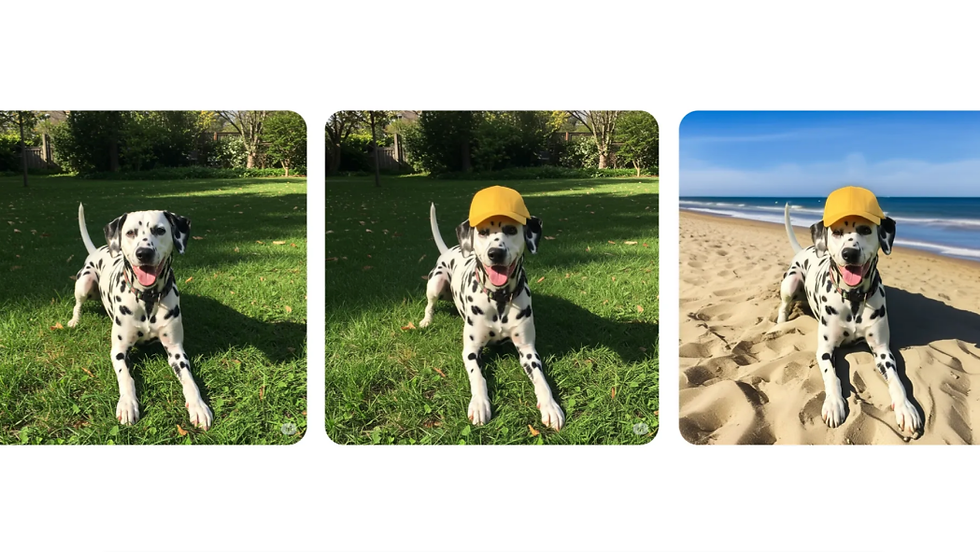

Google’s latest update to its image model, marketed as Gemini 2.5 Flash Image and colloquially dubbed the Nano Banana upgrade, introduces true conversational image editing that spans multiple turns and multiple images. At its heart, this release enables multi-turn image editing (iterative, stateful edits across a conversation) and multi-image editing (coordinated edits applied to several assets in the same session), letting creators request refinements, rollbacks, and cross-image consistency without leaving the chat flow. Google’s Cloud announcement summarizes the Gemini Image 2 roadmap and the MLOps improvements that underlie this change, and early coverage highlights the user-facing Nano Banana improvements and experiments.

Why this matters: creators, product teams, marketers, and researchers all wrestle with iteration costs. Multi-turn image editing gives more granular control by maintaining conversational state, so designers can say “make the shirt red” and then “rotate the pattern slightly” without re-uploading the image or re-specifying the whole scene. Multi-image editing dramatically cuts batch effort: think of consistent ad variants or localized product shots, where the same transformations must be applied across many images. Together, the two capabilities affect iteration speed, cross-asset consistency, and the tightness of the creative feedback loop.

This article walks through the technical foundations, typical user workflows and case studies, analytics and troubleshooting patterns, and the regulatory and ethical considerations teams should weigh when adopting Gemini 2.5 Flash Image and the Nano Banana upgrade. Throughout, you’ll find concrete examples, implementation signals, and practical next steps to help teams test and scale conversational image editing.

Insight: The Nano Banana upgrade shifts image editing from isolated one-off operations to interactive, stateful collaboration between human intent and model-driven transforms.

Key takeaway: Gemini’s multi-turn, multi-image pipeline reduces iteration time and increases control, especially for batch creative and prototyping workflows.

Gemini 2.5 Flash Image features, multi-turn and multi-image editing explained

Gemini 2.5 Flash Image extends Gemini’s image capabilities with conversation-aware editing, enabling users to iteratively refine a composition, apply linked edits across images in a session, and issue semantic commands (add, erase, alter attributes) through natural language and visual references. The Nano Banana upgrade focuses on responsiveness and coherency: lower latency for edit turns, improved consistency when editing the same element across several images, and UI affordances that make versioning and rollback more intuitive. Google’s Vertex AI documentation describes the mask-based editing mechanics that Gemini-style systems rely on, and press coverage of the Nano Banana upgrade highlights its multi-image coherency and performance claims.

User-facing capabilities include:

Iterative refinements across conversational turns (change color, then texture, then lighting).

Simultaneous editing of multiple images with shared transformations (apply a style or object insertion consistently).

Semantic add/erase/manipulate controls that understand high-level instructions (e.g., “remove the background person, replace with a bicycle”).

UI affordances: conversation history, visible version thumbnails, drag-to-link edits across images, and mask preview overlays.

Performance claims and practical limits: Gemini 2.5 Flash Image is engineered to reduce round-trip latency for short edits and to handle the image sizes common in web and mobile creative work. However, very high-resolution raw assets and extremely large batches are still slow to process, and ultra-fine pixel edits remain less precise than manual tools. Google’s Cloud announcement discusses the MLOps and deployment improvements that help lower latency and scale inference.

Insight: the interface matters as much as the model — better versioning, previewing, and link controls multiply model utility.

What Gemini 2.5 Flash Image delivers to users

Gemini 2.5 Flash Image supports sequences such as:

Refine: “Make the sofa teal,” then “increase cushion shadow detail,” then “slightly flatten the fabric texture.”

Rollback: request a prior version by saying “undo the last two changes.”

Blend: combine elements from two images, such as “place the hat from image 2 onto the model in image 1 and match color temperature.”

Multi-image sessions are initiated by uploading multiple images into a single conversation or by referencing earlier images with names (e.g., “apply to all product shots in this conversation”). The system tracks identifiers for each image and allows linking operations so that a mask or reference from one image can be reused as a template across others.
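To make that bookkeeping concrete, here is a minimal sketch of how a client application might track image identifiers and reuse a mask across every image that shares a tag. `EditSession`, `SessionImage`, and the tag-based targeting are hypothetical names for illustration; they are not part of any Gemini API.

```python
from dataclasses import dataclass, field


@dataclass
class SessionImage:
    """One uploaded image in a (hypothetical) multi-image editing session."""
    image_id: str
    tags: set[str] = field(default_factory=set)
    linked_masks: list[str] = field(default_factory=list)


class EditSession:
    """Tracks image identifiers and which masks are linked to which images."""

    def __init__(self) -> None:
        self.images: dict[str, SessionImage] = {}
        self.mask_sources: dict[str, str] = {}  # mask_id -> image it was drawn on

    def upload(self, image_id: str, *tags: str) -> None:
        self.images[image_id] = SessionImage(image_id, set(tags))

    def create_mask(self, mask_id: str, source_image: str) -> None:
        if source_image not in self.images:
            raise KeyError(f"unknown image {source_image!r}")
        self.mask_sources[mask_id] = source_image
        self.images[source_image].linked_masks.append(mask_id)

    def link_mask(self, mask_id: str, tag: str) -> list[str]:
        """Reuse a mask as a template on every other image carrying `tag`."""
        source = self.mask_sources[mask_id]
        targets = [
            img.image_id
            for img in self.images.values()
            if tag in img.tags and img.image_id != source
        ]
        for image_id in targets:
            self.images[image_id].linked_masks.append(mask_id)
        return targets


session = EditSession()
for i in range(1, 4):
    session.upload(f"shot_{i}", "product")
session.upload("banner", "hero")
session.create_mask("product_area", "shot_1")
print(session.link_mask("product_area", "product"))  # ['shot_2', 'shot_3']
```

Tags stand in for references like “all product shots in this conversation”; the mask's source image is skipped because it already owns the mask.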

Key takeaway: Multi-turn sequences allow designers to iterate naturally — submit a high-level intent, evaluate a proposed edit, and refine it conversationally without losing context.

Nano Banana upgrade highlights and benchmarks

The Nano Banana upgrade called out improvements in:

Quality: better object grounding and fewer unnatural artifacts in iterative edits.

Speed: reduced per-turn latency through inference optimizations and caching of intermediate latent states.

Multi-image coherency: improved color, geometry, and attribute alignment when applying the same edit across multiple assets.

Benchmarks used in early reports include perceptual similarity measures across edit sequences and latency averages for small (512×512) to medium (2048×2048) images. Real-world scenarios to test:

A/B creative generation: measure time-to-first-acceptable-variant.

Batch style application: measure inter-image color consistency metrics.

Iterative retouch: measure number of turns to reach designer approval versus traditional tools.
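For the batch-style scenario, one simple way to put a number on inter-image color consistency is to compare each edited image's average color. The sketch below reports the largest pairwise distance between per-image mean RGB values; it is an illustrative metric of our own choosing, not the measure used in the early reports, and a more careful evaluation would work in a perceptual color space such as CIELAB.

```python
import itertools
import math

Pixel = tuple[int, int, int]  # (R, G, B), 0-255


def mean_color(pixels: list[Pixel]) -> tuple[float, ...]:
    """Average R, G and B over all pixels of one image."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def color_spread(images: list[list[Pixel]]) -> float:
    """Largest distance between any two images' mean colors (0.0 = identical averages)."""
    means = [mean_color(img) for img in images]
    return max(
        (math.dist(a, b) for a, b in itertools.combinations(means, 2)),
        default=0.0,
    )


red_a = [(200, 0, 0)] * 4
red_b = [(190, 10, 0)] * 4
print(round(color_spread([red_a, red_b]), 2))  # 14.14
```

Lower is better; tracking this value before and after a linked edit shows whether the batch converged on a shared palette.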

Key takeaway: Benchmarks matter, but evaluate using your own creative acceptance criteria: perceived quality, iteration count, and time savings.

UX changes for iterative image editing

To support conversational flows, Gemini’s UI introduces:

Conversation history pane with thumbnail snapshots for each turn.

Versioning controls (label, compare, revert) and timeline scrubber for edits.

Mask editing tools and alignment guides for linking edits across images.

A “link edits” toggle that applies the same semantic operation to selected images.

These UX changes help preserve edit intent and make undo/redo meaningful in a multi-turn context. For example, users can create a mask over a product and then link that mask across five photos to apply an identical color change while adjusting lighting per image.
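Undo/redo that stays meaningful across many turns implies a version tree rather than a flat list. A minimal sketch, assuming each edit records the version it was applied to, so that reverting and then editing starts a new branch instead of discarding history; the class and method names are hypothetical, and `undo(2)` models a request such as “undo the last two changes.”

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Version:
    number: int
    instruction: str
    parent: int | None          # None only for the original upload
    label: str | None = None


class VersionTimeline:
    """Edit history as a tree: reverting and then editing starts a new branch."""

    def __init__(self) -> None:
        self.versions = [Version(0, "original upload", None)]
        self.current = 0

    def apply(self, instruction: str) -> int:
        number = len(self.versions)
        self.versions.append(Version(number, instruction, self.current))
        self.current = number
        return number

    def undo(self, steps: int = 1) -> int:
        """Walk back along the current branch, stopping at the original upload."""
        for _ in range(steps):
            parent = self.versions[self.current].parent
            if parent is None:
                break
            self.current = parent
        return self.current

    def revert_to(self, number: int) -> None:
        self.current = number

    def label(self, number: int, name: str) -> None:
        self.versions[number].label = name

    def lineage(self, number: int) -> list[int]:
        """Version numbers from the original upload down to `number`."""
        chain: list[int] = []
        node: int | None = number
        while node is not None:
            chain.append(node)
            node = self.versions[node].parent
        return chain[::-1]

    def compare(self, a: int, b: int) -> list[str]:
        """Instructions in version b's history that version a does not have."""
        in_a = set(self.lineage(a))
        return [self.versions[n].instruction for n in self.lineage(b) if n not in in_a]


timeline = VersionTimeline()
timeline.apply("make the sofa teal")
timeline.apply("increase cushion shadow detail")
timeline.apply("slightly flatten the fabric texture")
timeline.undo(2)                 # back to version 1
print(timeline.compare(1, 3))    # the two undone instructions
```

Keeping undone versions in the tree is what lets a compare view show them side by side with the current branch.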

Key takeaway: Good UX prevents context drift by surfacing intent and making edit scope explicit.

Practical limits and best use cases

Where Gemini excels:

Creative design, moodboard exploration, and rapid prototyping.

Batch creative tasks like marketing variants, product mockups, and localized ads.

Early-stage concept art where speed and ideation matter more than pixel-perfect realism.

Where it may struggle:

Extremely fine-grained photorealism that demands complex manual retouching.

Very large batches requiring deterministic, pixel-accurate transformations across thousands of images.

Cases where strict provenance and legal attribution require complex audit trails absent from basic interfaces.

Key takeaway: Use Gemini 2.5 Flash Image for idea generation, consistent styling across moderate batches, and workflows where human-in-the-loop validation is acceptable.

How multi-turn image editing works in Gemini, technical overview

At a high level, multi-turn image editing in Gemini coordinates three pieces: a conversational state that stores prior prompts and versions, an edit-intent parser that maps language to editing operators, and a stateful image representation that can be transformed and re-rendered across turns. The system must persist masks, latent representations, and alignment metadata between turns so edits remain consistent and composable. Vertex AI’s image-edit overview explains mask-aware editing and the importance of stateful handling for repeatable edits, and academic work on semantic image editing provides background for the operations Gemini performs.

Insight: multi-turn editing is an orchestration problem as much as a modeling problem — storing and applying intent reliably across turns is the core technical challenge.

Conversation state, prompts and edit intents

Gemini maps natural language prompts to discrete edit intents such as insert object, erase element, change attribute, or spatial transform. The conversation state holds the sequence of intents, associated masks, and metadata (e.g., which image(s) were targeted, user approvals, and version labels). When a user issues “remove the lamp and brighten the corner,” the intent parser splits and prioritizes operations, generates or refines masks, and schedules the transforms to avoid conflicting edits.

Practical implementation details:

Intent parsing uses language understanding modules trained to recognize editing verbs and object references.

Confidence scores guide when the system should ask a clarifying question (e.g., “Do you mean the lamp on the left or the floor lamp?”).

State persistence caches intermediate latent representations to speed up subsequent render steps.

Actionable takeaway: Structure prompts clearly (verb → target → attribute) to reduce clarification loops. For example, “erase the lamp on the left; brighten the upper-left corner by 20%” yields faster, more accurate edits.
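The verb → target → attribute structure and the confidence-gated clarification step can be illustrated with a deliberately tiny rule-based parser. Production systems use learned language-understanding models rather than keyword tables; the verb table, the list of ambiguous nouns, the confidence penalties, and the 0.7 threshold below are all hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

# Hypothetical keyword table mapping editing verbs to intent types.
VERBS = {
    "erase": "erase", "remove": "erase", "delete": "erase",
    "add": "insert", "insert": "insert", "place": "insert",
    "make": "change_attribute", "brighten": "change_attribute", "darken": "change_attribute",
    "rotate": "spatial_transform", "move": "spatial_transform", "scale": "spatial_transform",
}
# Hypothetical: nouns that match more than one object in the current scene.
AMBIGUOUS_NOUNS = {"lamp", "person", "chair"}
LOCATORS = {"left", "right", "upper", "lower", "top", "bottom", "center", "background", "foreground"}
CLARIFY_BELOW = 0.7  # hypothetical confidence threshold


@dataclass
class EditIntent:
    operation: str
    target: str
    attribute: str | None
    confidence: float

    @property
    def needs_clarification(self) -> bool:
        return self.confidence < CLARIFY_BELOW


def parse_instruction(text: str) -> list[EditIntent]:
    """Split a request into clauses and map each to verb -> target -> attribute."""
    intents = []
    # Naive clause split on ";" or the word "and" (it would also split "black and white").
    for clause in re.split(r";|\band\b", text):
        words = clause.strip().split()
        if not words:
            continue
        operation = VERBS.get(words[0].lower(), "unknown")
        rest = " ".join(words[1:])
        amount = re.search(r"\bby\s+(\d+%)", rest)
        attribute = amount.group(1) if amount else None
        target = rest[: amount.start()].strip() if amount else rest
        tokens = set(re.findall(r"[a-z]+", target.lower()))
        confidence = 1.0
        if operation == "unknown":
            confidence -= 0.5
        if tokens & AMBIGUOUS_NOUNS and not tokens & LOCATORS:
            confidence -= 0.4  # e.g. "the lamp" when the scene has several lamps
        intents.append(EditIntent(operation, target, attribute, confidence))
    return intents


for intent in parse_instruction("remove the lamp and brighten the corner"):
    print(intent.operation, "|", intent.target, "| clarify:", intent.needs_clarification)
```

On “remove the lamp and brighten the corner,” the first clause falls below the threshold because “the lamp” has no locator, which is where a system would ask something like “Do you mean the lamp on the left or the floor lamp?” The structured version from the takeaway parses into two intents without a clarification.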

Semantic image editing building blocks

The main building blocks for semantic editing are:

Segmentation and mask generation: identify pixel regions for targeted changes.

Mask-aware synthesis: inpainting or compositing conditioned on the preserved surroundings.

Attribute control: disentangled controls for color, texture, lighting, and geometry so each attribute can be adjusted without unintended changes.

These operations depend on robust segmentation models and latent-space editors that can alter attributes without degrading unrelated content. Academic literature on semantic editing techniques describes mask-guided inpainting and disentangled attribute control that underpin commercial systems.
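The “conditioned on the preserved surroundings” part of mask-aware synthesis ends in a per-pixel blend, out = m · edited + (1 - m) · original, where m is the mask value. The sketch shows only that final compositing step with a soft (feathered) mask; the generative inpainting that produces `edited` is out of scope, and grayscale nested lists are used purely to keep the example self-contained. Real pipelines apply the same idea to arrays in pixel or latent space.

```python
Grid = list[list[float]]  # grayscale image or mask, stored row by row


def composite(original: Grid, edited: Grid, mask: Grid) -> Grid:
    """Blend generated content into the original using a soft mask.

    A mask value of 1 takes the edited pixel, 0 keeps the original,
    and values in between feather the boundary between the two.
    """
    if not (len(original) == len(edited) == len(mask)):
        raise ValueError("original, edited and mask must have the same height")
    out = []
    for row_o, row_e, row_m in zip(original, edited, mask):
        if not (len(row_o) == len(row_e) == len(row_m)):
            raise ValueError("original, edited and mask must have the same width")
        out.append([m * e + (1.0 - m) * o for o, e, m in zip(row_o, row_e, row_m)])
    return out


original = [[10, 10, 10], [10, 10, 10]]
edited = [[200, 200, 200], [200, 200, 200]]
mask = [[0.0, 0.5, 1.0], [0.0, 0.5, 1.0]]  # feathered edge from left to right
print(composite(original, edited, mask))
```

Pixels outside the mask come back unchanged, which is exactly the property that keeps unrelated content from degrading across turns.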

Actionable takeaway: Provide high-quality reference images and explicit mask hints when possible — the model performs best with clear spatial constraints.

Multi-image pipelines and synchronization

To coordinate edits across images, Gemini uses shared templates, linked masks, and cross-image conditioning. Techniques include:

Shared masks: reuse a mask created in one image as a spatial template in others, with automatic alignment adjustments.

Reference frames: anchor edits to a canonical pose or object and transform coordinates to each image’s geometry.

Latent conditioning: store a style or attribute vector (color profile, texture logits) and apply it consistently across assets.

These patterns help maintain cross-image coherency for attributes like color temperature, object placement, and style. The system also supports exceptions: apply a linked edit to five images but exclude one with a single toggle.
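Reusing a shared mask “with automatic alignment adjustments” can be approximated by expressing the mask relative to an anchor object in the canonical image and re-projecting it onto the same object in each target image. This sketch assumes anchor boxes come from some object detector and handles only scale and translation (no rotation or perspective); `Box`, `transfer_mask`, and `apply_linked_mask` are illustrative names, and the `excluded` argument mirrors the single-toggle exclusion described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    x: float  # left edge, pixels
    y: float  # top edge, pixels
    w: float
    h: float


def transfer_mask(mask: Box, ref_anchor: Box, target_anchor: Box) -> Box:
    """Map a mask from the reference image onto the same object in a target image.

    The mask is expressed relative to the anchor's box in the reference image
    and re-projected onto the anchor's box in the target image: a
    scale-plus-translation transform with no rotation or perspective.
    """
    sx = target_anchor.w / ref_anchor.w
    sy = target_anchor.h / ref_anchor.h
    return Box(
        x=target_anchor.x + (mask.x - ref_anchor.x) * sx,
        y=target_anchor.y + (mask.y - ref_anchor.y) * sy,
        w=mask.w * sx,
        h=mask.h * sy,
    )


def apply_linked_mask(
    mask: Box,
    ref_anchor: Box,
    anchors: dict[str, Box],
    excluded: frozenset[str] = frozenset(),
) -> dict[str, Box]:
    """Transfer one canonical mask to every linked image except the excluded ones."""
    return {
        image_id: transfer_mask(mask, ref_anchor, anchor)
        for image_id, anchor in anchors.items()
        if image_id not in excluded
    }


canonical_mask = Box(150, 120, 100, 50)      # drawn on the reference shot
reference_anchor = Box(100, 100, 200, 200)   # detected product in the reference shot
anchors = {
    "shot_2": Box(50, 60, 100, 100),
    "shot_3": Box(300, 80, 400, 400),
    "shot_4": Box(0, 0, 200, 200),
}
masks = apply_linked_mask(canonical_mask, reference_anchor, anchors, excluded=frozenset({"shot_4"}))
print(masks)
```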

Actionable takeaway: When planning batch edits, create a canonical reference—one image that defines masks and style—and link others to that reference for best consistency.

Prompt engineering and control knobs for stable outputs

Best prompt practices for multi-turn sessions:

Be explicit: separate actions (erase/insert), targets (object/location), and attributes (color/size).

Use reference anchors: “match the blue from image 3” to tie edits to existing assets.

Include constraints: “keep shadows consistent” or “preserve face details” to preserve delicate regions.

Use short verification turns: after a major edit, ask the model to summarize the applied operations to validate intent.

Commonly available control knobs include temperature-style sampling settings, size/quality trade-offs, and a “strict adherence” flag that prioritizes deterministic transforms over creative variation.
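Prompt discipline is easier to enforce when prompts are rendered from a fixed template rather than typed ad hoc. A minimal sketch, assuming a team-defined layout of action, target, and attributes followed by semicolon-separated anchors and constraints; the class, its fields, and the verification wording are our own conventions, not a format Gemini requires. Sampling knobs are left out because their names depend on the interface being used.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class EditRequest:
    action: str                                   # e.g. "erase", "recolor", "make"
    target: str                                   # object or location
    attributes: list[str] = field(default_factory=list)
    anchor: str | None = None                     # reference to an existing asset
    constraints: list[str] = field(default_factory=list)

    def to_prompt(self) -> str:
        head = f"{self.action} {self.target}"
        if self.attributes:
            head += " " + ", ".join(self.attributes)
        parts = [head]
        if self.anchor:
            parts.append(f"match {self.anchor}")
        parts.extend(self.constraints)
        return "; ".join(parts)


# A short verification turn to send after a major edit (team convention).
VERIFICATION_TURN = "List the edit operations you applied in your last response."

request = EditRequest(
    "recolor", "the sofa",
    anchor="the blue from image 3",
    constraints=["keep shadows consistent", "preserve fabric texture"],
)
print(request.to_prompt())
# recolor the sofa; match the blue from image 3; keep shadows consistent; preserve fabric texture
```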

Key takeaway: Prompt discipline reduces context drift and speeds convergence to desired results.

Multi-agent systems and multimodal models behind Gemini image editing

Modern conversational image editing is frequently implemented as a coordinated set of specialized agents rather than a single monolithic model. Multi-agent systems distribute tasks to agents that handle intent parsing, mask generation, layout planning, and final rendering, then reconcile outputs into a single edit. This orchestration pattern improves modularity and enables targeted scaling: each agent can be optimized, validated, and updated independently. Academic work on integrating vision, graphics, and NLP provides patterns for this orchestration and shows how agents can exchange structured representations to maintain coherence.

Insight: decomposing the pipeline into agents reduces the cognitive load on a single model and enables tighter control and auditing of each editing stage.

Multi-agent orchestration for interactive editing

Typical agent roles include:

Intent agent: parses natural language into structured operations.

Perception agent: performs segmentation and object detection to create masks and identify key assets.

Layout agent: plans spatial arrangements, resolves occlusions, and proposes transformations.

Rendering agent: performs mask-aware synthesis, lighting consistency, and texture blending.

Verification agent: checks for artifacts, policy compliance, or unmet constraints.

Coordination protocols are lightweight: agents exchange JSON-like structures (e.g., {operation: "insert", target: "hat", mask_id: 12, style_vector: X}) and use confidence scores to request clarifications. This architecture also makes it easier to add human-in-the-loop checkpoints where a reviewer can approve or modify an intermediate mask.
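The orchestration pattern can be sketched with plain functions that each take and return a JSON-like dictionary. Every agent below is a stub returning canned values (a real system would call segmentation, layout, and generation models); the field names follow the example structure above, and the 0.8 confidence threshold is an arbitrary assumption.

```python
from typing import Any, Callable

Message = dict[str, Any]                 # JSON-like payload passed between agents
Agent = Callable[[Message], Message]


# Each agent is a stub returning canned values; real ones would call models.
def intent_agent(msg: Message) -> Message:
    return {**msg, "operation": "insert", "target": "hat", "confidence": 0.92}


def perception_agent(msg: Message) -> Message:
    return {**msg, "mask_id": 12}


def layout_agent(msg: Message) -> Message:
    return {**msg, "placement": {"anchor": "head", "scale": 1.0}}


def rendering_agent(msg: Message) -> Message:
    return {**msg, "output": f"{msg['operation']} {msg['target']} at mask {msg['mask_id']}"}


def verification_agent(msg: Message) -> Message:
    return {**msg, "checks_passed": "output" in msg}


def run_pipeline(msg: Message, agents: list[Agent], min_confidence: float = 0.8):
    """Run agents in order, stopping to ask the user when a stage is unsure.

    Every intermediate message is kept in an audit log so a reviewer can
    inspect (or override) each stage, e.g. approve a mask before rendering.
    """
    audit_log: list[tuple[str, Message]] = []
    for agent in agents:
        msg = agent(msg)
        audit_log.append((agent.__name__, msg))
        if msg.get("confidence", 1.0) < min_confidence:
            return {**msg, "status": "needs_clarification"}, audit_log
    return {**msg, "status": "done"}, audit_log


AGENTS = [intent_agent, perception_agent, layout_agent, rendering_agent, verification_agent]
result, log = run_pipeline({"request": "place the hat from image 2 onto the model in image 1"}, AGENTS)
print(result["status"], [name for name, _ in log])
```

Because each stage only reads and extends the message, an agent can be swapped or retrained without touching the others, and the audit log doubles as the checkpoint record.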

Actionable takeaway: For production deployments, design agent interfaces so each stage can emit interpretable logs and checkpoints for auditing.

Vision, graphics, and NLP integration patterns

Bridging free-form instructions to pixel edits involves several intermediate representations:

Scene graphs or object lists that capture semantic relationships (object A sits on object B).

Spatial grids or keypoints for geometric alignment.

Latent style vectors for color and texture control.

From an engineering standpoint, these representations let deterministic transforms (e.g., scale this object by 10%) be combined with generative synthesis without rerunning the entire rendering pipeline. Research on integrating graphics and NLP demonstrates how mapping language to discrete scene edits reduces ambiguity and improves repeatability.

Actionable takeaway: Use structured prompts that implicitly or explicitly build a scene graph (e.g., “place the red mug to the right of the laptop, slightly rotated toward the camera”).
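A scene-graph representation of that example might look like the sketch below: objects carry explicit geometry, relations are (subject, predicate, object) triples, and “to the right of” or “scale by 10%” become exact updates rather than regenerated guesses. Normalized coordinates, the default gap, and reading “slightly rotated” as 15 degrees are assumptions for illustration; the renderer that would consume this graph is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SceneObject:
    name: str
    x: float                    # center, normalized image coordinates (0 to 1)
    y: float
    scale: float = 1.0
    rotation_deg: float = 0.0   # how a rotation is rendered is left to the renderer
    color: str | None = None


@dataclass
class SceneGraph:
    objects: dict[str, SceneObject] = field(default_factory=dict)
    relations: list[tuple[str, str, str]] = field(default_factory=list)

    def add(self, obj: SceneObject) -> None:
        self.objects[obj.name] = obj

    def place_right_of(self, name: str, anchor: str, gap: float = 0.15) -> None:
        a, obj = self.objects[anchor], self.objects[name]
        obj.x, obj.y = a.x + gap, a.y
        self.relations.append((name, "right_of", anchor))

    def scale_by(self, name: str, percent: float) -> None:
        """'Scale this object by 10%' multiplies its scale by 1.10."""
        self.objects[name].scale *= 1 + percent / 100

    def rotate(self, name: str, degrees: float) -> None:
        self.objects[name].rotation_deg += degrees


scene = SceneGraph()
scene.add(SceneObject("laptop", x=0.40, y=0.60))
scene.add(SceneObject("mug", x=0.10, y=0.20, color="red"))
# "place the red mug to the right of the laptop, slightly rotated toward the camera"
scene.place_right_of("mug", "laptop")
scene.rotate("mug", 15)       # "slightly": 15 degrees is an arbitrary choice
scene.scale_by("mug", 10)     # a follow-up turn: "make the mug 10% bigger"
print(scene.relations)        # [('mug', 'right_of', 'laptop')]
```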

Advances in multimodal VLMs and their impact

Progress in multimodal vision-language models (VLMs) drives improvements in object grounding, commonsense layout, and cross-image coherency. Better grounding reduces hallucinated details, and models that handle both language and pixels can reason about edits (e.g., “make the lamp smaller so it fits the table”) with improved spatial awareness. These capabilities amplify the power of multi-turn editing by reducing misinterpretation across conversational turns.

Key takeaway: Improvements in VLMs translate directly into fewer clarification cycles and more reliable multi-image consistency.

MLOps and scaling considerations for production use

Production systems must address deployment, latency, and continuous improvement:

Model deployment patterns: use segmented agents and autoscaling for high-reliability inference.

Latency minimization: cache intermediate latent representations and use model distillation for faster turn responses.

Continuous improvement cycles: collect labeled feedback (accept/reject scores) and retrain intent parsers to reduce clarification frequency.
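Caching intermediate latent representations can be as simple as a least-recently-used map keyed by image and version, so that a follow-up turn on an unchanged version skips the expensive encoding pass. A minimal sketch under that assumption; which intermediate state a given model can reuse is not specified by the source, so the cached value is treated as opaque.

```python
from collections import OrderedDict
from collections.abc import Callable, Hashable
from typing import Any


class LatentCache:
    """Least-recently-used cache for intermediate representations.

    Keys are typically (image_id, version) pairs; values are whatever the
    encoder produced, treated here as opaque.
    """

    def __init__(self, capacity: int = 64) -> None:
        self.capacity = capacity
        self._store: OrderedDict = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        if key in self._store:
            self._store.move_to_end(key)      # mark as most recently used
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = compute()                     # e.g. an expensive encoder pass
        self._store[key] = value
        if len(self._store) > self.capacity:
            self._store.popitem(last=False)   # evict the least recently used entry
        return value


cache = LatentCache(capacity=2)
cache.get_or_compute(("shot_1", 3), lambda: "latent for shot_1 v3")
```

Keying on the version number means earlier entries stay valid for rollbacks instead of being overwritten, and the hit/miss counters feed directly into the per-turn latency telemetry.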

Actionable takeaway: Run small pilots with telemetry on turn counts, clarification rates, and per-turn latency to prioritize optimization efforts.

Practical applications and case studies for Gemini multi-image editing in creative workflows

Gemini’s multi-turn and multi-image editing maps cleanly to many creative and commercial workflows. Artists can iterate on concepts quickly, marketing teams can generate localized creative variants, ecommerce teams can produce consistent product images, and researchers can visualize hypotheses without investing in heavy manual retouching. Early press coverage and community experiments illustrate how the Nano Banana upgrade improves batch consistency and iteration velocity.

Insight: multi-image editing converts repetitive manual work into a high-leverage creative process.

Creative artists and AI art workflows

A typical artist workflow:

1. Generate several base compositions from prompts.

2. Pick a concept and request iterative edits to refine pose, lighting, and style.

3. Use linked edits to apply a successful color palette or pattern across the whole series for a cohesive body of work.

Example: an illustrator generates 6 concept images, selects one and asks Gemini to “swap the jacket color to deep mustard, increase fabric texture, and add stitched seams,” then applies the same style to the other five images with a single command. This reduces the repetitive tuning artists often perform.

Actionable takeaway: Use multi-turn editing to move from ideation to a consistent series in fewer than half the manual editing steps.

Commercial content generation and marketing assets

Marketing teams can:

Produce A/B variants: tweak headlines, background colors, or product placement across dozens of images in one session.

Localize creatives: change signage language or color palettes to match regional tastes while preserving brand composition.

Produce consistent product mockups: place the same product in multiple scenes with matched lighting and style.

Case scenario: a retail brand uploads ten product shots, creates a mask for the product area in one image, and links the mask to others to change the fabric pattern consistently for a seasonal campaign.

Actionable takeaway: Create canonical templates early in the workflow (masked reference images and a style vector) to speed multi-image batch edits.

Research, education and prototyping scenarios

Researchers and educators use multi-turn editing for:

Visualization of models and experiment results by iteratively refining plotted elements.

Classroom demos showing progressive changes (e.g., how color grading affects mood).

Rapid prototyping of UI/UX concepts by testing multiple look-and-feel variants in a single conversation.

Actionable takeaway: Use multi-turn editing as a teaching tool to demonstrate causal changes (change one attribute at a time and document outcomes).

Community case snapshots and press highlights

Early adopters and press highlight:

Faster iteration cycles and reduced need for manual compositing.

Improved cross-image coherency in batch use cases as demonstrated in Nano Banana previews.

Community-shared prompt templates for common operations, accelerating onboarding for new users.

Key takeaway: The greatest immediate ROI is in workflows that previously required repeated manual adjustments across multiple assets.

User experience, analytics, community troubleshooting and best practices

Adoption of multi-turn, multi-image editing depends on discoverability, stable UX patterns, and robust community support. Understanding how users behave and the common failure modes helps teams design better onboarding and troubleshooting instructions. While public analytics dashboards vary, common signals reported by early deployments include high engagement in first sessions and drop-off at points of model confusion (clarification loops).

Insight: User education on prompt structure and session management cuts friction dramatically.

Insights from user behavior analytics

Typical metrics to monitor:

Time to first successful edit: how long it takes a user to get a usable result.

Turn count to convergence: average number of conversational turns to reach a final version.

Retention across sessions: whether users come back to the multi-image editor or revert to traditional tools.

Actionable takeaway: Instrument the interface to capture turn-level accept/reject signals and collect annotated examples for retraining.
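If the interface logs one event per turn, the first two metrics fall out of a short aggregation. The `TurnEvent` schema below is hypothetical; retention would additionally need stable user identifiers across sessions and is left out.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TurnEvent:
    session_id: str
    turn: int            # 1-based turn number within the session
    elapsed_s: float     # seconds since the session started
    accepted: bool       # the user kept this edit
    final: bool = False  # the user marked this version as final


def session_metrics(events: list[TurnEvent]) -> dict[str, float]:
    sessions: dict[str, list[TurnEvent]] = {}
    for event in events:
        sessions.setdefault(event.session_id, []).append(event)

    first_success_s, turns_to_final = [], []
    for session_events in sessions.values():
        session_events.sort(key=lambda e: e.turn)
        accepted = [e for e in session_events if e.accepted]
        if accepted:
            first_success_s.append(accepted[0].elapsed_s)
        finals = [e for e in session_events if e.final]
        if finals:
            turns_to_final.append(finals[0].turn)

    nan = float("nan")
    return {
        "mean_time_to_first_success_s": mean(first_success_s) if first_success_s else nan,
        "mean_turns_to_convergence": mean(turns_to_final) if turns_to_final else nan,
        "convergence_rate": len(turns_to_final) / len(sessions) if sessions else nan,
    }


events = [
    TurnEvent("s1", 1, 12.0, accepted=False),
    TurnEvent("s1", 2, 41.0, accepted=True),
    TurnEvent("s1", 3, 75.0, accepted=True, final=True),
    TurnEvent("s2", 1, 19.0, accepted=True),
]
print(session_metrics(events))
```

Sessions that never reach a final version lower the convergence rate rather than disappearing from the average, which makes clarification-loop drop-off visible.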

Common problems and fixes for multi-turn sessions

Reported failure modes and fixes:

Mask mismatch: when an auto-generated mask misses parts of an object. Fix: manually refine the mask or provide a bounding-reference (“include the lamp base”).

Context drift: the model forgets earlier constraints during long sessions. Fix: restate critical constraints or use named references (“stick with ‘StudioStyle1’ for color”).

Inconsistent color palettes across images: caused by separate rendering passes. Fix: create and apply a shared style vector or reference image.
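A shared reference can also be enforced after rendering when separate passes drift apart in color. A minimal sketch, assuming RGB pixels as tuples: it shifts each channel of an image so its mean matches the reference image's mean. This mean-shift color transfer is a deliberately simple, swapped-in technique, not how Gemini applies style vectors internally; it removes a global color cast but not local lighting differences.

```python
Pixel = tuple[float, float, float]  # (R, G, B), 0-255


def channel_means(pixels: list[Pixel]) -> list[float]:
    n = len(pixels)
    return [sum(p[c] for p in pixels) / n for c in range(3)]


def match_mean_color(pixels: list[Pixel], reference: list[Pixel]) -> list[Pixel]:
    """Shift each channel so the image's average color equals the reference's.

    Mean-only color transfer: it removes a global color cast but does not
    match contrast or local lighting. Results are clamped to 0-255.
    """
    shift = [r - s for r, s in zip(channel_means(reference), channel_means(pixels))]
    return [
        tuple(min(255.0, max(0.0, p[c] + shift[c])) for c in range(3))
        for p in pixels
    ]


studio_reference = [(130, 60, 40)]
drifted_render = [(100, 50, 50), (120, 70, 50)]
print(match_mean_color(drifted_render, studio_reference))
```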

Actionable takeaway: Encourage users to save checkpoints frequently and label versions to reduce backtracking costs.

Community tutorials, templates and shared prompts

Communities are publishing templates for common tasks:

Product mockup templates with reusable masks and a short prompt pattern.

A/B generation prompt packs that specify variations to test (color, background, headline).

Troubleshooting checklists for common artifacts.

These community assets reduce onboarding time and make outcomes more predictable.

Actionable takeaway: Maintain an internal prompt library tailored to your brand’s needs and share it with designers and marketers.

Best practices for reliable iterative editing

Operational recommendations:

Session management: limit session length for single conversational threads and checkpoint after major edits.

Checkpointing: export intermediate versions and log the applied intents for auditability.

Reference usage: always upload a master reference image for style or attribute transfers.

Human review gates: for production assets, require a human approval step before publishing.

Key takeaway: Treat multi-turn sessions as stateful transactions—save, label, and audit each meaningful milestone.

Regulatory, ethical and copyright considerations for Gemini generated images

As AI-generated imagery becomes routine, legal and ethical guardrails are essential. The U.S. Copyright Office and other authorities are actively clarifying how AI-assisted creation intersects with authorship and copyrightability, and the Copyright Office’s guidance on AI-generated content outlines the current legal baseline for attribution and human authorship. Organizations must also address provenance, consent for source imagery, and safeguards against misuse.

Insight: responsible use requires provenance and human oversight baked into workflows, not added later.

US Copyright Office guidance and legal baseline

Key takeaways from official guidance:

Human authorship matters: purely machine-generated works without creative human input typically face copyright eligibility issues.

AI-assisted works can be copyrightable when a human makes creative choices that go beyond routine operation.

Organizations should document the role humans played in creation to support claims of authorship.

Actionable takeaway: Log human prompts, approval steps, and edits to build an audit trail supporting authorship claims.

Attribution, provenance and consent best practices

Practical steps:

Store provenance metadata: original image source, prompt history, masks used, and user approvals.

Obtain rights for source images and record consent for any identifiable people appearing in inputs.

Use visible or embedded attribution labels where required by policy or platform rules.

Actionable takeaway: Implement automated metadata capture at upload time and retain it through edit turns.
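Capturing metadata at upload time and carrying it through every edit turn can be sketched as a single record that is serialized alongside the asset. The field names are hypothetical; hashing the uploaded bytes with SHA-256 gives a stable identifier for the exact source file, and each turn appends the prompt, mask IDs, and approver.

```python
from __future__ import annotations

import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ProvenanceRecord:
    source_uri: str
    source_sha256: str
    rights_holder: str
    consent_obtained: bool              # consent for identifiable people in the input
    turns: list[dict] = field(default_factory=list)

    @classmethod
    def at_upload(cls, image_bytes: bytes, source_uri: str, rights_holder: str,
                  consent_obtained: bool) -> ProvenanceRecord:
        digest = hashlib.sha256(image_bytes).hexdigest()
        return cls(source_uri, digest, rights_holder, consent_obtained)

    def log_turn(self, prompt: str, mask_ids: list[str], approved_by: str | None) -> None:
        self.turns.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "prompt": prompt,
            "mask_ids": mask_ids,
            "approved_by": approved_by,   # None until a human signs off
        })

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


record = ProvenanceRecord.at_upload(
    b"<raw image bytes>", "uploads/shot_1.png", rights_holder="Example Brand", consent_obtained=True
)
record.log_turn("erase the lamp on the left", mask_ids=["m1"], approved_by="reviewer-07")
print(record.to_json())
```

The same record doubles as the authorship audit trail: the logged prompts and approvals document the human creative choices made at each turn.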

Mitigations against abusive or infringing outputs

Controls to reduce abuse and infringement:

Content filters and policy models that flag risky edits (e.g., creating deepfakes of public figures).

Human review gates for potentially sensitive outputs.

Rate limits and auditing for high-volume operations that might be used to copy protected works.
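Rate limits on high-volume operations are commonly implemented as a token bucket. A minimal sketch; the refill rate and burst size are placeholders to tune per policy, and the injectable clock exists only so the behavior can be tested deterministically.

```python
import time
from collections.abc import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond the burst capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


bucket = TokenBucket(rate=0.5, capacity=10)  # bursts of 10 edits, then one every 2 s
print(bucket.allow())
```

In practice, one bucket would be kept per user or API key, and refused requests would be written to the audit log.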

Actionable takeaway: Combine automated moderation with human-in-the-loop review for edge cases and policy-sensitive outputs.

Recommendations for enterprise adoption and governance

Governance checklist:

Policy: define allowed use-cases, restricted content classes, and approval workflows.

Logging: maintain edit histories, user IDs, and version diffs.

Training: educate teams on attribution, consent, and quality expectations.

Legal review: consult counsel on licensing models for generated imagery.

Key takeaway: Enterprises should treat conversational image editing as a feature with compliance obligations—design policies and telemetry from day one.

FAQ on Google Gemini multi-turn, multi-image editing and the Nano Banana upgrade

Q1: What is multi-turn image editing and how is it different from single-shot editing? A1: Multi-turn image editing means the system preserves conversational state and prior edits so you can iteratively refine an image (e.g., change color, then texture), unlike single-shot editing which applies a one-time transformation with no built-in memory of prior steps; for implementation mechanics see Vertex AI’s image editing overview that explains mask-aware and stateful edit flows.

Q2: Can Gemini edit multiple images in a single session and keep edits consistent? A2: Yes — Gemini’s multi-image session features let you link masks and style references so the same semantic edit can be applied across assets with alignment and color coherency controls, as showcased in press explanations of the Nano Banana upgrade’s multi-image coherency improvements.

Q3: How do I structure prompts for iterative edits to avoid context drift? A3: Use explicit instruction sequences (operation → target → attribute), reference anchors (e.g., “match image 2”), and checkpoint important constraints periodically; these techniques align with best practices in multi-turn pipelines described in the Vertex AI documentation.

Q4: What are the common failure modes and how can I troubleshoot them quickly? A4: Common issues include mask mismatch, color inconsistency, and context drift; fix them by refining masks manually, using shared style vectors or reference images, and labeling checkpoints to revert if the model diverges.

Q5: Are outputs from Gemini 2.5 Flash Image copyrightable and what should I know about attribution? A5: Copyrightability depends on the human creative contribution and how much creative control was retained; keep a provenance record of prompts, approvals, and edits to support authorship claims and follow guidance issued by the U.S. Copyright Office on AI-generated works.

Q6: How can teams integrate Gemini image editing into production MLOps pipelines? A6: Start with a pilot that logs per-turn telemetry (accept/reject, latency, masks), use modular agent design for inference scaling, and instrument retraining loops with labeled feedback as recommended in deployment discussions from the Gemini/MLOps announcements.

Q7: Where can I find community tutorials and templates to accelerate adoption? A7: Community hubs and vendor-provided galleries often post prompt templates and mask examples; supplement community materials with your internal template library tailored to brand constraints and product needs to ensure consistency.

Q8: What privacy and content moderation controls should I enable when using multi-image editing? A8: Enable content filters for sensitive categories, require consent for identifiable individuals in source images, and configure human-review gating for outputs that could be misleading or harmful, aligning with enterprise governance best practices.

Conclusion: Trends, actionable insights and future outlook for Gemini image editing

The Nano Banana upgrade to Gemini 2.5 Flash Image marks a practical shift from one-shot image tools toward conversational, stateful, and batch-capable editing. The combination of multi-turn image editing and multi-image editing unlocks faster iteration cycles, better batch consistency, and workflows that align with how creative teams already work: iteratively and collaboratively.

Near-term trends (12–24 months) to watch:

Continued improvements in photorealism and fewer iterative corrections required.

Stronger cross-image coherency as VLMs learn to represent style vectors robustly.

More refined enterprise controls for provenance, audit, and moderation.

Greater integration into creative suites and marketing platforms.

Emergence of community-driven prompt templates and shared libraries.

Opportunities and first steps:

Experiment with multi-turn prompts: run small design sprints to compare iteration counts and time saved versus manual workflows.

Adopt community templates and create an internal prompt library for brand consistency.

Incorporate provenance metadata capture from day one (upload origin, prompt history, masks).

Run pilot MLOps tests focused on telemetry collection (turn counts, latency, acceptance rates).

Establish governance: define allowed use-cases, enable moderation controls, and require human approvals for production assets.

Uncertainties and trade-offs remain: legal frameworks are evolving, very high-fidelity retouching still benefits from expert manual work, and scaling to thousands of images requires engineering investment. Treat current capabilities as powerful accelerants for ideation and batch creative work, while designing for human oversight and auditability.

Final takeaway: The Nano Banana upgrade makes Gemini a compelling tool for iterative, conversational image workflows — teams that combine disciplined prompt practices, provenance capture, and human oversight will extract the most value while managing risk.