Human-in-the-Loop Tech Redefines AI Agent Security

- Aisha Washington

- Oct 9, 2025

Imagine an intelligent assistant that not only answers your questions but also executes tasks on your behalf. It books your flights, curates your weekend playlist on Spotify, and orders your groceries, all while you focus on more important work. This is the promise of AI browser agents, the next frontier in personal computing, powered by large language models (LLMs) like Claude, Gemini, and ChatGPT. These autonomous bots are designed to navigate the web and interact with applications, streamlining our digital lives in ways we're only beginning to imagine.

However, this convenience introduces a profound security paradox. For an AI agent to be truly useful, it needs access to our private accounts, which means it needs our usernames and passwords. That creates a serious new risk: what if the AI designed to help you inadvertently exposes your most sensitive information? The very mechanism that grants agents their autonomy could become a vector for catastrophic data breaches.

This is the central challenge of the agentic era. Fortunately, a new security model is emerging to address it head-on. Pioneered by companies like 1Password, the solution hinges on a concept called "human-in-the-loop" (HITL) authentication. It's a framework designed to grant AI agents the access they need without ever entrusting them with the keys to your kingdom. This article explores the critical problem of AI agent security, breaks down how HITL systems work, and analyzes what this shift means for the future of our digital identity.

The Rise of AI Agents and the Inevitable Security Paradox

The concept of agentic AI has rapidly moved from science fiction to a functional reality. These are not just chatbots; they are autonomous systems capable of executing multi-step tasks across different websites and applications. But as their capabilities grow, so does the attack surface for our personal data.

What Are AI Browser Agents?

AI browser agents are software programs, often integrated into web browsers or powered by standalone applications, that use LLMs to understand and execute user commands. Instead of you manually clicking through a website, you can give the agent a high-level goal, such as "Find and book a round-trip flight to Hawaii for the first week of December, and add it to my calendar." The agent then navigates to airline websites, searches for flights, and proceeds to the checkout page, acting as your digital proxy. Major AI platforms are increasingly incorporating these agentic capabilities to make their tools more powerful and useful.

The Promise: Automating Your Digital Life

The appeal of AI agents is undeniable. They offer to offload the mundane, time-consuming tasks that clutter our daily routines. Consider the possibilities:

Travel Planning: An agent can compare flights, hotels, and rental cars across dozens of sites, booking the best options based on your predefined budget and preferences.

Personalized Entertainment: You could ask your agent to create a new Spotify playlist with "upbeat indie rock from the last five years" and share it with a friend.

E-commerce and Shopping: An agent could monitor for price drops on a specific product, purchase it automatically when it hits your target price, and arrange for shipping.

These tasks require the agent to log in to your accounts on your behalf. Without this ability, their utility is severely limited.

The Peril: A New Vector for Credential Theft

Herein lies the paradox. A human who types a password may later forget it; an AI agent that handles one risks retaining it in ways that could lead to a future breach. When you give your password to an agent, you are potentially exposing it to the underlying LLM and the infrastructure it runs on.

The risks are manifold:

Model Training Exposure: Could your credentials be inadvertently included in future training data, making them accessible to others?

Compromised Infrastructure: If the company powering the AI agent suffers a data breach, your stored or temporarily cached credentials could be stolen.

Unintended Actions: A poorly instructed or compromised agent could perform unauthorized actions on your behalf, using your saved credentials to wreak havoc.

This is not a theoretical problem. It's a fundamental security flaw in the initial design of agentic AI. The solution cannot be to simply trust the AI provider; it requires a new architectural approach to authentication.

How Human-in-the-Loop Authentication Secures AI Agents

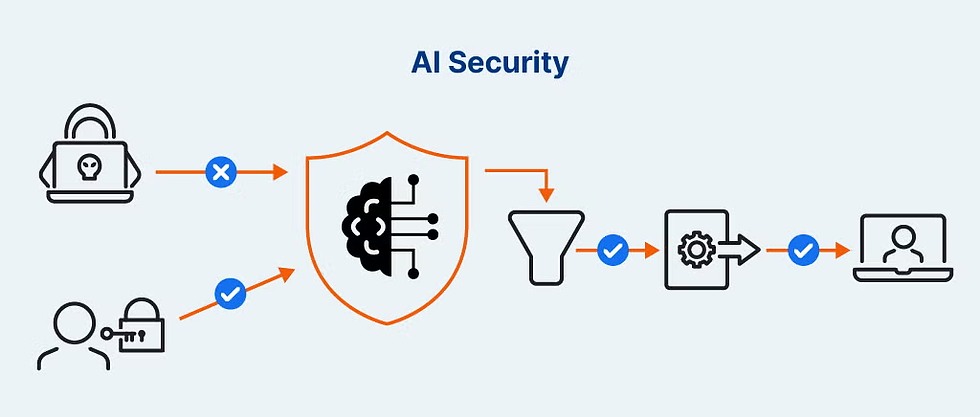

To solve the agentic security paradox, we need a system where the AI can request access without ever possessing the credentials. This is the core principle of human-in-the-loop (HITL) authentication, a model that keeps the user in firm control of their digital identity.

Defining "Human-in-the-Loop" (HITL) Security

In the context of AI agents, HITL security means that no sensitive action, especially one involving authentication, can be completed without explicit, real-time approval from the human user. The AI acts as a requester, not a possessor, of privileges. The user becomes an integrated part of the workflow, serving as the ultimate authorization checkpoint. This approach ensures that even if the AI is compromised, it has no standing credentials to steal.
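To make the idea concrete, here is a minimal Python sketch of an approval gate, assuming a hypothetical agent runtime in which every action passes through a single `execute` function. The names `SENSITIVE_ACTIONS`, `Action`, `execute`, and `ApprovalDenied` are illustrative inventions, not part of any real product. The point is only that sensitive actions block on a human decision and the agent holds no privileges of its own.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy: action kinds that always need a human's go-ahead.
SENSITIVE_ACTIONS = {"login", "purchase", "share"}


@dataclass
class Action:
    kind: str         # e.g. "navigate", "login", "purchase"
    description: str  # human-readable summary shown in the approval prompt


class ApprovalDenied(Exception):
    """Raised when the human declines a sensitive action."""


def execute(action: Action,
            ask_human: Callable[[str], bool],
            perform: Callable[[Action], str]) -> str:
    """Run one agent action, pausing for real-time human approval if it is sensitive."""
    if action.kind in SENSITIVE_ACTIONS:
        # The agent cannot proceed on its own authority; only the human can unblock it.
        if not ask_human(f"Allow agent to {action.description}?"):
            raise ApprovalDenied(action.description)
    return perform(action)


# Usage: a human who declines everything still lets harmless navigation through.
decline = lambda prompt: False
run = lambda a: f"done: {a.kind}"
print(execute(Action("navigate", "open the airline site"), decline, run))  # done: navigate
```

In a real deployment the `ask_human` callback would be a prompt on a trusted device rather than a local function, but the control flow is the same: no approval, no action.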

1Password's Secure Agentic Autofill: A Case Study

Password manager 1Password has developed one of the first concrete implementations of this philosophy, a feature it calls Secure Agentic Autofill. It works with AI bots much as the company's existing browser extension works for human users, but it is designed around the unique security risks that agents pose. The system's entire purpose is to "inject the credentials directly into the browser if, and only if, the human approves the access".

Step-by-Step: The Secure Handshake Process

The strength of the Secure Agentic Autofill system lies in its carefully orchestrated workflow, which keeps the AI's intent to log in separate from the credentials themselves.

Request: The AI browser agent navigates to a login page and determines it needs credentials to proceed. It then signals this need to the 1Password browser extension.

Identification and Approval: 1Password identifies the correct login for the site and, instead of handing it to the AI, sends an approval request to the user. This prompt appears on one of the user's trusted devices, such as their laptop or phone.

Human Authentication: The user authenticates the request using a secure, local method like Touch ID on a Mac or another form of biometric verification. This action confirms the user's intent to log in.

Secure Injection: Once approved, 1Password uses an end-to-end encrypted channel to inject the credentials directly into the browser session being operated by the AI.
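The four steps can be modeled in a short Python sketch. Everything here is hypothetical: `CredentialBroker` stands in for the password manager, `BrowserSession` for the agent-driven browser, and the approval callback for the biometric prompt on the user's device. The encrypted channel is reduced to a plain method call, so this shows the shape of the data flow, not 1Password's actual implementation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple
from urllib.parse import urlparse


@dataclass
class BrowserSession:
    """The browser the agent drives; form values live here, not in the agent's context."""
    url: str
    # repr=False keeps secrets out of printed/logged session objects. Python cannot
    # truly hide this attribute; a real system would hold values in a separate process.
    _fields: Dict[str, str] = field(default_factory=dict, repr=False)

    def fill(self, name: str, value: str) -> None:
        self._fields[name] = value

    def is_filled(self, name: str) -> bool:
        # The agent may check *whether* a field is filled, never *what* it holds.
        return bool(self._fields.get(name))


class CredentialBroker:
    """Hypothetical stand-in for the password-manager side of the handshake."""

    def __init__(self,
                 vault: Dict[str, Tuple[str, str]],
                 approve_on_trusted_device: Callable[[str], bool]) -> None:
        self._vault = vault                        # hostname -> (username, password)
        self._approve = approve_on_trusted_device  # e.g. a Touch ID prompt on the user's phone

    def request_login(self, session: BrowserSession) -> str:
        """Called by the agent (step 1). Returns only a status string, never a secret."""
        # Step 2: pick the login for the page actually loaded, not a site the agent names.
        host = urlparse(session.url).hostname or ""
        if host not in self._vault:
            return "no-matching-login"
        # Steps 2-3: prompt the human, who authenticates (e.g. biometrically) or declines.
        if not self._approve(f"Agent wants to sign in to {host}"):
            return "denied"
        # Step 4: write the credentials straight into the browser session.
        # (The real system uses an end-to-end encrypted channel; here it is a direct call.)
        username, password = self._vault[host]
        session.fill("username", username)
        session.fill("password", password)
        return "filled"


# Usage: the agent learns that login succeeded, and nothing more.
vault = {"accounts.example.com": ("ada", "s3cret!")}
broker = CredentialBroker(vault, approve_on_trusted_device=lambda prompt: True)
session = BrowserSession("https://accounts.example.com/login")
print(broker.request_login(session))  # filled
```

One design choice in this sketch is worth noting: the broker derives the site from the URL the browser has actually loaded instead of trusting a domain supplied by the agent, so a confused or compromised agent cannot request one site's login while sitting on another site's page. Whatever the outcome, the agent only ever receives a short status string.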

The Zero-Knowledge Advantage: Why the AI Never Sees Your Password

The most critical part of this process is what doesn't happen. At no point do the username and password traverse the AI model or its supporting systems. According to 1Password, the AI agent and the LLM powering it never see the actual credentials. This "zero-knowledge" principle is the cornerstone of the solution. The AI knows it needs to log in, and it knows that the login was successful, but it has zero knowledge of the password itself. This elegantly severs the link between agentic function and credential risk.

Real-World Implementation: Where Can You Use This Today?

This technology is no longer just a concept. The first iteration of this secure agentic framework is already being deployed, signaling a major step forward for the entire AI industry.

Early Access Through Browserbase

Initially, Secure Agentic Autofill is available in early access through a platform called Browserbase. This is a strategic starting point, as Browserbase is a company that builds tools and a specialized web browser environment specifically designed for running AI agents and automations. By integrating with a native "AI-first" browser, 1Password can test and refine the technology in a purpose-built environment before a wider rollout.

The Broader Ecosystem: What This Means for Other AI Tools

While Browserbase is the first adopter, this security model is a blueprint for the entire agentic AI ecosystem. The core challenge of credential security is universal, affecting every major player, including tools built on models from OpenAI (ChatGPT), Google (Gemini), and Anthropic (Claude).

The precedent set by 1Password and Browserbase will likely pressure other platforms to adopt similar HITL authentication frameworks. Users will, and should, start demanding to know how their credentials are being handled. In the near future, we can expect to see:

Direct integrations with major AI chatbots and browser-native agents

API standards that allow any password manager to securely serve credentials to any AI agent

Security ratings for AI tools based on whether they use a zero-knowledge, human-in-the-loop model for authentication

Actionable Insights: Protecting Your Digital Identity in the Agentic Age

As AI agents become more common, users must become more sophisticated in how they manage their digital identities. Relying on old security habits will not be enough.

Why Your Standard Password Manager Isn't Enough

Traditional password manager browser extensions are designed for human-driven autofill: you click a button, and the extension fills the fields. That works well for personal use, but it was not designed for autonomous agents. Simply letting an AI trigger a standard autofill function would recreate the original security problem, because the agent could still read the raw credentials once they sit in the browser's memory or DOM. The new generation of agentic security tools, such as 1Password's, is fundamentally different: it creates an end-to-end encrypted link between the password vault and the browser session that bypasses the AI agent entirely.
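To see why standard autofill leaks, consider a common way browser agents perceive a page: they serialize the DOM, or an accessibility tree derived from it, into text for the LLM. The Python sketch below assumes that design; `snapshot_for_llm` and its `mask_passwords` flag are hypothetical, and the page is a list of dictionaries standing in for real input elements. Once autofill has written the password into an input's value, the serialized snapshot carries it straight into the model's context.

```python
from typing import Dict, List


def snapshot_for_llm(inputs: List[Dict[str, str]], mask_passwords: bool = False) -> str:
    """Serialize form inputs the way a browser agent might describe a page to its LLM.

    Each dict has "name", "type", and "value" keys, standing in for DOM <input> elements.
    """
    lines = []
    for inp in inputs:
        value = inp["value"]
        if mask_passwords and inp["type"] == "password" and value:
            value = "*" * 8  # hypothetical mitigation: never serialize secret values
        lines.append(f'<input name="{inp["name"]}" type="{inp["type"]}" value="{value}">')
    return "\n".join(lines)


# A standard autofill has just written the secret into the page.
page = [
    {"name": "email", "type": "email", "value": "ada@example.com"},
    {"name": "password", "type": "password", "value": "s3cret!"},
]

leaky = snapshot_for_llm(page)
masked = snapshot_for_llm(page, mask_passwords=True)
print("s3cret!" in leaky)   # True: the password would reach the model's context
print("s3cret!" in masked)  # False: the value is replaced before serialization
```

Masking password fields in the snapshot, a hypothetical option shown here, narrows the leak but does not close it on its own: an agent able to run scripts in the page could still read the input's value directly.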

Evaluating AI Tools: Questions to Ask About Security

Before you grant an AI agent access to your accounts, you should be asking tough questions about its security architecture:

Credential Handling: How does your service handle my login credentials? Are they stored, and if so, how are they encrypted?

Authentication Flow: Is there a human-in-the-loop approval process for every login attempt?

Zero-Knowledge Principle: Does the AI model or agent ever have direct access to my plaintext passwords?

Data Isolation: How do you ensure my data is isolated and not used for training other models?

The Role of Biometrics in the New Security Chain

The 1Password model highlights the growing importance of biometrics as the final link in the security chain. Approving a request with something you are (a fingerprint, a face) on a trusted device is harder for a remote attacker to steal or replay than something you know (a master password). Biometric authentication via methods like Touch ID or Windows Hello provides a fast, low-friction way for users to give that "human-in-the-loop" approval, because it requires the user to be physically present at the device to authorize the action.

Conclusion

The age of autonomous AI agents is dawning, bringing with it both incredible convenience and significant security risks. The naive approach of simply feeding our passwords to bots is a recipe for disaster, threatening to undermine the very trust these systems need to function.

The path forward, illuminated by new technologies like 1Password's Secure Agentic Autofill, lies in a human-in-the-loop model. By re-architecting the authentication process to require explicit human approval for every sensitive action, we can grant AI agents the access they need to be useful without ever compromising our credentials. This zero-knowledge approach, where the AI never sees the password, is the fundamental design principle that will enable a secure and trustworthy agentic future. As users, our role is to demand this level of security and to be discerning about the tools we entrust with our digital lives.

Frequently Asked Questions (FAQ)

1. What is AI agent security?

AI agent security refers to the practices and technologies designed to protect a user's data, credentials, and accounts when they are accessed or managed by an autonomous AI agent. The primary goal is to prevent the AI from exposing sensitive information, like passwords, whether through vulnerabilities, bugs, or misuse.

2. What is the main security risk with AI browser agents?

The main risk is credential exposure. For an AI agent to perform tasks like booking tickets or managing online accounts, it needs to log in on your behalf. If the agent handles your actual password, it could be stored insecurely, exposed in a data breach, or inadvertently leaked, creating a severe security risk.

3. How is Secure Agentic Autofill different from regular autofill?

Regular autofill is designed for humans and often places the password directly into a web page's code, where it could be accessed by a browser agent. Secure Agentic Autofill is built for AIs and uses a "human-in-the-loop" model. The AI requests a login, you approve it on a trusted device, and the credentials are injected securely without the AI ever seeing them.

4. What can I do to protect my accounts when using AI agents?

First, use a password manager. Second, be selective about the AI tools you use. Prioritize services that explicitly describe a human-in-the-loop or zero-knowledge security model for handling logins. If a tool doesn't require your explicit, real-time approval for a login, it may not be secure enough for sensitive accounts.

5. What is the future of authentication for AI?

The future is likely passwordless, even for AIs. Technologies like passkeys, which use cryptographic signatures instead of shared secrets, are a natural fit. An AI could request a login, and you would approve it with a biometric scan on your phone, completing the action without a password ever being involved. Combined with human-in-the-loop frameworks, this could create a far more secure environment for agentic AI.