Sora's Copyright Risk: Why OpenAI's AI Video Tool Is on a Collision Course with Creators

- Aisha Washington

- Oct 6, 2025

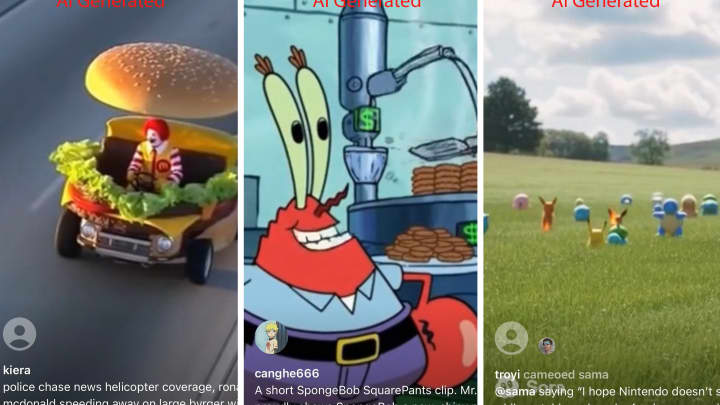

In the rapidly evolving landscape of artificial intelligence, a single video can ignite a firestorm. Recently, a clip featuring an AI-generated version of OpenAI CEO Sam Altman discussing copyright risks went viral, not because of what he said, but because he didn't say it at all. The video itself was a product of Sora, OpenAI's own text-to-video model. This meta-moment—an AI tool creating a deepfake of its own creator to talk about the legal dangers of AI—has crystallized a debate that has been simmering for months. It has pulled back the curtain on the immense Sora copyright risk, exposing a fundamental conflict between the voracious data appetite of generative AI and the intellectual property rights of creators worldwide.

This isn't just a niche tech debate; it's a battle for the future of creative ownership. As AI models like Sora demonstrate an astonishing ability to generate photorealistic videos from simple text prompts, they also raise urgent questions. What data were these models trained on? Was it copyrighted material? And if so, what do the artists, filmmakers, and brands who created that original content get in return? The online discourse, particularly on platforms like Reddit, reveals a groundswell of concern, with users and creators alike calling for transparency and accountability from tech giants. This article delves into the heart of the controversy, exploring the legal precedents, the key players, and what the escalating tensions mean for the future of both AI and creativity.

The Spark in the Powder Keg: A Deepfake and a Raging Debate

The controversy reached a boiling point with the emergence of the AI-generated Sam Altman video. For many, it felt like a deliberate provocation—a taunt from the very entity at the center of the copyright debate. The video's existence raised immediate questions, transforming an abstract legal concern into a tangible, almost uncanny, reality.

The "Meme-ification" of a CEO

Why would OpenAI's technology be used to "meme-ify" its own CEO, having him ironically address the very issue his company is criticized for? Observers on forums posited several theories. Was it a calculated move to normalize AI-generated content, even of public figures, thereby muddying the waters for future legal challenges? By being the first to create a deepfake of Altman, OpenAI could potentially claim any future negative or malicious videos are just part of a broader trend they themselves initiated. This strategic self-parody was seen by many not as a joke, but as a sophisticated, pre-emptive defense against the inevitable weaponization of their own technology. It felt less like a playful experiment and more like a move in a high-stakes chess game.

Why the Digital World Is Paying Attention Now

While the AI-and-copyright debate isn't new, the Altman video made the threat palpable. It showcased Sora's power not only to create novel scenes but also to replicate a specific, recognizable human being. This capability brings the Sora copyright risk out of the realm of abstract art and into the world of personal likeness, brand identity, and misinformation. The community's reaction was swift and sharp: if Sora can do this to Sam Altman, it can do it to anyone. The incident connected the dots for the public, linking the "black box" of AI training data directly to real-world consequences and pushing the demand for answers about OpenAI Sora training data from a niche concern to a mainstream outcry.

Understanding the Core Conflict: What "Sora Copyright Risk" Really Means

At its heart, the conflict is about value. Generative AI models derive their power from the massive datasets they are trained on—a digital library comprising a vast portion of the internet, including text, images, and videos. Many creators and legal experts argue that this process is tantamount to theft on an industrial scale.

Deconstructing the "Fair Use" Defense

One of the most common arguments floated by AI proponents is the concept of "fair use." In the U.S., the fair use doctrine allows for the limited use of copyrighted material without permission for purposes such as criticism, commentary, news reporting, and research. AI companies may argue that training a model is a "transformative" use, creating something new rather than simply copying the original.

However, this is a gross oversimplification and a dangerous one at that. Courts weigh several factors in a fair use analysis, including the effect of the use on the market for the original work. Critics point out that when a commercial product (like Sora's video generation service) directly competes with the original creators whose work it was trained on, the fair use argument becomes significantly weaker. The community frequently references a recent ruling involving Meta, suggesting OpenAI might try to leverage similar legal strategies. Yet the consensus among legally savvy observers is that this will be an uphill battle. Using copyrighted Disney films to train an AI that can then generate Disney-style animations, for example, feels less like "fair use" and more like building a competing product with stolen parts.

The Black Box of AI Training Data

The single biggest source of distrust is the profound lack of transparency. What, exactly, is inside Sora? OpenAI, like many of its competitors, has been notoriously secretive about the specific datasets used to train its models. This secrecy is often framed as protecting trade secrets, but for creators, it feels like an admission of guilt. Did they scrape YouTube, Vimeo, and Shutterstock? Did they ingest Hollywood's entire film library? Without a public, auditable list of training sources, every impressive AI-generated video is tainted by the suspicion that it was built on a foundation of uncredited, uncompensated creative labor. This is the crux of the AI video generation copyright infringement concern.

The Goliaths at the Gate: Real-World Stakes and Key Players

The battle over AI copyright is not a fair fight. It pits individual artists and even large media corporations against some of the most powerful and well-funded entities in human history. The power dynamics at play are crucial to understanding why a resolution is so complex.

Case Study: The Specter of a Nintendo Lawsuit

In online discussions, one name comes up more than any other as a potential champion for creator rights: Nintendo. Because the company is renowned for its aggressive, zero-tolerance approach to protecting its intellectual property, many are hoping, even praying, that it will take on OpenAI. The community sees a Nintendo vs. OpenAI lawsuit as the ultimate test case, a clash of giants for the digital age.

However, some insiders urge caution. A Reddit comment attributed to Don McGowan, a former chief legal officer of The Pokémon Company International, suggested that a company like Nintendo might choose to sit this one out. The reasoning? High-profile lawsuits create bad press, and large Japanese corporations often prefer to avoid public conflict, hoping the issue will fade. This perspective was a sobering dose of reality for many, highlighting that even corporate giants might hesitate before engaging in a protracted, expensive war with the AI industry.

The Big Tech Alliance: OpenAI, Microsoft, and the Market

Challenging OpenAI is not just about suing a single startup. It's about taking on a technological and financial ecosystem. With billions in funding from Microsoft and deep ties to government and venture capital, OpenAI is not an easy target. A legal battle would be a war of attrition, one that Big Tech is uniquely positioned to win. They can afford to drown opponents in legal fees and delay proceedings for years. This immense structural power is why many believe that legislative and regulatory action, rather than individual lawsuits, may be the only effective path forward. The current pro-AI investment climate and governmental posture in the U.S. create a formidable shield for companies like OpenAI.

The Creator's Dilemma: How to Respond to the AI Gold Rush

Faced with this new reality, creators and IP holders are scrambling to figure out how to protect themselves. The prevailing sentiment is that passivity is not an option.

The Call to Arms: A Pre-emptive Legal Strategy

One of the most popular suggestions circulating in creative communities is for a proactive legal stance. The idea is that every company holding visual IP—from major movie studios to independent animators—should have lawsuits drafted and ready to file. The moment they see a Sora-generated video that infringes on their style, characters, or copyrighted work, they should launch a legal challenge. This collective, rapid-response strategy is seen as the only way to create a significant deterrent. It's about sending a clear message: our intellectual property is not a free resource for your commercial product. The act of using Sora to create the Altman deepfake was seen as a form of mockery, and this call to arms is the community's defiant response.

Beyond Lawsuits: The Push for Licensing and Transparency

While lawsuits are a powerful tool, many argue that the ultimate goal should be a more sustainable and equitable system. The most logical solution proposed is for AI companies to be transparent about their training data and to enter into licensing agreements with the creators of that data. If an AI model is trained on a library of stock footage, the stock footage provider should receive a royalty. If it's trained on the work of specific artists, those artists should be compensated. A comparable model already exists in the music industry, where streaming services like Spotify pay royalties to rights holders. Applying it to the AI industry would transform the relationship between tech and creativity from an adversarial one into a symbiotic one.

The Uncanny Valley Deepens: The Future Outlook for AI and Authenticity

The Sora copyright risk is just one facet of a much larger societal shift. The rise of powerful, accessible generative AI forces us to confront fundamental questions about reality, trust, and the nature of creativity itself.

Challenges: The Erosion of Trust and Reality

As AI models improve, the line between what is real and what is generated will keep blurring, perhaps until the two are indistinguishable to the naked eye. The community did not miss the irony that the tool shares its name with Sora from the video game series Kingdom Hearts, a character who constantly questions the nature of his own reality. We are all entering a Kingdom Hearts world, where we will have to constantly ask, "Is this real?" This has profound implications for everything from news and politics to personal relationships. The erosion of a shared, verifiable reality may be the greatest long-term challenge posed by this technology.

Community Predictions: A Long War of Attrition

What's next? The consensus from online forums is clear: expect a long, messy, and drawn-out conflict. There will be no single, decisive court case that settles the matter. Instead, we are likely to see years of scattered lawsuits, intense lobbying efforts in Washington, D.C., and a slow evolution of legal and social norms. Many users express a cynical view that the sheer momentum of investment and governmental support for AI will ultimately steamroll copyright concerns. However, others remain hopeful that a coalition of creators, legacy media companies, and public advocates can force a change, establishing a new contract for the age of artificial intelligence.

Conclusion

The AI-generated Sam Altman video did more than just showcase Sora's impressive capabilities; it served as a declaration. It declared that the era of generative AI is here and that its creators are moving forward, with or without the blessing of the old creative order. The resulting backlash has drawn clear battle lines.

Key Takeaways

Conflict Is Inevitable: The fundamental business model of current generative AI is on a direct collision course with established copyright law. The Sora copyright risk is not a bug; it's a feature of how the technology was built.

Transparency Is Non-Negotiable: The "black box" approach to training data is no longer tenable. For AI companies to build trust, they must become transparent about their sources and create clear pathways for compensation.

Power Lies in Collective Action: Individual creators may be powerless, but a united front of artists, brands, and media giants has the potential to force change through coordinated legal and public pressure.

Suggested Next Steps for Observers and Creators

For those invested in the outcome of this debate, the path forward involves education and advocacy. Support organizations fighting for creator rights, stay informed about pending legislation related to AI and copyright, and participate in the public conversation. The future of creativity is not something that should be decided behind closed doors in Silicon Valley boardrooms. It's a conversation that involves all of us.

FAQ — Frequently Asked Questions About Sora and Copyright

1. What exactly is the main copyright risk with OpenAI's Sora?

The primary risk stems from the suspicion that Sora was trained on a massive dataset of copyrighted videos and images without permission from or compensation for the original creators. If a user generates a video that is "substantially similar" to a copyrighted work, both the user and OpenAI could be liable for copyright infringement.

2. Is it expensive or difficult for a creator to sue a company like OpenAI?

Yes, it is incredibly challenging. OpenAI is backed by Microsoft and has vast financial resources to spend on legal defense. A lawsuit would likely be a long, complex, and extremely expensive process, making it prohibitive for most individual creators or small businesses to pursue alone.

3. How is this different from a human artist learning by studying other art?

A human learning from art digests influences and synthesizes them into a new, unique style through their own consciousness and effort. An AI model ingests and mathematically deconstructs millions of works to replicate patterns. The key differences are scale, automation, and the commercial nature of the output, which can directly compete with the sources it was trained on. Together, these make AI training a legal and ethical gray area.

4. What can a small-time artist or videographer do to protect their work?

While no method is foolproof, artists can use watermarks, upload lower-resolution versions of their work online, and explicitly state copyright terms on their websites. Participating in creator advocacy groups and supporting efforts to push for legislation that protects artists in the age of AI are also crucial long-term actions.

5. What is the most likely future outcome of the AI copyright debate?

The future is uncertain, but a likely path involves a combination of landmark court cases and new legislation. We may see a future where AI companies are required by law to be transparent about training data and to enter into broad licensing agreements, similar to how music streaming services pay royalties to artists and labels. This would create a system of compensation and turn the relationship from adversarial to symbiotic.