Thinking Machines Lab Reveals First Insights into Building Reproducible Generative AI Models

- Ethan Carter

- Sep 12, 2025

Thinking Machines Lab — a research-focused group embedded in the high-stakes world of advanced AI — recently published early findings and practical guidance aimed squarely at making generative models more reproducible. This is not an abstract manifesto: the lab’s commentary and early tooling recommendations emphasize regression testing, versioned datasets, and environment locking as concrete engineering levers to reduce flaky behavior and ease verification. Wired’s coverage framed these moves as an explicit commitment to reproducibility rather than a marketing line, and the lab’s public conversations echo ongoing academic work on regression testing and systematic training.

Why this matters now: reproducibility is a foundation of trust, safety, and regulatory readiness for systems that generate text, code, images, and decisions. When outputs are nondeterministic or impossible to replicate, audits, incident investigations, and scientific comparisons become fraught. The thinking here aligns with recent scholarly frameworks that prescribe regression suites, open, versioned datasets, and deterministic training practices as pillars of trustworthy evaluation and deployment. Recent research on regression testing with open datasets, together with work on systematic approaches to reproducible training, provides practical context for the lab’s early agenda. This article synthesizes those threads and explains what developers, product teams, and decision-makers can expect as reproducible generative AI models move from theory toward production-ready practice.

Thinking Machines Lab’s approach to reproducible generative AI models

Practical engineering over abstract promises

Thinking Machines Lab’s early disclosures signal a shift from conceptual discussion to operational commitments. Rather than only arguing that reproducibility matters, the lab has emphasized building specific capabilities: regression testing suites for generative outputs, tools for dataset versioning and provenance, and mechanisms to lock training and inference environments so runs can be re-created. This emphasis mirrors the stance that reproducibility must be engineered into toolchains, not left as an optional afterthought. Wired’s reporting captured this orientation as a deliberate insistence on measurable engineering outcomes.

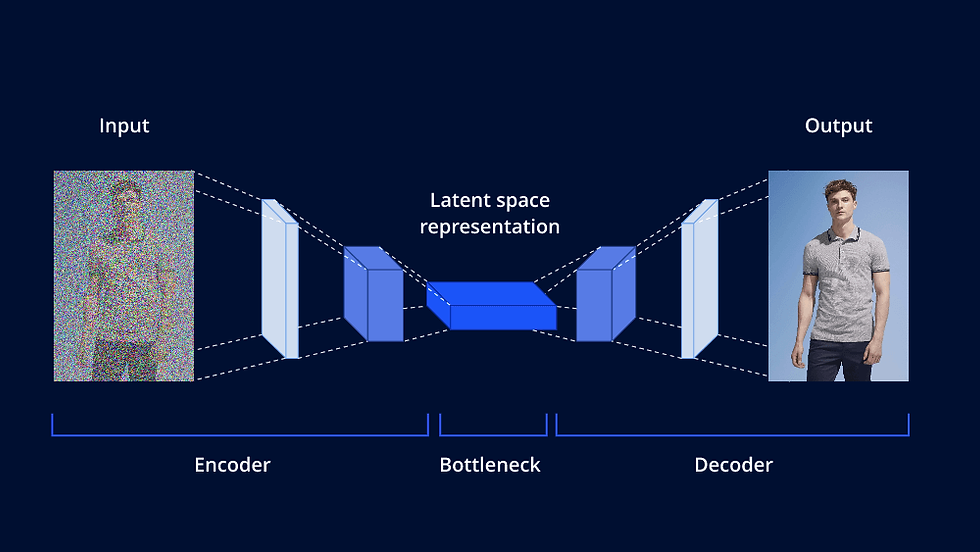

What the lab describes feels like a practical stack: test harnesses that treat generated outputs as first-class artifacts to be tested across commits; dataset registries that record exact versions used for training and evaluation; and environment capture that pins library versions, container images, and random seeds. Those components aim to make it possible to say, with confidence, “this output was produced by code X, data snapshot Y, and environment Z,” which is the minimum for forensic analysis and regulated auditing.
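To make the “code X, data snapshot Y, environment Z” idea concrete, here is a minimal Python sketch of a provenance record that travels with each generated output. The `ProvenanceRecord` class, its field names, and the `attach_provenance` helper are hypothetical illustrations, not the lab’s tooling; the sketch simply assumes each component already has a stable identifier (a commit SHA, a dataset snapshot tag, a container image digest) and hashes them into one fingerprint.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical record tying one generated output to the conditions that produced it."""
    code_commit: str         # e.g. a git commit SHA for the inference code ("code X")
    dataset_snapshot: str    # tag of the versioned dataset snapshot ("data snapshot Y")
    environment_digest: str  # digest of the pinned environment or container ("environment Z")
    model_checkpoint: str    # identifier of the checkpoint used for inference
    seed: int                # random seed used for sampling

    def fingerprint(self) -> str:
        """Stable SHA-256 over a canonical (sorted-key, compact) JSON encoding."""
        canonical = json.dumps(asdict(self), sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def attach_provenance(output_text: str, record: ProvenanceRecord) -> dict:
    """Bundle an output with its provenance so it can be stored and audited later."""
    return {
        "output": output_text,
        "output_sha256": hashlib.sha256(output_text.encode("utf-8")).hexdigest(),
        "provenance": asdict(record),
        "provenance_fingerprint": record.fingerprint(),
    }
```

Two outputs with the same fingerprint were produced under the same recorded conditions; a changed fingerprint tells an auditor that at least one component differs, though not which one.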

Insight: reproducibility here is being treated as a product requirement, one that shapes how models are developed, shipped, and monitored.

How this affects product behavior

The initial tooling targets consistent outputs under identical conditions (same seed, same code, same data). In practice that reduces the kind of “flaky” regressions developers dread: a prompt that worked two weeks ago suddenly producing wildly different behavior after a model update. By catching such regressions with automated suites, teams can detect unintended behavior early and track root causes to code, data, or environment drift.
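A regression suite of this kind can be sketched in a few lines of Python. The sketch assumes a generation function whose sampling depends only on the prompt and an explicit seed; `toy_generate` is a hypothetical stand-in for a real model call, and in practice the golden outputs would be stored alongside the release they came from. It flags every prompt whose output no longer matches exactly.

```python
import random

# Hypothetical toy vocabulary standing in for a real model's output space.
VOCAB = ["the", "model", "output", "is", "stable", "today", "again", "now"]


def toy_generate(prompt: str, seed: int, max_tokens: int = 6) -> str:
    """Hypothetical stand-in for a model call whose sampling depends only on (prompt, seed)."""
    # random.Random hashes a string seed deterministically (unlike built-in hash(),
    # which is randomized per process), so the same inputs give the same output.
    rng = random.Random(f"{seed}:{prompt}")
    return " ".join(rng.choice(VOCAB) for _ in range(max_tokens))


def build_golden(prompts, generate, seed: int) -> dict:
    """Record the reference outputs for a release."""
    return {p: generate(p, seed) for p in prompts}


def run_regression(golden: dict, generate, seed: int) -> list:
    """Return the prompts whose current output no longer matches the golden output."""
    return [p for p, expected in golden.items() if generate(p, seed) != expected]
```

Exact matching is the strictest possible gate; for models where bit-identical output is not achievable, the comparison would be swapped for a similarity threshold.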

For developer and QA teams, the direct benefits include faster root-cause analysis, clearer audit trails for produced outputs, and fewer surprise rollbacks. For product managers, reproducibility provides a defensible baseline: you can compare releases against a fixed set of examples and define acceptable drift. The Thinking Machines Podcast discussions align these engineering choices with community-driven best practices, stressing that reproducibility is a collaborative effort between research disciplines and production teams.

Key takeaway: reproducibility is being operationalized with test suites, versioned datasets, and environment locking to make model outputs auditable and stable across releases.

Specs and performance for reproducible generative AI models

What reproducibility specifications look like

When people talk about “specs” in this context, they move away from traditional hardware benchmarks toward reproducibility criteria: environment locking, dataset provenance, deterministic checkpoints, and precise logging of training and inference parameters. A reproducibility spec describes the exact conditions under which a model must reproduce results — which libraries, container images, random seeds, training checkpoints, and dataset snapshots were used. These specifications are not about raw throughput or accuracy alone; they are about traceability and determinism.
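Part of such a spec can be captured automatically. This minimal Python sketch, using only the standard library (`importlib.metadata`, `platform`), records the interpreter version, operating system, installed package versions, and seed. The `capture_environment` and `write_spec` names are hypothetical, as is the `extra` field, which assumes the caller supplies identifiers Python cannot see from inside the process (container image digest, checkpoint ID, dataset snapshot tag).

```python
import json
import platform
from importlib import metadata


def capture_environment(seed: int, packages=None, extra=None) -> dict:
    """Snapshot interpreter, OS, installed package versions, and the run's seed.

    `packages` optionally restricts the snapshot to a named subset;
    `extra` holds caller-supplied identifiers (hypothetical convention).
    """
    installed = {}
    for dist in metadata.distributions():
        name = dist.metadata["Name"]
        if name:  # skip distributions with malformed metadata
            installed[name.lower()] = dist.version
    if packages is not None:
        wanted = {p.lower() for p in packages}
        installed = {k: v for k, v in installed.items() if k in wanted}
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "platform": platform.platform(),
        "seed": seed,
        "packages": dict(sorted(installed.items())),
        "extra": dict(extra or {}),
    }


def write_spec(path: str, spec: dict) -> None:
    """Persist the spec as sorted JSON so diffs between runs stay readable."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(spec, fh, indent=2, sort_keys=True)
```

Storing this JSON next to each checkpoint gives a reviewer something concrete to diff when two runs disagree.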

Research proposals are converging on quantifiable checks: bit-for-bit determinism in inference where feasible, reproducible output distributions for given prompts, and documented acceptance thresholds for allowable variation. A recent paper proposing regression testing and open datasets for generative models outlines precisely these measurable checks, and work on trustworthy evaluation defines evaluation scaffolds that include reproducibility metrics.
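One way to express “reproducible output distributions” with an acceptance threshold is to sample a prompt repeatedly under two builds and compare the empirical distributions. The sketch below uses total variation distance, which is one reasonable choice among several rather than the measure the cited research prescribes; the 0.1 default threshold is a placeholder. It works best on categorical outputs (labels, refuse/comply decisions, or free text bucketed into classes first), since raw free text rarely repeats exactly.

```python
from collections import Counter


def empirical_distribution(samples) -> dict:
    """Relative frequency of each distinct output among repeated samples."""
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("need at least one sample")
    return {output: n / total for output, n in counts.items()}


def total_variation(p: dict, q: dict) -> float:
    """Half the L1 distance between two distributions: 0 = identical, 1 = disjoint."""
    support = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in support)


def distribution_check(baseline_samples, candidate_samples, max_tv: float = 0.1):
    """Return (distance, passed) against a documented threshold (placeholder default)."""
    tv = total_variation(empirical_distribution(baseline_samples),
                         empirical_distribution(candidate_samples))
    return tv, tv <= max_tv
```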

Trade-offs and pipeline overhead

Deterministic runs and extensive regression testing introduce overhead. Training with tight environment locking may hinder hyperparameter search and the kind of rapid exploration favored in early research. Running exhaustive regression checks for every change can slow CI/CD cycles and force teams to invest in more compute and tooling to maintain acceptable velocity.

However, those costs trade off against fewer production incidents, reduced rollbacks, and faster compliance responses. In regulated settings — healthcare, finance, or large-scale research with reproducibility requirements — the up-front investment is often justified by decreased downstream risk. The academic literature supporting systematic training emphasizes reproducible pipelines as a way to reduce variance in results and make research claims more robust, rather than as a barrier to innovation. A foundational work on training reproducible deep learning models outlines procedural steps that increase reproducibility at the cost of additional engineering discipline.
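A small, framework-free illustration of one such procedural step: route every source of randomness in training (here, initialization and data order) through a single seeded generator, so two runs with the same seed and data produce bit-identical parameters. This toy SGD for a one-variable linear model is a sketch under simplifying assumptions; real GPU training also needs framework-level switches (PyTorch, for example, offers `torch.use_deterministic_algorithms`) and pinned library versions, which it does not cover.

```python
import random


def train_linear(data, seed: int, epochs: int = 20, lr: float = 0.05):
    """Toy SGD for y = w*x + b where all randomness flows from one seeded RNG."""
    rng = random.Random(seed)                      # local RNG: no hidden global state
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)  # seeded initialization
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)                         # seeded data order each epoch
        for i in order:
            x, y = data[i]
            err = (w * x + b) - y                  # prediction error for one example
            w -= lr * err * x                      # gradient step on squared error
            b -= lr * err
    return w, b
```

Rerunning with the same seed reproduces the exact floating-point weights; changing the seed changes both the starting point and the data order.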

Insight: the upfront slow-down buys long-term gains in reliability, auditability, and confidence.

Metrics that teams can adopt

Concrete, measurable metrics are central to making reproducibility actionable:

- Determinism checks: bit-for-bit or token-for-token identical outputs under controlled settings.
- Output-consistency metrics: similarity scores across repeated runs with the same seed and environment.
- Regression thresholds: defined tolerances for semantic drift (for example, the percentage of prompts whose outputs fall below a set similarity threshold).
- Dataset lineage coverage: the percentage of training and evaluation data with versioned provenance recorded.
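These four metrics can be sketched with the Python standard library alone. The function names are hypothetical, and `difflib.SequenceMatcher` is a character-level stand-in for the semantic similarity a production team would more likely compute with embeddings; the 0.9 cutoff is a placeholder.

```python
from difflib import SequenceMatcher


def _check_pairs(a, b) -> None:
    if len(a) != len(b) or not a:
        raise ValueError("runs must be non-empty and cover the same prompts in order")


def similarity(x: str, y: str) -> float:
    """Character-level similarity in [0, 1]; 1.0 means identical strings."""
    return SequenceMatcher(None, x, y).ratio()


def determinism_rate(run_a, run_b) -> float:
    """Determinism check: fraction of prompts with exactly identical outputs."""
    _check_pairs(run_a, run_b)
    return sum(a == b for a, b in zip(run_a, run_b)) / len(run_a)


def output_consistency(run_a, run_b) -> float:
    """Output consistency: mean similarity across paired outputs."""
    _check_pairs(run_a, run_b)
    return sum(similarity(a, b) for a, b in zip(run_a, run_b)) / len(run_a)


def drift_fraction(baseline, candidate, min_similarity: float = 0.9) -> float:
    """Regression metric: fraction of prompts whose similarity drops below the cutoff."""
    _check_pairs(baseline, candidate)
    drifted = sum(similarity(a, b) < min_similarity for a, b in zip(baseline, candidate))
    return drifted / len(baseline)


def lineage_coverage(records) -> float:
    """Lineage coverage: percent of data records with a non-empty 'version' field."""
    if not records:
        return 0.0
    return 100.0 * sum(bool(r.get("version")) for r in records) / len(records)
```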

Implementing these metrics allows organizations to set clear pass/fail criteria for model updates. Teams can then automate rollbacks or gating based on whether changes exceed predefined regression thresholds. The recent work on regression testing and open datasets provides concrete guidance on designing these metrics for generative systems.
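A gate of that kind reduces to comparing each metric against a policy and failing the pipeline when any bound is violated. In this sketch the `POLICY` thresholds and metric names are hypothetical placeholders; a CI step could call `sys.exit(release_gate(metrics))` so that a nonzero exit code blocks the release or triggers a rollback.

```python
# Hypothetical release policy: metric name -> ("min" | "max", bound).
# The numbers are placeholders, not recommended values.
POLICY = {
    "determinism_rate": ("min", 1.0),
    "drift_fraction": ("max", 0.02),
    "lineage_coverage": ("min", 95.0),
}


def evaluate_gate(metrics: dict, policy: dict = POLICY) -> list:
    """Return a failure message for every violated or missing metric."""
    failures = []
    for name, (kind, bound) in policy.items():
        value = metrics.get(name)
        if value is None:
            failures.append(f"{name}: not reported")  # missing evidence fails closed
        elif kind == "min" and value < bound:
            failures.append(f"{name}: {value} is below {bound}")
        elif kind == "max" and value > bound:
            failures.append(f"{name}: {value} exceeds {bound}")
    return failures


def release_gate(metrics: dict, policy: dict = POLICY) -> int:
    """Print failures and return a process exit code: 0 to ship, 1 to block."""
    failures = evaluate_gate(metrics, policy)
    for message in failures:
        print("GATE FAIL:", message)
    return 1 if failures else 0
```

Treating a missing metric as a failure is a deliberate choice here: a gate that silently passes when evidence is absent would undermine the audit trail it is meant to create.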

Key takeaway: reproducibility specs prioritize environment and data traceability, introduce measurable checks, and impose pipeline costs that most production teams will consider a worthwhile investment.

Eligibility, rollout, and pricing for reproducible generative AI models

What’s available now and what to expect

Public reporting describes the Thinking Machines Lab output as “initial insights” and early-stage tooling rather than a fully packaged, mass-market product. Coverage so far suggests the work is research-driven, with prototypes and design principles likely to precede a broad commercial launch. Wired’s reporting framed the lab’s releases as a research-first effort that signals intent rather than a consumer product announcement.

The pattern to watch is familiar from other enterprise-grade AI capabilities: early partner programs, select research collaborations, and staggered developer previews before a general release. The lab’s approach — combining open datasets and reproducibility practices — implies that parts of the stack may be released as open-source or research tooling, while hardened, production-grade services could be offered under enterprise licensing. This hybrid path is common when research organizations want community validation for core ideas while preserving a pay-for-support model for scale and integration.

Likely eligibility and timeline cues

The first wave of access will probably target research partners, academic collaborators, and enterprise customers with strong compliance needs. These early adopters can test regression suites, contribute datasets for versioning, and report on operational experiences. The coverage positions the effort as iterative: research papers and community feedback will shape alpha and beta tool releases rather than a single “big bang” launch.

Pricing is unknown, but the combination of open and enterprise elements suggests a tiered model: free or permissive licensing for research tooling and datasets, and paid support or managed reproducibility platforms for production use. Analysis from the Centre for International Governance Innovation emphasizes that reproducibility is becoming a procurement and governance issue — organizations that need guarantees will likely pay for them.

Key takeaway: expect staged availability beginning with research and enterprise previews; core ideas may be shared openly, while production-ready tooling is likely to follow under commercial terms.

How reproducible generative AI models differ from previous approaches

Shifting priorities from raw capability to auditability

Historically, model development workflows emphasized capability improvements — bigger models, better benchmarks, faster iteration. Reproducibility was often a secondary concern, addressed post hoc when experiments needed replication. Thinking Machines Lab’s stated emphasis places reproducibility as a co-equal goal: a change in priorities where auditability, deterministic behavior, and dataset lineage guide engineering decisions as much as performance gains.

That shift aligns with academic consensus that reproducibility is central to credible science and trustworthy deployment. A community consensus paper on reproducibility argues for shared standards and explicit reproducibility targets across the research lifecycle. Operationalizing those standards in product environments moves the needle from “we should be reproducible” to “we will deliver reproducible outputs.”

Competitors and alternative approaches

Academic groups and open-source projects have long proposed toolkits and frameworks for reproducibility: dataset version control systems, experiment tracking platforms, and practices to pin environments. What appears to be different in the Thinking Machines Lab approach is the integration of those practices into a product-focused pipeline with regression testing aimed specifically at generative outputs.

This is not to say the lab’s approach is unique — many organizations are converging on similar ideas — but the lab’s visibility and stated commitment may accelerate industry uptake. Compare this to prior workflows where reproducibility was often ad hoc and siloed within research notebooks; the new push favors standardized, automated regression testing and dataset registries.

Trade-offs in practice

The usability trade-offs are real. Rapid, exploratory research workflows often rely on flexible environments and quick experiments. Enforcing reproducibility practices can slow iteration and require more upfront engineering. But for customers in regulated industries, the benefits are substantial: reproducible models create clearer audit trails and make post-hoc investigations tractable, reducing legal and operational exposure.

From a market perspective, reproducibility will likely become a differentiator. As the AI market expands, customers will demand verifiable outputs as part of procurement decisions. Statista’s market projections underline the commercial scale at stake; as adoption grows, so will the incentive to require reproducibility in purchasing decisions.

Key takeaway: reproducibility shifts engineering and procurement priorities away from pure capability racing and toward auditability and operational guarantees, with expected trade-offs in iteration speed.

Real-world usage and developer impact of reproducible generative AI models

How developer workflows will change

For engineering teams, the practical impact is tangible. Continuous Integration/Continuous Deployment (CI/CD) pipelines will need new gates: regression tests that operate on generated outputs, dataset validation steps that ensure training snapshots are immutable, and environment capture to guarantee re-runs match original executions. Teams will also need richer observability: detailed logs tying outputs to model checkpoints and dataset versions.
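The dataset validation step can be sketched as a content-hash manifest: hash every file in a training snapshot when it is registered, then re-hash before each run and refuse to proceed if anything was added, removed, or modified. The function names are hypothetical, and the sketch assumes the snapshot is a local directory; object stores or dataset version-control tools would need their own listing logic.

```python
import hashlib
from pathlib import Path


def hash_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file, read in chunks so large shards do not exhaust memory."""
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's relative POSIX path to its digest, in sorted order."""
    return {
        p.relative_to(root).as_posix(): hash_file(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def verify_snapshot(root: Path, manifest: dict) -> dict:
    """Compare the directory's current contents with the registered manifest."""
    current = build_manifest(root)
    return {
        "missing": sorted(set(manifest) - set(current)),
        "added": sorted(set(current) - set(manifest)),
        "modified": sorted(k for k in manifest.keys() & current.keys()
                           if manifest[k] != current[k]),
    }
```

Any non-empty list in the result means the snapshot no longer matches what was registered, so a run against it would not be reproducible from the recorded version.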

Concrete example: a healthcare vendor deploying a clinical-assist generative model could add a reproducibility gate that verifies a fixed set of case prompts still produce identical or acceptably similar reports after a model update. If a test fails, the change must be traced to code, data, or an environment change before release, a process that short-circuits the “ship and see” mentality.
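Tracing a failed gate back to its cause is easier when each release stores a provenance record. The sketch below diffs two such records and sorts the changed fields into code, data, and environment buckets; the field names and the bucket mapping are hypothetical and would follow whatever a team’s own records contain.

```python
# Hypothetical mapping from provenance fields to the three causes named above.
# Treating the sampling seed as part of the environment is an assumption.
FIELD_BUCKETS = {
    "code_commit": "code",
    "model_checkpoint": "code",
    "dataset_snapshot": "data",
    "environment_digest": "environment",
    "seed": "environment",
}


def diff_provenance(old: dict, new: dict) -> dict:
    """Return {field: (old_value, new_value)} for every field that differs."""
    fields = sorted(set(old) | set(new))
    return {f: (old.get(f), new.get(f)) for f in fields if old.get(f) != new.get(f)}


def suspected_causes(old: dict, new: dict) -> list:
    """Map changed fields to 'code', 'data', 'environment', or 'other' (unmapped)."""
    changed = diff_provenance(old, new)
    return sorted({FIELD_BUCKETS.get(field, "other") for field in changed})
```

When only one bucket changed between the passing and failing release, the investigation can start there instead of bisecting everything at once.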

Immediate use cases that benefit most

Regulated industries see the clearest wins. In scientific research, reproducible models make it possible to replicate published experiments with confidence. In healthcare and finance, regulators and auditors require verifiable decision trails; reproducible outputs provide that trail. Even in product settings like content generation, reproducibility helps track regressions that could harm brand reputation or violate policy.

Tooling and community effects are also important. Open datasets and shared reproducibility patterns allow peers to validate claims, reproduce benchmarks, and compare models on a level playing field. That communal validation reduces the asymmetry between vendor claims and independent verification.

Operational costs and governance

Operationally, organizations must invest in dataset provenance systems, robust environment management (containerization, pinned dependencies), and extended regression suites. These investments increase engineering overhead initially but tend to reduce incident frequency and severity over time. Governance teams will also need to establish policies: what constitutes acceptable drift, who signs off on production changes, and how to document provenance for long-lived models.

The broader research community has offered guidance on reproducibility standards and practices; government funding agencies and foundations increasingly expect reproducible methods in funded projects. The U.S. National Science Foundation provides guidelines encouraging reproducibility in research workflows, emphasizing data and code sharing where possible. Integrating such guidance into industry practices will help align academic norms with commercial requirements.

Key takeaway: reproducibility requires new CI/CD practices, governance rules, and engineering investments, but delivers measurable benefits in reliability and compliance.

FAQ: reproducible generative AI models and Thinking Machines Lab

What does “reproducible generative AI models” mean in this context?

It means a model produces consistent outputs when invoked with the same inputs, code, and environment, and that the training and inference conditions are recorded so results can be verified. This includes deterministic inference where possible, reproduction of training diagnostics, and clear dataset provenance. Recent proposals on regression testing and reproducible evaluation explain these requirements in detail.

Has Thinking Machines Lab released a product I can download?

As of public reporting, the lab has shared initial insights and research-first tooling concepts rather than a mass-market, downloadable product. Coverage frames the work as early-stage and research-driven, with prototypes and community engagement expected before a wider release. Wired’s coverage emphasizes the research orientation of the lab’s early work.

Will reproducibility slow model iteration?

Yes, reproducibility practices add overhead: environment locking, regression suites, and dataset versioning can slow iteration. However, they also reduce debugging time, lower rollback frequency, and improve production stability, which often offsets the upfront cost. Methodological work on reproducible training outlines these trade-offs and suggests disciplined steps to make reproducibility practical.

Are there standards or regulations that require reproducibility?

There is increasing guidance and consensus around reproducibility in research and some industry contexts, but comprehensive regulatory mandates for AI reproducibility are still emerging. Agencies and research funders already emphasize reproducibility norms, and community consensus papers call for shared standards. The NSF provides guidelines encouraging reproducible methods in funded research, and academic communities have produced consensus recommendations for reproducible practices (see, for example, a community reproducibility consensus paper).

How does reproducibility relate to trust and safety?

Reproducibility is foundational to trust: it allows independent verification of behavior, supports audit trails, and helps investigators understand failure modes. These capabilities are core to safety-critical deployment decisions and to building external confidence in model claims. Work on trustworthy evaluation links reproducibility directly to credibility and safety mechanisms for generative systems.

Will open datasets be required?

Open, versioned datasets are recommended for transparency and replication, but legal and privacy constraints mean they cannot always be used. Where full openness isn’t possible, detailed provenance and controlled-access schemas help preserve reproducibility while respecting constraints. Research on regression testing and open datasets advocates versioned data when possible.

What reproducible generative AI models mean for the future of AI

A vision that balances speed and stewardship

Thinking Machines Lab’s early emphasis on reproducibility signals a maturing phase in AI development where engineering discipline and governance become as important as raw capability. In the coming years, expect reproducibility practices to spread beyond labs and into vendor product specifications, procurement criteria, and industry certifications. The immediate future will see iterative tooling releases and community validation that refine what “reproducible” means in practice.

This shift produces trade-offs. Organizations that prioritize reproducibility will move more deliberately, sacrificing some experimental speed for predictability and auditability. But that discipline pays dividends: fewer high-profile regressions, clearer explanations for model behavior, and stronger evidence when models are used in regulated or safety-critical settings.

Opportunities for readers and organizations

For practitioners, the next steps are concrete. Begin by instrumenting existing pipelines with dataset version control and experiment tracking, adopt containerized or otherwise pinned environments, and design regression tests for representative prompts. For managers, build reproducibility into procurement checklists and vendor contracts. For researchers, contribute to community benchmarks and open dataset registries to help establish shared reproducibility baselines.
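For the “pinned environments” step, a pre-run check that compares installed package versions against a lockfile catches silent drift. This sketch handles only plain `name==version` lines (no extras, environment markers, or `--hash` options such as pip-tools can emit), the function names are hypothetical, and it relies on the standard library’s `importlib.metadata.version`.

```python
from importlib import metadata


def parse_pins(lock_text: str) -> dict:
    """Parse simple 'name==version' lines; comments and blank lines are ignored."""
    pins = {}
    for raw in lock_text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            raise ValueError(f"unpinned requirement: {line!r}")
        pins[name.strip().lower()] = version.strip()
    return pins


def check_pins(pins: dict) -> dict:
    """Return {name: (pinned, installed_or_None)} for every mismatch or missing package."""
    problems = {}
    for name, wanted in pins.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = (wanted, None)
            continue
        if installed != wanted:
            problems[name] = (wanted, installed)
    return problems
```

Running this before training or evaluation, and failing when the result is non-empty, turns “we pinned our environment” from a convention into a checked precondition.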

At the ecosystem level, reproducibility can help restore some of the confidence eroded by irreproducible claims. As reproducible generative AI models become more common, vendors will increasingly advertise reproducibility guarantees and customers will demand them. That dynamic will create market pressure to standardize evaluation metrics and audit practices, which benefits everyone trying to build reliable AI systems.

Final thought: reproducible generative AI models are a practical pathway to making generative systems safer and more transparent; they require patience and investment now, but they are likely to become a competitive and regulatory necessity in the near future.