Unlocking the Power of Reinforcement Learning Reasoning: How DeepSeek-R1 Revolutionizes AI Problem-Solving

- Aisha Washington

- Oct 2, 2025

Introduction

Artificial intelligence (AI) has made remarkable strides in recent years, particularly in reasoning and problem-solving. Traditionally, AI reasoning models relied heavily on supervised learning with human-labeled, step-by-step reasoning traces, which constrained their reasoning style to human patterns. Recent research from DeepSeek on its DeepSeek-R1 model takes a different approach: pure reinforcement learning (RL) with answer-only rewards. This technique lets a model discover its own reasoning strategies instead of copying human demonstrations, and it achieved state-of-the-art (SOTA) performance on tasks that require logical thinking, such as math, coding, and STEM problems. This article explores the fundamentals, importance, development, workings, practical applications, future prospects, and key takeaways of reinforcement learning reasoning as pioneered by DeepSeek-R1.

What Exactly Is Reinforcement Learning Reasoning?

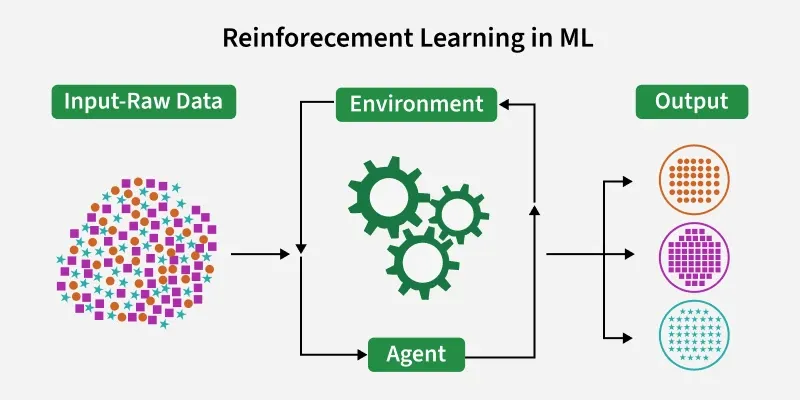

Reinforcement learning reasoning is a method in which AI models learn to reason by being rewarded for producing correct final answers rather than for following human-provided intermediate steps. Unlike traditional supervised learning, which trains models to mimic human reasoning pathways, this approach lets models generate their own reasoning sequences, guided only by whether the final answer is correct. A common assumption is that AI must replicate human logical steps to reason well; DeepSeek-R1's results challenge this by showing that, freed from human step-by-step traces, a model can develop effective and sometimes non-human reasoning strategies while still producing accurate answers.
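To make the idea concrete, here is a minimal Python sketch of an answer-only (outcome) reward. It assumes, as a hypothetical convention, that the model writes its final answer inside `\boxed{...}`; the function names `extract_boxed_answer` and `outcome_reward` are illustrative, not taken from DeepSeek's code. The reasoning text before the answer is never scored.

```python
import re
from typing import Optional


def extract_boxed_answer(completion: str) -> Optional[str]:
    """Return the contents of the last \\boxed{...} in the text, or None.

    Simplification: nested braces inside the box are not handled.
    """
    matches = re.findall(r"\\boxed\{([^{}]*)\}", completion)
    return matches[-1].strip() if matches else None


def outcome_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the final answer matches the reference, else 0.0.

    The intermediate reasoning is ignored entirely.
    """
    answer = extract_boxed_answer(completion)
    return 1.0 if answer is not None and answer == ground_truth.strip() else 0.0


# Three hypothetical traces for "What is 17 * 24?"
human_style = r"17 * 24 = 17 * 20 + 17 * 4 = 340 + 68 = 408, so \boxed{408}"
unusual_style = r"25 * 17 = 425; one 17 too many, so 425 - 17 = 408. Check: 408 / 24 = 17. \boxed{408}"
wrong = r"17 * 24 is roughly 17 * 25 = 425, call it 400. \boxed{400}"

for trace in (human_style, unusual_style, wrong):
    print(outcome_reward(trace, "408"))  # prints 1.0, 1.0, 0.0
```

Both correct traces earn the same reward even though they reason differently, which is the freedom the answer-only approach relies on.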

Why Is Reinforcement Learning Reasoning So Important?

This matters because it removes the ceiling imposed by human-labeled data. Models trained only to imitate examples tend to be bounded by the quality of those examples, and their reasoning styles stay limited to what humans demonstrated. With reinforcement learning reasoning, models can develop their own methods, including self-checking, verification, and changing strategy mid-solution, all of which are valuable for complex problems whose answers can be checked for correctness. The gains are substantial: during RL training, DeepSeek-R1-Zero's average pass@1 score on the AIME 2024 math benchmark rose from 15.6% to 77.9%, and reached 86.7% with self-consistency decoding. This not only improves AI's usefulness in research and industry but also sets the stage for more autonomous AI problem-solving.
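pass@1 and self-consistency are easy to confuse. The sketch below assumes the common definitions: pass@1 is the fraction of sampled answers that are correct, averaged over problems, while self-consistency takes a majority vote over the samples for each problem. The sample answers are made-up illustrations, not data from the paper.

```python
from collections import Counter


def pass_at_1(sampled_answers, reference):
    """Fraction of sampled answers that match the reference."""
    return sum(a == reference for a in sampled_answers) / len(sampled_answers)


def self_consistency(sampled_answers):
    """Majority vote: the most frequent sampled answer."""
    return Counter(sampled_answers).most_common(1)[0][0]


def benchmark_scores(problems):
    """problems: list of (sampled_answers, reference) pairs.

    Returns (average pass@1, majority-vote accuracy) across problems.
    """
    avg_pass1 = sum(pass_at_1(s, r) for s, r in problems) / len(problems)
    vote_acc = sum(self_consistency(s) == r for s, r in problems) / len(problems)
    return avg_pass1, vote_acc


# Made-up samples: 8 answers per problem.
problems = [
    (["408", "400", "408", "408", "412", "408", "400", "408"], "408"),  # 5/8 correct
    (["7", "3", "7", "5", "5", "5", "9", "5"], "7"),  # 2/8 correct
]
print(benchmark_scores(problems))  # (0.4375, 0.5)
```

Majority voting can score above average pass@1 because it recovers the right answer whenever correct samples form a plurality, even if many individual samples are wrong.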

The Evolution of Reinforcement Learning Reasoning: From Past to Present

Historically, AI reasoning relied on supervised learning in which human annotators wrote step-by-step reasoning traces for models to copy, much like teaching a student by showing every intermediate step. This approach capped the complexity of the reasoning a model could learn. Reinforcement learning reasoning marks a pivotal shift. Starting from the DeepSeek-V3 Base model, DeepSeek trained DeepSeek-R1 with GRPO (Group Relative Policy Optimization, a group-based variant of PPO). Optimizing for final-answer correctness alone removes the need for explicit human demonstrations and encourages new reasoning pathways. The work was published as a cover article in Nature.
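GRPO's central idea is that the baseline for each sampled answer comes from the other answers sampled for the same prompt, rather than from a separate learned value model. The sketch below is a simplified illustration under stated assumptions: it normalizes rewards with the population standard deviation, uses one log-probability per whole completion instead of per token, and omits the KL penalty toward a reference model. The function names are hypothetical.

```python
import math
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sample relative to the other samples for the same prompt."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std: an assumption, implementations vary
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_surrogate(logp_new, logp_old, advantages, clip_eps=0.2):
    """PPO-style clipped objective averaged over the group (to be maximized).

    Simplification: one log-probability per completion, no KL penalty.
    """
    terms = []
    for new, old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(new - old)  # pi_new(o | q) / pi_old(o | q)
        clipped = min(max(ratio, 1 - clip_eps), 1 + clip_eps)
        terms.append(min(ratio * adv, clipped * adv))
    return sum(terms) / len(terms)


# Four sampled completions for one prompt, scored with an answer-only reward.
rewards = [1.0, 0.0, 0.0, 1.0]
adv = group_relative_advantages(rewards)
print([round(a, 3) for a in adv])  # [1.0, -1.0, -1.0, 1.0]
```

Correct completions receive positive advantages and incorrect ones negative, with no critic network involved. If every completion in a group earns the same reward, all advantages are zero and that group provides no learning signal.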

How Reinforcement Learning Reasoning Works: A Step-by-Step Reveal

The training pipeline for DeepSeek-R1 can be summarized as follows:

Pure RL Training (R1-Zero):

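Starting from the base model, training uses only rule-based accuracy and format rewards. The accuracy reward is granted when the final answer matches the ground truth, and the format reward checks that the output follows the required structure (for example, reasoning enclosed in designated tags). No human reasoning traces are used, which encourages exploration of diverse reasoning styles.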

Supervised Fine-Tuning (Dev1):

A small set of human-verified examples is then used for supervised fine-tuning, improving the readability and fluency of the model's output while preserving the reasoning abilities learned through RL.

Rule-Based RL (Dev2):

The model undergoes further RL training, incorporating rule-based constraints to refine answer quality and compliance.
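The rule-based rewards in the stages above can be sketched in Python. This assumes a hypothetical output template of `<think>...</think><answer>...</answer>`; the weights, function names, and the language-consistency term (a crude proxy that counts ASCII words in the reasoning, standing in for "words in the target language") are illustrative assumptions, one example of a rule-based constraint rather than DeepSeek's implementation.

```python
import re

# Hypothetical template: reasoning in <think> tags, final answer in <answer> tags.
TEMPLATE = re.compile(
    r"^\s*<think>(.*?)</think>\s*<answer>(.*?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the template, else 0.0."""
    return 1.0 if TEMPLATE.match(completion) else 0.0


def accuracy_reward(completion: str, ground_truth: str) -> float:
    """1.0 if the text inside <answer> matches the reference exactly."""
    m = TEMPLATE.match(completion)
    if m is None:
        return 0.0
    return 1.0 if m.group(2).strip() == ground_truth.strip() else 0.0


def language_consistency(completion: str) -> float:
    """Crude, hypothetical proxy: share of reasoning words that are pure ASCII."""
    m = TEMPLATE.match(completion)
    if m is None:
        return 0.0
    words = m.group(1).split()
    if not words:
        return 0.0
    return sum(w.isascii() for w in words) / len(words)


def rule_based_reward(completion, ground_truth, w_format=0.5, w_lang=0.1):
    """Weighted sum of the three rule-based terms (weights are illustrative)."""
    return (
        accuracy_reward(completion, ground_truth)
        + w_format * format_reward(completion)
        + w_lang * language_consistency(completion)
    )


good = "<think>17 * 24 = 340 + 68 = 408</think><answer>408</answer>"
mixed = "<think>17 * 24 等于 408</think><answer>408</answer>"
unformatted = "The answer is 408."
for c in (good, mixed, unformatted):
    print(round(rule_based_reward(c, "408"), 2))  # prints 1.6, 1.58, 0.0
```

Because every term is computed by a fixed rule, the reward is cheap to evaluate and hard for the model to game, at the cost of only working for outputs that can be checked mechanically.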

Reflection, verification, and backup strategies first emerged during the pure RL stage, and the multi-stage pipeline retains them while making the output easier to read. The resulting model can check its own work and switch tactics mid-solution when needed. These capabilities go beyond traditional supervised approaches, which were restricted to mimicking human reasoning sequences.

How to Apply Reinforcement Learning Reasoning in Real Life

The advances in reinforcement learning reasoning have significant practical implications:

Mathematics and STEM Fields:

AI can solve complex problems with higher accuracy by employing adaptive reasoning without human-designed step traces.

Coding and Software Development:

Improved AI can autonomously debug, optimize, and generate code with advanced reasoning strategies.

Education:

Tools powered by reinforcement learning reasoning can offer novel tutoring approaches where AI develops personalized reasoning pathways for learners.

Research Automation:

Automating scientific discovery becomes more feasible as AI models devise their own methods for problems whose answers can be verified.

Organizations seeking to leverage these capabilities should focus on integrating reinforcement learning frameworks that reward answer correctness, employ staged training pipelines, and allow the model flexibility to explore reasoning paths.
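The following toy sketch shows the shape of an answer-only RL loop. It is deliberately tiny and hypothetical: instead of a language model, the "policy" is a softmax over three solution strategies for single-digit addition, and only the final answer is rewarded. Advantages are computed relative to each sampled group, in the spirit of GRPO, but there is no clipping or KL term, and none of the names come from a real framework.

```python
import math
import random
from statistics import mean, pstdev

N_STRATEGIES = 3  # 0: add (correct), 1: multiply, 2: random guess


def softmax(logits):
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def solve(strategy, a, b, rng):
    """Hypothetical solution strategies for the task 'compute a + b'."""
    if strategy == 0:
        return a + b
    if strategy == 1:
        return a * b  # right only by coincidence (e.g. 2 + 2 == 2 * 2)
    return rng.randint(0, 18)


def train(steps=200, group_size=8, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = [0.0] * N_STRATEGIES
    for _ in range(steps):
        a, b = rng.randint(0, 9), rng.randint(0, 9)
        probs = softmax(logits)
        # Sample a group of attempts at the same problem.
        group = rng.choices(range(N_STRATEGIES), weights=probs, k=group_size)
        # Answer-only reward: 1 if the final answer is right, else 0.
        rewards = [1.0 if solve(s, a, b, rng) == a + b else 0.0 for s in group]
        sigma = pstdev(rewards)
        if sigma == 0:
            continue  # identical rewards carry no group-relative signal
        mu = mean(rewards)
        for s, r in zip(group, rewards):
            adv = (r - mu) / sigma
            # Policy-gradient step: d log softmax(logits)[s] / d logits[k]
            # equals (1 if k == s else 0) - probs[k].
            for k in range(N_STRATEGIES):
                indicator = 1.0 if k == s else 0.0
                logits[k] += lr * adv * (indicator - probs[k]) / group_size
    return softmax(logits)


final_probs = train()
print([round(p, 3) for p in final_probs])
```

The policy is never told which strategy is correct; probability mass shifts toward `add` only because its answers keep earning reward. Real systems replace the three-way choice with free-form generated reasoning, but the loop of sampling, scoring final answers, and reinforcing relative winners has the same structure.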

The Future of Reinforcement Learning Reasoning: Opportunities and Challenges

Looking ahead, reinforcement learning reasoning holds tremendous promise but also faces challenges:

Opportunities: Potential to surpass human-level reasoning in specialized domains, accelerate AI-driven scientific breakthroughs, and enable more robust and creative AI applications.

Challenges: Balancing exploratory reasoning with reliability, ensuring interpretability of AI reasoning paths, and managing computational costs of reinforcement training at scale.

As AI continues to evolve, frameworks like DeepSeek-R1 pave the way for models that not only answer correctly but reason with an unprecedented depth of autonomy and flexibility.

Conclusion: Key Takeaways on Reinforcement Learning Reasoning

Reinforcement learning reasoning represents a paradigm shift in AI development, removing dependency on human step-by-step guidance and enabling models to invent unique, effective reasoning strategies. DeepSeek-R1 exemplifies this by achieving state-of-the-art results in tasks requiring accurate and adaptable problem-solving skills. The future of AI reasoning will likely be defined by such autonomous learning techniques, opening new possibilities across industries and research fields.

Frequently Asked Questions (FAQ) about Reinforcement Learning Reasoning

What is reinforcement learning reasoning?

It is an AI training method where models learn to reason by receiving rewards only for correct final answers, without relying on human-labeled intermediate steps.

What are the practical challenges of reinforcement learning reasoning?

Challenges include ensuring training efficiency, maintaining output readability, and balancing exploration with solution reliability.

How does reinforcement learning reasoning compare to supervised learning?

Unlike supervised learning that mimics human reasoning steps, reinforcement learning reasoning allows models to discover their own reasoning strategies, potentially exceeding human capabilities.

How can one start using reinforcement learning reasoning in AI projects?

Begin with a strong pretrained base model, design reward systems focused on answer correctness, and use a staged training pipeline that includes fine-tuning for output quality.

What does the future hold for reinforcement learning reasoning?

Future prospects include AI surpassing human reasoning in specialized tasks, though challenges in interpretability and computational demand remain key focus areas.