Albania Appoints AI Bot 'Diella' as First Virtual Minister to Combat Corruption

- Ethan Carter

- Sep 15, 2025

What happened and why it matters for tech and governance

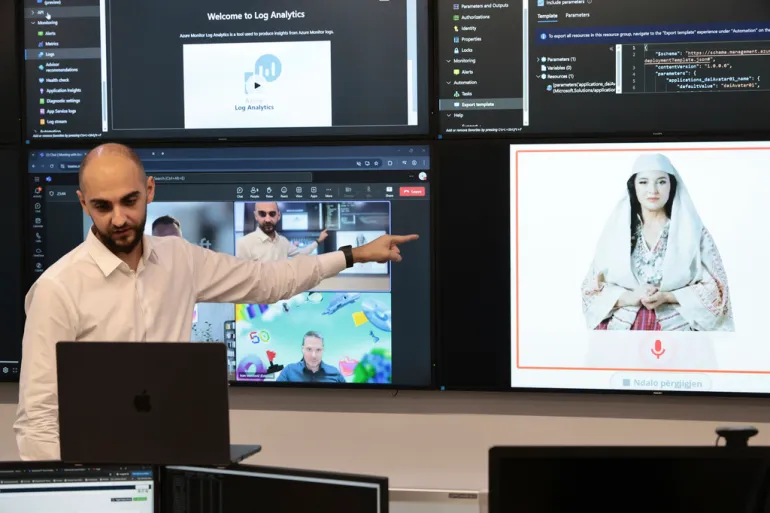

In mid‑September 2025 Albania made headlines by formally appointing an artificial intelligence chatbot named Diella as a virtual minister with a mandate to monitor and flag corruption risks in public procurement. News outlets widely reported the move as a world first: a government giving an AI system an official ministerial title and a public mandate to help detect irregularities in how contracts are awarded and executed.

The appointment matters beyond its media novelty. Public procurement is a major source of waste and illicit gain in many economies; tools that improve transparency, timeliness of detection, and audit trails can materially change how oversight teams prioritize cases. At the same time, assigning an AI an official role sets a precedent about how governments can integrate automated systems into decision pathways and accountability structures. Coverage emphasized the practical implications for procurement integrity and the precedent‑setting nature of the appointment.

This article walks through what Diella does, the technical approach reported so far, how the rollout is structured, how Diella compares to human‑led audits and previous AI pilots, and what early uses and developer lessons reveal. It also summarizes the main governance, bias, and security challenges flagged by researchers and reporters, and it answers common technical and policy questions readers are likely to have.

Feature breakdown and technical overview of Diella

Core functions: continuous monitoring, anomaly detection, and public access

Diella was described in official briefings and media reports as a multi‑role system designed to scan public procurement activity and make findings accessible to both oversight teams and the public. Its headline capabilities include continuous monitoring of procurement tenders, automated anomaly detection and flagging, a public‑facing chatbot interface for inquiries, and generation of detailed logs and audit trails intended to support subsequent investigations. News reports summarized these core functions and the system’s public transparency role.

A few operational features were repeatedly highlighted: Diella runs 24/7, integrates with national procurement registries, looks for suspicious bidding patterns (for example, repeated winner‑concentrations, last‑minute tender changes, or bid submissions from related entities), and surfaces possible conflicts of interest. Where Diella flags a case, those findings are routed to human oversight teams rather than being treated as final determinations.
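To make the "related entities" pattern concrete, here is a minimal sketch of how bidders sharing an owner could be grouped and matched against tenders. It is an illustration only, not Diella's reported implementation: the function names, the `ownership` mapping, and the idea that ownership data is available in this shape are all assumptions.

```python
from collections import defaultdict


def group_related_bidders(ownership):
    """Group bidders that share an owner, directly or transitively.

    `ownership` (hypothetical input) maps a bidder name to a set of owner IDs.
    Returns a list of sets, each holding two or more related bidders.
    """
    parent = {bidder: bidder for bidder in ownership}

    def find(x):
        # Follow parent links to the root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        parent[find(a)] = find(b)

    # Link each bidder to the first bidder seen with the same owner.
    first_bidder_for_owner = {}
    for bidder, owners in ownership.items():
        for owner in owners:
            if owner in first_bidder_for_owner:
                union(bidder, first_bidder_for_owner[owner])
            else:
                first_bidder_for_owner[owner] = bidder

    groups = defaultdict(set)
    for bidder in ownership:
        groups[find(bidder)].add(bidder)
    return [members for members in groups.values() if len(members) > 1]


def tenders_with_related_bids(tender_bids, related_groups):
    """Return tender IDs where two or more bidders fall in the same group."""
    flagged = []
    for tender_id, bidders in tender_bids.items():
        bidder_set = set(bidders)
        if any(len(bidder_set & group) >= 2 for group in related_groups):
            flagged.append(tender_id)
    return flagged
```

In this sketch, a tender where bidders A and C both trace back to a common owner through an intermediate company would be surfaced for human review, while a tender with unrelated bidders would not.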

Insight: Diella is designed as a triage and transparency tool — it accelerates discovery and builds evidence trails while preserving human authority for investigations and legal action.

User‑facing interactions: chat and dashboards

For non‑technical users, Diella is reachable via a chatbot interface that can answer queries about specific tenders, explain why a contract was flagged, and provide historical procurement context for specific suppliers or contracting authorities. Procurement officers and oversight bodies get richer dashboards and downloadable reports to support follow‑up. Early descriptions indicate that flagged cases are accompanied by contextual data (e.g., bid histories, bidder networks) to make human review more efficient. Media coverage described the chatbot and dashboard channels available to citizens and officials.

Limits and guardrails

Importantly, reporters and analysts stressed that Diella is a virtual minister in title and function, not a legal arbiter. Its outputs are advisory: flagged anomalies are meant to prompt audits, human interviews, or forensic review. The government emphasized human‑in‑the‑loop oversight to validate findings and make prosecutorial or administrative decisions. AP News and other outlets noted the advisory role and human oversight design.

Key takeaway: Diella combines automated monitoring and public transparency, but current reporting frames it as an augmenting tool — not a replacement for human judgment.

Technical architecture, data sources, and reported performance

High‑level architecture and data flow

Public reporting described Diella as a layered system that ingests procurement records, applies rule‑based filters and machine learning models to detect anomalies, and exposes findings through a natural language interface and administrative dashboards. The architecture reportedly includes:

- Data ingestion connectors to national procurement registries and contract databases.
- Preprocessing and standardization pipelines to normalize tender metadata.
- Rule engines that codify known red flags (e.g., single‑bid awards, subcontracting patterns).
- Machine learning modules for outlier detection and network analysis (for identifying related bidders).
- A chatbot front end using natural language processing (NLP) to field public queries.
- Secure logging and audit trails to preserve provenance for investigations.
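The rule‑engine layer in the list above can be sketched in a few lines. This is a hedged illustration under stated assumptions: the `Tender` and `Flag` record shapes, the rule names, and the 60% concentration threshold are hypothetical and are not taken from any published description of Diella.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Tender:
    tender_id: str
    authority: str
    winner: str
    bid_count: int


@dataclass
class Flag:
    tender_id: str
    rule: str
    reason: str  # human-readable explanation for reviewers


def single_bid_rule(tender):
    """Flag awards that attracted only one bid."""
    if tender.bid_count == 1:
        return Flag(tender.tender_id, "single_bid", "award made with only one bid")
    return None


def make_concentration_rule(tenders, max_share=0.6, min_awards=3):
    """Build a rule flagging awards to a supplier that wins more than
    `max_share` of one authority's awards (thresholds are assumptions)."""
    totals = Counter(t.authority for t in tenders)
    wins = Counter((t.authority, t.winner) for t in tenders)

    def rule(tender):
        total = totals[tender.authority]
        if total < min_awards:
            return None  # too little history to judge concentration
        share = wins[(tender.authority, tender.winner)] / total
        if share > max_share:
            reason = (f"{tender.winner} won {share:.0%} of {total} "
                      f"awards by {tender.authority}")
            return Flag(tender.tender_id, "winner_concentration", reason)
        return None

    return rule


def run_rules(tenders, rules):
    """Apply every rule to every tender and collect the resulting flags."""
    flags = []
    for tender in tenders:
        for rule in rules:
            flag = rule(tender)
            if flag is not None:
                flags.append(flag)
    return flags
```

Each flag carries a plain‑language reason, which matches the reporting's emphasis on giving human reviewers context rather than a bare score.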

Defining terms: "Anomaly detection" here refers to methods that identify events or patterns in procurement data that deviate from expected norms. "Human‑in‑the‑loop" means humans validate and act on AI outputs rather than the AI making unilateral legal decisions.

Data sources and how detection is triggered

Diella’s primary data source is the national public procurement registry — formal tender notices, bids submitted, award records, and contract execution reports. Detection is reportedly triggered through a combination of:

- Rule‑based triggers (hard thresholds or compliance rules).
- Statistical outlier scoring (transactions that deviate from historical baselines).
- Network and graph analysis (common ownership, repeated associations).
- Textual NLP analysis of tender documents for suspicious clauses or unusual procurement descriptions.
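As an illustration of statistical outlier scoring against a historical baseline, the sketch below uses a modified z‑score based on the median and the median absolute deviation (MAD). The technique is a common, generic choice and is an assumption here: public reporting does not say which scoring method Diella uses, and the 3.5 cutoff is a conventional default rather than a reported setting.

```python
from statistics import median


def robust_scores(values):
    """Return a modified z-score for each value, using median and MAD.

    The 0.6745 constant rescales MAD so scores are comparable to ordinary
    z-scores when the data are roughly normal.
    """
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # At least half the values equal the median; scores are undefined,
        # so report no deviation rather than divide by zero.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose absolute score exceeds the threshold."""
    scores = robust_scores(values)
    return [i for i, score in enumerate(scores) if abs(score) > threshold]
```

For example, given historical contract prices of 100, 102, 98, 101, and 99 for comparable items, a new award at 250 would be the only value flagged. A median‑based score is used instead of a mean‑based one so that the outlier itself does not distort the baseline.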

Journalists and analysts have highlighted that the system’s effectiveness depends critically on data quality and standardization, which is why initial deployments prioritized high‑risk procurement categories where records are more complete. Academics have emphasized the need for clean, standardized procurement data for reliable AI detection.

Reported accuracy, testing, and validation

Public reporting so far describes early indicators and illustrative examples rather than fully validated accuracy metrics. Officials and researchers caution that no definitive public accuracy numbers have been released; the system is described as an early‑stage deployment that will require independent validation, continuous retraining, and calibrated thresholds to manage false positives and false negatives. Coverage stressed the need for independent evaluation and ongoing testing.
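The tradeoff between false positives and false negatives can be made concrete with a small calculation. The sketch below assumes a hypothetical labeled evaluation set, where each past tender has a model score and a human‑confirmed outcome; no such dataset or metric has been published for Diella, so the function names and inputs are illustrative only.

```python
def confusion_counts(scores, labels, threshold):
    """Count outcomes when every score >= threshold is treated as a flag.

    `labels` holds True where human review confirmed an irregularity.
    Returns (true_pos, false_pos, false_neg, true_neg).
    """
    tp = fp = fn = tn = 0
    for score, is_irregular in zip(scores, labels):
        flagged = score >= threshold
        if flagged and is_irregular:
            tp += 1
        elif flagged and not is_irregular:
            fp += 1
        elif not flagged and is_irregular:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall(scores, labels, threshold):
    """Precision: share of flags that were real. Recall: share of real
    irregularities that were flagged. Both are 0.0 when undefined."""
    tp, fp, fn, _ = confusion_counts(scores, labels, threshold)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall
```

Raising the threshold typically trades recall for precision: fewer innocent suppliers are flagged, but more genuine irregularities slip through. Calibrating that threshold is a policy decision as much as a technical one.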

Security and resilience are also emphasized: secure data protections, logging for auditability, and procedures to prevent electoral or operational interference are reported priorities. Media reports and academic commentaries note the requirement for continuous model updates to keep pace with evolving corrupt practices and data drift. Euronews and other outlets covered these resilience and security concerns.

Key takeaway: The technical stack is built around procurement data ingestion, hybrid rule/ML detection, and public interfaces — but measurable efficacy remains to be independently demonstrated.

Rollout, access, and governance arrangements

Phased deployment and who can use Diella

Diella’s public appointment in September 2025 was coupled with an immediate scope focused on national public procurement systems and a phased integration plan with central registries and select ministries. Initial access layers include a public chatbot for citizens, dashboards for procurement officers, and tailored reports for anti‑corruption units. Al Jazeera and AP News reported the appointment date and the phased rollout approach.

The rollout logic follows familiar product‑deployment patterns: start with high‑risk procurement categories and systems where the data architecture is already relatively mature, validate outputs with oversight teams, then expand scope only after protocol, legal, and regulatory checks. Early communications emphasized that citizens can query Diella’s public chatbot to look up tender histories or see why a contract was flagged, while officials receive deeper analytics and exportable evidence packages.

Governance, accountability, and cost

As a government initiative, Diella is not a paid consumer product, and citizens pay nothing to use it. Coverage described governance arrangements that hold human ministers and oversight bodies squarely responsible for follow‑up investigations and accountability. The government framed Diella as a ministerial advisor that produces transparent records to support human action. Euronews and The Week covered these governance and accountability frameworks.

Governance features reportedly include mandatory human review of flagged cases before escalation, audit logging to record the chain of evidence, and an intention to publish aggregate performance metrics and case outcomes as pilot metrics become available.
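One common way to make an audit log tamper‑evident is to chain entries by hash, so that altering any earlier record invalidates everything after it. The sketch below shows that general technique; it is an assumption for illustration, since reporting does not describe how Diella's audit logging is actually built, and the field names are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(prev_hash, payload):
    """Hash the previous entry's hash together with a canonical payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log, payload):
    """Append a payload (a JSON-serializable dict) to the chained log."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {
        "payload": payload,
        "prev_hash": prev_hash,
        "hash": _entry_hash(prev_hash, payload),
    }
    log.append(entry)
    return entry


def verify_log(log):
    """Return True only if every entry links to and matches its predecessor."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this detects edits but does not prevent them; in practice the latest hash would also need to be stored or published somewhere the log's operator cannot quietly rewrite.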

Practical constraints and scale

Early deployment is deliberately constrained: the system is first applied to procurement categories judged high risk, and expansion is tied to validation and possible legislative alignment. Operational constraints include legacy system integration complexity, uneven data completeness across ministries, and the need for staff training so officials can interpret and act on Diella’s outputs.

Key takeaway: The rollout intentionally balances transparency and control (public access plus human accountability) while phasing expansion to mitigate operational and legal risks.

Comparison with traditional oversight and global alternatives

What makes Diella a "world first"

Diella’s novelty is not that AI is being used for procurement oversight, since many governments have piloted algorithms for fraud detection, but that Albania has formally conferred a ministerial identity on the system and publicly placed it in a continuous monitoring and advisory role. Previous efforts in other countries were typically internal pilot tools, advisory systems, or discrete contract‑risk modules; none were reported to hold an official ministerial appointment. Media commentary framed Diella as a precedent and contrasted it with prior AI pilots.

Speed and scope: automated monitoring versus periodic human audits

One clear operational contrast is cadence. Traditional oversight often depends on periodic audits, whistleblower tips, or manual review processes that can take weeks or months. Automated monitoring provides near‑real‑time triage, which can accelerate detection and evidence collection. That said, automated systems are heavily dependent on data completeness and model validation — poor data quality can yield misleading signals.

Industry and academic observers emphasize that automation can reduce detection latency but not eliminate the need for human expertise in contextual interpretation and legal adjudication. AP News and academic sources highlighted the complementary nature of AI detection and human investigation.

Alternatives and tradeoffs

Alternative strategies include strengthening human audit capacity, improving procurement law enforcement, and implementing open‑data transparency initiatives that empower journalists and civic technologists. AI offers scalability and pattern recognition advantages but introduces tradeoffs around explainability, bias, and the potential for over‑reliance on imperfect models. Coverage noted that other countries experimenting with AI in governance have proceeded cautiously, often prioritizing vendor‑agnostic pilots and independent evaluations. Euronews and The Week covered these global comparisons and tradeoffs.

Accountability in practice: who answers for Diella

A practical accountability question is how errors are remediated. Because Diella’s outputs are advisory, legal responsibility for decisions remains with human officials and institutions. The system’s value depends on clear escalation paths: automated flag → human triage → audit/investigation. Transparency in how flags are generated and logged is essential to prevent reputational damage from false positives and to allow redress when needed.
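The escalation path described above (automated flag, then human triage, then audit or investigation) can be modeled as a small state machine that refuses to skip the human step. This is a sketch under assumptions: the stage names, the `DISMISSED` outcome, and the requirement for a named reviewer are illustrative additions, not documented features of Diella.

```python
from enum import Enum


class Stage(Enum):
    FLAGGED = "flagged"
    HUMAN_TRIAGE = "human_triage"
    INVESTIGATION = "investigation"
    DISMISSED = "dismissed"  # assumed outcome for flags judged false positives


# Allowed transitions: an automated flag can only move to human triage.
ALLOWED = {
    Stage.FLAGGED: {Stage.HUMAN_TRIAGE},
    Stage.HUMAN_TRIAGE: {Stage.INVESTIGATION, Stage.DISMISSED},
    Stage.INVESTIGATION: set(),
    Stage.DISMISSED: set(),
}


class Case:
    def __init__(self, tender_id):
        self.tender_id = tender_id
        self.stage = Stage.FLAGGED
        # History records who moved the case into each stage.
        self.history = [(Stage.FLAGGED, "system")]

    def advance(self, new_stage, reviewer):
        """Move the case forward; every step past the flag needs a human."""
        if not reviewer:
            raise ValueError("a named human reviewer is required")
        if new_stage not in ALLOWED[self.stage]:
            raise ValueError(
                f"cannot move from {self.stage.value} to {new_stage.value}"
            )
        self.stage = new_stage
        self.history.append((new_stage, reviewer))
```

Encoding the path this way means a flag can never become an investigation without a recorded human decision, and the history gives a basis for redress if a flag turns out to be wrong.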

Early usage, developer impact, and operational challenges

Early case examples and operational effects

Initial media reports and podcast coverage described Diella scanning tenders, producing flagged lists for anti‑corruption units, and enabling faster triage of suspect contracts. These are early, illustrative examples rather than audited impact metrics: reporters noted specific procurement categories where the system highlighted repeated winners or unusual contracting sequences, which then fed into human review processes. Journalistic accounts provided case‑study style reporting on early flagged irregularities.

For procurement teams, Diella reportedly changed workflows by enabling faster prioritization of high‑risk tenders, supplying richer audit trails for investigators, and improving the traceability of oversight actions.

Developer lessons: data, explainability, and integration

Developers and researchers analyzing the deployment pointed to several recurring lessons:

- High‑quality, standardized procurement data is a prerequisite. Models are only as reliable as their inputs.
- Explainability matters: investigators need transparent reasons for why a case was flagged, and black‑box scores alone won’t win trust.
- Continuous retraining is required to manage concept drift as corrupt actors adapt.
- Integration with legacy systems is often the most time‑consuming operational task, requiring data mapping and security work.

Operational challenges that shape developer priorities

Operational friction points include uneven metadata standards across agencies, access restrictions to sensitive procurement details, and the need to balance openness with privacy. Developers also face institutional resistance in some units unaccustomed to data‑driven triage. Finally, the need for independent evaluation creates additional development overhead: logging, test harnesses, and reproducible evaluation datasets must be built from the start.

Key takeaway: Early usage shows operational promise but underscores that technical capability must be matched with data governance, explainability, and institutional change management.

FAQ

Q1: What exactly is Diella and what powers does it have?

Diella is an AI chatbot appointed as a virtual minister to monitor and flag corruption risks in public procurement. It automates continuous monitoring and produces advisory flags for human investigators; it does not make legal decisions or replace judicial processes. See reporting on the appointment and remit.

Q2: When did Albania launch Diella and where is it active?

The public appointment and launch were reported in September 2025. Initial activity is focused on the national public procurement ecosystem, with phased integration planned across ministries and central registries. Al Jazeera covered the launch and scope.

Q3: How accurate is Diella at detecting corruption?

No definitive public accuracy metrics have been published. Coverage and academic commentary stress that reported outcomes are early indicators and that independent validation and ongoing testing will be necessary to quantify true positive and false positive rates. Scholars have recommended independent evaluation and transparent methodology.

Q4: Can Diella make legal decisions or replace human ministers?

No. Diella’s role is advisory and monitoring: it flags irregularities for human oversight. Legal decisions, disciplinary actions, and prosecutions remain the responsibility of human authorities. News outlets emphasized the human‑in‑the‑loop model.

Q5: What are the main privacy and security concerns?

Main concerns include protecting sensitive procurement records, ensuring secure integrations with legacy systems, preserving audit logs, and preventing adversarial manipulation. Reported mitigation includes secure data pipelines, strict access controls, and auditability of logs. Tech coverage discussed security and resilience needs.

Q6: Will other countries adopt similar AI ministers?

Albania’s move is likely to be studied internationally as a precedent, but experts suggest other governments will proceed cautiously — favoring pilots, independent audits, and clear regulatory frameworks before assigning AI any formal ministerial or decision‑making roles. Commentary framed the appointment as a test case for broader adoption.

Q7: How can developers and policymakers evaluate Diella’s effectiveness?

Effective evaluation will require open performance metrics, independent audits, reproducible test datasets, and reporting on downstream outcomes (e.g., how many flagged cases led to meaningful investigations or recovered funds). Academics recommend pilot phases with rigorous validation, transparent methodology, and stakeholder engagement.

Forward‑looking implications for AI‑assisted governance and what to watch next

Diella’s public appointment is both symbolic and practical: symbolic because it reframes what an official minister can be in the digital age; practical because it puts concrete AI capabilities — continuous monitoring, automated triage, public transparency — into everyday governance workflows. In the coming months and years, observers will watch three linked indicators to judge whether this experiment moves from novelty to durable public value.

First, measured outcomes. Will Diella’s flags result in faster, higher‑quality investigations and demonstrable recoveries or sanctions? Reporters and researchers are looking for independent audits and metrics that go beyond raw flag counts to measure investigatory yield and reduced procurement leakage. Media coverage stressed the need for independent validation.

Second, transparency and explainability. For public trust to grow, Diella must surface not only that a tender was flagged but why (which features, patterns, or rules triggered the flag), and human reviewers must be able to trace a reproducible evidence trail. Scholars have recommended publishing methodology, test datasets, and performance summaries as part of pilot reporting. Academic commentary urged openness and reproducibility.

Third, governance and safeguards. The balance between automation and human responsibility will be decisive. Albania’s approach — public access combined with mandated human review and audit logging — is a pragmatic model, but it will need legal clarity, data protection safeguards, and independent oversight to avoid mission‑creep or misuse. International observers will be watching whether Albania establishes clear accountability lines and publishes lessons for replication.

In a broader sense, Diella offers a working example of AI‑assisted governance that others will study. For technologists and policy teams interested in similar deployments, the Albanian experiment underscores practical priorities: invest in data quality and integration, design explainable detection pipelines, maintain human oversight and legal clarity, and plan for independent, transparent evaluation.

There are real uncertainties. Models can drift, actors adapt, and governance frameworks may lag behind technical capability. Yet the experiment is valuable precisely because it forces those tradeoffs into the open: policymakers must now confront questions about evidence, error, redress, and civic communication.

As further updates arrive and pilot metrics are published, public servants, civic technologists, and researchers will have a clearer sense of whether AI ministers are a scalable model for improving transparency, or a cautionary example of how symbolic innovation must be tethered to rigorous evaluation. For now, Diella represents an instructive hinge between today's auditing practices and a future where AI‑assisted tools reshape how transparency and accountability are operationalized in government.