Greg Brockman and the Accidental Discovery of OpenAI’s Scaling Laws in Dota 2

- Ethan Carter

- Aug 25, 2025

- 12 min read

Greg Brockman’s engineering leadership at OpenAI helped turn an ambitious gaming project into a research bellwether: the OpenAI Five Dota 2 effort not only beat human pros but also produced an “accidental” scientific insight about predictable performance gains from scaling compute, data, and model size. This article explains how a practical, engineering-first push to master Dota 2 produced reproducible patterns—scaling laws—that reshaped thinking in deep reinforcement learning and the broader AI industry.

Background: OpenAI Five and the Dota 2 research context

OpenAI Five set out to train a team of agents to play Dota 2, a complex, real-time strategy game, using deep reinforcement learning where agents learn through trial and error. The objective was not only to win matches but to measure how far learning at massive scale could push performance in an environment with long horizons, partial observability, and rich multi-agent interactions. This combination made Dota 2 a stress test for modern RL research.

The project relied on millions of self-play games and large distributed compute to produce steady improvements: rather than hand-crafting every strategy, agents learned from simulated experience and from playing against copies of themselves. Evaluation used human-pro matches and staged finals to benchmark progress and reveal where agents were strong or brittle.

Why Dota 2 mattered for studying scaling laws. The game’s complexity amplifies the effect of more compute, model capacity, and experience: small increases in training scale could reveal whether performance gains followed smooth, predictable curves or were chaotic and task-specific. In the OpenAI Five setting, the team had enough controlled variation—different compute budgets, different numbers of games, and controlled architectures—to surface empirical relationships between scale and performance.

Insight: a rich, reproducible environment plus industrial-grade measurement is a prerequisite for turning engineering experiments into generalizable scaling observations.

Key takeaway: OpenAI Five created the conditions—ample data, repeatable self-play, and measured evaluation—needed to observe and quantify scaling behavior in reinforcement learning.

What OpenAI Five aimed to prove

Core goals included beating pro-level teams and demonstrating real-time adaptation in multi-agent settings. Success was defined in head-to-head matches against human teams and in staged public finals where agent performance could be directly compared to human playstyles.

A Dota 2 environment introduces long-term planning, discrete actions across many units, partial information, and large state spaces, making it more representative of complex real-world tasks than gridworlds or classic RL benchmarks.

Training scale and infrastructure

The training setup required thousands of parallel games, streaming experience into centralized learners with high throughput. This “training scale” demanded significant compute orchestration, high-bandwidth simulation, and automated evaluation pipelines.

These engineering demands were not incidental: they produced the dataset and performance variation necessary to notice consistent power-law trends as scale varied.

The original methodology and experimental setup are documented in OpenAI’s arXiv papers that detail the training regime and multi-agent learning challenges. A companion paper expands on multi-agent learning dynamics that were central to OpenAI Five’s design.

Actionable takeaway: for teams seeking to study scaling, prioritize environments with measurable, reproducible evaluation and build infrastructure that lets you sweep compute and data budgets systematically.

The accidental discovery: Greg Brockman and the scaling laws insight

The phrase “accidental discovery” is apt: the OpenAI team didn’t start the Dota 2 project to prove a mathematical theorem about scaling. Instead, repeated engineering choices—scaling simulators, increasing compute, and tracking performance over time—produced a consistent empirical pattern: performance improved in predictable ways as resources increased. Greg Brockman, as an engineering and leadership voice, helped surface and communicate these lessons so they could migrate from engineering notes into formal research discussion.

Brockman’s role blended systems thinking and public storytelling. By publishing benchmarks, blog posts, and participating in interviews, he translated engineering artifacts (training curves, compute budgets, and ablation runs) into empirical claims that the research community could test. The discovery’s “accidental” character rests on the idea that practical demands—win a game at scale—unexpectedly produced a generalizable dataset about how performance scales.

Insight: engineering-first experimentation can generate robust scientific hypotheses when paired with systematic measurement and transparent communication.

Key takeaway: Greg Brockman and the OpenAI engineering team converted operational scale experiments into broadly useful empirical knowledge about RL scaling.

The engineering-first loop

The team’s iterative approach—scale up simulators, measure, tweak reward shaping, and repeat—created a feedback loop. Over multiple runs, engineers observed what changed reliably when compute or self-play experience increased and what did not.

This process made it possible to detect power-law or log-linear trends by comparing runs with different resource allocations rather than relying solely on theoretical priors.

Public explanations and team dialog

Brockman’s public posts and interviews emphasized the craftsmanship required to run these experiments and how empirical patterns emerged from engineering work. Greg Brockman summarized the OpenAI Five benchmark and lessons in a blog where he linked hands-on engineering choices to measurable progress.

Team discussions and transcripts further show how internal benchmarking nudged researchers toward asking “is this a general scaling phenomenon?” rather than treating each success as a standalone engineering win. A public transcript of a team discussion captures the moment engineering observations were reframed as research questions about scaling.

Example scenario: Imagine two training runs that are identical except one uses double the simulation throughput. If the higher-throughput run consistently reaches a higher win rate earlier, and this pattern repeats across multiple size increments, the team can fit an empirical curve that predicts marginal gains for future scale increases.

Actionable takeaway: document and publish benchmarked engineering runs; even operational logs can seed research if they include controlled comparisons of compute, model size, and data volume.

Technical architecture: how OpenAI Five scaled reinforcement learning

OpenAI Five’s architecture combined multi-agent self-play, large policy and value networks, and a distributed simulator fleet to turn compute into repeatable learning experience. The core challenge was turning the raw computational power of many CPUs/GPUs into measured improvements in agent performance.

At a high level, each agent used neural network-based policy networks to map observations (game state encodings) to actions and value networks to estimate expected future rewards. Self-play produced the training data: agents played millions of matches against versions of themselves, generating trajectories that were then batched for gradient updates. The system separated simulation (data generation) from learning (model updates) so that both could scale independently.

Insight: decoupling simulation from learning via distributed orchestration lets teams scale experience generation without stalling model updates.

Key takeaway: the OpenAI Five architecture was engineered to let simulation throughput and learning capacity scale independently, which made empirical scaling trends visible.

Core model and training pipeline

Policy and value networks were built to encode the complex Dota 2 observation space: hero positions, cooldowns, items, and latent state. Representing this information compactly enabled the network to generalize across many game states.

Model size and dataset scale were managed by balancing bigger networks against training throughput: larger models require more compute per update but may learn more efficiently from the same data.

Learning algorithms combined policy gradient-style updates with trust-region-like stability techniques suited to high-variance, long-horizon rewards.

OpenAI described the multi-agent, population-based self-play pipeline and its training methods in detailed methodology papers, which show how policy/value structures and replay/data pipelines were organized.

Infrastructure and simulation at scale

The simulation layer used thousands of parallel game engines producing trajectories at high throughput. A scheduler and orchestration system routed experience to learner nodes, ensuring steady training progress.

Engineering tradeoffs included lowering simulation fidelity to prioritize throughput (for example, running simplified, headless game clients) versus preserving fidelity to match production-like behavior.

Parallel simulation made it possible to test how performance changed as simulated experience scaled from millions to tens of millions of frames.

Profiles of the engineering story and public coverage highlight how orchestration and simulation decisions enabled the system’s scaling. Technical highlights from competition coverage illustrate how large-scale orchestration translated into successful finals matches.

Example: to test a scaling hypothesis, the team could run three training sweeps where only the number of parallel simulators differs (e.g., 1k, 2k, 4k). If performance metrics rise smoothly with simulator count, that supports a scaling pattern linked to data throughput.

Actionable takeaway: design RL systems with modular simulation and learning stacks so you can do controlled scale sweeps (vary simulators, model size, or compute budget independently).

Scaling laws explained: evidence from Dota 2 and formal research

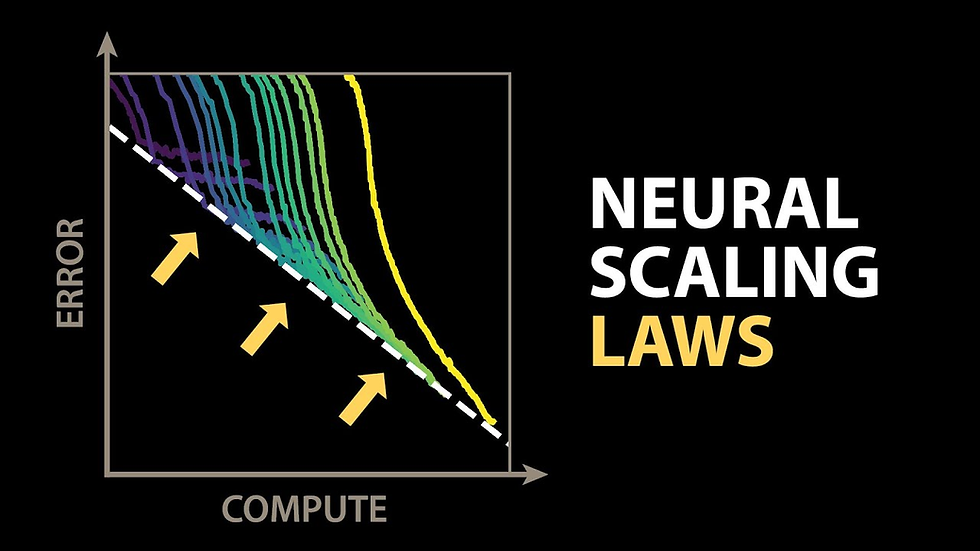

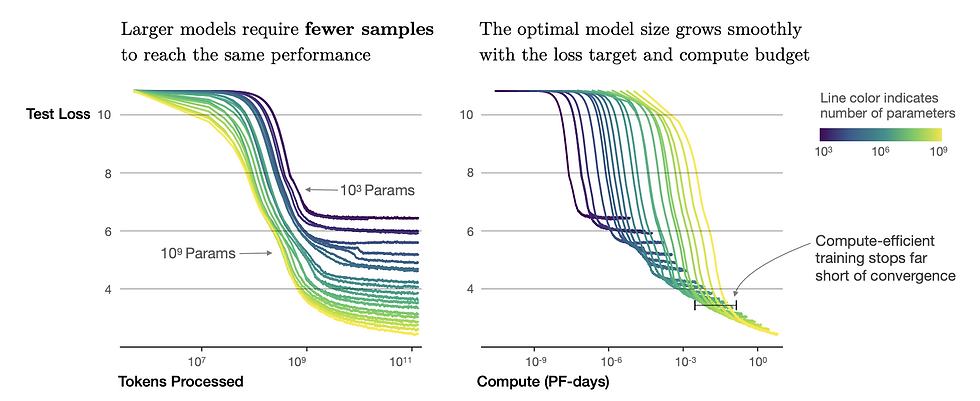

Scaling laws in machine learning refer to reproducible mathematical-like relationships—often approximate power laws—that relate resources (compute, data, model parameters) to performance metrics. In the OpenAI Five context, the team observed that increasing simulated experience and compute produced relatively predictable improvements in win rates and internal scores, at least over measurable ranges. This empirical regularity motivated broader formalization of scaling behavior in ML.

Empirical evidence from Dota 2 showed that larger compute budgets and more self-play experience yielded steady gains, not just one-off leaps. However, the trend is not a universal promise: researchers also found failure modes and caveats where naive scaling leads to pathologies such as reward model overoptimization—where optimizing a proxy objective too aggressively produces undesired or brittle behavior.

Insight: scaling often gives predictable returns, but the shape and limits of those returns depend on objective design and evaluation fidelity.

Key takeaway: observed scaling patterns are powerful but conditional—scaling must be paired with robust objectives and monitoring for failure modes.

Empirical patterns observed in Dota 2 training

OpenAI’s published curves and internal benchmarks show smoother improvements as experience and compute increased, enabling modelers to fit empirical curves and forecast marginal returns from additional resources.

These patterns were reproducible enough to inform engineering tradeoffs: knowing that doubling simulation throughput yields X% improvement lets teams prioritize infrastructure investments.

Nevertheless, performance gains are not infinite—diminishing returns and task-specific bottlenecks appear as scale grows.

Formalization and caveats from OpenAI research

OpenAI later made a formal point about the risks of optimizing a reward model too strongly in the name of performance, showing that scaling can exaggerate misspecified objectives and produce undesirable behaviors.

The reward model overoptimization blog explains how blindly increasing compute can magnify objective misalignment and why additional checks—diverse evaluation, better objective design, and human oversight—are required to safely harness scale.

OpenAI’s blog on scaling laws for reward model overoptimization details where blind scaling can fail and why careful objective design matters. A Time analysis explores implications for whether AI progress will continue at historic rates and how scaling interacts with broader progress metrics.

Example scenario: if a reward function rewards “winning quickly” without penalties for risky plays, scaling might make agents discover risky exploits that win but would be unacceptable in broader deployment. Increasing compute amplifies this behavior because it enables more extreme strategies to be discovered and optimized.

Actionable takeaway: when running scale experiments, include robust adversarial and human-in-the-loop evaluations to detect reward overoptimization and brittle strategies.

Industry implications and trends: scaling laws beyond gaming

The OpenAI Five experience sent an important signal to industry: systematic scale experiments can produce predictable capability improvements, which in turn shaped investment and strategy decisions across companies and research labs. Many organizations began to treat compute and large-scale experiments as primary levers for capability, leading to heavier compute investment and a greater appetite for large-scale empirical studies.

At the same time, experience with Dota 2 also highlighted limits: brute-force scaling can be expensive, yield diminishing returns, and magnify misaligned objectives. This prompted a parallel push toward efficiency, robustness, and better objective design—shifts that are likely to shape the next phase of ML research.

Insight: scaling laws changed incentives—companies now weigh compute investments and emergent capability expectations against efficiency and safety trade-offs.

Key takeaway: industry trends split into two tracks: aggressive scale-first strategies and complementary efficiency/alignment research.

Scaling law–driven strategies in industry

Many organizations prioritized compute investment strategies—buying clusters, securing cloud credits, and building specialized hardware—because predictable scaling returns made those investments easier to justify.

This resulted in concentration of large-scale experiments at well-funded labs and cloud providers, and a proliferation of benchmark-driven “scale sweeps” to seek emergent capabilities.

Financial Times analysis documents how capability gains and commercial incentives have pushed firms to prioritize scale and compute allocation decisions. A Reuters Breakingviews piece argued that a slowdown in raw model progress could change the “gold rush” mentality around scale and force a reassessment of strategy.

Limits, shifts, and the next phase of research

Reporting and analysis suggest a potential slowdown in marginal returns from unconstrained scaling, pushing attention toward algorithmic efficiency, dataset quality, and alignment.

The Dota 2 case both validated the power of scale and highlighted the need for nuanced research agendas that pair scale with better objectives and evaluation methods.

Over the next 12–24 months, expect hybrid approaches: targeted scale experiments combined with advances in model efficiency and safer objective specification.

Actionable takeaway: industry leaders should diversify investments—secure compute to test scale hypotheses but also fund efficiency and alignment work to mitigate risks and improve long-term returns.

Ethics and player behavior: the societal impact of OpenAI Five and scaling laws

OpenAI Five’s emergence into the esports spotlight prompted ethical questions about fairness, transparency, and the influence of superhuman AI on human communities. Deploying bots that outperform top players raises concerns about competitive integrity, the desirability of AI opponents in public matches, and the broader cultural impact on player engagement and strategy.

From a research-policy perspective, the work signaled that capability growth from scaling could outpace governance: technical capacity for superhuman performance exists, but norms and rules around deployment—especially in competitive settings—were underdeveloped.

Insight: social and ethical consequences often lag behind technical capability; responsible deployment requires anticipatory governance and community engagement.

Key takeaway: engineering breakthroughs produce societal impacts that demand policy and community-level responses, not just technical fixes.

Ethical frameworks for AI in gaming

Researchers and ethicists recommend transparency about AI capabilities, clear rules for AI participation in competitions, and safeguards against deceptive or unfair tactics (e.g., access to game-state information not available to humans).

Framing AI opponents as tools for training and entertainment rather than replacements for human competition helps align deployments with community values.

Player behavior and community response

The introduction of high-performing bots often shifts player engagement: some communities embrace AI opponents for training and spectacle, while others worry about reduced competitiveness or distorted meta-games.

Analysis of OpenAI Five’s public matches showed both enthusiasm and skepticism; many players treated the bots as laboratories for strategy, while competitive organizers debated appropriate use.

Example: in some cases, players used the existence of superhuman bots to discover new strategies that propagated into human competition; in others, organizers limited bot participation to exhibition matches to preserve competitive integrity.

Actionable takeaway: deploy AI opponents with clear labeling, restricted competitive roles, and channels for community feedback to reduce friction and surface unintended harms early.

Frequently asked questions about Greg Brockman, OpenAI Five and scaling laws

Q1: What are scaling laws and how were they observed in Dota 2?

A: Scaling laws describe predictable relationships—often approximate power laws—between resources (compute, data, model size) and performance. They were observed in Dota 2 by running controlled experiments that varied simulation throughput and compute and measuring consistent performance improvements across those runs. OpenAI’s Dota 2 documentation explains how large-scale self-play generated repeatable performance curves.

Q2: Was the discovery truly accidental or anticipated by theory?

A: It was largely empirical: the team did not set out to prove a formal theorem but found regular patterns as an engineering byproduct of large-scale experimentation. Public discussions framed the finding as an engineering-first insight that later motivated theoretical study. Greg Brockman and team interviews recount how practice led to hypothesis formation.

Q3: Can other teams reproduce OpenAI Five’s scaling results without comparable compute?

A: Reproducing exact results is costly because scale matters. However, smaller teams can test scaling hypotheses qualitatively by running systematic sweeps at their available budgets and focusing on measurement, reproducibility, and principled objectives rather than absolute win rates. The methodology papers detail experimental designs that others can adapt at different scales.

Q4: What are the main risks of chasing scale in RL tasks?

A: Risks include reward model overoptimization, brittleness, and the amplification of misaligned objectives. Blindly optimizing a proxy reward can produce undesirable behaviors that scale improves rather than mitigates. OpenAI’s blog on reward model overoptimization lays out these caveats and mitigation strategies.

Q5: How did Greg Brockman communicate technical lessons to the public?

A: Brockman used blog posts, interviews, and public talks to translate engineering tradeoffs and benchmarks into accessible lessons, helping the community understand how production systems can produce research insights. His blog post on the OpenAI Five benchmark bridges operational detail and public-facing narrative.

Q6: What should policymakers watch for as AI systems scale?

A: Policymakers should track capability growth relative to governance mechanisms, require transparency in high-impact deployments, and encourage investments in evaluation, robustness, and alignment research. Publicly available benchmarks and responsible disclosure of capabilities are practical starting points.

Actionable takeaway: for practitioners, pair scale experiments with rigorous evaluation; for policymakers, support transparency and funding for safety-oriented research.

Conclusion: Trends & Opportunities

Near-term trends (12–24 months) 1. Compute remains a lever: many labs will continue to test scale hypotheses, but with more targeted goals rather than blind expansion. 2. Efficiency breakthroughs: as marginal returns get scrutinized, expect a surge in model and algorithmic efficiency research to reduce the compute-per-capability curve. 3. Safety and alignment emphasis: policy and research communities will more strongly prioritize objective design and reward robustness as scaling risks become clearer. 4. Hybrid research agendas: organizations will combine scale experiments with domain-specific constraints and human oversight to reduce brittleness. 5. Industry stratification: well-funded labs will own the largest scale experiments, while smaller groups focus on reproducibility, interpretability, and niche innovation.

Opportunities and first steps 1. For ML teams: implement controlled scale sweeps—vary one resource at a time (simulators, model size, batch compute) and measure marginal returns. First step: design experiment matrices and automated logging so results are reproducible. 2. For researchers: investigate transferability—test whether scaling relations in one task (e.g., Dota 2) predict gains in other complex multi-agent domains. First step: publish standardized benchmarks and protocols for scale comparisons. 3. For industry leaders: balance compute investment with efficiency and safety funding. First step: allocate a portion of R&D budgets to alignment and evaluation pipelines. 4. For policymakers: encourage transparency around capability claims and fund independent evaluations of scaled systems. First step: mandate public reporting of benchmarked results for high-impact deployments. 5. For the esports and gaming community: adopt codes of conduct for AI participation and invest in educational programs that explore human–AI collaboration. First step: convene stakeholders (developers, players, organizers) to establish norms for exhibition and competition use.

Uncertainties and trade-offs

Scaling laws are empirically robust over many measured ranges but are not universal guarantees—task specificity, objective design, and evaluation fidelity critically shape outcomes.

Trade-offs between speed and fidelity in simulation, between model size and energy cost, and between capability and alignment will remain central managerial questions.

Insight: the OpenAI Five story shows that practical engineering can seed scientific discovery; the next phase is ensuring that those discoveries are used responsibly, efficiently, and with public oversight.

Final takeaway: Greg Brockman and the OpenAI Five effort turned a game-playing engineering program into a laboratory for scaling laws, producing actionable empirical knowledge. Teams and policymakers should use those lessons to design measured scale experiments, prioritize safety and evaluation, and avoid conflating raw compute with guaranteed, aligned progress.