Perplexity AI Silent Model Substitution: CEO Aravind Srinivas Responds to Pro User Accusations

- Ethan Carter

- Nov 8

A recent firestorm erupted in the Perplexity AI user community, sparked by growing frustration over the platform's model performance. Pro subscribers, paying for access to premium AI models, began noticing significant quality drops in responses, leading to accusations of silent model substitution—a practice where users' queries are secretly routed to cheaper, less capable models while the interface falsely claims a premium model is processing the request. As user trust plummeted and accusations mounted, CEO Aravind Srinivas personally addressed the community to explain the technical issue behind the Perplexity AI model substitution controversy. This article examines the AI model transparency concerns, the CEO's explanation of the bug, and why this incident matters for the broader AI industry.

Understanding the Silent Model Substitution Accusations Against Perplexity AI

The Perplexity AI model substitution controversy extends far beyond a simple technical glitch—it strikes at the core of the relationship between AI service providers and their paying customers. For Pro subscribers, the incident represented a fundamental breach of the platform's value proposition.

Why Perplexity AI Users Felt Deceived by Model Performance and Quality Degradation

The initial alarm wasn't raised by error logs but by a tangible decline in output quality. Users reported that the problem went beyond a mislabeled model indicator: the quality of AI responses had genuinely deteriorated. Responses that were supposed to come from powerful models like Claude Sonnet 4.5 were described as "objectively less coherent, less precise, and more generic". One user noted they had observed a decline over several months, suggesting this wasn't an isolated incident but a pattern.

This created a jarring expectation mismatch. Pro users who explicitly selected and paid for a top-tier model like Claude Sonnet 4.5 reasonably expected their queries to be processed by that exact model. The discovery that the platform was silently falling back to a substitute—potentially Claude Haiku 4.5, which costs approximately one-third of Sonnet 4.5's price—without user knowledge was described as unacceptable by the community.

Defining Silent Model Substitution and Its Impact on AI Service Trust

Silent model substitution refers to when a service provider, without user notification, routes a query to a different—and typically less powerful and cheaper—AI model than the one the user selected. This practice represents a profound breach of trust, especially for a premium AI search engine that claims to prioritize accuracy and reliability.

For users, this isn't a minor backend detail; it's the core product they're paying for. The incident was particularly damaging because it fed into pre-existing suspicions about whether AI companies are deliberately cutting costs to improve margins. Users pointed to a pattern of "glitches" that seemed to coincidentally benefit Perplexity's bottom line, such as auto-selecting less powerful models or other issues that appeared to reroute users to inferior models to reduce infrastructure costs.

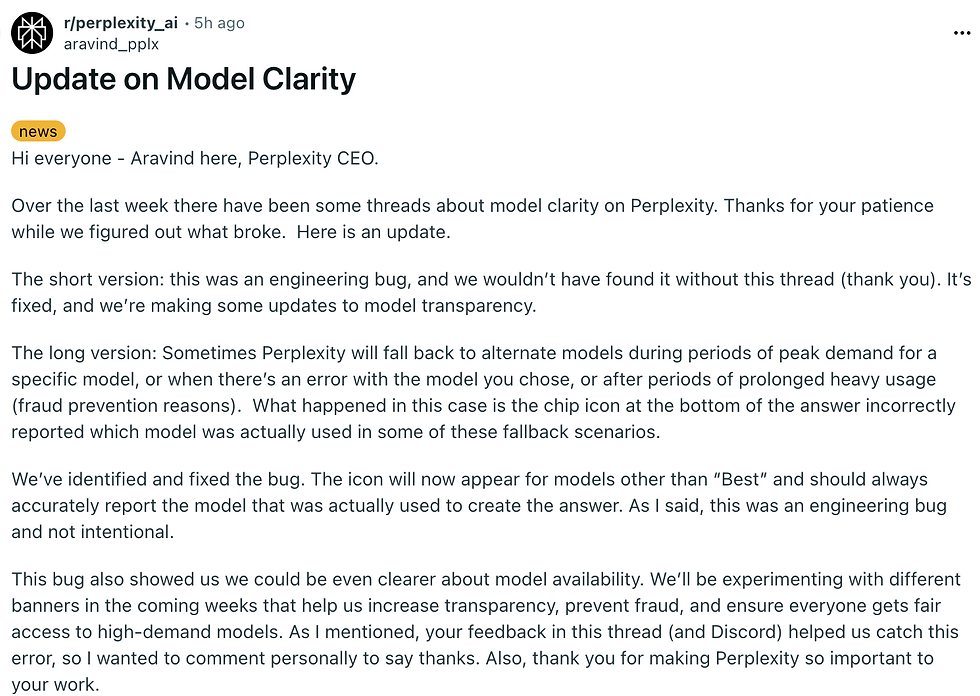

CEO Aravind Srinivas's Official Explanation of the Model Substitution Bug

In a direct response to the community, CEO Aravind Srinivas acknowledged the issue and offered an explanation for what occurred.

The Technical Details Behind the Perplexity AI Quality Degradation Issue

According to Srinivas, the problem was an engineering bug, not intentional model substitution. He explained that Perplexity sometimes uses fallback models for several reasons: during periods of "peak demand for a specific model," when there's an "error with the model you chose," or for "fraud prevention reasons" after prolonged heavy use. The bug, he stated, was that the chip icon at the bottom of the answer incorrectly reported which model was actually used in these fallback scenarios. In essence, the system was performing a designed fallback as intended, but the user interface failed to accurately reflect this change, creating a misleading experience for Pro subscribers.

This explanation distinguishes between two potential issues: (1) the silent model substitution mechanism itself, which Perplexity claims is a necessary fallback system, and (2) the UI reporting error, which the company framed as the actual bug requiring a fix.
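The difference between the fallback itself and the mislabeled icon can be made concrete with a small sketch. The Python below is illustrative only and is not Perplexity's code: `route`, `RoutingResult`, the two label functions, and the model names are all hypothetical, and only the three fallback reasons come from Srinivas's statement.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class FallbackReason(Enum):
    # The three reasons quoted in the CEO's explanation.
    PEAK_DEMAND = "peak demand for the selected model"
    MODEL_ERROR = "error with the selected model"
    FRAUD_PREVENTION = "fraud prevention after prolonged heavy use"


@dataclass
class RoutingResult:
    requested_model: str  # what the user picked
    served_model: str     # what actually generated the answer
    fallback_reason: Optional[FallbackReason] = None

    @property
    def was_substituted(self) -> bool:
        return self.served_model != self.requested_model


def route(requested: str, fallback: str,
          reason: Optional[FallbackReason]) -> RoutingResult:
    """Serve the requested model unless a fallback reason applies (hypothetical)."""
    served = fallback if reason is not None else requested
    return RoutingResult(requested, served, reason)


def buggy_label(result: RoutingResult) -> str:
    # The bug as described: the icon echoes the user's selection.
    return result.requested_model


def fixed_label(result: RoutingResult) -> str:
    # The fix as described: the icon reports the model that actually answered.
    return result.served_model


result = route("premium-model", "cheaper-model", FallbackReason.PEAK_DEMAND)
print(buggy_label(result))  # premium-model
print(fixed_label(result))  # cheaper-model
```

In this sketch the fix changes only what the label shows. The `route` function still substitutes the model whenever a reason is present, which is the part of the behavior many users objected to.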

Was the Silent Model Substitution Intentional? Aravind Srinivas's Position

The CEO was definitive in his stance that the lack of transparency was unintentional. He announced that the team had already "identified and fixed the bug," and moving forward, the icon should "always accurately report the model that was actually used." By framing the issue as a UI reporting error rather than a deliberate deception strategy, the company aimed to reassure users that the underlying intent wasn't malicious. However, this explanation highlighted a critical distinction: while Perplexity acknowledged the reporting error, it didn't commit to eliminating silent model substitution entirely.

User Backlash and Community Response to the CEO's Explanation

The CEO's explanation drew a divided response across the community. While some users were willing to accept the apology and move forward, a significant portion remained skeptical and demanded more than just a UI fix.

How the Community Responded to Aravind Srinivas

The reactions on Reddit and other platforms were mixed. Some users, particularly those with enterprise subscriptions, showed understanding. One user noted: "Yes, I seen this issue occur sometimes... Was it annoying? Sure. Did I grab a pitchfork and start screaming? No. I opened a support ticket and went on my way".

However, many others were not convinced by the "it was a bug" explanation. Skepticism ran high, with users cynically observing that whenever a "bug" occurs it seems to profit Perplexity, while no "bug" ever benefits users. Another commenter directly challenged the "fraud prevention" justification, viewing it as a convenient excuse. Many felt the company had been caught cutting costs and was now trying to save face.

Core Demands from Perplexity Pro Users for Restoring Trust

Beyond the anger and skepticism, the community articulated a clear set of demands for rebuilding trust. Users argued that fixing the display icon was insufficient; the real issue was the silent fallback mechanism itself. The community coalesced around three core principles:

- No silent model substitution: users must never be switched to a different model without their explicit knowledge and consent

- Clear warning when the selected model isn't used: if a fallback is necessary, the platform must prominently inform the user in advance

- A user-controlled setting to disable fallback entirely: Pro users want the ability to opt out of substitutions, even if it means waiting longer or receiving an error message
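The three demands above translate fairly directly into routing logic. The following Python is a minimal sketch under stated assumptions, not a description of any real Perplexity setting: the `allow_fallback` toggle, `answer_query`, and `ModelUnavailableError` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Optional


class ModelUnavailableError(Exception):
    """Raised instead of substituting when the user has disabled fallback."""


@dataclass
class UserSettings:
    allow_fallback: bool = True  # hypothetical opt-out toggle


@dataclass
class Answer:
    served_model: str
    warning: Optional[str] = None  # shown to the user when a fallback occurred


def answer_query(selected: str, fallback: str,
                 selected_available: bool, settings: UserSettings) -> Answer:
    # Normal case: the selected model handles the query, no warning needed.
    if selected_available:
        return Answer(served_model=selected)
    # The user opted out of substitutions: fail loudly rather than switch.
    if not settings.allow_fallback:
        raise ModelUnavailableError(
            f"{selected} is unavailable and fallback is disabled"
        )
    # Fallback is permitted, but it is never silent.
    return Answer(
        served_model=fallback,
        warning=f"{selected} is unavailable; this answer was generated by {fallback}.",
    )
```

This sketch attaches the warning to the answer. A stricter reading of the second demand would ask the user before running the query on the fallback model, which would need an extra confirmation step.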

Many users stated they would not renew their subscriptions, making it clear that their continued business was contingent on these specific transparency changes.

Beyond Perplexity AI: The Industry-Wide Need for AI Service Transparency

This incident, while specific to Perplexity AI, serves as a crucial case study for the entire AI industry, illuminating the ethical tightrope companies must walk between operational costs and user trust.

What the Silent Model Substitution Incident Reveals About AI Economics and Ethics

The Perplexity model substitution controversy underscores a larger, uncomfortable reality: running inference on state-of-the-art AI models is expensive and hard to sustain at current subscription price points. This financial pressure creates a powerful incentive to cut costs, sometimes in ways that aren't transparent to end-users.

Research into AI transparency and trust-building indicates that when companies lack transparency about AI system operations, users lose confidence in the service. Ethically, the incident raises a critical question: when does a cost-saving measure cross the line into deception? The overwhelming user response suggests that the line is crossed the moment a substitution happens silently. Being transparent about which AI model is generating results is not just good practice—it's essential for building consumer trust in AI systems.

The Future of Perplexity AI: Transparency Experiments and Rebuilding User Trust

Looking forward, CEO Aravind Srinivas acknowledged that the bug highlighted the need for greater clarity. He committed that the company would be "experimenting with different banners in the coming weeks that help us increase transparency" and ensure fair access to models. These experiments will be the real test of Perplexity's commitment to rebuilding its reputation. The technical fix is necessary, but the more difficult work of regaining user trust has just begun. The company's future success will likely depend on whether it adopts the user-demanded principles of explicit warnings and user control over model selection.

Conclusion: Rebuilding Trust at Perplexity AI After the Model Substitution Controversy

The saga of the Perplexity AI silent model substitution bug is a story of user vigilance meeting corporate operational reality. It began with Pro users noticing a decline in quality, escalated into accusations of deception, and culminated in a direct response from CEO Aravind Srinivas, who attributed the issue to an "engineering bug." While the UI fix is a necessary first step, it does not address the core user demand: an end to silent fallbacks.

The trust that was broken wasn't merely about a faulty icon; it was about the user's fundamental right to control and understand the service they pay for. As organizations increasingly deploy AI systems, transparency and explainability are becoming essential pillars for building trustworthy AI. The future of Perplexity AI's relationship with its most dedicated users now hinges not on cosmetic bug fixes, but on a genuine commitment to the radical transparency they are demanding. For the broader AI industry, this incident serves as a warning: users will hold AI service providers accountable for delivering the promised value without deception.

Frequently Asked Questions

What is silent model substitution at Perplexity AI?

Silent model substitution refers to the accusation that Perplexity AI routed user queries to cheaper, less capable AI models without notifying users, while the interface continued to display the premium model's name. For example, users suspected that queries ostensibly processed by Claude Sonnet 4.5 were actually handled by a fallback such as Claude Haiku 4.5, which costs significantly less to run but is less capable on complex reasoning tasks.

How did Aravind Srinivas explain the model quality degradation?

CEO Aravind Srinivas stated the issue was an "engineering bug" in which the UI failed to correctly display the model being used during fallback scenarios. He explained that fallbacks occur during peak demand, after model errors, or for fraud prevention reasons, but insisted the lack of transparency was unintentional.

What performance difference exists between Claude Sonnet 4.5 and Claude Haiku 4.5?

Claude Sonnet 4.5 is the more capable and more expensive of the two models, while Claude Haiku 4.5 achieves performance comparable to Claude Sonnet 4 (the previous generation). Haiku 4.5 costs approximately one-third of Sonnet 4.5's pricing while delivering near-frontier performance for many tasks.
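To see what a one-third price ratio means per query, here is a small worked calculation in Python. The per-token prices and token counts are assumptions chosen only to match the roughly one-third ratio cited above; they are not figures from this article, and the dictionary keys are placeholder labels rather than official model identifiers.

```python
# Assumed prices in USD per million tokens as (input, output).
# Illustrative values only; check the provider's current pricing.
PRICES = {
    "sonnet-4.5": (3.00, 15.00),
    "haiku-4.5": (1.00, 5.00),
}


def query_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one query at the assumed per-million-token prices."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


# A hypothetical query: 2,000 input tokens and 1,000 output tokens.
sonnet = query_cost("sonnet-4.5", 2_000, 1_000)
haiku = query_cost("haiku-4.5", 2_000, 1_000)

print(f"premium: ${sonnet:.4f}")          # premium: $0.0210
print(f"fallback: ${haiku:.4f}")          # fallback: $0.0070
print(f"ratio: {haiku / sonnet:.2f}")     # ratio: 0.33
```

Under these assumptions, every query served by the fallback costs the provider about two-thirds less, which is why users read silent substitutions as a cost-cutting measure.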

What are users demanding from Perplexity AI regarding model transparency?

The community has made three core demands: (1) an absolute end to silent model substitutions, (2) clear and explicit warnings when the chosen model is unavailable and a fallback is required, and (3) a user-controlled setting to disable fallbacks entirely, even if this results in an error or delay.

Why is this incident significant for the AI industry?

This incident highlights the fundamental tension between the high cost of running premium AI models and the financial pressure on companies to reduce expenses. It demonstrates how AI service transparency is critical for building user trust and preventing the perception of deceptive practices. The broader industry faces similar pressures and incentive structures.

Is the silent model substitution a sign of unsustainable AI pricing?

Industry observers have suggested that the incident reflects broader financial realities in the AI sector. The high operational cost of running powerful AI models creates financial pressure on companies to find cost-saving measures, though how these are implemented—transparently or silently—makes all the difference in user perception and trust.