Microsoft AI Safety Pledges Ring Hollow When Copilot Can’t Send an Email

- Olivia Johnson

- Dec 13, 2025

- 6 min read

Mustafa Suleyman, the CEO of Microsoft AI, recently made headlines with a stark promise: if the company’s artificial intelligence development poses a threat to humanity, Microsoft will stop working on it. This "break glass in case of emergency" policy is meant to reassure the public and regulators that Microsoft puts safety ahead of raw speed.

However, for the professionals and developers actually using Microsoft’s current suite of AI tools, this high-level philosophical debate feels disconnected from reality. While executives discuss the existential risks of superintelligence, daily users are wrestling with software that struggles to execute basic office tasks without hallucinations.

Real-World Friction: Why Microsoft AI Safety Feels Like a Distant Concern

Before analyzing the corporate pledges about the future of superintelligence, we need to look at the current state of the technology. Much of the skepticism surrounding Microsoft’s AI safety narrative stems from how unreliable today’s products are in everyday use.

Troubleshooting the Gap Between Microsoft AI Safety and Copilot Reliability

For many early adopters, the immediate danger isn't that AI will become sentient and take over; it’s that it will confidently mess up a spreadsheet or break a deployment pipeline.

Practical experience with Microsoft Copilot often reveals a "slot machine" dynamic. You pull the lever, and sometimes you get a perfect snippet of Python code. Other times, you get a hallucinated library that doesn't exist.

The Workflow Failure Point

A clear example of this friction occurs in "agentic" workflows, tasks where the AI is supposed to chain actions together. One common failure mode involves simple automation in Microsoft 365. Users who try to configure Copilot to read an email, parse its sentiment, and draft a reply based on their calendar availability often hit a wall.

In reported testing scenarios, Copilot frequently fails to bridge the gap between two applications. It might summarize the email correctly but fail to trigger the calendar lookup, or it might hallucinate a calendar slot that doesn’t exist. Users report setting up these "simple" logic chains several times, only to fall back to doing the task manually because correcting the errors took longer than the task itself.
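The missing safeguard in that chain is a check between steps: a proposed slot should only reach the draft reply if the calendar lookup actually returned it. The sketch below is a minimal illustration under that assumption. `Slot`, `free_slots`, `check_grounded`, and `draft_reply` are hypothetical names invented for this example; they are not Microsoft 365, Microsoft Graph, or Copilot APIs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# A minimal sketch of a "read email -> check calendar -> draft reply" chain.
# All names here are hypothetical; this is not a Microsoft 365 or Copilot API.


@dataclass(frozen=True)
class Slot:
    start: datetime
    end: datetime


def free_slots(busy, day_start, day_end, length):
    """Step 2: list fixed-length slots between day_start and day_end that overlap no busy slot."""
    slots = []
    t = day_start
    while t + length <= day_end:
        candidate = Slot(t, t + length)
        # Two intervals are disjoint if one ends before (or exactly when) the other starts.
        if all(candidate.end <= b.start or candidate.start >= b.end for b in busy):
            slots.append(candidate)
        t += length
    return slots


def check_grounded(proposed, available):
    """Guard between steps: refuse any slot the calendar lookup did not actually return."""
    if proposed not in available:
        raise ValueError(f"slot at {proposed.start:%H:%M} is not in the calendar results")
    return proposed


def draft_reply(sender, slot):
    """Step 3: build the reply only from a slot that passed the guard."""
    return f"Hi {sender}, I can meet {slot.start:%H:%M}-{slot.end:%H:%M}. Does that work?"


if __name__ == "__main__":
    day = datetime(2025, 12, 15)
    busy = [Slot(day.replace(hour=10), day.replace(hour=11))]
    available = free_slots(busy, day.replace(hour=9), day.replace(hour=12), timedelta(hours=1))

    # A slot the calendar really has passes the guard.
    print(draft_reply("Sam", check_grounded(available[0], available)))

    # A "hallucinated" 10:00 slot (the busy hour) is caught instead of being sent.
    try:
        check_grounded(Slot(day.replace(hour=10), day.replace(hour=11)), available)
    except ValueError as err:
        print("rejected:", err)
```

The guard does not make the model smarter; it only turns a silent hallucination into a visible error that a person can handle.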

The Context Window Limit

Developers have noted that while Copilot’s natural language parsing is excellent, its logical retention is poor. Ask Copilot to analyze a code block, and it works. Ask it to refactor that code based on a dependency file three folders up, and it often loses the plot. The AI safety conversation focuses on "runaway intelligence," but the user reality is "runaway stupidity": the AI confidently executes the wrong command because it has misunderstood the context of the project.
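One plausible mechanism, though certainly not the only one, is that the distant file never reaches the model at all. The sketch below assumes a naive assistant that ranks files by directory distance from the open file and packs them into a fixed token budget. We do not know how Copilot actually selects context; `directory_distance`, `build_context`, the file layout, and the token counts are all invented for illustration.

```python
# Hypothetical illustration only: we do not know how Copilot actually selects context.
# Assumed model: rank files by directory distance from the open file, then pack them
# into the prompt in that order until a fixed token budget is used up.


def directory_distance(a, b):
    """Directory hops between the folders containing file paths a and b ('/'-separated)."""
    da, db = a.split("/")[:-1], b.split("/")[:-1]
    common = 0
    for x, y in zip(da, db):
        if x != y:
            break
        common += 1
    return (len(da) - common) + (len(db) - common)


def build_context(open_file, token_counts, budget):
    """Pack the nearest files into the context; stop at the first file that does not fit."""
    ranked = sorted(token_counts, key=lambda f: directory_distance(open_file, f))
    chosen, used = [], 0
    for path in ranked:
        if used + token_counts[path] > budget:
            break
        chosen.append(path)
        used += token_counts[path]
    return chosen


# Invented repository layout and token counts.
files = {
    "services/api/handlers/orders.py": 1500,
    "services/api/handlers/payments.py": 1200,
    "services/api/models.py": 900,
    "pyproject.toml": 300,  # the dependency file, three folders above orders.py
}
print(build_context("services/api/handlers/orders.py", files, budget=3600))
# ['services/api/handlers/orders.py', 'services/api/handlers/payments.py', 'services/api/models.py']
```

Under these assumptions the model is asked to refactor against a dependency file it was never shown, so a confident but wrong answer is the expected outcome rather than a surprise.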

This creates a trust deficit. If the system cannot safely handle a "Reply All" without supervision, a promised kill switch for superintelligence feels like marketing fluff rather than a technical safeguard.

The Executive Vow: Mustafa Suleyman’s Stance on Microsoft AI Safety

Despite current limitations, Microsoft’s leadership is positioning the company as the responsible steward of the next generation of models. Mustafa Suleyman, a co-founder of DeepMind and now the head of Microsoft’s consumer AI division, has explicitly stated that safety is the absolute governor of the company’s release schedule.

Defining the "Halt" Conditions for Microsoft AI Safety

Suleyman’s pledge is explicit. He indicated that Microsoft is building "superintelligence": models with the potential to outperform humans across all tasks. The core tenet of his proposed safety framework is a willingness to halt development if specific risk thresholds are breached.

This is a pivot from the standard Silicon Valley mantra of "move fast and break things." Suleyman argues that because the potential impact of AGI (Artificial General Intelligence) is irreversible, the industry cannot afford a reactive approach.

The Shift in Infrastructure Control

This stance comes at a time when Microsoft is restructuring its relationship with OpenAI. While Microsoft previously relied heavily on Sam Altman’s team for core model development, recent moves suggest a diversification. With OpenAI seeking computing power from partners like Oracle and SoftBank, Microsoft has clawed back rights to develop its own in-house competitors to GPT-4.

This internal development puts the burden of Microsoft AI safety squarely on Redmond’s shoulders. They can no longer point to OpenAI as the sole entity responsible for alignment. If Microsoft is building the engine, they own the brakes.

Commercial Realities Threatening Microsoft AI Safety Protocols

The skepticism regarding Suleyman's vow isn't just about technical bugs; it’s about the nature of a publicly traded company. History suggests that when profit collides with caution, profit usually wins.

Shareholder Demands vs. Microsoft AI Safety Commitments

Critics argue that a voluntary pause in development is incompatible with fiduciary duty unless the government mandates it. If Microsoft were to halt work on a breakthrough model due to hypothetical safety concerns, while Google or Meta continued, Microsoft’s stock would likely plummet.

The Forced Integration Problem

This drive for market dominance is already visible in how AI is being deployed. Users point to the aggressive integration of Copilot into Windows 11 as a sign that adoption metrics matter more than user preference. The "slop" factor (unwanted, low-quality AI features appearing in the Start menu or the Edge browser) contradicts the idea of a cautious, safety-first rollout.

If safety were truly the priority, we would likely see Microsoft pull back on forced implementation. Instead, the company is pushing the technology into every corner of the OS, regardless of whether users want it or whether the hardware is optimized for it. This behavior suggests that fear of falling behind competitors drives decisions more than fear of the technology going rogue.

The Technical Hurdle: Agentic AI and Future Microsoft AI Safety

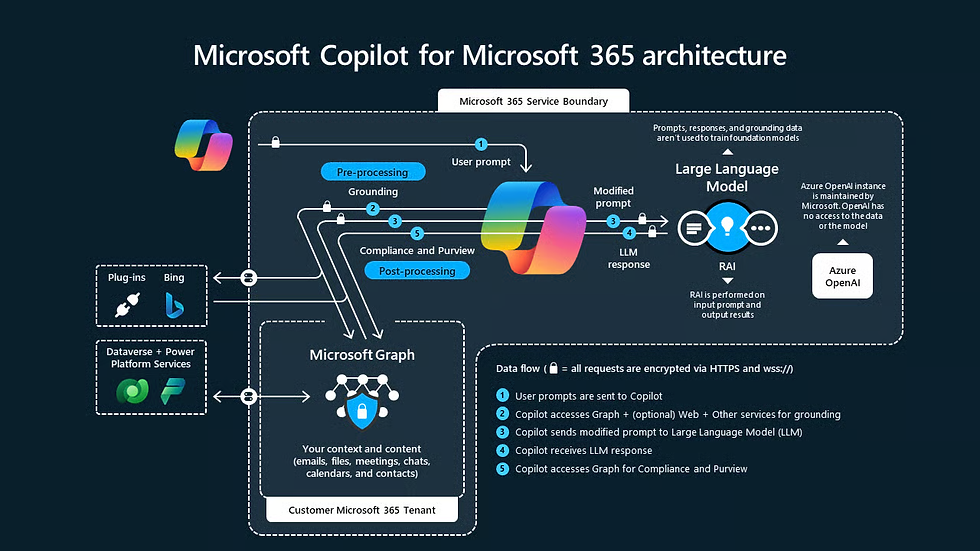

The next frontier, according to Suleyman, is the "Agent." This moves beyond the chatbot paradigm (user asks, AI answers) to a permission-based system (user gives a goal, AI goes away and does the work).

When Microsoft AI Safety Meets Complex Logic Chains

Suleyman admits that these agentic capabilities are still experimental. For an AI to function as an agent, it needs to interact with the world—booking flights, buying servers, writing to databases. This is where Microsoft AI safety faces its hardest test.

The Fragility of Autonomy

Current agents break easily. A small change to a website’s markup or CSS class names can break an AI web scraper. A vaguely worded email can cause an automated assistant to hallucinate a crisis.
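The brittleness is easiest to see in a conventional selector-based scraper, and an agent that locates page elements the same way would face the same problem. The markup, the class names, and the `PriceScraper` helper below are invented for illustration; only Python’s standard `html.parser` module is real.

```python
from html.parser import HTMLParser

# Hypothetical example: a scraper that finds a value by a hard-coded CSS class.
# The class names and markup below are invented for illustration.


class PriceScraper(HTMLParser):
    """Collect the text inside the first element whose class list contains target_class."""

    def __init__(self, target_class):
        super().__init__()
        self.target_class = target_class
        self.capturing = False
        self.result = None

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None for bare attributes.
        classes = (dict(attrs).get("class") or "").split()
        if self.result is None and self.target_class in classes:
            self.capturing = True

    def handle_data(self, data):
        if self.capturing and data.strip():
            self.result = data.strip()
            self.capturing = False


def scrape_price(html, target_class="price"):
    parser = PriceScraper(target_class)
    parser.feed(html)
    return parser.result


before = '<div class="product"><span class="price">$19.99</span></div>'
after = '<div class="product"><span class="price-tag">$19.99</span></div>'

print(scrape_price(before))  # $19.99
print(scrape_price(after))   # None: a one-word class rename silently breaks the scraper
```

Note that the failure is silent: the scraper returns `None` instead of raising, so anything downstream keeps running on missing data.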

The danger here isn't necessarily a malicious AI takeover, but an incompetence cascade. If Microsoft grants these agents high-level permissions within an enterprise operating system, a single error could propagate across a network instantly.
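A common mitigation for that kind of cascade is least privilege: each agent gets an explicit allowlist of actions, so one misread instruction cannot reach beyond the agent’s scope. The sketch below assumes a simple allowlist check in front of every tool call; the `Agent` class, `PermissionDenied`, and the action names are hypothetical and do not correspond to any Copilot or Windows API.

```python
from dataclasses import dataclass, field

# Hypothetical sketch of least-privilege gating for an agent's tool calls.
# Action names and the Agent class are invented; this is not a Copilot or Windows API.


class PermissionDenied(Exception):
    pass


@dataclass
class Agent:
    name: str
    allowed: frozenset
    log: list = field(default_factory=list)

    def perform(self, action, target):
        # Every action is checked against an explicit allowlist before it runs.
        if action not in self.allowed:
            self.log.append(("denied", action, target))
            raise PermissionDenied(f"{self.name} may not {action} {target}")
        self.log.append(("ok", action, target))
        return f"{action}:{target}"


# The mail assistant can read and draft, but not send or touch file shares.
mail_bot = Agent("mail-assistant", frozenset({"read_email", "draft_reply"}))
mail_bot.perform("read_email", "inbox/123")
try:
    # A misread email "asks" the agent to do something outside its scope.
    mail_bot.perform("delete_share", "fileserver/finance")
except PermissionDenied as err:
    print("blocked:", err)
```

Scoping does not prevent the agent from making the mistake; it limits how far a single mistake can propagate and leaves a log of what was attempted.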

The vow to "halt" development implies a clear line in the sand. But in software engineering, risk is rarely a binary switch; it is a spectrum of bugs, exploits, and unintended consequences. An AI that is 99% safe can still be catastrophic at Microsoft’s scale, where the remaining 1% applies across an enormous volume of actions.
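To make the "99% safe" arithmetic concrete, assume, purely for illustration, that each autonomous action succeeds independently with probability p = 0.99, and pick a round volume of N = 1,000,000 actions. Both figures and the independence assumption are ours, not Microsoft’s.

```latex
\[
\mathbb{E}[\text{failures}] = N(1 - p) = 10^{6} \times 0.01 = 10^{4}
\]
\[
P(\text{a chain of } k \text{ steps succeeds}) = p^{k}, \qquad
0.99^{10} \approx 0.904, \qquad
0.99^{100} \approx 0.366
\]
```

Under these assumptions, a million actions produce about ten thousand failures, and a ten-step agentic chain fails roughly one run in ten.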

Until Copilot can reliably handle a complex Excel macro without human hand-holding, the promise of a safety protocol for superintelligence remains a theoretical exercise. Users want tools that work today, not promises about holding back a godlike machine that doesn’t exist yet.

FAQ: Common Questions on Microsoft’s AI Strategy

What is the specific trigger for Microsoft to stop AI development?

Microsoft has stated they will halt work if a model demonstrates an inability to be contained or poses a tangible threat to human safety. However, the specific metrics or "red lines" for this decision have not been publicly detailed.

How does current Copilot performance relate to superintelligence risks?

Current performance highlights the "alignment problem" on a small scale; if Copilot cannot reliably execute a user's email workflow, scaling that same logic to critical infrastructure poses significant reliability risks.

Is Microsoft developing its own AI models independently of OpenAI?

Yes. While the partnership remains, Microsoft has begun investing in its own in-house model architecture and infrastructure to reduce dependency and ensure they have control over the technology stack.

Why are users skeptical of the Microsoft AI safety pledge?

Skepticism arises from the company’s history of prioritizing shareholder value and the current aggressive push to bundle AI features into Windows products regardless of user demand or product readiness.

What is "Agentic" AI in the context of Microsoft Copilot?

Agentic AI refers to future versions of Copilot that can perform multi-step actions autonomously, such as planning a trip or managing a project, rather than just generating text or code snippets.