Nvidia CEO Jensen Huang: Redefining AI Scaling and the Nuclear Future

- Olivia Johnson

- Dec 7, 2025

- 6 min read

The recent Joe Rogan Experience (JRE) interview with Nvidia CEO Jensen Huang did more than just generate viral clips about nuclear energy. It laid out a technical and economic roadmap for the next decade of computing. While the general public fixated on the energy soundbites, the deeper signal for the industry was Huang’s explicit redefinition of AI scaling.

We are no longer relying on a single method to make models smarter. The era of simply feeding more text into a larger model is ending. In its place, Nvidia is optimizing for a future defined by inference-time compute, synthetic data loops, and entirely new energy infrastructures.

Beyond Pre-Training: How Nvidia Is Pushing AI Scaling

For the last few years, the primary driver of progress was pre-training scaling. If you wanted a smarter model, you bought more H100s, scraped more of the internet, and trained a larger parameter set. This was the GPT-4 formula.

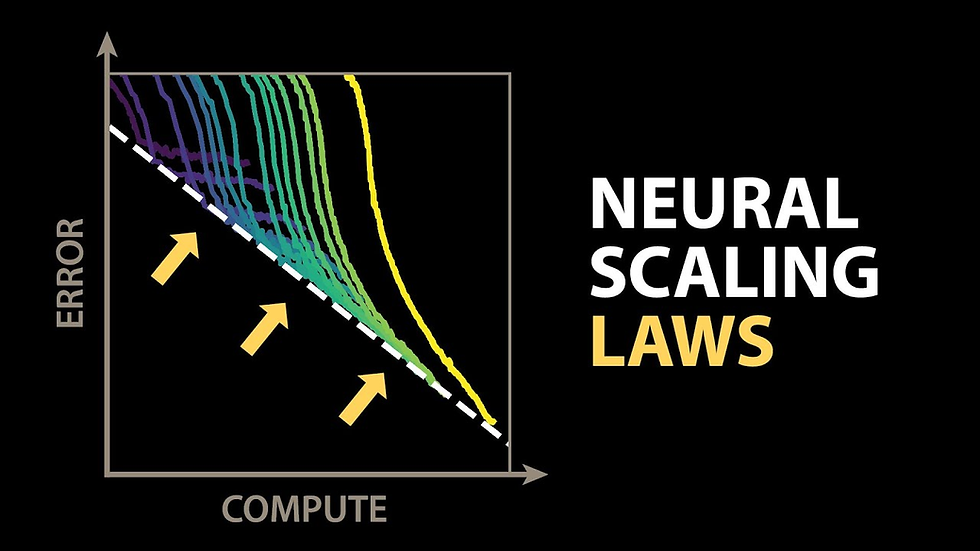

Huang suggests this single-track approach is obsolete. He outlined three distinct scaling laws that are now running in parallel.

The Evolution of Scaling

The first two are familiar. Pre-training scaling involves massive data ingestion. Post-training scaling—the ChatGPT era—introduced Reinforcement Learning from Human Feedback (RLHF), teaching the model to align with human intent.

The third law is the game-changer: Inference-Time Scaling.

This is the frontier that models like OpenAI’s o1 (Strawberry) are exploring. Instead of a model immediately spitting out the first likely token it predicts, the system pauses. It generates a "tree of possibilities," simulates various outcomes, critiques its own logic, and selects the best path before answering.

Nvidia is re-architecting its hardware to support this "thinking time." The computational load shifts from the massive burst of energy required to train a model once, to the sustained, intense compute required every time the model answers a complex question.

AI Scaling Through Test-Time Compute

Inference-time scaling changes the economics of AI. It implies that intelligence isn't just a static property of a trained model; it is a function of how much time and compute you allow the model to burn while solving a problem.

For Nvidia, this is arguably more lucrative than training. Training happens once per model version. Inference happens billions of times a day. If the industry adopts "thinking models," the demand for efficient, high-performance inference chips will outstrip the demand for training clusters. Huang confirmed that Nvidia chips are being optimized specifically to handle this branching, multi-step logic rapidly.

Powering Nvidia: Why Nuclear is the Only Way Forward

Scaling intelligence requires power. The most viral moment of the JRE interview was Huang’s commentary on Small Modular Reactors (SMRs).

Current data centers are reaching the gigawatt scale. Placing a gigawatt load on a standard public utility grid is logistically impossible in many regions; it creates volatility that can crash local infrastructure. Huang argues that the only viable solution for future "AI factories" is to go off-grid or use dedicated power generation.

Solving the AI Scaling Energy Crisis with SMRs

Huang predicts that within 6 to 7 years, we will see data centers powered by proprietary SMRs. These reactors, capable of generating hundreds of megawatts, offer consistent, carbon-free baseload power that wind and solar cannot guarantee for 24/7 compute clusters.

This isn't just about total power consumption. Huang highlighted a crucial efficiency metric that often gets lost in climate debates: the energy cost per token.

While total energy usage is climbing because demand is insatiable, the energy required to generate a single token of intelligence has plummeted by 100,000x over the last decade. This is Moore’s Law applied to energy. If AI scaling stopped today, running current models would be virtually free in terms of power. The energy crisis exists only because the industry refuses to settle for current intelligence levels, constantly pushing the frontier of what models can do.

Synthetic Data and the Future of Nvidia

A common criticism of generative AI is that it will eventually run out of human training data. Critics point to "model collapse"—the idea that AI trained on AI-generated content will degrade into nonsense.

Huang rejects this premise. He predicts that within 2-3 years, 90% of the world's knowledge will be generated by AI. However, he distinguishes between "fake data" and what he calls "distilled intelligence."

From Internet Scraping to AI Scaling Through Distillation

The future of data isn't scraping Reddit or Wikipedia; it's simulation. Huang describes a loop where AI reads existing scientific principles, simulates outcomes (creating new data), and then learns from those simulations.

Consider a physics engine. An AI can simulate a billion material interactions based on the laws of physics. The results of those simulations are not "hallucinations"; they are mathematically valid data points that never existed before. By training on this synthetic data, the model effectively "distills" intelligence from the rules of the universe rather than just the patterns of human language.

This allows Nvidia and its partners to bypass the bottleneck of finite human text. AI scaling continues because the models begin to generate their own curriculum based on verified ground truths.

Nvidia and the Emerging Robot Economy

The conversation naturally drifted toward physical AI. Huang pushed back against the labor displacement narrative, suggesting we are entering a Robot Economy.

The argument is that robots will not just replace human slots in existing hierarchies but will create entirely new industries. He cited "Robot Apparel" as a semi-serious example—customizing autonomous agents—but the broader point stands: the supply chain for robotics will be vast.

AI Scaling Leading to Universal High Income

Referencing concepts often discussed by Elon Musk, Huang touched on the transition from scarcity to abundance. If AI scaling and robotics drive the cost of labor toward zero, the cost of goods and services should theoretically collapse.

In this scenario, the conversation shifts from Universal Basic Income (a survival stipend) to Universal High Income (access to high-quality resources). If productivity creates massive surplus, the economic problem becomes distribution rather than production. For Nvidia, this validates their push into robotics simulation (Isaac Sim) and edge computing (Jetson). They are building the nervous system for the physical workforce.

The Psychology of the Nvidia Engine

For founders and investors, the most revealing part of the interview wasn't technical—it was psychological. Despite Nvidia being a $3 trillion company, Huang admitted he wakes up every morning with a "suffering" gene, feeling that the company is 30 days away from going out of business.

This paranoia is feature, not a bug. Huang attributes Nvidia's resilience to this perpetual fear of failure rather than blind ambition. He referenced the near-bankruptcy during the Sega era in the 90s as the foundational trauma that keeps the company sharp.

In an industry prone to hype, this mindset explains why Nvidia rarely rests on its lead. They treat every platform shift—from PC graphics to crypto mining to AI scaling—as an existential threat that must be dominated.

The Illusion of Walls

The overarching theme of Huang's breakdown is that the perceived barriers to AI progress—energy limits, data shortages, and compute costs—are illusions. Nvidia views these as engineering problems with defined solutions.

AI scaling is not hitting a wall; it is changing lanes. We are moving from a brute-force era of pre-training into a nuanced era of inference reasoning, powered by nuclear energy and fed by synthetic data. The "thinking" chips are already being designed. The only variable left is how fast the physical world can keep up with the digital one.

FAQ

What is inference-time scaling and why does it matter?

Inference-time scaling refers to increasing the computational resources used during the generation of an answer, rather than just during training. It allows models to "think," simulate multiple paths, and error-check before responding, leading to significantly higher accuracy in complex reasoning tasks without needing a larger base model.

Will Nvidia rely on the public power grid for future AI factories?

Likely not for the largest facilities. Jensen Huang predicts that within the next decade, major data centers will require gigawatts of power, necessitating off-grid solutions like Small Modular Reactors (SMRs) to avoid crashing public utility infrastructure.

Does using synthetic data cause AI model collapse?

It depends on the method. Blindly training on low-quality AI output causes collapse. However, Nvidia advocates for "distilled intelligence," where AI generates data via reinforcement learning or physics simulations (grounded in verifyable rules), which actually improves model quality.

How does the robot economy differ from automation?

Automation typically refers to replacing specific tasks. The robot economy implies a systemic shift where physical AI agents perform broad labor categories, driving the cost of production near zero. Huang suggests this leads to resource abundance (Universal High Income) rather than just job loss.

Why does Jensen Huang believe Nvidia is always "30 days from going out of business"?

This mindset stems from Nvidia's early struggles and near-bankruptcy in the 1990s. Huang believes that maintaining a "paranoia of failure" prevents complacency and ensures the company adapts faster than competitors, regardless of its current market cap.