The AI Scaling Problem: Why We're Chasing the Wrong Kind of Artificial Intelligence

- Aisha Washington

- Oct 16, 2025

Introduction

There's a pervasive feeling that artificial intelligence is advancing too fast for anyone to keep up. Every week, it seems, a new headline declares that an AI has surpassed another human benchmark: acing the SAT, solving PhD-level physics problems, or mastering complex programming challenges. Tech leaders and social media influencers amplify this narrative, suggesting that Artificial General Intelligence (AGI) is not just on the horizon but imminent. This constant barrage of breakthroughs can make anyone pause and wonder whether highly skilled human jobs are on the verge of obsolescence.

However, this popular vision of AGI, one sold to us by big tech and media hype, is fundamentally misleading. While today's AI models are incredibly powerful, they are not on a direct path to replicating human intelligence. The reason lies in a deep-seated misunderstanding of what intelligence truly is. We've become obsessed with measuring an AI's ability to apply knowledge, but we have almost entirely ignored its ability to acquire it. This gap is the essence of the AI scaling problem, and it reveals that in at least one crucial way, we are not even headed in the right direction.

What Exactly Is the AI Scaling Problem? — The Illusion of Progress

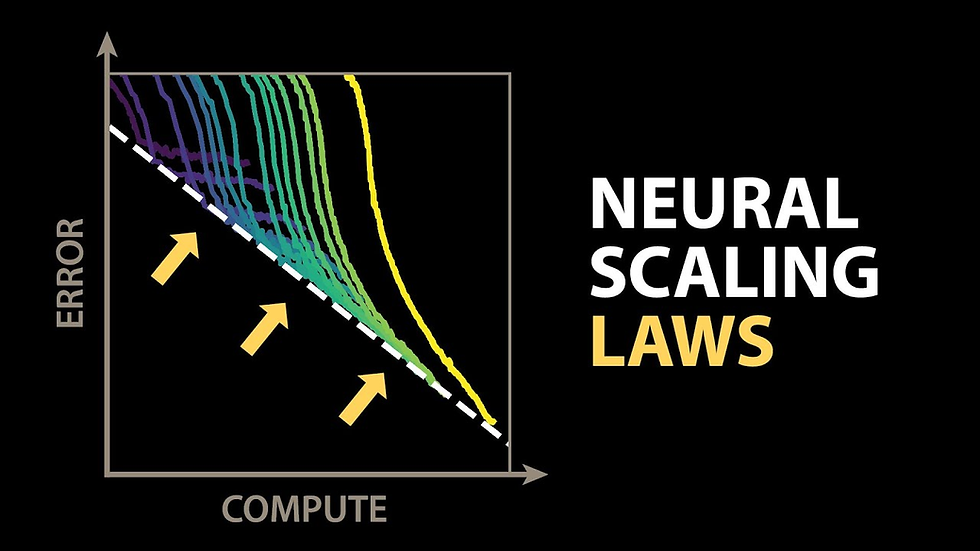

The AI scaling problem isn't about the size of models or the amount of compute they use; it's about a fundamental flaw in the current AI development paradigm. The prevailing belief is that to get smarter AI, we just need to build bigger neural networks and train them on more data. This "big data" mindset has been incredibly effective up to a point, but it's hitting a wall. The real problem is that our current methods scale with a combination of compute and data, and they are grossly inefficient in how they use that data.

At its core, the AI scaling problem is the challenge of designing algorithms that become more intelligent and capable primarily by using compute more effectively. Instead of just memorizing more of the internet, a truly intelligent agent should be able to take its experiences and, with more thinking time (compute), extract deeper insights, form new connections, and learn more from what it has already seen.

The illusion of progress comes from benchmarks that test for task completion, not learning ability. An AI can ace a test by having seen countless similar problems in its training data, but this doesn't mean it understands the underlying concepts in a flexible, generalizable way. True intelligence is defined by the ability to acquire and apply knowledge and skills. Current models are masters of application, but they are severely limited in their ability to acquire new knowledge after their initial, incredibly expensive training process is complete.

Why the AI Scaling Problem Is a Critical Barrier to AGI

Ignoring the AI scaling problem means we are building increasingly sophisticated but ultimately brittle systems. These models are like encyclopedias with a phenomenal search function—they contain a vast amount of information but lack the capacity to create genuinely new knowledge or adapt to scenarios far outside their training data. This leads to several critical limitations that prevent us from achieving AGI.

First, current AI struggles with personalization and niche tasks. Ask an LLM for creative ideas, and it will often produce generic, bland suggestions because it can't learn your unique style or context. Similarly, if you ask it to work with a less-popular programming library, it will likely fail because it hasn't seen the millions of examples it needs to "learn" that skill. An agent that needs millions of examples to grasp a new concept is not a general learner: it can only learn things for which massive datasets already exist.

Second, the current approach lacks the foundation for long-term reasoning and creativity. Human creativity is not born from a random sampling of existing knowledge; it emerges from a unique, continuous lifetime of experiences that shape our perspective. Without the ability to learn from a continuous stream of interaction, an AI can never develop this kind of nuanced, context-aware intelligence. It will remain a tool for remixing existing information, not an engine for generating truly novel insights.

The Evolution of Learning in AI: A Forgotten Path

Interestingly, the obsession with massive, static datasets is a relatively recent phenomenon in the history of AI. Early AI research was far more focused on creating systems that mimicked animal-like, continual learning. Thinkers as far back as the 1930s, like Thomas Ross with his "thinking machine", and later pioneers like Steven Smith with a robotic rat that could learn to navigate mazes, understood that intelligence was fundamentally about adaptation and learning from interaction with the world.

These early systems were designed with the understanding that learning is not a one-time event but a continuous process. An intelligent agent should exist in a single phase where it is simultaneously doing and learning. The modern pipeline, which strictly separates phases of training, fine-tuning, and deployment (after which the model's weights are often frozen forever), is a departure from this more organic and arguably more correct view of intelligence. By prioritizing static knowledge over dynamic learning, we may have veered off the most promising path toward AGI.
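To make the contrast concrete, here is a minimal Python sketch. It is an illustration only, not a reconstruction of any of the early systems. It assumes a toy agent that tracks a single number with a running estimate; the function name `deployment_error`, the learning rate, and the stream values are all hypothetical. One copy of the agent freezes after a short training phase, the other keeps learning while it acts, and the world changes partway through.

```python
def deployment_error(stream, train_steps, keep_learning, lr=0.2):
    """Mean squared prediction error after the training phase ends.

    The agent predicts each observation before seeing it. If keep_learning
    is False, its estimate is frozen once train_steps observations have
    been processed (a stand-in for frozen weights after deployment).
    """
    estimate = 0.0
    squared_errors = []
    for t, observation in enumerate(stream):
        error = observation - estimate        # doing: predict, then compare
        if t >= train_steps:
            squared_errors.append(error ** 2)  # score only the deployed phase
        if t < train_steps or keep_learning:
            estimate += lr * error             # learning: nudge the estimate
    return sum(squared_errors) / len(squared_errors)


# Hypothetical world: the quantity being tracked shifts from 1.0 to 5.0.
stream = [1.0] * 100 + [5.0] * 100

frozen = deployment_error(stream, train_steps=50, keep_learning=False)
online = deployment_error(stream, train_steps=50, keep_learning=True)

print(f"frozen after training: {frozen:.3f}")
print(f"always learning:       {online:.3f}")
```

In this toy setup the frozen agent keeps predicting the old value after the shift, while the always-learning agent closes most of the gap within a couple of dozen steps. Real models are far more complex, but the structural difference between the two pipelines is the same.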

The Three Pillars to Overcoming the AI Scaling Problem

To get back on track toward a more meaningful form of AGI—one defined as a general-purpose learning algorithm—we must shift our focus to three critical areas of research that are often overlooked in popular discourse.

Embracing True Continual Learning

The first and most crucial pillar is continual learning: the idea that an AI agent should never stop learning. It must be able to integrate new information, update its understanding of the world, and acquire new skills throughout its existence.

Some might argue that current models already do this through fine-tuning or in-context learning. Neither is sufficient. Fine-tuning, which continues training a model on a new task, often leads to catastrophic forgetting, where the model loses what it previously knew. Repeated fine-tuning can also cause a loss of plasticity, diminishing the model's ability to learn over time. In-context learning, where a model is given new information in its prompt, is only a temporary fix: the model's core parameters remain frozen, so the knowledge lasts for that interaction alone and vanishes as soon as the information leaves the context window.
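Catastrophic forgetting can be seen even in a deliberately tiny model. The Python sketch below is a toy under stated assumptions, not a faithful picture of how large networks forget: it uses a hypothetical one-weight model `y = w * x`, two made-up tasks (`task_a` and `task_b`) that want opposite weights, and plain stochastic gradient descent. The model is trained on task A, then fine-tuned on task B with no access to task A's data.

```python
def sgd_fit(w, data, lr=0.1, epochs=50):
    """Plain SGD on squared error for the one-weight model y = w * x."""
    for _ in range(epochs):
        for x, y in data:
            gradient = 2 * (w * x - y) * x
            w -= lr * gradient
    return w


def mse(w, data):
    """Mean squared error of the model y = w * x on a dataset."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


inputs = [0.5, 1.0, 1.5]
task_a = [(x, 2.0 * x) for x in inputs]    # hypothetical task A: y = 2x
task_b = [(x, -3.0 * x) for x in inputs]   # hypothetical task B: y = -3x

w = sgd_fit(0.0, task_a)                   # initial training on task A
loss_a_before = mse(w, task_a)

w = sgd_fit(w, task_b)                     # fine-tune on task B only
loss_a_after = mse(w, task_a)
loss_b_after = mse(w, task_b)

print(f"task A error before fine-tuning: {loss_a_before:.6f}")
print(f"task A error after fine-tuning:  {loss_a_after:.6f}")
print(f"task B error after fine-tuning:  {loss_b_after:.6f}")
```

With a single shared weight the overwrite is total, which exaggerates the effect. In a large network the damage is usually partial, but the mechanism is the same: gradient updates for the new task move parameters the old task depended on.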

Learning from a Single, Experiential Stream

The second pillar requires a radical departure from current training methodologies. Instead of learning from billions of disjointed segments of text sampled randomly from the internet, an agent must learn from a single, continuous stream of experiential data, just as humans do.

Our lives are not a batch of random data points; they are a temporally correlated sequence of events. This continuous stream is what gives us episodic memory, an understanding of cause and effect over long time horizons, and a unique perspective that fuels our creativity. The author of the source transcript noted that their video idea came from a unique sequence of personal experiences in academia and startups—a history that a generic LLM could never replicate. For an AI to develop true reasoning and originality, it must be able to generate and learn from its own lifelong sequence of interactions with the world.
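A small numerical illustration of what random sampling throws away: the Python sketch below assumes a made-up stream (a sine wave standing in for a temporally correlated experience) and a hypothetical helper, `next_step_coefficient`, that fits the best single coefficient for predicting each observation from the one before it. It then fits the same predictor after the stream has been shuffled. This is only an analogy for the argument above, not a model of how LLM training data is sampled.

```python
import math
import random


def next_step_coefficient(sequence):
    """Least-squares coefficient a for predicting sequence[t + 1] ~= a * sequence[t]."""
    pairs = list(zip(sequence, sequence[1:]))
    numerator = sum(current * following for current, following in pairs)
    denominator = sum(current * current for current, _ in pairs)
    return numerator / denominator


# Hypothetical experience stream: each observation depends on what came before.
stream = [math.sin(0.3 * t) for t in range(1000)]

# The same observations, sampled in random order (seeded for repeatability).
shuffled = stream[:]
random.Random(0).shuffle(shuffled)

ordered_coef = next_step_coefficient(stream)
shuffled_coef = next_step_coefficient(shuffled)

print(f"predictive coefficient, ordered stream: {ordered_coef:.3f}")
print(f"predictive coefficient, shuffled data:  {shuffled_coef:.3f}")
```

Both runs see exactly the same values. Only the ordered stream lets the learner discover that the present predicts the future; once the order is randomized, that structure is no longer there to be learned.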

Redefining Scale—More Compute, Not Just More Data

The final pillar is to develop algorithms that scale with compute alone, not a combination of compute and data. We are rapidly approaching the limits of high-quality data available on the internet; tech insiders already report running into data scarcity problems for training next-generation models. This is not a "data problem" but an "approach problem". A human can become incredibly intelligent without ever reading the entire internet, which suggests that sheer volume of data is not the answer.

The future lies in creating methods that are more data-efficient. When given more computational power, these algorithms should be able to "think harder" about the data they already have, extracting more knowledge, discovering deeper patterns, and learning more from each moment-to-moment experience. Promising research areas like model-based reinforcement learning and auxiliary tasks for representation learning are already exploring this direction, but much more work is needed.
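One very simple way to picture "thinking harder" about fixed data is experience replay: revisiting stored experience for additional learning updates. The Python sketch below is a swapped-in illustration, not one of the research directions named above, and every name and number in it (`replay_fit`, the four stored experiences, the learning rate) is hypothetical. The data never changes; only the compute budget, measured in passes over the same experiences, grows.

```python
def replay_fit(experience, passes, lr=0.05):
    """Fit y = w * x + b by replaying the same stored experience `passes` times."""
    w, b = 0.0, 0.0
    for _ in range(passes):
        for x, y in experience:
            error = w * x + b - y
            w -= lr * error * x
            b -= lr * error
    return w, b


def mse(params, experience):
    """Mean squared error of the fitted line on the stored experience."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in experience) / len(experience)


# Four stored experiences, all consistent with the hidden rule y = 3x + 1.
experience = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0), (3.0, 10.0)]

# Same data, increasing compute budget.
losses = {
    passes: mse(replay_fit(experience, passes), experience)
    for passes in (1, 10, 100)
}

for passes, loss in losses.items():
    print(f"{passes:>3} passes over the same data -> error {loss:.6f}")
```

The toy has an obvious ceiling: once the line is fitted, further passes extract nothing new. The open research question is how to build learners for which additional compute keeps yielding deeper structure from the same experience.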

The Future of AI: A Shift from All-Knowing to All-Learning

The North Star of AI research needs to shift. We must move away from the unrealistic goal of creating a single, all-knowing agent that has memorized so much data it can generalize to any task. This view fails to incorporate the fundamental importance of learning.

The future of AI that we should be striving for is an agent that can learn from anything. An agent that has its own goals and can autonomously control its data stream to teach itself. An agent that we can teach through natural, back-and-forth interaction, just as we would teach a human. This is the vision of an "all-learning" AI, a system that continuously adapts, personalizes, and grows.

Conclusion: Key Takeaways on the AI Scaling Problem

The exhilarating progress in AI is real, but our interpretation of it is flawed. We have mistaken impressive performance on static benchmarks for genuine intelligence, leading us down a path that may never reach AGI. The AI scaling problem highlights this critical misstep: true intelligence is not about what you know, but about your capacity to learn.

To build the next generation of AI, we must reorient our efforts around the three pillars of a new paradigm:

- True Continual Learning: Creating agents that learn throughout their existence without forgetting.

- A Single Experiential Stream: Enabling AI to learn from a continuous, personal flow of data, fostering creativity and long-term reasoning.

- Scaling with Compute: Designing data-efficient algorithms that use computation to extract deeper understanding from experience.

By shifting our focus from building all-knowing archives to cultivating all-learning agents, we can move beyond the current limitations and start making genuine progress toward artificial intelligence that is as dynamic, adaptive, and resourceful as our own.

Frequently Asked Questions (FAQ) about the AI Scaling Problem

1. What is the AI scaling problem in simple terms?

The AI scaling problem is the challenge that making AI models bigger and feeding them more data isn't leading to true, human-like intelligence. The core issue is that current AI is very poor at learning continuously from new experiences, instead relying on a massive, one-time training process. The goal is to create AI that can get smarter by using its processing power (compute) more efficiently, rather than just consuming more data.

2. Why can't AI like ChatGPT learn my personal preferences permanently?

ChatGPT and similar models can't learn permanently because their core parameters are frozen once training is complete. They can use information you provide "in-context" during a conversation, but this knowledge is temporary: it vanishes when it leaves the context window or the conversation ends. The models are not designed for continuous, permanent updates from individual user interactions, a key limitation addressed by the concept of continual learning.

3. How is "continual learning" different from just retraining or fine-tuning an AI model?

Retraining a model from scratch is incredibly expensive and impractical. Fine-tuning is a partial update, but it often causes the model to forget its previous knowledge, a problem called "catastrophic forgetting". Continual learning, in contrast, is the ability to seamlessly and efficiently integrate new information and skills over time without degrading existing knowledge, much as a human does.

4. How can researchers start working on solving the AI scaling problem?

Researchers can focus on the three key pillars outlined in this article. This includes developing new algorithms for continual learning that overcome catastrophic forgetting; designing models that can learn effectively from a single, continuous stream of data instead of massive, random batches; and creating data-efficient methods where performance scales with available compute, allowing models to learn more from less data.

5. If we solve the AI scaling problem, what would an "all-learning" AI look like?

An "all-learning" AI would be far more collaborative and personalized than today's models. It could learn a user's specific work style, creative preferences, or a niche technical skill from a few interactions. It would be able to reason about long-term projects, generate truly novel ideas based on its unique experiences, and adapt to new information in the world without needing to be retrained by its developers. It would be less of a static encyclopedia and more of a dynamic, evolving intellectual partner.