Dario Amodei on Scaling Laws and AGI: The Bet on a "Country of Geniuses"

- Ethan Carter

- Dec 7, 2025

There is a specific phrase Dario Amodei uses that tends to stop people in their tracks. He describes the immediate future of artificial intelligence not as a better chatbot or a faster search engine, but as a "Country of Geniuses in a Data Center."

It is a striking image. It suggests that the path to AGI (Artificial General Intelligence) isn’t paved with some undiscovered magical algorithm, but rather with brute-force engineering and mathematics. According to Amodei, the CEO of Anthropic, the mechanism is simple: Scaling Laws.

The premise is that if you feed a model enough data and throw enough compute at it, intelligence emerges as a natural byproduct. This view has turned the industry into a high-stakes arms race. Yet, outside the San Francisco research labs, in the comment threads where real engineers discuss their daily workflows, the reception is mixed.

While Amodei predicts a near-future where Scaling Laws solve biology and software engineering, users on platforms like Reddit are grappling with a messier reality—one where AI helps, but still hallucinates, stumbles, and fails to understand the broader context of a codebase.

This disconnect between the theoretical trajectory of AGI and the practical implementation of today’s models is the defining tension of the current AI moment.

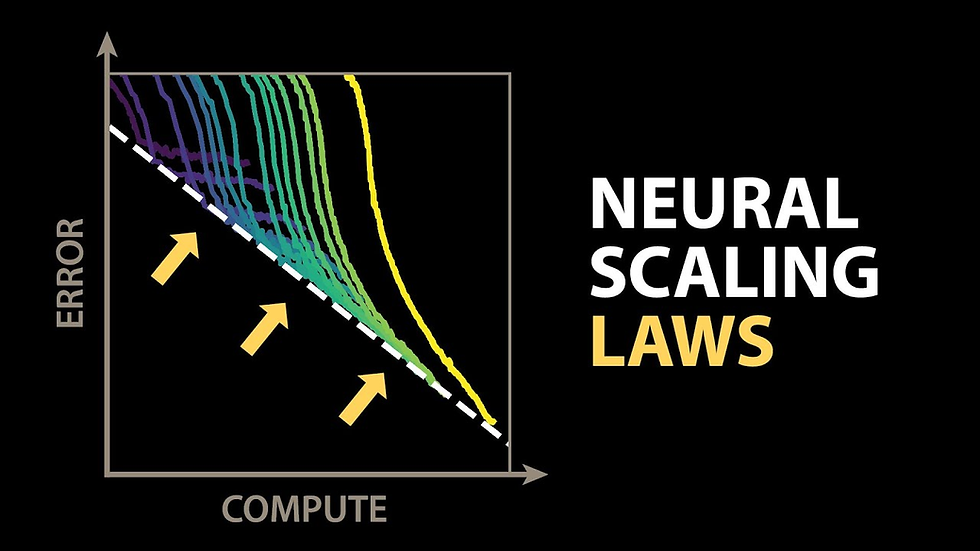

The Physics of Intelligence: Why Scaling Laws Are the New Gospel

To understand why Anthropic is betting the company—and billions of dollars—on this concept, you have to look at Scaling Laws as a form of physics for computer science.

For a long time, the assumption was that AI progress would plateau. You would reach a point of diminishing returns where adding more GPUs or more text data wouldn't yield a smarter model, just a more expensive one. Amodei’s argument, supported by the last five years of LLM development, is that we haven't hit that ceiling yet. In fact, we might not hit it for a long time.
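To make the "physics" framing concrete, here is a minimal sketch of the power-law shape that scaling laws are usually described with: predicted loss falls smoothly as training compute grows. The function name and every constant (`a`, `b`, `floor`) are hypothetical values chosen for illustration, not fitted numbers from Anthropic or any published study.

```python
def predicted_loss(compute_flops, a=10.0, b=0.05, floor=1.5):
    """Toy power-law curve: loss = floor + a * C^(-b).

    All constants are made up for illustration; real scaling-law
    fits use values estimated from actual training runs.
    """
    return floor + a * compute_flops ** (-b)


# Each row is a 10x jump in compute. The loss keeps falling,
# but every jump buys a smaller absolute improvement.
for exponent in range(21, 27):
    compute = 10.0 ** exponent
    print(f"1e{exponent} FLOPs -> predicted loss {predicted_loss(compute):.3f}")
```

Under these assumptions the curve never flattens completely, which is the optimists' point, but each tenfold increase in compute removes only a fixed fraction of the remaining reducible loss, which is the skeptics' point.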

The Transformer Architecture is the engine behind this. Unlike earlier recurrent architectures, which had to read text one token at a time, Transformers process an entire sequence at once, which allows for massive parallelization during training. Proponents argue they don't just memorize; they learn patterns of reasoning. When you scale this up, you don't just get better grammar. You get "emergence."

Emergence is the moment a system exhibits behaviors it wasn't explicitly coded to perform. A model trained on internet text suddenly learns how to translate languages, write Python scripts, or solve logic puzzles.

For the believers in Scaling Laws, AGI is just the ultimate emergent property. It is what happens when the curve keeps going up and to the right. The implication is that we don't need a new invention. We just need bigger data centers.

The Problem of "Stateless" Transformers

However, technical skepticism remains. A significant portion of the engineering community points out that the Transformer Architecture is inherently stateless. At inference time its weights are frozen: each response is a fixed computation over whatever text sits in the context window. It doesn't have a "memory" in the way a biological brain changes structure based on experience.

Critiques often focus on the idea that scaling essentially builds a massive lookup table of human knowledge. It can mimic reasoning, but can it genuinely innovate? If AGI requires the ability to operate continuously and learn in real-time without a reset, current Scaling Laws might eventually hit a hard wall.
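The statelessness critique can be illustrated with a toy sketch. The "model" below is a pure function of frozen weights and the context it is handed; nothing persists between calls unless the caller resends it. `WEIGHTS`, `toy_model`, and `chat_turn` are hypothetical names for illustration and bear no resemblance to a real Transformer's internals.

```python
from typing import List, Sequence, Tuple

# Hypothetical frozen "weights": fixed after training, never updated at inference.
WEIGHTS = (0.5, -0.25, 0.125)


def toy_model(context: Sequence[float]) -> float:
    """Pure function of the frozen weights and the supplied context."""
    recent = list(context)[-len(WEIGHTS):]
    # Most recent input gets the first weight, and so on backwards.
    return sum(w * x for w, x in zip(WEIGHTS, reversed(recent)))


def chat_turn(history: List[float], new_input: float) -> Tuple[List[float], float]:
    """The caller, not the model, carries state by resending the history."""
    context = history + [new_input]
    output = toy_model(context)
    return context + [output], output


history: List[float] = []
history, first = chat_turn(history, 1.0)
history, second = chat_turn(history, 2.0)
print(first, second, history)
```

Calling `toy_model` twice with the same context always gives the same result, and `WEIGHTS` never changes. Any apparent memory lives entirely in the growing `history` list, which is the sense in which critics call the architecture stateless.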

Hitting the Wall: AGI, Data Scarcity, and Synthetic Data

If Scaling Laws are the vehicle to AGI, fuel is the immediate problem.

We are running out of internet. High-quality human text—books, scientific papers, clean code—is a finite resource. Estimates suggest that by the late 2020s, model trainers will have exhausted the public web.

This is where the concept of Synthetic Data becomes critical. If humans can't write enough high-quality data to feed the beast, the beast must feed itself. The theory is that a highly capable model (like Claude 3.5 or GPT-4) can generate textbooks, code samples, and reasoning traces that are cleaner and more logic-heavy than human data.

Using Synthetic Data to train the next generation of models creates a controversial feedback loop. Detractors warn of "model collapse," where errors get amplified over generations like a xerox of a xerox.

But Anthropic and others argue that with strict filtering, this is actually the solution. A "Country of Geniuses" doesn't just answer questions; it generates new knowledge that serves as the training ground for the next iteration. This effectively bypasses the data bottleneck, allowing Scaling Laws to continue functioning even after human data is tapped out.
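The feedback-loop worry described above can be shown with a deliberately simple toy: fit a Gaussian to some data, sample a small dataset from the fit, refit on those samples alone, and repeat. This is a sketch under strong assumptions (a one-dimensional Gaussian, tiny sample sizes, and no filtering or fresh human data), so it illustrates the failure mode rather than predicting how large language models behave. The function name and parameters are hypothetical.

```python
import random
import statistics


def simulate_collapse(generations=200, samples_per_generation=10, seed=0):
    """Repeatedly refit a Gaussian to samples drawn from the previous fit.

    Returns the fitted standard deviation at each generation.
    """
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 stands in for the "human" data
    history = [std]
    for _ in range(generations):
        # Train only on what the previous generation produced.
        data = [rng.gauss(mean, std) for _ in range(samples_per_generation)]
        mean = statistics.fmean(data)
        std = statistics.pstdev(data)
        history.append(std)
    return history


spread = simulate_collapse()
print(f"initial spread: {spread[0]:.3f}, final spread: {spread[-1]:.3e}")
```

In this toy, the spread of the data shrinks generation after generation because each refit loses a little of the tails. The counterargument in the text amounts to breaking one of the toy's assumptions: filtering outputs or mixing in verified data changes what each generation learns from.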

The Reality Check: Scaling Laws Meets AI Coding

The rubber meets the road in software engineering. This is the domain where the abstract promise of AGI collides with the daily grind of workers.

Amodei has predicted that AI will handle the vast majority of coding tasks in the near future. If you look at the discussion among developers using tools like Claude, the response is a polarized split between awe and frustration.

The Productivity Multiplier

On one side, you have senior engineers who view AI Coding tools as a force multiplier. They report distinct productivity gains—somewhere in the 2x to 5x range. For these users, the AI handles the boilerplate, the unit tests, and the initial scaffolding.

The human role shifts from writing syntax to "architectural review" and prompt engineering. In this view, Scaling Laws are working. The models are getting better at following complex instructions and managing context.

The "Hallucination" Barrier

On the other side, skepticism runs deep. Many developers report that while AI is great for scripts and isolated functions, it collapses when introduced to a massive, legacy codebase.

The "Country of Geniuses" seems to struggle with project-wide context. It introduces subtle bugs—variables that don't exist, libraries that are hallucinated, or logic that looks correct at a glance but fails under execution.

For these users, the idea that AGI is imminent feels disconnected from reality. They argue that debugging AI-generated code often takes longer than writing it from scratch. This suggests that while Scaling Laws improve text generation, they haven't yet solved the fundamental issue of reliability and logical consistency required for high-stakes engineering.

AGI Economics: Marginal Returns and the Hype Cycle

Dario Amodei is a CEO. His job is to sell a vision that justifies the billions of dollars required to build the clusters necessary to test Scaling Laws.

A recurring theme in community analysis is the fear of diminishing Marginal Returns. Training GPT-4 reportedly cost on the order of $100 million. The next leap might cost $1 billion. The one after that, $10 billion or even $100 billion.

The economic question is whether the intelligence gained is worth the exponential increase in cost. If a $100 billion model is only 10% better than a $10 billion model, the economic viability of Scaling Laws collapses long before the technical viability does.
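The arithmetic behind that worry is short enough to write out. The sketch below uses the figures from the text (a ladder starting near $100 million with tenfold steps, and a hypothetical "10% better" gain between the $10 billion and $100 billion models). What "10% better" is measured against is left unspecified in the argument, so the function simply takes it as a number.

```python
def cost_per_point(cheaper_cost, pricier_cost, improvement_pct):
    """Extra dollars spent per percentage point of improvement."""
    return (pricier_cost - cheaper_cost) / improvement_pct


# Cost ladder from the text: $100M, $1B, $10B, $100B.
ladder = [100e6 * 10 ** step for step in range(4)]

for cheaper, pricier in zip(ladder, ladder[1:]):
    # Assumes the same hypothetical 10% gain at every step.
    per_point = cost_per_point(cheaper, pricier, improvement_pct=10)
    print(f"${cheaper:,.0f} -> ${pricier:,.0f}: ${per_point:,.0f} per point")
```

If the gain per step stays flat while the bill grows tenfold, the price of each percentage point also grows tenfold, which is the sense in which the economics could fail before the technology does.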

Skeptics argue that industry leaders are incentivized to maintain the "AGI is near" narrative to keep the venture capital flowing. If the curve flattens, the valuation of these companies crashes.

However, Amodei seems to have earned a degree of trust distinct from peers like Sam Altman. His tone—often described as "sober" or "engineer-like"—lends weight to his predictions. When he talks about the cost, he frames it as a necessary entrance fee for solving problems that current economics can't touch.

Beyond the Code: AGI and Extending Human Lifespan

Why spend $100 billion on a computer program? Amodei’s answer pivots away from software and toward biology.

The most compelling long-tail argument for AGI isn't about automating customer service; it's about Extending Human Lifespan. Biology is complex, messy, and data-heavy—perfect for a system that thrives on pattern recognition at scale.

The vision is that an AGI system could simulate biological interactions, protein folding, and drug efficacy at a speed human researchers cannot match. It effectively compresses centuries of medical research into years.

This is the "Country of Geniuses" applied to cancer, aging, and chronic disease. If Scaling Laws hold, the value proposition shifts from "cheaper coding" to "curing death," and the ROI calculation changes with it. Even if the Marginal Returns on text generation diminish, a 1% improvement in biological modeling could be worth trillions in economic value and human welfare.

The Outlook: Inference, Thinking Time, and the Future

We are entering a phase where training scaling—making the model bigger—is being supplemented by inference scaling. This is the idea of "thinking time."

Instead of just blurting out an answer, newer systems are designed to "think" before they speak, exploring multiple reasoning paths and self-correcting. This aligns with the community's desire for reliability over raw speed.

If Scaling Laws apply to this inference phase, we might see models that don't just know more facts, but ponder them more deeply.
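One simple way to picture "thinking time" is majority voting over several independent reasoning attempts, sometimes called self-consistency. The sketch below swaps in that technique as an illustration; it is not a description of how any particular lab's system works. The solver is a stand-in that returns the right answer 60% of the time, and all names and numbers are hypothetical.

```python
import random
from collections import Counter

CORRECT_ANSWER = 42


def noisy_solver(rng, p_correct=0.6):
    """Stand-in for one sampled reasoning path: right 60% of the time."""
    if rng.random() < p_correct:
        return CORRECT_ANSWER
    return rng.choice([41, 43, 44, 45])  # scattered wrong answers


def answer_with_thinking(rng, n_paths):
    """Sample several paths and return the most common final answer."""
    votes = Counter(noisy_solver(rng) for _ in range(n_paths))
    return votes.most_common(1)[0][0]


def accuracy(n_paths, trials=2000, seed=0):
    rng = random.Random(seed)
    hits = sum(answer_with_thinking(rng, n_paths) == CORRECT_ANSWER
               for _ in range(trials))
    return hits / trials


for n in (1, 5, 15):
    print(f"{n:>2} reasoning paths -> accuracy {accuracy(n):.2f}")
```

Because the wrong answers disagree with each other while the right answer repeats, spending more inference compute on extra paths raises accuracy without touching the model's weights. The gain depends on errors being scattered; a model that is consistently wrong in the same way would not benefit.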

The tension remains. On one hand, you have the grand trajectory of AGI promising to rewrite the rules of economy and biology. On the other, you have the gritty reality of engineers fixing hallucinated code and wondering if their jobs will exist in five years.

Dario Amodei’s bet is clear: scale is all you need. The rest of the world is waiting to see if the "geniuses" in the data center can actually keep the lights on.

FAQ

1. What is the main argument behind Dario Amodei's view on Scaling Laws?

Amodei argues that simply increasing the amount of computing power, data, and model parameters will inherently lead to Artificial General Intelligence (AGI). He believes we have not yet reached the point of diminishing returns, and that the same formulas driving current progress will continue to unlock advanced capabilities like reasoning and biological research.

2. How does the "Data Wall" threaten the progress of AGI?

The "Data Wall" refers to the impending exhaustion of high-quality, human-generated text available on the internet for training AI. If models run out of new human data to learn from, progress could stall unless developers successfully utilize synthetic data—content generated by the AI itself—to train future generations of models.

3. What is the controversy surrounding AI Coding and the "Country of Geniuses" claim?

While Amodei envisions a "Country of Geniuses" that automates complex tasks, many developers find that current models still struggle with large, complex codebases. The controversy lies in the gap between the hype of fully automated software engineering and the reality where AI frequently "hallucinates" or introduces subtle bugs that require human intervention.

4. Why is Extending Human Lifespan considered a major goal of AGI scaling?

Proponents like Amodei believe that the high costs of training AGI are justified by its potential to decode complex biological systems. By applying massive compute to biology, AGI could accelerate drug discovery and the search for cures, potentially extending human lifespan far faster than human researchers could working alone.

5. Are Scaling Laws the only path to better AI, or are there other methods?

While Amodei focuses on training scaling (making models bigger), the industry is also exploring "inference scaling." This involves allowing the model more "thinking time" to process a query before answering, which improves reasoning and accuracy without necessarily increasing the size of the training dataset.