SingularityNET Bets Big on Supercomputer Networks for Next-Gen AI

- Ethan Carter

- Aug 19, 2025

SingularityNET has announced a bold new initiative to build a global supercomputer network, backed by a headline $53 million infrastructure commitment aimed at accelerating the development of artificial general intelligence (AGI). This ambitious investment signals a pivotal shift in next-gen AI research, emphasizing distributed high-performance computing as the cornerstone for achieving AGI’s complex cognitive capabilities. By harnessing powerful supercomputing nodes interconnected across geographies, SingularityNET aims to create an open, decentralized platform that supports the computational demands of advanced AI models and cognitive architectures.

An immediate milestone in this infrastructure push is the arrival of the first supercomputer node within weeks, marking the tangible beginning of a distributed network designed to scale rapidly. Alongside hardware deployments, continuous ecosystem updates are rolling out to support software integration, developer access, and community governance. This article explores SingularityNET’s technical approach centered on the OpenCog Hyperon framework, details the supercomputer network’s infrastructure and data-center implications, examines the regulatory landscape, and highlights practical use cases and risks.

In doing so, we show how the SingularityNET supercomputer initiative amounts to a major AGI infrastructure investment with the potential to redefine next-generation AI supercomputing. The project aims to reshape AI development through decentralized governance, advanced technology, and strategic partnerships.

1. Background: SingularityNET, AGI and the role of supercomputer networks

SingularityNET’s mission is to create an open, decentralized AI ecosystem that advances artificial general intelligence (AGI)—AI systems capable of understanding, learning, and applying knowledge across diverse tasks at or beyond human levels. Unlike narrow AI that excels at specific functions, AGI requires complex cognitive architectures capable of symbolic reasoning, learning, memory, and adaptive behavior.

Infrastructure investment is central to SingularityNET’s long-term AGI strategy because, in its view, achieving general intelligence demands far more computational power than isolated AI systems can provide. Its approach relies on distributed supercomputing networks that collectively supply the scale, speed, and resilience needed to train and run large cognitive systems.

OpenCog Hyperon and AGI foundations

At the heart of SingularityNET’s AGI efforts lies OpenCog Hyperon, a cognitive architecture that pursues human-like general intelligence by combining symbolic reasoning, probabilistic logic, and neural networks in one integrative framework. OpenCog Hyperon orchestrates multiple components, including attention allocation, memory, and learning modules, around a shared hypergraph knowledge store called the Atomspace. This design requires substantial distributed compute because it relies on complex graph processing, reinforcement learning cycles, and real-time reasoning over large datasets.

OpenCog Hyperon’s architecture positions it uniquely among AGI research frameworks by focusing on cognitive synergy, where diverse AI subsystems interact fluidly rather than operate in silos. This synergy demands not only raw compute but also low-latency inter-node communication and sophisticated orchestration across hardware layers.

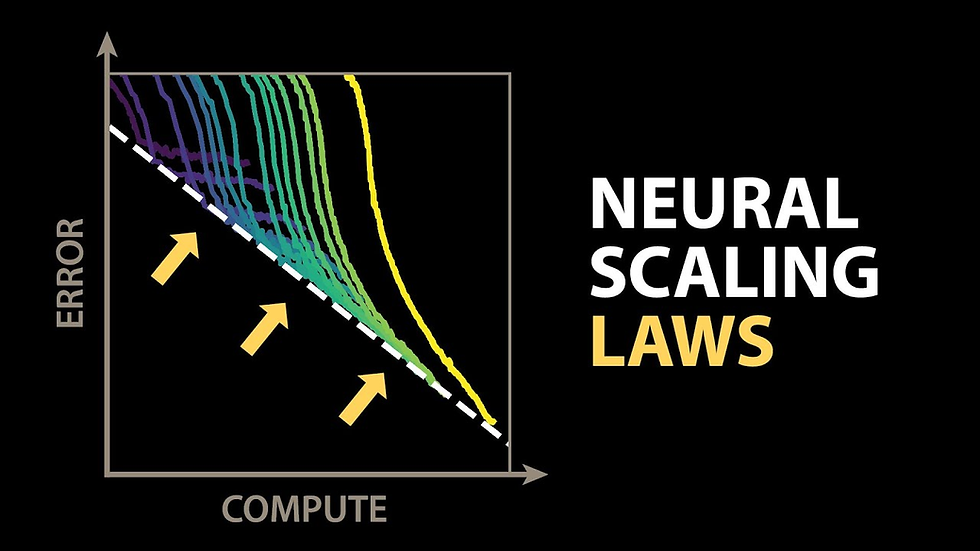

Why supercomputers matter for next-gen AI

Supercomputers have become indispensable in frontier AI research because they can run massive parallel computations. Recent studies document rapid growth in AI supercomputer performance, measured in FLOP/s (floating-point operations per second), as well as increasing geographic distribution of these resources beyond traditional centralized facilities.

Networked supercomputers offer distinct advantages over single monolithic systems: they enable scalability by linking multiple compute nodes worldwide, enhance resilience via decentralization, and foster collaboration across research communities. These factors are critical for AGI development, which involves training complex multi-modal models and simulating cognitive processes that require vast computational throughput.

The global landscape of AI supercomputers is evolving from isolated behemoths to interconnected ecosystems—a trend SingularityNET seeks to lead with its distributed architecture.

SingularityNET’s organizational context and annual priorities

SingularityNET operates under a decentralized governance model that emphasizes community participation, transparency, and shared ownership. Its 2024 annual report sets out the goal of advancing beneficial AGI through open collaboration while addressing ethical considerations and technological risk.

The $53 million infrastructure investment aligns with SingularityNET’s strategic priorities for 2024: scaling computational capacity; expanding ecosystem tools; promoting decentralized AI development; and fostering partnerships with data centers and research institutions.

This decentralized AI strategy seeks to balance robust technological innovation with responsible governance frameworks that mitigate risks while maximizing social benefit.

2. Investment and infrastructure roadmap for SingularityNET supercomputer network

SingularityNET’s announced $53 million investment is focused on establishing a high-performance distributed supercomputer network that supports its AGI development goals. This capital commitment targets building compute nodes with cutting-edge hardware, enhancing networking infrastructure for low-latency interconnectivity, developing a sophisticated software stack tailored to OpenCog Hyperon’s needs, and fostering ecosystem partnerships to accelerate adoption.

The $53M commitment: scope and funding priorities

The funding breakdown includes:

Compute Infrastructure (50%): Acquisition of GPUs and other AI accelerators, CPUs, memory modules, and storage optimized for graph processing and symbolic reasoning workloads.

Networking & Interconnects (20%): High-speed optical connections between nodes ensuring minimal inter-node latency critical for distributed cognition.

Software Stack & Orchestration (15%): Development of orchestration layers managing workload distribution, fault tolerance, security protocols, and API integration.

Ecosystem Support & Partnerships (15%): Collaborations with data center providers, academia, regulatory bodies, and developer communities.

This targeted allocation reflects a comprehensive approach to building not just hardware but an integrated platform that enables scalable AGI research.
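Applying the stated percentages to the $53 million headline figure gives the approximate dollar amount per category. The sketch below is plain arithmetic on the article’s own numbers, assuming each percentage applies to the full $53 million; the category labels are copied from the list, and the function name `allocate` is hypothetical rather than taken from any official budget document.

```python
# Dollar split implied by the percentages stated in this article.
# Assumption: each percentage applies to the full $53M headline figure.
TOTAL_USD = 53_000_000

ALLOCATION_PCT = {
    "Compute Infrastructure": 50,
    "Networking & Interconnects": 20,
    "Software Stack & Orchestration": 15,
    "Ecosystem Support & Partnerships": 15,
}


def allocate(total_usd: int, pct: dict) -> dict:
    """Split a budget by integer percentages that must sum to 100."""
    if sum(pct.values()) != 100:
        raise ValueError("percentages must sum to 100")
    # Integer arithmetic keeps whole-dollar amounts exact for this budget.
    return {name: total_usd * share // 100 for name, share in pct.items()}


if __name__ == "__main__":
    for name, usd in allocate(TOTAL_USD, ALLOCATION_PCT).items():
        print(f"{name:<34} ${usd:>12,}")
```

Run as a script, it reports $26,500,000 for compute, $10,600,000 for networking, and $7,950,000 each for software and ecosystem support, which together account for the full $53,000,000.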

Global supercomputer network roadmap and first node timeline

The plan envisages a geographically distributed network of supercomputer nodes located strategically across key data centers worldwide. The first node—equipped with state-of-the-art GPU clusters—is scheduled to go live within weeks. This initial deployment will validate performance benchmarks and set the stage for phased rollouts expanding node count over the next 18–24 months.

The roadmap includes:

Phase 1: Initial node activation with baseline compute capability.

Phase 2: Network expansion adding additional nodes in North America, Europe, and Asia.

Phase 3: Full operational integration supporting multi-region workload orchestration.

This timeline emphasizes early functional delivery combined with long-term scale-up aligned with AGI research milestones.

Infrastructure architecture and decentralization model

Each node integrates high-performance compute units (GPUs/TPUs), large memory arrays optimized for graph databases, fast NVMe storage, and ultra-low-latency networking components. Crucially, nodes operate under decentralized control protocols that spread decision-making authority among stakeholders, so that no single party becomes a central point of failure or control.

This decentralized supercomputer architecture enhances resilience by enabling nodes to operate autonomously while cooperating on shared workloads. Governance mechanisms ensure transparent decision-making across operators while maintaining security against adversarial interference.

Such distributed AGI compute resources foster a robust ecosystem where innovation can thrive without dependence on centralized hyperscalers or monopolistic actors.

For recent technical updates, see SingularityNET’s latest ecosystem updates (August 2024).

3. Technology stack: OpenCog Hyperon, compute needs and software-hardware integration

The technology stack powering SingularityNET’s supercomputer network is anchored by OpenCog Hyperon—a sophisticated software framework designed for AGI workloads requiring diverse computational patterns including graph processing, symbolic reasoning, and reinforcement learning (RL).

OpenCog Hyperon: software design and compute characteristics

OpenCog Hyperon represents knowledge as a hypergraph. Nodes denote concepts or entities, while links represent relationships; unlike edges in an ordinary graph, a link can connect any number of atoms (Hyperon’s term for both nodes and links), including other links. Processing this structure involves intricate traversals and probabilistic reasoning algorithms that demand extensive parallelism.
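To make the hypergraph idea concrete, here is a minimal sketch of a store in which links can join any number of atoms and can point at other links. It is a hypothetical toy: the names `Atom`, `ToyAtomStore`, and `links_containing` are invented for illustration and do not reflect Hyperon’s actual Atomspace API, which is normally driven through Hyperon’s MeTTa language.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Atom:
    """A node (has a name, no targets) or a link (has targets)."""
    kind: str
    name: str = ""
    targets: tuple = ()


class ToyAtomStore:
    """Hypothetical toy hypergraph: a link may join any number of atoms, including other links."""

    def __init__(self) -> None:
        self.atoms: set = set()
        # Incoming index: atom -> links that point at it, for fast traversal.
        self.incoming: dict = defaultdict(set)

    def add(self, atom: Atom) -> Atom:
        for target in atom.targets:
            self.add(target)  # store targets first
            self.incoming[target].add(atom)
        self.atoms.add(atom)
        return atom

    def links_containing(self, atom: Atom, kind: str | None = None) -> list:
        """Return links that have `atom` as a target, optionally filtered by kind."""
        return [link for link in self.incoming.get(atom, ())
                if kind is None or link.kind == kind]


if __name__ == "__main__":
    store = ToyAtomStore()
    cat, animal = Atom("Concept", "cat"), Atom("Concept", "animal")
    isa = store.add(Atom("Inheritance", targets=(cat, animal)))
    # A three-way link whose targets include another link: a statement about a statement.
    store.add(Atom("Evaluation", targets=(Atom("Predicate", "believed_by"), isa, Atom("Concept", "alice"))))
    print([link.kind for link in store.links_containing(cat)])  # ['Inheritance']
    print([link.kind for link in store.links_containing(isa)])  # ['Evaluation']
```

The incoming index is what makes traversal cheap: finding every relationship that mentions an atom is a dictionary lookup rather than a scan of the whole graph.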

Key computational workloads include:

Graph processing: Traversal algorithms that require efficient memory access patterns.

Symbolic reasoning: Logic inference engines operating over large knowledge bases.

RL training cycles: Iterative learning involving simulation environments requiring GPU acceleration.

These diverse tasks imply a need for heterogeneous hardware capable of handling both numeric-intensive deep learning operations and symbolic computation with low overhead.

Hardware and supercomputer design choices for AGI

GPUs (e.g., NVIDIA A100 or H100) optimized for matrix operations essential in RL training.

TPUs, which Google offers only through Google Cloud, used selectively for tensor-heavy workloads where Google’s accelerators excel.

CPUs with high core-counts serving as backbones for symbolic processing and control tasks.

High-bandwidth memory to accommodate rapid graph traversal.

Inter-node networking using InfiniBand or custom optical links minimizing latency.

The choice between GPU and TPU depends on the workload: GPUs are more broadly programmable, while TPUs deliver peak performance on dense deep learning training and inference but offer less flexibility for the irregular computation typical of symbolic reasoning.

Matching hardware to workloads in this way lets operators trade power efficiency against performance, a balance that differs between AGI training and inference phases.
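The trade-off above can be expressed as a simple placement rule. This is a hypothetical heuristic, not SingularityNET’s scheduler: the profile fields and the 0.3, 0.5, and 0.9 thresholds are illustrative assumptions that a real orchestrator would replace with measured benchmarks.

```python
from enum import Enum


class Device(Enum):
    CPU = "cpu"
    GPU = "gpu"
    TPU = "tpu"


def pick_device(dense_tensor_fraction: float,
                irregular_control_flow: bool,
                tpu_available: bool) -> Device:
    """Hypothetical rule of thumb mapping a workload profile to a device class.

    dense_tensor_fraction: share of runtime spent in dense matrix/tensor math (0..1).
    irregular_control_flow: True for pointer-chasing or branch-heavy code,
        such as symbolic inference or graph pattern matching.
    """
    if not 0.0 <= dense_tensor_fraction <= 1.0:
        raise ValueError("dense_tensor_fraction must be in [0, 1]")
    if irregular_control_flow and dense_tensor_fraction < 0.5:
        return Device.CPU  # mostly symbolic work: high-core-count CPUs
    if tpu_available and not irregular_control_flow and dense_tensor_fraction >= 0.9:
        return Device.TPU  # large, regular tensor programs
    if dense_tensor_fraction >= 0.3:
        return Device.GPU  # flexible acceleration, e.g. RL training
    return Device.CPU


if __name__ == "__main__":
    print(pick_device(0.1, True, True))    # Device.CPU (symbolic reasoning)
    print(pick_device(0.95, False, True))  # Device.TPU (dense training)
    print(pick_device(0.7, True, True))    # Device.GPU (RL with simulation)
```

Putting the GPU as the fallback for mixed workloads reflects the versatility argument in the text: it is the device class that tolerates both regular and irregular code reasonably well.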

Software orchestration, benchmarking and performance validation

Managing these heterogeneous resources requires an advanced orchestration layer capable of dynamically scheduling workloads across nodes based on real-time performance metrics. Benchmarking strategies involve:

Measuring throughput on symbolic reasoning tasks.

Evaluating RL convergence times.

Validating low-latency graph traversals.

Performance validation is critical to ensure incremental improvements align with AGI capability milestones rather than traditional narrow AI benchmarks.
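As an illustration of the graph-traversal benchmark, the harness below times bounded breadth-first traversals over a synthetic graph and reports throughput plus median and tail latency. It runs on a single machine with made-up data, and the function names are hypothetical; a real validation suite would replay representative Hyperon queries across nodes.

```python
import random
import statistics
import time
from collections import deque


def random_graph(n_nodes: int, avg_degree: int, seed: int = 0) -> list:
    """Undirected random graph as adjacency lists (synthetic test data)."""
    rng = random.Random(seed)
    adj = [[] for _ in range(n_nodes)]
    for _ in range(n_nodes * avg_degree // 2):
        a, b = rng.randrange(n_nodes), rng.randrange(n_nodes)
        adj[a].append(b)
        adj[b].append(a)
    return adj


def bfs_edges_scanned(adj: list, source: int, max_depth: int) -> int:
    """Breadth-first traversal to max_depth; returns the number of edges scanned."""
    seen = {source}
    frontier = deque([(source, 0)])
    scanned = 0
    while frontier:
        node, depth = frontier.popleft()
        if depth == max_depth:
            continue
        for nbr in adj[node]:
            scanned += 1
            if nbr not in seen:
                seen.add(nbr)
                frontier.append((nbr, depth + 1))
    return scanned


def benchmark(adj: list, queries: int = 200, max_depth: int = 2, seed: int = 1) -> dict:
    """Throughput (edges/s) and per-query latency percentiles for local traversals."""
    rng = random.Random(seed)
    latencies, total = [], 0
    start = time.perf_counter()
    for _ in range(queries):
        t0 = time.perf_counter()
        total += bfs_edges_scanned(adj, rng.randrange(len(adj)), max_depth)
        latencies.append(time.perf_counter() - t0)
    elapsed = max(time.perf_counter() - start, 1e-12)
    latencies.sort()
    return {
        "edges_per_sec": total / elapsed,
        "p50_ms": 1000 * statistics.median(latencies),
        # Nearest-rank approximation of the 99th percentile.
        "p99_ms": 1000 * latencies[int(0.99 * (len(latencies) - 1))],
    }


if __name__ == "__main__":
    print(benchmark(random_graph(10_000, 8)))
```

Tracking tail latency (p99) alongside throughput matters because a distributed reasoning step waits on its slowest traversal.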

4. Industry context: AI supercomputer trends, data centers and competitive landscape

SingularityNET’s initiative arrives amid accelerating growth in AI supercomputing capacity, much of it concentrated in specialized data centers run by hyperscalers such as AWS, Google Cloud, and Microsoft Azure, alongside emerging distributed networks that challenge this model.

Global AI supercomputer trends and distribution

Top AI systems are increasingly distributed geographically rather than centralized in singular facilities. Aggregate FLOPs across networks are scaling exponentially due to advances in chip technology and interconnects.

Distributed supercomputers enable flexible workload partitioning and resilience against localized disruptions—a key advantage for long-term AGI projects requiring sustained computation over months or years.

These trends reinforce the strategic rationale behind SingularityNET’s global supercomputer network roadmap.

For a comprehensive analysis, see Trends in AI supercomputers (arXiv).
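The partitioning-and-resilience argument can be sketched with a classic greedy scheduler: assign work to the least-loaded node, and when a node drops out, move only its tasks onto the survivors. This uses the textbook longest-processing-time-first heuristic as a stand-in; it is not SingularityNET’s orchestration logic, and the shard names, costs, and node names are hypothetical.

```python
import heapq


def assign(tasks: dict, nodes: list) -> dict:
    """Longest-processing-time-first greedy: each task goes to the least-loaded node."""
    if not nodes:
        raise ValueError("need at least one node")
    heap = [(0.0, name) for name in nodes]
    heapq.heapify(heap)
    plan = {name: [] for name in nodes}
    for task, cost in sorted(tasks.items(), key=lambda kv: (-kv[1], kv[0])):
        load, name = heapq.heappop(heap)
        plan[name].append(task)
        heapq.heappush(heap, (load + cost, name))
    return plan


def fail_over(plan: dict, tasks: dict, failed: str) -> dict:
    """Move a failed node's tasks onto the least-loaded surviving nodes."""
    survivors = {n: list(ts) for n, ts in plan.items() if n != failed}
    if not survivors:
        raise RuntimeError("no surviving nodes")
    heap = [(sum(tasks[t] for t in ts), n) for n, ts in survivors.items()]
    heapq.heapify(heap)
    for task in sorted(plan[failed], key=lambda t: (-tasks[t], t)):
        load, name = heapq.heappop(heap)
        survivors[name].append(task)
        heapq.heappush(heap, (load + tasks[task], name))
    return survivors


if __name__ == "__main__":
    # Hypothetical shard costs (e.g. GPU-hours) and node names.
    tasks = {"shard-a": 4.0, "shard-b": 3.0, "shard-c": 2.0, "shard-d": 1.0}
    plan = assign(tasks, ["eu-1", "us-1"])
    print(plan)  # {'eu-1': ['shard-a', 'shard-d'], 'us-1': ['shard-b', 'shard-c']}
    print(fail_over(plan, tasks, "eu-1"))
```

Reassigning only the orphaned tasks, instead of recomputing the whole plan, keeps work that is already running on healthy nodes undisturbed during a localized outage.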

The data center industry’s role in AGI infrastructure

Data centers remain foundational components offering colocation services, power supply stability, cooling solutions, physical security, and fiber-optic connectivity essential for AI workloads. Energy efficiency is increasingly critical given environmental concerns associated with large-scale computation.
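Data-center energy efficiency is commonly summarized by power usage effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment, so 1.0 is the ideal. The arithmetic below shows how PUE scales an IT load into facility-level consumption; the 500 kW load and the PUE of 1.3 are illustrative assumptions, not figures for any SingularityNET node.

```python
HOURS_PER_YEAR = 8760  # non-leap year


def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: facility energy / IT equipment energy (>= 1.0 in practice)."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def annual_facility_kwh(it_load_kw: float, pue_value: float) -> float:
    """Yearly facility energy for a constant IT load at a given PUE."""
    return it_load_kw * pue_value * HOURS_PER_YEAR


if __name__ == "__main__":
    # Hypothetical node: 500 kW of IT load in a facility running at PUE 1.3.
    yearly = annual_facility_kwh(500, 1.3)
    overhead = yearly - 500 * HOURS_PER_YEAR  # cooling, power conversion, lighting
    print(f"{yearly:,.0f} kWh/year total, {overhead:,.0f} kWh/year overhead")
```

With these assumed inputs it prints about 5,694,000 kWh per year in total, of which roughly 1,314,000 kWh is non-IT overhead, which is why small PUE improvements matter at supercomputer scale.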

By partnering with existing data centers that can host specialized AGI hardware under SingularityNET’s decentralized governance model, the initiative builds on mature infrastructure while reducing upfront capital expenditure.

Understanding data center supply chain constraints helps anticipate deployment challenges and opportunities for efficiency gains.

For a detailed discussion, see The role of the data center industry in AI development (arXiv).

Competitive and partnership landscape

Industry players range from hyperscale cloud providers focusing on centralized infrastructures to research consortia exploring federated computing models. SingularityNET differentiates itself through its decentralized architecture promoting openness and community governance rather than closed proprietary systems.

Potential partnerships include academic institutions seeking shared compute resources and emerging blockchain-enabled marketplaces facilitating resource sharing under transparent protocols.

This contrast between decentralized networks and hyperscalers positions SingularityNET as a pioneer of new partnership models for AGI infrastructure.

5. Governance, policy and ethical considerations for SingularityNET’s AGI supercomputer network

Building frontier AI systems like OpenCog Hyperon-powered networks requires navigating complex regulatory landscapes focused on safety, transparency, accountability, and ethical development principles.

Frontier AI policy and regulatory frameworks

Emerging policy frameworks emphasize risk assessment tailored to frontier AI systems—those capable of transformative impacts including potential existential risks associated with uncontrolled AGI capabilities. Compliance includes:

Transparent audit trails.

Risk mitigation protocols.

Alignment with human values.

SingularityNET plans to engage proactively with regulators to ensure that its decentralized infrastructure meets or exceeds evolving standards for regulating AI supercomputers.

Decentralized governance, transparency and ethical safeguards

SingularityNET’s governance model empowers community stakeholders through voting mechanisms that govern compute resource allocation and software updates, and through ethical review boards that guide the trajectory of AGI development.
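A stake-weighted vote with a participation quorum is one common way such voting mechanisms are built. The sketch below is a hypothetical illustration: the `Ballot` fields, the 20% quorum, the simple-majority threshold, and the rule that a voter’s latest ballot counts are all assumptions, not SingularityNET’s actual governance rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Ballot:
    voter: str
    weight: float  # assumption: voting weight, e.g. staked tokens
    approve: bool


def tally(ballots: list, total_weight: float,
          quorum: float = 0.2, threshold: float = 0.5) -> str:
    """Weighted yes/no vote with a participation quorum; a voter's latest ballot counts."""
    if total_weight <= 0:
        raise ValueError("total_weight must be positive")
    latest = {}
    for ballot in ballots:
        if ballot.weight < 0:
            raise ValueError("weights must be non-negative")
        latest[ballot.voter] = ballot  # later ballots override earlier ones
    cast = sum(b.weight for b in latest.values())
    if cast == 0 or cast / total_weight < quorum:
        return "no quorum"
    yes = sum(b.weight for b in latest.values() if b.approve)
    return "approved" if yes / cast > threshold else "rejected"


if __name__ == "__main__":
    # Hypothetical proposal: allocate extra GPU hours to a research group.
    votes = [Ballot("lab-a", 30, True), Ballot("node-op-b", 20, False)]
    print(tally(votes, total_weight=100))  # approved (50% turnout, 60% yes)
```

The quorum check guards against a small, motivated minority deciding resource allocation when most stakeholders are absent.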

Transparency is prioritized by publishing audit logs accessible to researchers and regulators alike. Ethical guardrails are embedded into development pipelines preventing misuse or unintended consequences.
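Published audit logs are most useful when readers can detect after-the-fact edits. A hash chain, in which each entry commits to the hash of the one before it, is one standard way to make a log tamper-evident. The sketch below assumes that design rather than describing SingularityNET’s documented format, and the event fields are hypothetical.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev: str, event: dict) -> str:
    # Canonical JSON so the same event always hashes the same way.
    body = json.dumps({"prev": prev, "event": event}, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash commits to the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    event = json.loads(json.dumps(event))  # deep copy so later caller edits don't leak in
    entry = {"prev": prev, "event": event, "hash": _digest(prev, event)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute the chain. Editing, reordering, or deleting an earlier entry breaks it;
    detecting a truncated tail requires publishing the latest hash separately."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


if __name__ == "__main__":
    log = []
    append_entry(log, {"action": "allocate", "node": "eu-1", "gpu_hours": 120})
    append_entry(log, {"action": "update", "component": "scheduler", "version": "0.2"})
    print(verify(log))  # True
    log[0]["event"]["gpu_hours"] = 999  # tamper with history
    print(verify(log))  # False
```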

This decentralized AI governance aims to balance innovation speed with safety assurances critical for responsible AGI evolution.

6. Use cases, early applications and user scenarios for SingularityNET supercomputer services

The distributed AGI-oriented supercomputer network promises wide-ranging applications spanning research breakthroughs to commercial services enabled by scalable cognitive computing power.

Research and AGI development workloads

Researchers will use the network for large-scale experiments that require multi-modal data fusion (combining vision, language, and audio) and for training complex cognitive systems that conventional infrastructure cannot support. Faster iteration cycles should help push cognitive architectures like OpenCog Hyperon toward true general intelligence.

Such workloads benefit from the platform’s flexible resource allocation supporting experimental agility alongside robust performance guarantees.

Commercial AI workloads, marketplaces and decentralized services

On the commercial front, the platform supports machine learning model training-as-a-service for enterprises that need scalable compute without vendor lock-in. Inference marketplaces let third-party developers offer specialized cognitive services through SingularityNET’s decentralized protocols, enabling edge-to-cloud integrations and new business models based on trustless compute sharing.

These commercial AGI services create ecosystems where innovation can flourish aligned with user-defined priorities rather than proprietary agendas.

News and early case studies

Media coverage highlights the imminent launch of the first node as a watershed moment validating technical concepts underpinning this vision. Early partnerships include collaborations with academic labs testing distributed cognition workloads alongside commercial pilots exploring decentralized marketplaces.

These initial case studies illustrate practical feasibility paving the way toward broader adoption.

7. Challenges, risk mitigation and roadmap to achieving AGI with supercomputer networks

Despite promising prospects, building distributed AGI via supercomputer networks entails significant challenges spanning technical scalability, operational constraints, energy consumption, safety concerns, and governance complexity.

Technical and scalability challenges

Scaling beyond individual nodes introduces inter-node latency, which undermines the tight integration that cognitive architectures like OpenCog Hyperon require. Software complexity also grows sharply, because the stack must manage fault tolerance, synchronization, and the consistency of knowledge graphs across distributed environments, and verifying emergent behaviors remains an open research problem.

Addressing these requires innovative protocols optimizing workload partitioning alongside rigorous validation regimes ensuring system reliability as it scales toward AGI capabilities.
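One known way to keep replicated knowledge-graph state consistent without a central coordinator is a conflict-free replicated data type (CRDT). The sketch below is a last-writer-wins map, the simplest such type; it is an illustrative choice rather than Hyperon’s documented mechanism, the edge keys and truth values are hypothetical, and its main weakness is that concurrent updates to the same key silently discard all but one write.

```python
from typing import Dict, Tuple

# A replicated entry: (value, (logical_clock, replica_id)).
# Assumption: each stamp identifies exactly one write; the replica id breaks clock ties.
Stamp = Tuple[int, str]
Entry = Tuple[object, Stamp]


def merge(a: Dict[str, Entry], b: Dict[str, Entry]) -> Dict[str, Entry]:
    """Last-writer-wins merge: per key, keep the entry with the greater stamp.

    Commutative, associative, and idempotent, so replicas that exchange
    state in any order converge to the same map.
    """
    out = dict(a)
    for key, (value, stamp) in b.items():
        if key not in out or stamp > out[key][1]:
            out[key] = (value, stamp)
    return out


if __name__ == "__main__":
    # Hypothetical truth values for knowledge-graph edges held on two nodes.
    eu = {"cat->animal": (0.90, (1, "eu-1"))}
    us = {"cat->animal": (0.70, (2, "us-1")), "dog->animal": (0.95, (1, "us-1"))}
    assert merge(eu, us) == merge(us, eu)  # order of exchange does not matter
    print(merge(eu, us))
```

Convergence comes cheaply here, but a probabilistic reasoner may prefer merges that combine evidence from both replicas instead of discarding one, which is part of why distributed consistency remains a research problem.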

Operational, energy and data center constraints

Running large-scale AI infrastructures demands vast energy resources subject to cooling limitations common in data centers worldwide. SingularityNET mitigates this by leveraging existing colocation facilities optimized for energy efficiency combined with scheduling algorithms minimizing peak loads—thereby reducing environmental impact while maintaining computational throughput.
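Scheduling to minimize peak load can be as simple as placing deferrable batch work into the quietest time slots. The greedy sketch below is a hypothetical illustration of that idea, not SingularityNET’s algorithm; it assumes each job fits in one slot and that the baseline load forecast is known, and all figures are made up.

```python
def schedule_deferrable(base_load_kw: list, jobs_kw: dict) -> tuple:
    """Greedy peak shaving: place each one-slot job (largest first) in the currently least-loaded slot.

    Returns (placement: job -> slot index, resulting load per slot).
    """
    if not base_load_kw:
        raise ValueError("need at least one time slot")
    load = list(base_load_kw)
    placement = {}
    for job, kw in sorted(jobs_kw.items(), key=lambda kv: (-kv[1], kv[0])):
        slot = min(range(len(load)), key=lambda i: (load[i], i))
        load[slot] += kw
        placement[job] = slot
    return placement, load


if __name__ == "__main__":
    # Hypothetical hourly baseline draw (kW) and deferrable batch jobs (kW for one hour).
    base = [100, 60, 80, 50]
    jobs = {"train-a": 40, "index-c": 30, "eval-b": 20}
    placement, load = schedule_deferrable(base, jobs)
    print(placement)  # {'train-a': 3, 'index-c': 1, 'eval-b': 2}
    print(max(load))  # 100, versus 190 if every job ran in hour 0
```

Placing the largest jobs first is the same intuition as longest-processing-time scheduling: big items are hardest to fit later without creating a new peak.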

Regulatory, safety and decentralization risks

Governance risks include misalignment among decentralized stakeholders, which could lead to fragmented oversight or delayed responses to safety incidents. Keeping AGI systems aligned with human values requires rigorous safety protocols, reinforced by transparent community oversight and by evolving regulatory frameworks that target frontier AI risks.

Together, these strategies aim to reduce the existential risks associated with uncontrolled AGI emergence while fostering innovation within ethical boundaries.

FAQ: Likely reader questions about SingularityNET supercomputer networks and AGI

Q1: What exactly is SingularityNET building and why is it significant?

SingularityNET is constructing a global distributed supercomputer network supported by a $53 million investment designed specifically to accelerate development of artificial general intelligence (AGI). This open platform combines cutting-edge hardware nodes interconnected worldwide under decentralized governance—aimed at overcoming current computational limits impeding next-gen AI breakthroughs.

Q2: How does OpenCog Hyperon fit into their plan?

OpenCog Hyperon is the foundational AGI architecture driving computational requirements; it uses graph-based cognitive models integrating symbolic reasoning with reinforcement learning that demand heterogeneous hardware support across a distributed network. Its unique design necessitates scalable compute resources provided by SingularityNET's supercomputer nodes.

Q3: When will the supercomputer network be operational and what to expect from the first node?

The first node will go live within weeks as part of an incremental rollout strategy validating key performance benchmarks including low-latency interconnects and heterogeneous workload processing capabilities. This milestone marks the transition from concept to operational distributed computation supporting early research trials.

Q4: What are the biggest risks and how is SingularityNET addressing them?

Major risks include technical scalability limits such as inter-node latency, operational constraints around energy efficiency, and regulatory uncertainty over frontier AI safety. SingularityNET plans to mitigate these through decentralized governance that promotes transparency, partnerships with energy-efficient data centers, rigorous benchmarking, and active engagement with evolving regulatory frameworks.

Q5: How can researchers or organizations access or contribute to the network?

Access models include using compute resources offered as services through decentralized marketplaces, collaborative research partnerships, and community governance channels that invite stakeholder input on how the infrastructure evolves. Updates are published regularly through official ecosystem communications.

Conclusion: Actionable takeaways and forward-looking analysis for SingularityNET and AGI supercomputing

SingularityNET’s $53 million infrastructure commitment marks a strategic inflection point in next-generation AI development by establishing a globally distributed supercomputer network tailored specifically for OpenCog Hyperon’s unique AGI architecture. This initiative stands out through its emphasis on decentralized governance paired with cutting-edge hardware-software integration—offering an alternative pathway distinct from centralized hyperscaler domination.

Stakeholders should engage early: researchers can explore new experimental paradigms; data-center operators can prepare for partnership opportunities emphasizing energy-efficient colocation; policy makers must monitor transparency standards; enterprises stand to benefit from emerging commercial offerings within this open ecosystem.

Looking ahead, key milestones include the first node’s performance validation reports, expected imminently; ongoing transparency audits of ethical guardrails; and regulatory guidance on safe frontier AI deployment. Each is an essential indicator of progress along SingularityNET’s ambitious AGI roadmap toward transformative artificial intelligence capabilities at scale.