The Surprising Truth About Chain of Thought Monitoring: Boosting Accuracy in Complex Systems

- Olivia Johnson

- Aug 5, 2025

- 13 min read

In today's rapidly evolving technological landscape, complex systems—ranging from artificial intelligence (AI) models to large-scale industrial processes—require precise decision-making and error reduction strategies to function effectively. One emerging concept gaining traction in this realm is Chain of Thought Monitoring (CoTM). Although it sounds technical and niche, CoTM offers surprising advantages that can dramatically improve accuracy and reliability in multifaceted environments.

This article dives deep into the surprising truth about chain of thought monitoring, exploring how it fundamentally boosts accuracy in complex systems. You’ll discover what CoTM entails, why it matters, how it works in practice, and actionable insights to leverage it for better system performance. Whether you're a data scientist, AI researcher, or systems engineer, this comprehensive guide will equip you with the knowledge to harness CoTM’s full potential.

Understanding Chain of Thought Monitoring: What It Is and Why It Matters

Defining Chain of Thought Monitoring

At its core, Chain of Thought Monitoring refers to the continuous observation, evaluation, and regulation of sequential reasoning steps within a complex process or system. Imagine a detective solving a mystery by piecing together clues one after another—CoTM ensures that each step in the detective’s reasoning is sound before moving on to the next.

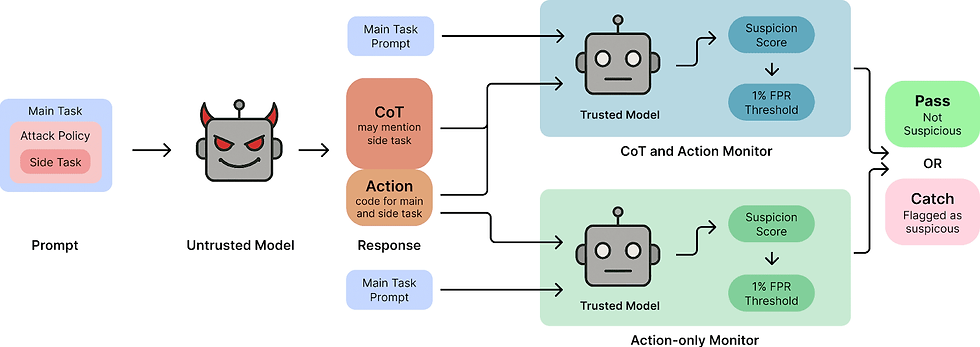

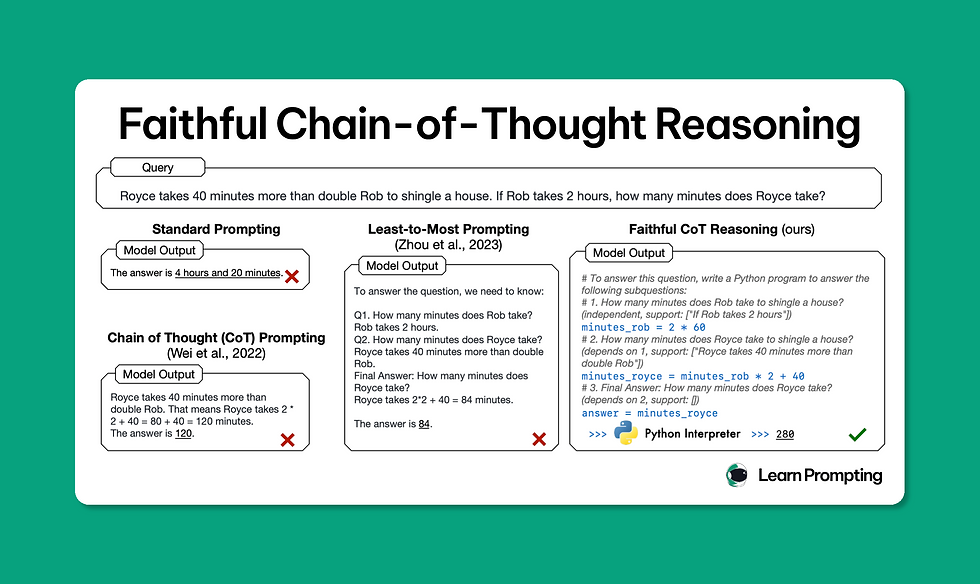

In AI and machine learning (ML), CoTM involves tracking intermediate reasoning stages within models—particularly those using multi-step problem-solving approaches—to identify errors early and refine outputs. This contrasts with traditional models that only evaluate final answers without insight into the reasoning path.

More specifically, CoTM can be thought of as a meta-cognitive process embedded within AI architectures, which not only generates reasoning steps but also actively assesses their validity. This dual role distinguishes CoTM from simple chain-of-thought prompting, adding a supervisory layer that acts like an internal auditor, catching missteps before they propagate.

For example, in natural language processing tasks like question answering or commonsense reasoning, a model with CoTM doesn’t just output an answer but evaluates the logical flow of its intermediate conclusions. If a contradiction or inconsistency is detected, the system can backtrack or revise its reasoning, much like a human would reconsider assumptions when solving a complex problem.

Why Chain of Thought Monitoring Is Critical for Complex Systems

Complex systems often entail multiple interdependent components and decision points, where small errors can cascade into significant failures or inaccuracies. By monitoring the "thought chain" or reasoning process continuously:

Error propagation is minimized: Early detection stops mistakes from compounding. In practice, this means that if an AI model misinterprets a premise early on, CoTM can catch this error before it influences the final output, preventing a cascade of faulty conclusions.

Transparency is enhanced: Understanding intermediate steps builds trust and interpretability. Stakeholders—from developers to end-users—gain visibility into how conclusions are reached, which is critical in regulated industries like finance and healthcare.

Adaptive corrections are enabled: Systems can dynamically adjust based on real-time feedback. For instance, a robotic system encountering unexpected sensor data can pause its operation, reevaluate its reasoning chain, and adjust its actions accordingly.

Improved accuracy and robustness: End results are more reliable due to built-in checkpoints. By validating each step against predefined rules or confidence thresholds, CoTM helps catch and correct errors before they reach the final output.

Moreover, CoTM can support incremental learning: feedback from intermediate reasoning errors can be used to refine model parameters at a finer grain than traditional end-to-end training allows. This can lead to faster convergence and better generalization, especially in tasks requiring deep logical inference or multi-hop reasoning.

Some studies report that integrating CoTM mechanisms into AI workflows can increase model accuracy by up to 20% on tasks requiring logical deduction or multi-step calculations (source).

“Chain of thought monitoring bridges the gap between raw computation and human-like reasoning, pushing AI beyond black-box predictions.” — Dr. Emily Chen, AI Researcher at MIT

The Mechanisms Behind Chain of Thought Monitoring in AI Systems

How Chain of Thought Works in Modern AI Models

Many advanced AI systems, such as large language models (LLMs), utilize chain of thought prompting, where the model generates intermediate reasoning steps before producing a final answer. Chain of Thought Monitoring extends this by:

Tracking each generated step for consistency: This involves logging and analyzing intermediate outputs, ensuring that each step logically follows from the previous one. For example, in a multi-step math problem, the system verifies that arithmetic operations are correctly applied at every stage.

Validating logical coherence between steps: Beyond syntactic correctness, the system evaluates semantic relationships. In legal document analysis, for instance, CoTM checks that conclusions drawn from clauses are logically sound and do not contradict prior statements.

Flagging potentially flawed jumps or contradictions: Sudden leaps in reasoning or contradictions are highlighted for review or correction. This is crucial in dialogue systems where inconsistent responses can undermine user trust.

Applying corrective feedback loops to refine reasoning paths: When inconsistencies are detected, the system can initiate re-computation or invoke alternative reasoning strategies. This feedback loop mimics cognitive reflection in humans, enabling iterative improvement.
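Here is a minimal sketch of the tracking and validation steps above for the multi-step math case. It re-checks every arithmetic step in a chain. It assumes each step is emitted as a plain string of the form `a op b = c`. That format and the `verify_steps` name are hypothetical, and a production monitor would need a much more robust parser.

```python
import operator
import re

# Hypothetical step format assumed for this sketch: "<number> <op> <number> = <number>"
STEP_PATTERN = re.compile(
    r"^\s*(-?\d+(?:\.\d+)?)\s*([+\-*/])\s*(-?\d+(?:\.\d+)?)\s*=\s*(-?\d+(?:\.\d+)?)\s*$"
)
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}


def verify_steps(steps, tol=1e-9):
    """Re-check every arithmetic step; return (index, step, reason) for each failure."""
    failures = []
    for i, step in enumerate(steps):
        match = STEP_PATTERN.match(step)
        if match is None:
            failures.append((i, step, "unparseable step"))
            continue
        left, op, right, claimed = match.groups()
        if op == "/" and float(right) == 0:
            failures.append((i, step, "division by zero"))
            continue
        actual = OPS[op](float(left), float(right))
        if abs(actual - float(claimed)) > tol:
            failures.append((i, step, f"expected {actual:g}, got {claimed}"))
    return failures


chain = ["12 * 3 = 36", "36 + 7 = 43", "43 / 2 = 21"]
for index, step, reason in verify_steps(chain):
    print(f"step {index} flagged: {step!r} ({reason})")
```

This checks each step in isolation. Confirming that a step actually uses the previous step's result would need an additional link check.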

Technically, this is realized through layered architectures combining neural networks with symbolic reasoning modules or rule-based validators. For instance, a neural network might propose a reasoning chain, which is then passed to a symbolic checker that enforces domain-specific logic rules. If discrepancies arise, the system triggers a feedback mechanism that adjusts the neural network’s parameters or prompts it to reconsider certain steps.
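The propose-then-check pattern can be sketched as a loop with three parts:

- A generator proposes a chain.
- A rule-based checker reports violations.
- The violations are fed back into the next attempt.

`propose_chain` is a hypothetical stub standing in for a neural model. The retry-with-feedback path illustrates only the "prompts it to reconsider" branch. Adjusting model parameters is outside what a short sketch can show.

```python
from typing import Callable, List, Optional, Tuple

Chain = List[str]
Checker = Callable[[Chain], List[str]]


def monitored_generate(
    propose: Callable[[List[str]], Chain],
    checker: Checker,
    max_attempts: int = 3,
) -> Tuple[Optional[Chain], List[str]]:
    """Propose a chain, check it, and re-propose with feedback until it passes."""
    feedback: List[str] = []
    for _ in range(max_attempts):
        chain = propose(feedback)
        violations = checker(chain)
        if not violations:
            return chain, []
        feedback = violations  # passed back to the proposer on the next attempt
    return None, feedback  # give up and surface the last violations


# Hypothetical stand-in for a neural proposer: it only completes its chain
# once it receives feedback about the missing conclusion.
def propose_chain(feedback: List[str]) -> Chain:
    if feedback:
        return ["premise: all invoices are dated", "conclusion: invoice 7 is dated"]
    return ["premise: all invoices are dated"]


def requires_conclusion(chain: Chain) -> List[str]:
    """Toy symbolic rule (hypothetical): the final step must be an explicit conclusion."""
    if not chain or not chain[-1].startswith("conclusion:"):
        return ["chain must end with a conclusion step"]
    return []


result, issues = monitored_generate(propose_chain, requires_conclusion)
print(result, issues)
```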

In practical terms, CoTM architectures often integrate:

Attention mechanisms that highlight critical reasoning steps for focused evaluation.

Memory components that store intermediate states to track progression.

External knowledge bases used to validate facts against trusted sources.

This hybrid approach balances the flexibility of neural models with the rigor of symbolic logic, enabling more trustworthy and interpretable AI systems.

Techniques Used for Monitoring Reasoning Chains

Several methodologies have emerged to implement CoTM effectively:

| Technique | Description | Example Use Case |
|---|---|---|
| Step-wise Confidence Scoring | Assigns confidence levels to each reasoning step, quantifying uncertainty and enabling prioritization of review or correction. | Detecting uncertain inference in medical diagnosis where low-confidence steps trigger clinician review. |
| Cross-Validation of Steps | Compares multiple reasoning chains generated independently to identify consensus or discrepancies, enhancing reliability. | Verifying mathematical proofs by cross-checking alternative derivations. |
| Symbolic Reasoning Integration | Combines neural outputs with logical rule-checking to ensure compliance with domain-specific constraints. | Legal document analysis requiring strict logic adherence and contradiction detection. |
| Error Backpropagation on Steps | Adjusts model parameters based on errors found mid-reasoning, enabling fine-grained learning and correction. | Improving chatbot dialogue consistency by penalizing contradictory intermediate responses. |

Step-wise Confidence Scoring

This technique involves assigning probabilistic confidence scores to each reasoning step, often derived from model softmax outputs or Bayesian uncertainty estimates. By quantifying uncertainty, systems can flag low-confidence steps for further scrutiny, human intervention, or alternative reasoning paths. For example, in autonomous driving, if the model is uncertain about interpreting a traffic signal at a particular step, it can trigger a safety protocol or request human override.
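One way to derive a per-step score from softmax outputs is to average the log-probabilities of a step's tokens and exponentiate the result. This gives the geometric-mean token probability. The sketch assumes the token log-probabilities are already available per step. Many LLM APIs can return them, but the input format here is hypothetical, and the 0.7 threshold is an arbitrary illustrative value.

```python
import math
from typing import List, Sequence, Tuple


def step_confidence(token_logprobs: Sequence[float]) -> float:
    """Geometric-mean token probability of one step: exp(mean log-probability)."""
    if not token_logprobs:
        raise ValueError("a step must contain at least one token")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


def flag_low_confidence(
    steps: List[Tuple[str, Sequence[float]]], threshold: float = 0.7
) -> List[Tuple[int, str, float]]:
    """Return (index, text, score) for every step scoring below the (illustrative) threshold."""
    flagged = []
    for i, (text, logprobs) in enumerate(steps):
        score = step_confidence(logprobs)
        if score < threshold:
            flagged.append((i, text, score))
    return flagged


# Hypothetical per-step token log-probabilities for a driving scenario.
steps = [
    ("light ahead is red", [math.log(0.95), math.log(0.9)]),
    ("light is for our lane", [math.log(0.6), math.log(0.5)]),
]
for index, text, score in flag_low_confidence(steps):
    print(f"step {index} ({text!r}) below threshold: {score:.2f}")
```

A flagged step is where a system would trigger its safety protocol or request a human override.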

Cross-Validation of Steps

Generating multiple independent reasoning chains for the same problem and comparing them helps detect biases or errors specific to one chain. Discrepancies signal potential flaws, prompting further analysis. This method is particularly powerful in high-stakes domains like scientific research or legal decision-making, where consensus among multiple reasoning paths increases confidence in conclusions.
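A simple version of this is majority voting over the final answers of independently sampled chains, similar in spirit to self-consistency decoding. The sketch assumes the chains have already been generated and reduced to their final answers. The 0.6 agreement threshold is an illustrative assumption.

```python
from collections import Counter
from collections.abc import Hashable
from typing import List, Tuple


def cross_validate(
    answers: List[Hashable], min_agreement: float = 0.6
) -> Tuple[Hashable, float, bool]:
    """Return the majority answer, its agreement ratio, and whether to flag for review."""
    if not answers:
        raise ValueError("need at least one reasoning chain")
    # Ties resolve to the answer seen first, since Counter preserves insertion order.
    answer, votes = Counter(answers).most_common(1)[0]
    agreement = votes / len(answers)
    return answer, agreement, agreement < min_agreement


answer, agreement, needs_review = cross_validate([42, 42, 41, 42, 40])
print(answer, agreement, needs_review)  # 42 0.6 False
```

Low agreement does not say which chain is wrong, only that the discrepancy warrants the further analysis described above.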

Symbolic Reasoning Integration

By embedding domain-specific rules and logical constraints, symbolic reasoning modules act as gatekeepers for neural-generated outputs. This hybrid approach ensures that outputs conform to established standards and do not violate fundamental principles—essential in regulated fields such as finance or healthcare.
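A symbolic gatekeeper can be as simple as a list of named predicates that every structured output must satisfy before it is released. The field names and both rules below are hypothetical placeholders, not real regulatory constraints.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Rule:
    name: str
    holds: Callable[[Dict[str, float]], bool]


# Hypothetical domain rules for a loan-style recommendation.
RULES = [
    Rule("amount_positive", lambda o: o["amount"] > 0),
    Rule("within_income_multiple", lambda o: o["amount"] <= 4 * o["annual_income"]),
]


def gatekeep(output: Dict[str, float], rules: List[Rule] = RULES) -> List[str]:
    """Return the names of all violated rules; an empty list means the output may pass."""
    return [rule.name for rule in rules if not rule.holds(output)]


print(gatekeep({"amount": 250_000, "annual_income": 50_000}))
```

Returning every violated rule rather than stopping at the first gives reviewers the full picture of why an output was blocked.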

Error Backpropagation on Steps

Unlike traditional end-to-end backpropagation that focuses on final output errors, this technique propagates errors detected at intermediate reasoning steps back into the model. This fine-grained feedback allows the model to learn from partial failures, improving its reasoning capabilities iteratively.
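One way to read step-level error propagation is as step-level (process) supervision. Each intermediate step gets a correctness label, and the training loss adds a per-step term to the usual final-answer term.

The sketch below only computes that combined loss in plain Python to show its shape. The labels, predicted probabilities, and the `step_weight` parameter are illustrative assumptions. In practice the same expression would be written in an autodiff framework so gradients can flow back into the model.

```python
import math
from typing import Sequence


def bce(p: float, label: int, eps: float = 1e-12) -> float:
    """Binary cross-entropy for one predicted probability p and a 0/1 label."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


def combined_loss(
    step_probs: Sequence[float],
    step_labels: Sequence[int],
    final_prob: float,
    final_label: int,
    step_weight: float = 0.5,  # illustrative weighting between the two terms
) -> float:
    """Final-answer loss plus the weighted mean of per-step losses."""
    if len(step_probs) != len(step_labels) or not step_probs:
        raise ValueError("need one label per step and at least one step")
    step_term = sum(bce(p, y) for p, y in zip(step_probs, step_labels)) / len(step_probs)
    return bce(final_prob, final_label) + step_weight * step_term


# The model rates its second step as likely correct, but a reviewer labelled it
# wrong (0), so that step dominates the loss even though the final answer was right.
print(round(combined_loss([0.9, 0.8, 0.95], [1, 0, 1], final_prob=0.9, final_label=1), 3))
```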

By embedding these techniques within AI pipelines, systems gain a meta-cognitive layer that supervises their own thought processes, enhancing both accuracy and interpretability.

For a deeper dive into these mechanisms, Microsoft’s research on interpretable AI offers valuable insights (Microsoft Research).

Real-World Applications: How Chain of Thought Monitoring Boosts System Accuracy

Enhancing AI-Powered Decision Making in Healthcare

Healthcare systems increasingly rely on AI for diagnostic support, treatment recommendations, and patient risk assessments. However, misdiagnoses due to erroneous AI reasoning can have grave consequences.

By implementing CoTM:

Diagnostic algorithms verify symptom-to-diagnosis logic step-by-step, ensuring that each symptom is appropriately weighted and logically connected to potential diagnoses.

Treatment plans are cross-checked against medical guidelines automatically, ensuring compliance with best practices and avoiding contraindications.

Alert systems flag inconsistencies before clinical decisions are made, such as detecting when a proposed medication conflicts with patient allergies or existing conditions.
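The medication check in the last item can be sketched as a lookup of the proposed drug against the patient's recorded allergies and conditions. All drug names, classes, and the contraindication table below are hypothetical placeholders. A real system would draw them from a curated clinical knowledge base.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class Patient:
    allergies: Set[str] = field(default_factory=set)
    conditions: Set[str] = field(default_factory=set)


# Hypothetical knowledge base: drug -> drug class, and class -> contraindicated conditions.
DRUG_CLASS: Dict[str, str] = {"drug_a": "class_x", "drug_b": "class_y"}
CONTRAINDICATIONS: Dict[str, Set[str]] = {"class_x": {"condition_1"}, "class_y": set()}


def check_prescription(drug: str, patient: Patient) -> List[str]:
    """Return human-readable alerts; an empty list means no conflict was found."""
    drug_class = DRUG_CLASS.get(drug)
    if drug_class is None:
        return [f"{drug}: unknown drug, route to clinician"]
    alerts = []
    if drug in patient.allergies or drug_class in patient.allergies:
        alerts.append(f"{drug}: patient allergy recorded")
    for condition in sorted(CONTRAINDICATIONS.get(drug_class, set()) & patient.conditions):
        alerts.append(f"{drug}: contraindicated with {condition}")
    return alerts


patient = Patient(allergies={"class_y"}, conditions={"condition_1"})
print(check_prescription("drug_a", patient))
print(check_prescription("drug_b", patient))
```

Unknown drugs are routed to a clinician rather than silently passed, on the assumption that a gap in the knowledge base should never look like a clean result.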

For example, IBM Watson Health has been described as applying chain of thought principles so that each recommendation aligns with evidence-based criteria, with the aim of reducing error rates in oncology diagnostics (IBM Watson Health). Similarly, AI-powered radiology tools can use CoTM to analyze imaging data with multi-step reasoning. They distinguish between similar-looking pathologies by evaluating key features in sequence, which helps reduce both false positives and false negatives.

Additionally, CoTM supports explainability by providing clinicians with a transparent reasoning trail, facilitating trust and enabling collaborative decision-making between humans and AI.

Improving Financial Risk Models

Financial institutions use complex models involving multi-layered assumptions and market behavior predictions. Errors or oversights in any reasoning step could lead to substantial monetary losses.

CoTM enables:

Continuous validation of risk assumptions during simulations, ensuring that each modeling layer adheres to regulatory frameworks and internal risk policies.

Detection of illogical patterns in transaction analysis, such as detecting money laundering by sequentially assessing transaction histories, counterparties, and contextual data.

Real-time alerts when predictive chains deviate from regulatory compliance, enabling prompt intervention.

A case study from JP Morgan Chase reported a 15% improvement in fraud detection accuracy after the bank integrated chain of thought monitoring techniques into its AI frameworks (JP Morgan Report). The system broke fraud detection into sequential reasoning steps (such as anomaly detection, pattern recognition, and rule-based validation). This let it isolate and correct errors early, reducing both false alarms and missed fraud cases.
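The staged decomposition described above (anomaly detection, pattern recognition, rule-based validation) can be sketched as a pipeline that records each stage's verdict. A reviewer can then see exactly which step raised a flag. Every stage function and threshold below is a hypothetical placeholder, not a description of any bank's system.

```python
from statistics import mean, pstdev
from typing import Callable, Dict, List, Optional, Tuple

Txn = Dict[str, float]
Stage = Callable[[Txn, List[Txn]], Optional[str]]  # returns a reason string if it flags


def anomaly_stage(txn: Txn, history: List[Txn]) -> Optional[str]:
    """Hypothetical rule: flag amounts more than 3 standard deviations above history."""
    amounts = [t["amount"] for t in history]
    if len(amounts) < 2:
        return None
    mu, sigma = mean(amounts), pstdev(amounts)
    if sigma > 0 and (txn["amount"] - mu) / sigma > 3:
        return "amount more than 3 std devs above history"
    return None


def pattern_stage(txn: Txn, history: List[Txn]) -> Optional[str]:
    """Hypothetical rule: flag five or more prior transactions within the last hour."""
    recent = [t for t in history if txn["time"] - t["time"] < 3600]
    return "burst of transactions within an hour" if len(recent) >= 5 else None


def rule_stage(txn: Txn, history: List[Txn]) -> Optional[str]:
    """Hypothetical fixed reporting limit."""
    return "exceeds hypothetical reporting limit" if txn["amount"] >= 10_000 else None


def assess(txn: Txn, history: List[Txn], stages: List[Stage]) -> List[Tuple[str, Optional[str]]]:
    """Run every stage in order and keep the full trail, not just the final verdict."""
    return [(stage.__name__, stage(txn, history)) for stage in stages]


history = [{"amount": 100.0 + i, "time": i * 86400.0} for i in range(10)]
txn = {"amount": 12_000.0, "time": 10 * 86400.0}
for name, reason in assess(txn, history, [anomaly_stage, pattern_stage, rule_stage]):
    print(name, "->", reason or "ok")
```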

Beyond fraud detection, CoTM helps in portfolio risk management by continuously validating assumptions about market volatility, correlations, and asset behaviors at each modeling stage, leading to more resilient investment strategies.

Optimizing Industrial Automation and Robotics

In manufacturing and robotics, precise sequential operations are crucial. Chain of thought monitoring helps robots:

Validate each action before execution, such as confirming that a gripping mechanism has securely grasped an object before moving it.

Detect anomalies in sensor data interpretation, for instance, identifying unexpected resistance or misalignment during assembly.

Adapt workflows dynamically based on feedback loops, allowing robots to adjust speed or path in response to real-time conditions.
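Validating each action before execution is often expressed as precondition and postcondition checks around every step. The sketch below uses a simulated sensor dictionary. The action names and sensor keys are illustrative assumptions, as is the choice to halt the sequence on a failed check and flag it for human inspection.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]


@dataclass
class Action:
    name: str
    precondition: Callable[[Sensors], bool]
    effect: Callable[[Sensors], None]  # simulated actuation that updates sensor state
    postcondition: Callable[[Sensors], bool]


def run_sequence(actions: List[Action], sensors: Sensors) -> Optional[str]:
    """Execute actions in order; return a failure message, or None if every step passes."""
    for action in actions:
        if not action.precondition(sensors):
            return f"{action.name}: precondition failed, halting before execution"
        action.effect(sensors)
        if not action.postcondition(sensors):
            return f"{action.name}: postcondition failed, flag for inspection"
    return None


def close_gripper(s: Sensors) -> None:
    s["grip_force"] = 2.0  # simulated weak grip (hypothetical sensor reading)


sequence = [
    Action("grasp", lambda s: s["object_present"] == 1.0, close_gripper,
           lambda s: s["grip_force"] >= 5.0),
    Action("move", lambda s: s["grip_force"] >= 5.0, lambda s: None, lambda s: True),
]
print(run_sequence(sequence, {"object_present": 1.0, "grip_force": 0.0}))
```

Because the grasp's postcondition fails, the move is never attempted. This is the "confirm the grip before moving" check from the first item.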

Siemens’ digital factory solutions reportedly apply CoTM concepts to improve the accuracy of robot collaboration on assembly lines, reducing downtime (Siemens Industry). In an automotive assembly context, CoTM enables robots to monitor each welding or fastening step, ensuring quality control and automatically flagging defects for human inspection.

Furthermore, CoTM facilitates human-robot collaboration by providing transparent reasoning trails that operators can understand and trust, enabling smoother integration of automation into complex manufacturing workflows.

Additional Real-World Application Scenarios

Autonomous Vehicles

Self-driving cars rely on complex multi-step decision-making processes involving perception, prediction, and planning. CoTM can monitor the reasoning chain from sensor data interpretation to action execution, detecting inconsistencies such as misclassified objects or conflicting route plans. This enhances safety by enabling the vehicle to pause or seek human intervention when reasoning confidence is low.

Legal Tech and Contract Analysis

Legal document review involves multi-layered logical reasoning to interpret clauses, detect contradictions, and assess compliance. CoTM systems can sequentially analyze contracts, flag ambiguous language, and verify that all legal conditions are met, reducing risks of oversight and speeding up due diligence.

Customer Service Chatbots

In conversational AI, maintaining coherent multi-turn dialogues requires tracking reasoning across exchanges. CoTM helps chatbots monitor their responses for consistency, preventing contradictory statements and improving user satisfaction. For example, if a chatbot initially offers a refund policy and later contradicts it, CoTM flags the inconsistency for correction.
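Cross-turn consistency can be approximated by recording the factual commitments the bot makes, here as simple key/value pairs. Any later turn that contradicts a recorded commitment is flagged. Extracting commitments from free text is left out. The `CommitmentTracker` class and its keys are hypothetical.

```python
from typing import Dict, Optional


class CommitmentTracker:
    """Hypothetical helper: remembers what the assistant has asserted and flags contradictions."""

    def __init__(self) -> None:
        self._facts: Dict[str, str] = {}

    def check_and_record(self, key: str, value: str, turn: int) -> Optional[str]:
        previous = self._facts.get(key)
        if previous is not None and previous != value:
            # Keep the original commitment; the new reply is what needs correcting.
            return f"turn {turn}: '{key}' now '{value}', earlier stated '{previous}'"
        self._facts[key] = value
        return None


tracker = CommitmentTracker()
tracker.check_and_record("refund_window_days", "30", turn=1)
print(tracker.check_and_record("refund_window_days", "14", turn=4))
```

Not overwriting the earlier value is a deliberate choice. The conflicting reply gets flagged for correction instead of silently becoming the new policy.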

Benefits Beyond Accuracy: Additional Advantages of Chain of Thought Monitoring

Improved Explainability and Trustworthiness

One major criticism of complex AI systems is their "black box" nature. Chain of thought monitoring naturally provides a transparent reasoning trail that stakeholders can audit and understand.

This transparency fosters:

Greater regulatory compliance: Many industries require explainability for AI decisions, for example under GDPR provisions on automated decision-making in Europe or in FDA review of AI-enabled medical devices. CoTM-generated reasoning chains can help meet these requirements by documenting decision pathways.

Increased user confidence: When users can see how a conclusion was reached, they are more likely to trust and adopt AI solutions.

Easier debugging and continuous improvement cycles: Developers can pinpoint exactly where reasoning failed, accelerating troubleshooting and model refinement.

For example, in credit scoring, CoTM enables banks to explain why a loan was approved or denied by detailing the intermediate factors considered, helping satisfy both customers and regulators.
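A reasoning trail for a credit decision can be as simple as recording each factor's contribution as the score is built. The explanation then reports the factors that pulled the score down most. The additive scoring scheme, weights, and cutoff below are hypothetical illustrations, not a real scoring model.

```python
from typing import Dict, List, Tuple

# Hypothetical linear weights per (already normalized) factor, plus base score and cutoff.
WEIGHTS: Dict[str, float] = {"payment_history": 40.0, "utilization": -30.0, "recent_inquiries": -5.0}
BASE_SCORE = 50.0
CUTOFF = 60.0


def score_with_trail(applicant: Dict[str, float]) -> Tuple[bool, float, List[Tuple[str, float]]]:
    """Return the decision, the score, and each factor's contribution in evaluation order."""
    trail = [(name, weight * applicant[name]) for name, weight in WEIGHTS.items()]
    score = BASE_SCORE + sum(contribution for _, contribution in trail)
    return score >= CUTOFF, score, trail


def explain(applicant: Dict[str, float], top_n: int = 2) -> str:
    """Summarize the decision and the factors that lowered the score the most."""
    approved, score, trail = score_with_trail(applicant)
    negatives = sorted((c, n) for n, c in trail if c < 0)[:top_n]
    reasons = ", ".join(f"{name} ({contribution:+.1f})" for contribution, name in negatives)
    verdict = "approved" if approved else "denied"
    return f"{verdict} with score {score:.1f}; main negative factors: {reasons or 'none'}"


print(explain({"payment_history": 0.9, "utilization": 0.8, "recent_inquiries": 2}))
```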

Enhanced Learning and Adaptation Capabilities

With CoTM, systems can learn not just from final outcomes but from the quality of intermediate reasoning steps. This fine-grained feedback can improve training efficiency and adaptability to new domains or data distributions.

By identifying which reasoning steps consistently cause errors, developers can target model improvements more effectively. Additionally, CoTM supports transfer learning by mapping reasoning patterns across tasks, facilitating faster adaptation.
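Finding which reasoning steps consistently cause errors can start with a per-step-type error rate. The sketch assumes every logged step carries a step type and a correctness label, from an automated checker or human review. The step-type names are hypothetical.

```python
from collections import Counter
from typing import Iterable, List, Tuple


def step_error_rates(records: Iterable[Tuple[str, bool]]) -> List[Tuple[str, float, int]]:
    """records are (step_type, was_correct); returns (step_type, error_rate, count), worst first."""
    totals: Counter = Counter()
    errors: Counter = Counter()
    for step_type, correct in records:
        totals[step_type] += 1
        if not correct:
            errors[step_type] += 1
    rates = [(s, errors[s] / totals[s], totals[s]) for s in totals]
    return sorted(rates, key=lambda r: r[1], reverse=True)


# Hypothetical review log of labelled steps.
log = [
    ("parse_premise", True), ("unit_conversion", False), ("unit_conversion", False),
    ("unit_conversion", True), ("final_answer", True), ("parse_premise", False),
]
for step_type, rate, n in step_error_rates(log):
    print(f"{step_type}: {rate:.0%} of {n} steps wrong")
```

Keeping the count next to the rate matters. A 100% error rate on a single step is weaker evidence than 60% across hundreds.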

For instance, an AI trained for medical diagnosis can leverage CoTM insights to adapt its reasoning when applied to veterinary medicine, recognizing which steps generalize and which require retraining.

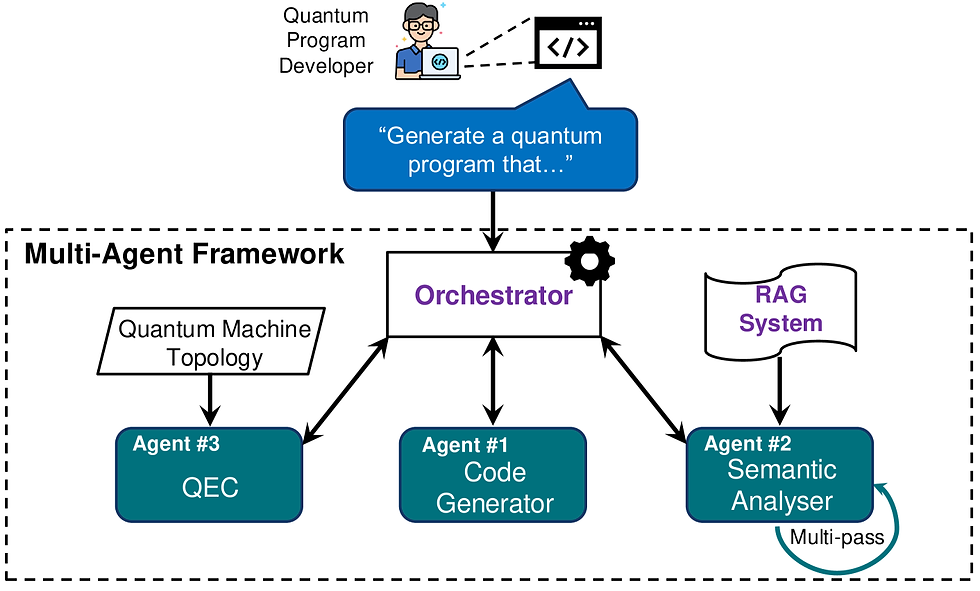

Scalability in Multi-Agent Systems

In environments where multiple intelligent agents interact (e.g., autonomous vehicle fleets, distributed sensor networks, or collaborative robots), chain of thought monitoring allows for synchronized reasoning validation across agents, reducing systemic errors caused by miscommunication.

By sharing reasoning chains and monitoring consistency collectively, agents can detect conflicting conclusions or redundant efforts, enabling coordinated problem-solving and resource optimization.

For example, in drone swarm operations for search and rescue, CoTM ensures that each drone’s reasoning about terrain and target location is consistent with others, improving mission success rates.
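For the drone example, one simple consistency check compares each agent's target-location estimate with the group consensus. Agents that disagree by more than a tolerance are flagged. Here the consensus is the coordinate-wise median, chosen because it is less sensitive than the mean to a single faulty estimate. The agent IDs, coordinates, and 50 m tolerance are illustrative assumptions.

```python
import math
from statistics import median
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (east, north) in meters, hypothetical local frame


def inconsistent_agents(estimates: Dict[str, Point], tolerance_m: float = 50.0) -> List[str]:
    """Return IDs of agents whose estimate lies farther than tolerance_m from the consensus."""
    consensus = (
        median(p[0] for p in estimates.values()),
        median(p[1] for p in estimates.values()),
    )
    return sorted(
        agent for agent, p in estimates.items()
        if math.dist(p, consensus) > tolerance_m
    )


estimates = {"drone_1": (100.0, 200.0), "drone_2": (110.0, 195.0), "drone_3": (400.0, 50.0)}
print(inconsistent_agents(estimates))
```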

Implementing Chain of Thought Monitoring: Practical Strategies and Tools

Step 1: Map Out the Reasoning Process

Before monitoring can begin, clearly define the sequence of logical steps within your system. For AI models, this might involve breaking down problem-solving into sub-tasks or intermediate outputs.

This mapping requires collaboration between domain experts and developers to capture relevant reasoning stages accurately. For example, in medical AI, steps might include symptom analysis, differential diagnosis, treatment recommendation, and risk evaluation.

Creating detailed flowcharts or decision trees can aid in visualizing and standardizing the reasoning process, serving as a blueprint for monitoring implementation.
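The medical example can be written down as a small dependency graph. This doubles as a machine-readable blueprint: a monitor can check that steps run in a valid order and that none is skipped. This sketch uses Python's standard `graphlib` module (Python 3.9+). The step names come from the example above, while the dependency edges between them are an assumption.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set

# Each step maps to the steps it depends on (edges assumed for illustration).
REASONING_MAP: Dict[str, Set[str]] = {
    "symptom_analysis": set(),
    "differential_diagnosis": {"symptom_analysis"},
    "treatment_recommendation": {"differential_diagnosis"},
    "risk_evaluation": {"differential_diagnosis", "treatment_recommendation"},
}


def order_violations(executed: List[str], graph: Dict[str, Set[str]] = REASONING_MAP) -> List[str]:
    """Report steps that ran before their prerequisites, plus any mapped step that never ran."""
    problems: List[str] = []
    seen: Set[str] = set()
    for step in executed:
        missing = graph.get(step, set()) - seen
        if missing:
            problems.append(f"{step} ran before {sorted(missing)}")
        seen.add(step)
    problems += [f"{step} never ran" for step in graph if step not in seen]
    return problems


print(list(TopologicalSorter(REASONING_MAP).static_order()))
print(order_violations(["symptom_analysis", "treatment_recommendation", "risk_evaluation"]))
```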

Step 2: Select Appropriate Monitoring Techniques

Choose methods aligned with your system’s complexity and domain needs. For instance:

Confidence scoring is well-suited for probabilistic models like Bayesian networks or neural classifiers.

Symbolic checks are essential in rule-governed environments such as legal compliance or safety-critical systems.

Ensemble approaches combining multiple techniques often yield the best results by balancing flexibility and rigor.

Consider computational constraints and latency requirements when selecting techniques to ensure real-time feasibility.

Step 3: Integrate Feedback Loops

Design mechanisms to feed detected inconsistencies back into the system for correction. This could be automated retraining triggers or human-in-the-loop interventions.

For example, if a low-confidence step is detected, the system might:

Request additional data or clarification.

Recompute reasoning using alternative methods.

Alert a human supervisor for manual review.

Establishing clear protocols for handling flagged issues ensures that CoTM enhances system reliability without causing unnecessary interruptions.
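The three responses listed above can be arranged as an escalation ladder:

1. If required inputs are missing, request them.
2. Otherwise, recompute the step with each alternative method.
3. If no method clears the threshold, hand the step to a human.

The method functions, threshold, and action labels below are hypothetical. The ordering of the ladder is a design choice each system would tune.

```python
from typing import Callable, List, Optional, Tuple

# A method returns (answer, confidence) for the step it is asked to recompute.
Method = Callable[[dict], Tuple[Optional[str], float]]


def handle_step(
    inputs: dict, required: List[str], methods: List[Method], threshold: float = 0.8
) -> Tuple[str, object]:
    """Return (action, detail): 'request_data', 'accept', or 'human_review'."""
    missing = [k for k in required if inputs.get(k) is None]
    if missing:
        return "request_data", missing
    best_answer, best_conf = None, -1.0
    for method in methods:  # recompute with each alternative method in turn
        answer, conf = method(inputs)
        if conf >= threshold:
            return "accept", answer
        if conf > best_conf:
            best_answer, best_conf = answer, conf
    return "human_review", best_answer  # nothing cleared the bar; pass the best guess along


# Hypothetical methods of differing reliability.
def fast_heuristic(x: dict) -> Tuple[Optional[str], float]:
    return "approve", 0.55


def slower_model(x: dict) -> Tuple[Optional[str], float]:
    return "approve", 0.9


print(handle_step({"income": 50_000}, ["income"], [fast_heuristic, slower_model]))
print(handle_step({"income": None}, ["income"], [fast_heuristic, slower_model]))
print(handle_step({"income": 50_000}, ["income"], [fast_heuristic]))
```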

Step 4: Leverage Existing Tools and Frameworks

Several libraries, platforms, and services, some open-source and some commercial, support chain of thought modeling and monitoring:

OpenAI’s GPT with chain-of-thought prompting (OpenAI) enables generation of intermediate reasoning steps, which can be monitored with custom modules.

AllenNLP interpretability tools (Allen Institute for AI) provide utilities for analyzing and visualizing model reasoning paths.

Microsoft InterpretML for model explanation (Microsoft InterpretML) supports generating interpretable explanations that can be adapted for CoTM purposes.

Additionally, workflow orchestration platforms like Apache Airflow or Kubeflow can help implement monitoring pipelines with feedback loops at scale.

By combining these resources with domain-specific adaptations, organizations can accelerate deployment without building everything from scratch.

Challenges and Future Directions in Chain of Thought Monitoring

Common Challenges

Despite its promise, CoTM faces hurdles including:

Computational overhead: Monitoring every step adds processing time and resource consumption, which can be prohibitive in latency-sensitive applications like real-time trading or autonomous driving.

Defining “correct” reasoning: Subjectivity in what constitutes valid intermediate steps varies across domains and tasks, complicating the design of universal validation criteria.

Scaling to real-time systems: Balancing accuracy with latency constraints requires efficient algorithms and hardware acceleration.

Handling ambiguous or incomplete data: Reasoning chains may be uncertain or partial due to noisy inputs, challenging monitoring systems to distinguish between acceptable uncertainty and actual errors.

Integrating human expertise: Designing interfaces and workflows for effective human-in-the-loop interventions remains an open area.

Addressing these challenges requires continued research into efficient algorithms, domain-specific adaptations, and user-centered design.

Emerging Trends Shaping the Future

The future of chain of thought monitoring looks promising with developments such as:

Hybrid AI models combining neural networks with symbolic logic to achieve both flexibility and rigor in reasoning and monitoring.

Explainable AI (XAI) regulations mandating transparency, which will drive adoption of CoTM to meet compliance.

Automated reasoning agents capable of self-correction mid-process, leveraging reinforcement learning and meta-learning techniques.

Neuro-symbolic architectures that integrate deep learning with knowledge graphs and formal logic to enhance reasoning traceability.

Edge computing and hardware acceleration enabling real-time CoTM in resource-constrained environments like IoT devices and autonomous robots.

Standardization efforts to define common frameworks and metrics for CoTM effectiveness and interoperability.

Investing in these areas will unlock even greater accuracy gains across industries, making CoTM an indispensable component of future intelligent systems.

FAQ: Your Top Questions About Chain of Thought Monitoring Answered

Q1: Is chain of thought monitoring only applicable to AI systems?

No. While it is highly relevant to AI and ML, CoTM principles apply broadly to any complex system involving sequential decision-making or multi-step processes—including industrial automation, finance, healthcare workflows, and more. For example, manufacturing assembly lines can benefit from CoTM by monitoring sequential operations for quality assurance.

Q2: How difficult is it to implement chain of thought monitoring in existing systems?

Implementation complexity varies by system architecture. Systems designed with modular or interpretable components are easier to adapt. Leveraging existing tools and frameworks can reduce barriers significantly. However, legacy systems with tightly coupled components or opaque logic may require substantial redesign to incorporate effective CoTM.

Q3: Does chain of thought monitoring guarantee 100% accuracy?

No system can guarantee perfect accuracy. However, CoTM can substantially reduce errors by catching inconsistencies early and improving interpretability, which leads to more reliable outcomes overall. It acts as a risk mitigation layer rather than an infallible solution.

Q4: Can chain of thought monitoring improve explainability for end-users?

Absolutely. By exposing intermediate reasoning steps, CoTM helps stakeholders understand how conclusions were reached, boosting trust and enabling informed oversight. This is particularly important in regulated industries where auditability is mandatory.

Q5: What industries stand to benefit most from chain of thought monitoring?

Industries with complex, multi-step decision processes and high stakes benefit significantly: healthcare, finance, legal, manufacturing, autonomous systems, and customer service are prime examples.

Conclusion: Harnessing Chain of Thought Monitoring for Superior Accuracy

The surprising truth about chain of thought monitoring is that it transforms how complex systems reason, adapt, and perform. By embedding continuous oversight into the sequential reasoning process, CoTM can substantially enhance accuracy, transparency, and robustness across diverse applications, from cutting-edge AI to industrial robotics and financial modeling.

As systems grow more complex and stakes rise higher, ignoring chain of thought monitoring risks costly errors and opacity. Conversely, embracing CoTM equips organizations with a powerful lever to unlock smarter decision-making, regulatory compliance, and user trust.

To stay ahead in an increasingly complex world, building systems that think clearly—and know when their thinking is flawed—is no longer optional; it’s essential.

For further exploration on related topics, consider reading about Explainable AI Techniques or Multi-Step Reasoning Models to deepen your understanding of advanced AI interpretability methods.