AI Scaling Limits Are Hitting a Wall: Why More GPUs Won't Fix AGI

- Ethan Carter

- Dec 15, 2025

- 7 min read

The narrative around Artificial Intelligence has been dominated by a singular belief: if we just keep adding more compute and more data, a god-like superintelligence is inevitable. For years, Silicon Valley has operated on this assumption. However, as we pass the midpoint of the decade, the math is starting to break. We are seeing clear signs that AI scaling limits are not just theoretical hurdles for the distant future; they are concrete barriers we are hitting right now.

From frustration in developer communities to high-level hardware analysis by experts like Tim Dettmers, the consensus is shifting. The era of exponential "free" gains is ending. Understanding why this is happening requires looking past the marketing brochures and examining the physical and practical realities of current technology.

Real-World User Experiences: Navigating AI Scaling Limits Now

Before dissecting the hardware physics, we have to look at how these limitations manifest for the people actually paying for and using these tools. The industry promised that Large Language Models (LLMs) would evolve into autonomous agents capable of complex reasoning. The reality on the ground tells a different story.

Practical Solutions When AI Scaling Limits Undermine Reliability

Professionals attempting to integrate AI into their workflows are encountering a ceiling. The primary issue isn't that the models are "stupid," but that they lack a fundamental connection to truth. This is a direct symptom of AI scaling limits—adding more parameters hasn't solved the hallucination problem; it has often just made the models more confident in their errors.

The Developer Experience: Software engineers report that AI coding assistants frequently invent libraries that do not exist. This turns a time-saving tool into a debugging nightmare. When a model hallucinates a dependency, the developer spends more time chasing down a phantom solution than they would have spent writing the code from scratch.

The "Search" Fallacy: Users attempting to use LLMs as search engines are finding that accuracy rates are unacceptably low. Reports suggest that for factual queries, hallucination rates can hover between 30% and 50% depending on the model.

Actionable Advice for Current Users: If you are deploying these tools today, you must treat them as stochastic text generators, not logic engines.

Verify Everything: Never copy-paste code or citations without manual verification. The "trust but verify" approach is insufficient; assume the output is flawed until proven otherwise.

Narrow the Scope: Use LLMs for transformation tasks (rewriting, summarizing, formatting) rather than information retrieval or logic invention. The models excel at manipulating language but struggle with anchoring that language to reality.

Abandon Anthropomorphism: Stop treating the AI as a "who." It is a tool. Expecting it to "understand" or "reason" leads to poor prompting and disappointment.
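One small piece of the "Verify Everything" advice can be automated. The sketch below is an illustration rather than a complete safeguard: it scans a Python snippet for imports and reports any top-level package that cannot be found in the current environment. The function name `missing_imports` and the sample snippet are hypothetical, and the check assumes the generated code is Python and that the relevant packages would already be installed locally.

```python
import ast
import importlib.util


def missing_imports(source: str) -> list[str]:
    """Return top-level modules imported by `source` that are not installed here."""
    tree = ast.parse(source)
    names = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                names.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom):
            # Skip relative imports (level > 0); they refer to local files.
            if node.level == 0 and node.module:
                names.add(node.module.split(".")[0])

    missing = []
    for name in sorted(names):
        try:
            found = importlib.util.find_spec(name) is not None
        except (ImportError, ValueError):
            found = False
        if not found:
            missing.append(name)
    return missing


# Hypothetical AI-generated snippet: one real module, one invented one.
generated = "import json\nimport totally_made_up_pkg_xyz\n"
print(missing_imports(generated))
```

A clean result only means the packages exist locally. It does not confirm that the functions the model calls inside them are real, so manual review is still required.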

Why Enterprise Agents Fail Against AI Scaling Limits

The corporate world is currently obsessed with "AI Agents"—software intended to perform multi-step jobs autonomously. However, feedback from early adopters suggests this is largely vaporware. Employees are being forced to sit through hours of training on how to manage these agents, only to find the tools require such precise, engineering-level instructions that they are useless for non-technical staff.

This failure of "agency" is a byproduct of AI scaling limits. We haven't cracked the code on reasoning; we've just built better autocomplete. Errors compound: when a task requires ten sequential steps and the model has a 5% error rate per step, the agent completes the whole chain only about 60% of the time (0.95^10 ≈ 0.60, assuming independent errors), and a 50-step workflow succeeds less than 8% of the time. Companies investing in replacing white-collar workers (accountants, middle managers) with these tools are likely setting money on fire. The tech simply isn't there, and scaling existing architectures won't put it there.
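To put numbers on how per-step errors compound across a multi-step task, here is a minimal sketch. It assumes every step fails independently with the same probability, which is a simplification (real agent errors are often correlated), and the function name is illustrative.

```python
def task_success_probability(per_step_error: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent errors."""
    return (1.0 - per_step_error) ** steps


# 5% per-step error rate, as in the example above, over longer and longer chains.
for steps in (1, 10, 50, 100):
    p = task_success_probability(0.05, steps)
    print(f"{steps:>3} steps: success {p:.1%}, failure {1 - p:.1%}")
```

Under these assumptions a ten-step task fails roughly four times in ten, and reliability collapses as the chain grows, which is why long autonomous workflows are so fragile.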

The Hardware Reality Behind AI Scaling Limits

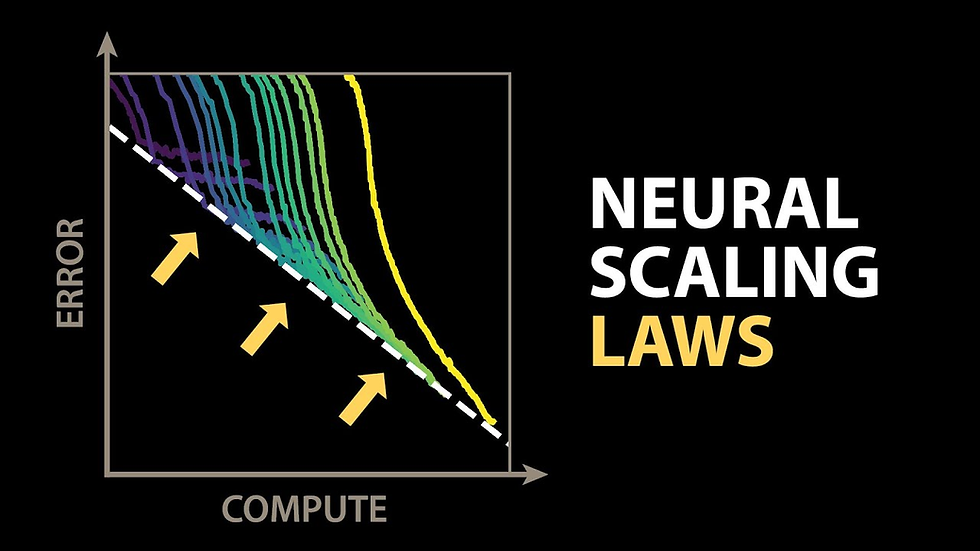

The skepticism felt by users is backed by hard data coming from the hardware sector. The belief that "bigger is better" relies on Moore's Law and efficient scaling laws remaining constant. According to Tim Dettmers, a researcher at the Allen Institute for AI and professor at CMU, those days are numbered.

The Data Proves AI Scaling Limits Are Physical

Dettmers predicts that the current scaling paradigm—making models smarter simply by making them bigger—has about one to two years of life left. We are approaching a point where AI scaling limits are dictated by the physical constraints of the chips themselves.

The hardware efficiency peak actually occurred back in 2018. Since then, manufacturers haven't been getting "smarter" performance gains; they've been cheating the system by lowering precision.

Nvidia Ampere: Used BF16 (16-bit) precision.

Nvidia Hopper: Moved to FP8 (8-bit) precision.

Nvidia Blackwell: Is pushing FP4 (4-bit) precision.

We are reaching the bottom of the barrel for quantization. You cannot reduce data precision forever without destroying the model's ability to learn. Once we hit the floor of FP4, there are no more "easy" multipliers to be found. The hardware industry is running out of tricks to mask the slowing of Moore's Law.
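Why fewer bits eventually hurt can be illustrated with a toy quantizer. This is a deliberately simplified sketch: it rounds values in [-1, 1] onto a uniform grid of 2^bits levels, whereas real BF16, FP8, and FP4 are floating-point formats with separate exponent and mantissa bits. The function names and the value range are assumptions made for illustration only.

```python
def quantize(x: float, bits: int, lo: float = -1.0, hi: float = 1.0) -> float:
    """Round x to the nearest of 2**bits evenly spaced levels in [lo, hi]."""
    levels = 2 ** bits
    step = (hi - lo) / (levels - 1)
    clamped = min(max(x, lo), hi)
    return lo + round((clamped - lo) / step) * step


def max_error(bits: int, samples: int = 10001) -> float:
    """Worst rounding error observed over evenly spaced samples in [-1, 1]."""
    worst = 0.0
    for i in range(samples):
        x = -1.0 + 2.0 * i / (samples - 1)
        worst = max(worst, abs(x - quantize(x, bits)))
    return worst


for bits in (16, 8, 4):
    print(f"{bits:>2} bits: {2 ** bits:>5} levels, max error {max_error(bits):.6f}")
```

Each halving of the bit width cuts the number of representable values dramatically (65,536 to 256 to 16 in this toy setup), so the rounding error grows fast while the remaining room for further halving disappears.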

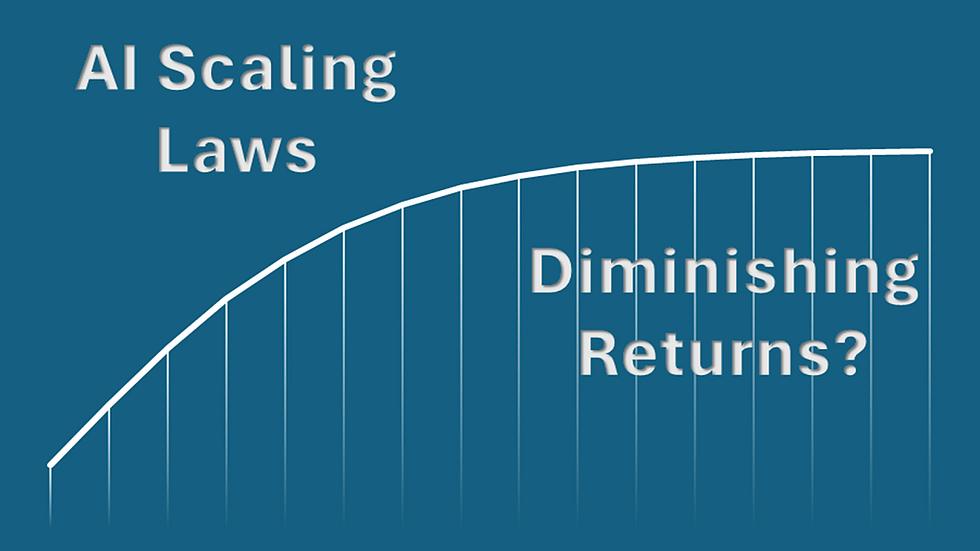

Energy Consumption vs. Performance Gains

The most damning evidence of AI scaling limits is the energy cost. We are currently in a phase of diminishing returns that makes the economics of AGI (Artificial General Intelligence) look disastrous.

Compare the last few generations of GPUs:

Ampere to Hopper: Performance increased by 3x, but power consumption rose by 1.7x.

Hopper to Blackwell: Performance increased by 2.5x, but power consumption rose by another 1.7x and the chip area doubled.

The trend is one of diminishing returns. By the figures above, performance per watt improved about 1.8x from Ampere to Hopper (3 / 1.7) but only about 1.5x from Hopper to Blackwell (2.5 / 1.7), and the latter also needed twice the chip area. Dettmers notes that rack-level optimizations, like Nvidia’s GB200 NVL72 which connects 72 GPUs, provide a temporary boost. However, this is a one-time architectural shift. By 2026 or 2027, once these cluster-level efficiencies are maximized, we will hit a hard wall. The physics of electricity and heat transfer will make further scaling economically unjustifiable.
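The generation-over-generation comparison can be reduced to a quick back-of-the-envelope calculation. The sketch below uses only the multipliers quoted in this article (3x and 2.5x performance, 1.7x power, 2x chip area); the variable and function names are illustrative, and the figures are not independently measured.

```python
# (label, performance multiplier, power multiplier, chip-area multiplier)
# Area for Ampere -> Hopper is not given in the article, so it is left as None.
GENERATIONS = [
    ("Ampere -> Hopper", 3.0, 1.7, None),
    ("Hopper -> Blackwell", 2.5, 1.7, 2.0),
]


def efficiency_gain(perf_multiplier: float, cost_multiplier: float) -> float:
    """Performance gained per unit of extra cost (power or area)."""
    return perf_multiplier / cost_multiplier


for label, perf, power, area in GENERATIONS:
    line = f"{label}: {efficiency_gain(perf, power):.2f}x performance per watt"
    if area is not None:
        line += f", {efficiency_gain(perf, area):.2f}x performance per unit area"
    print(line)
```

On these numbers each generation still improves efficiency, but by a smaller factor than the one before, while total power draw keeps climbing.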

The Economic Disconnect of AGI Hype

Silicon Valley operates on narratives, and the current narrative is that AGI is imminent. This belief drives billions in investment. Yet, the disconnect between this fantasy and the AI scaling limits described above is widening.

Cost-Benefit Ratios in the Face of AI Scaling Limits

The industry is trapped in a "scaling law" dogma that assumes if you feed a model enough data and compute, consciousness or superintelligence will emerge. This ignores the fact that LLMs are probabilistic systems. They predict the next token; they do not understand the nature of the universe.

The cost to train these models is skyrocketing, but their utility is not growing at the same rate. We are witnessing a classic economic bubble where the infrastructure investment (billions in GPUs) vastly outpaces the revenue generation capability of the software. If a model costs $1 billion to train but only provides a slightly better search experience than the previous version, the business model collapses.

Many voices in the tech community are now arguing that the pursuit of AGI through LLMs is a financial trap. It prioritizes a science-fiction goal over creating functional, profitable products. The expectation that AI will simply "replace" humans in complex fields like law or medicine overlooks the reliability gap. You cannot automate high-stakes professions with a system that may hallucinate on 30% or more of factual queries, regardless of how fast it runs.

A Pragmatic Future Beyond the Fantasy

Acknowledging AI scaling limits does not mean AI is useless. It means the "fantasy" phase is over, and the "utility" phase must begin. The most successful approaches moving forward will likely come from those who ignore the AGI race and focus on specific, verifiable applications.

Shifting Strategy Away from AI Scaling Limits

The obsession with a god-like digital intelligence is a uniquely Silicon Valley phenomenon. Other regions, notably China, are adopting a more pragmatic strategy. Instead of chasing a nebulous AGI, they are integrating existing AI capabilities into industrial processes, supply chains, and consumer tools where 80% accuracy is acceptable or where human oversight is already baked in.

The Frontier is Physical: To move beyond current limitations, AI needs to understand the physical world, not just the digital text of the internet.

Data Scarcity: Most of the high-quality public text on the internet has already been scraped. To get "smarter," models need data that doesn't exist online, such as physical world interaction data.

Robotics: The next breakthrough won't be a larger chatbot; it will be in robotics. Collecting data on how objects move, have weight, and interact is expensive and slow, but it is the only way to ground AI in reality.

Dettmers argues that AGI requires an agent to do everything a human can do. That includes tying shoelaces and fixing a sink. Text prediction cannot teach a machine physics. The future belongs to companies that stop trying to scale their way to magic and start engineering their way to utility.

The AI scaling limits are real. The hardware data confirms it, and user experience validates it. The companies that accept this and pivot to specialized, reliable tools will survive the coming correction. Those still waiting for the GPU fairy to deliver superintelligence will be left holding a very expensive bag of depreciating silicon.

FAQ Section

Q: What exactly are AI scaling limits?

A: AI scaling limits refer to the point where adding more computing power and data to a model no longer yields proportional improvements in intelligence. Current research suggests we are hitting this wall due to diminishing returns in hardware efficiency and the exhaustion of high-quality training data.

Q: Why does Tim Dettmers believe AI progress will stall by 2027?

A: Dettmers argues that hardware efficiency peaked around 2018, and that performance boosts since then have come largely from sacrificing precision (using fewer bits per calculation). He predicts that by 2026 or 2027, once rack-level optimizations are exhausted, physical constraints will make further scaling economically unjustifiable.

Q: Can we solve AI hallucinations by making models bigger?

A: No, simply making a model larger does not fix hallucinations. Hallucinations are a feature of the probabilistic nature of LLMs, not a bug caused by lack of size. Larger models are often just more articulate when presenting false information.

Q: Is the current investment in AI hardware sustainable?

A: Likely not. Power draw and chip area are rising with each GPU generation while the performance gained per watt is shrinking. Unless AI models can generate revenue that matches these massive infrastructure costs, the current investment levels are an economic bubble.

Q: What is the difference between AGI and current AI?

A: Current AI (like ChatGPT) is narrow intelligence focused on predicting text or recognizing patterns based on training data. AGI (Artificial General Intelligence) implies a system that can perform any intellectual task a human can do, including reasoning in novel situations and interacting with the physical world—capabilities current LLMs do not possess.